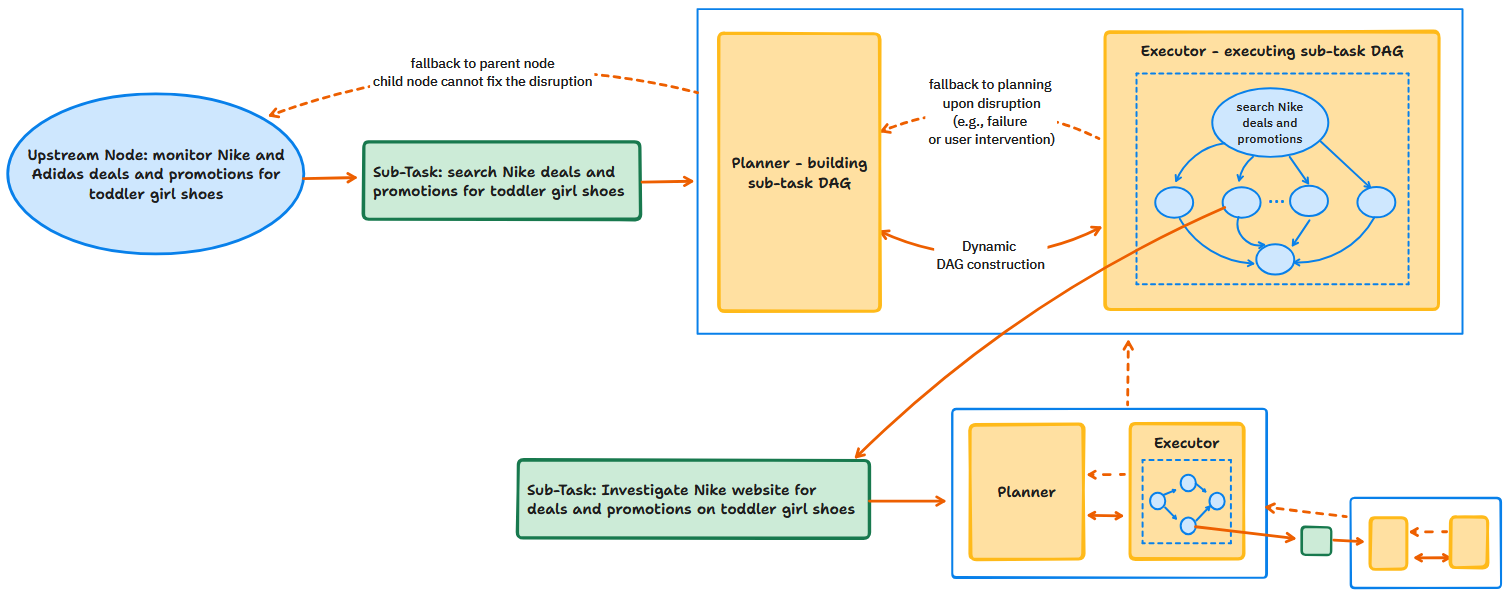

- The paper introduces a recursive two-stage planner-executor architecture within a Hierarchical Task DAG to dynamically manage complex workflows.

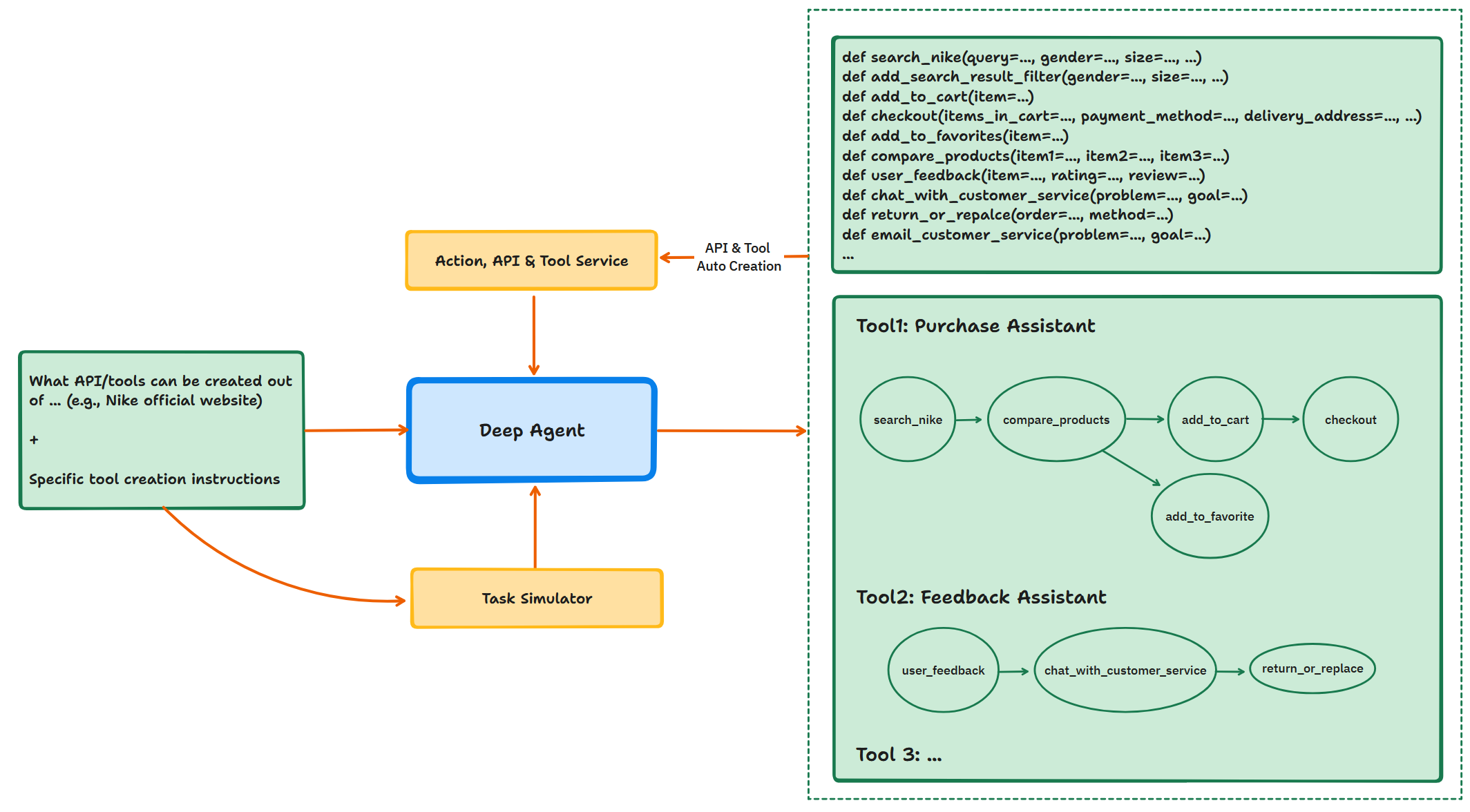

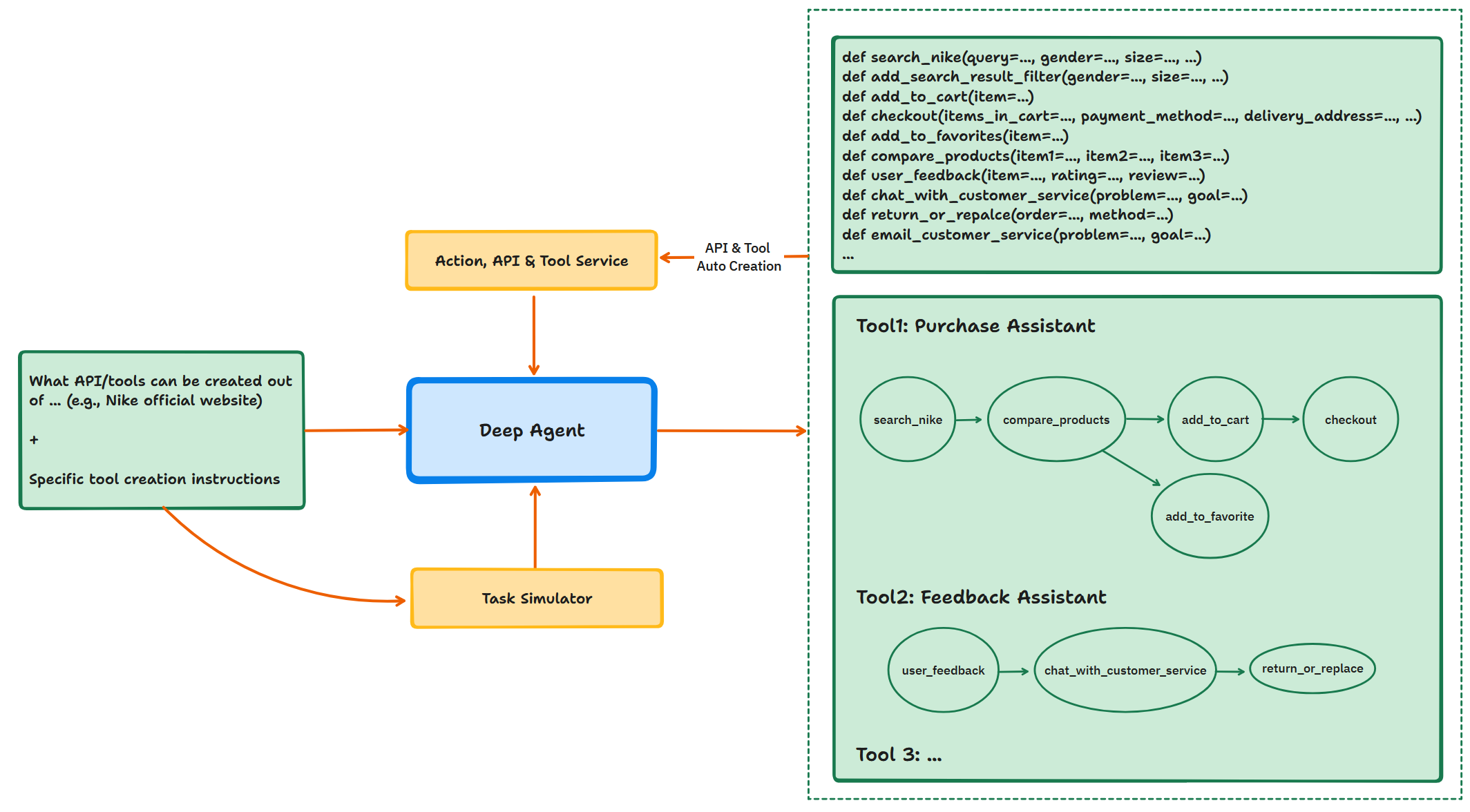

- It implements an Autonomous API and Tool Creation (AATC) system whose auto-generated, reusable components significantly improve scalability and reduce operational costs.

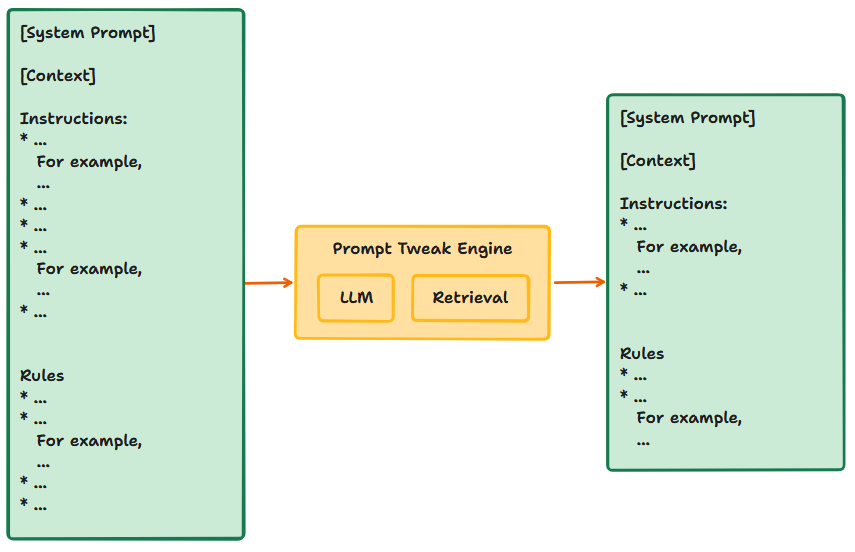

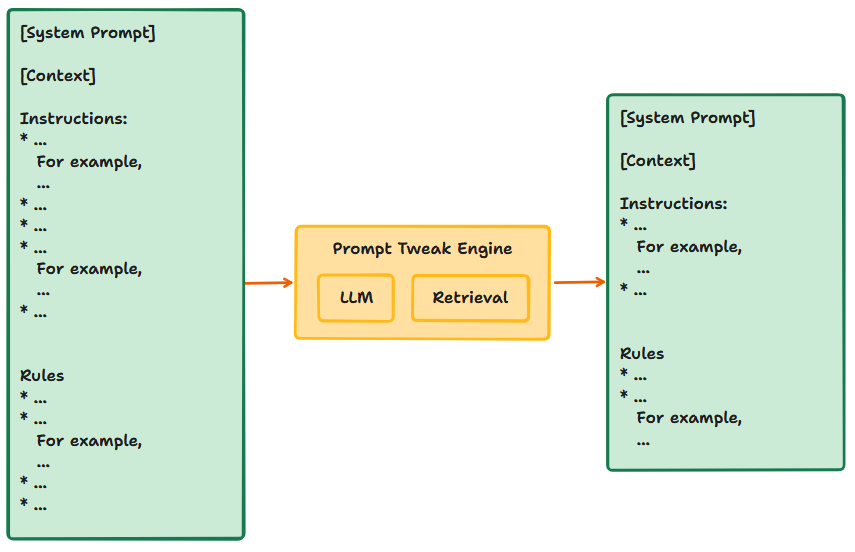

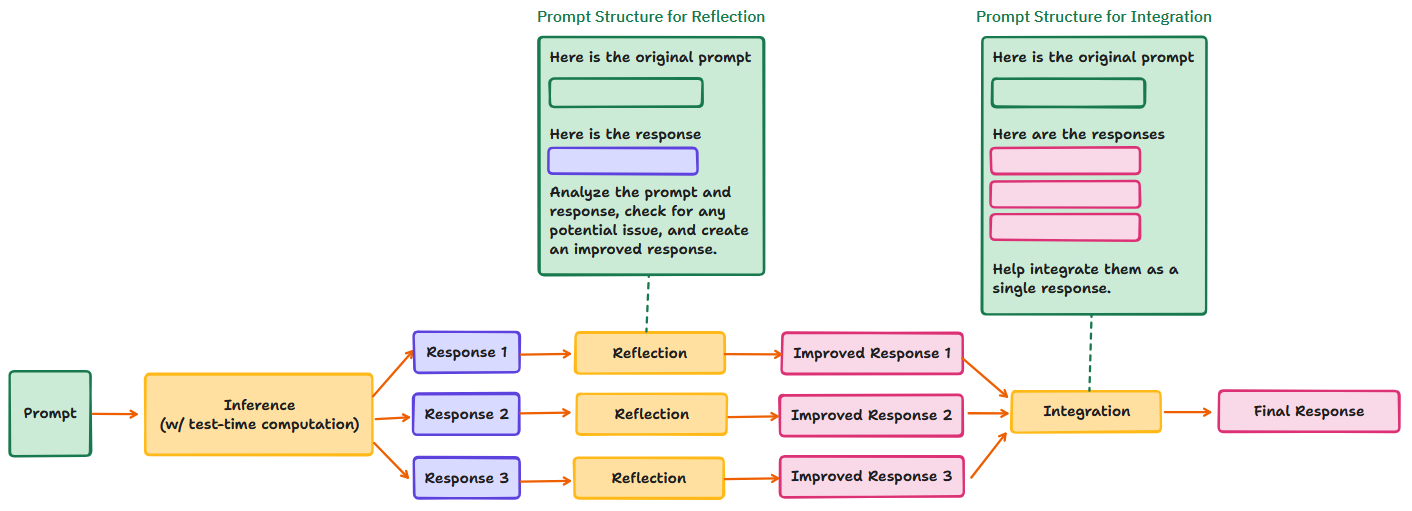

- It employs a Prompt Tweaking Engine and a test-time reflection mechanism, which optimize language model performance and improve overall inference accuracy.

Autonomous Deep Agent

Introduction

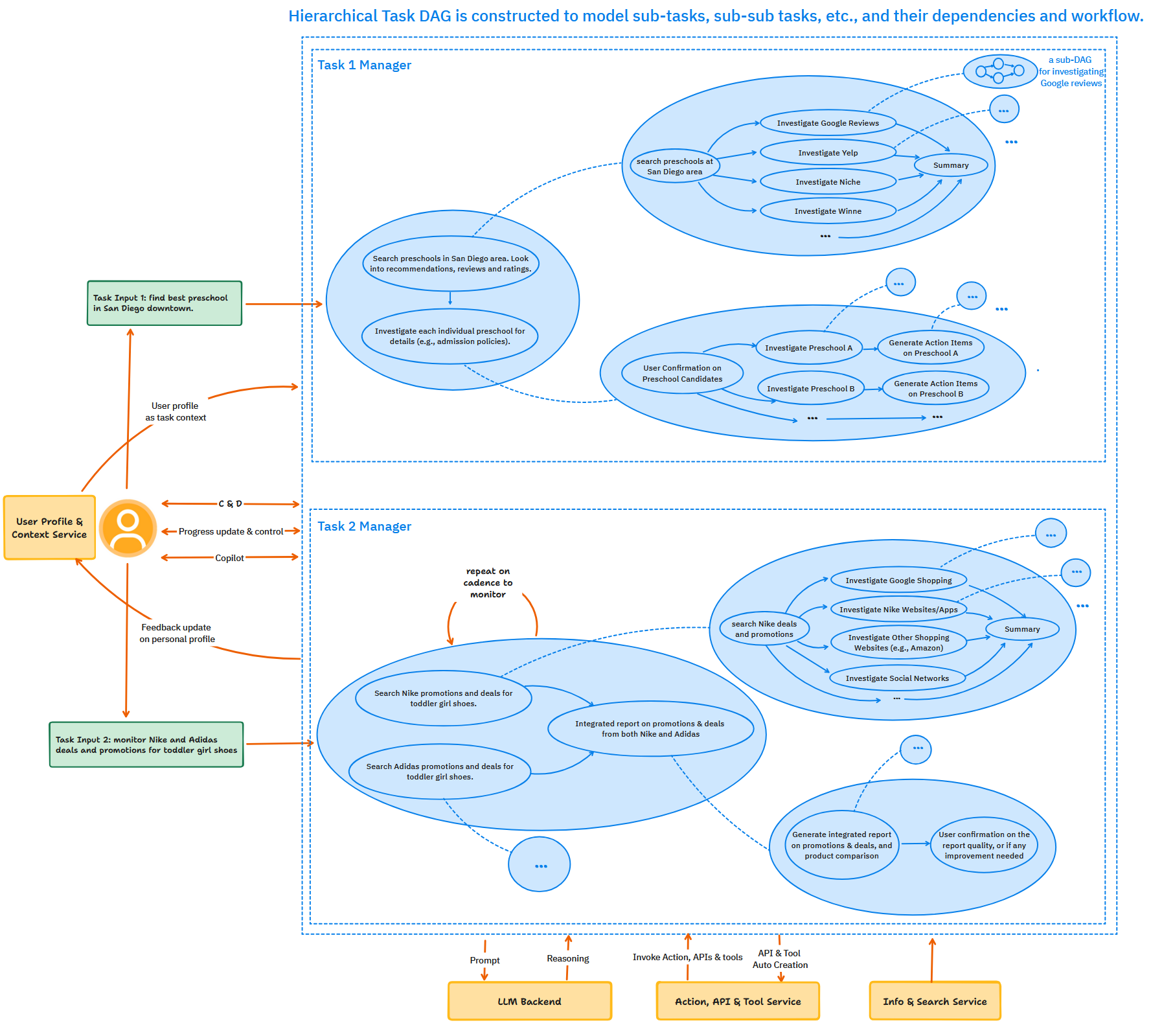

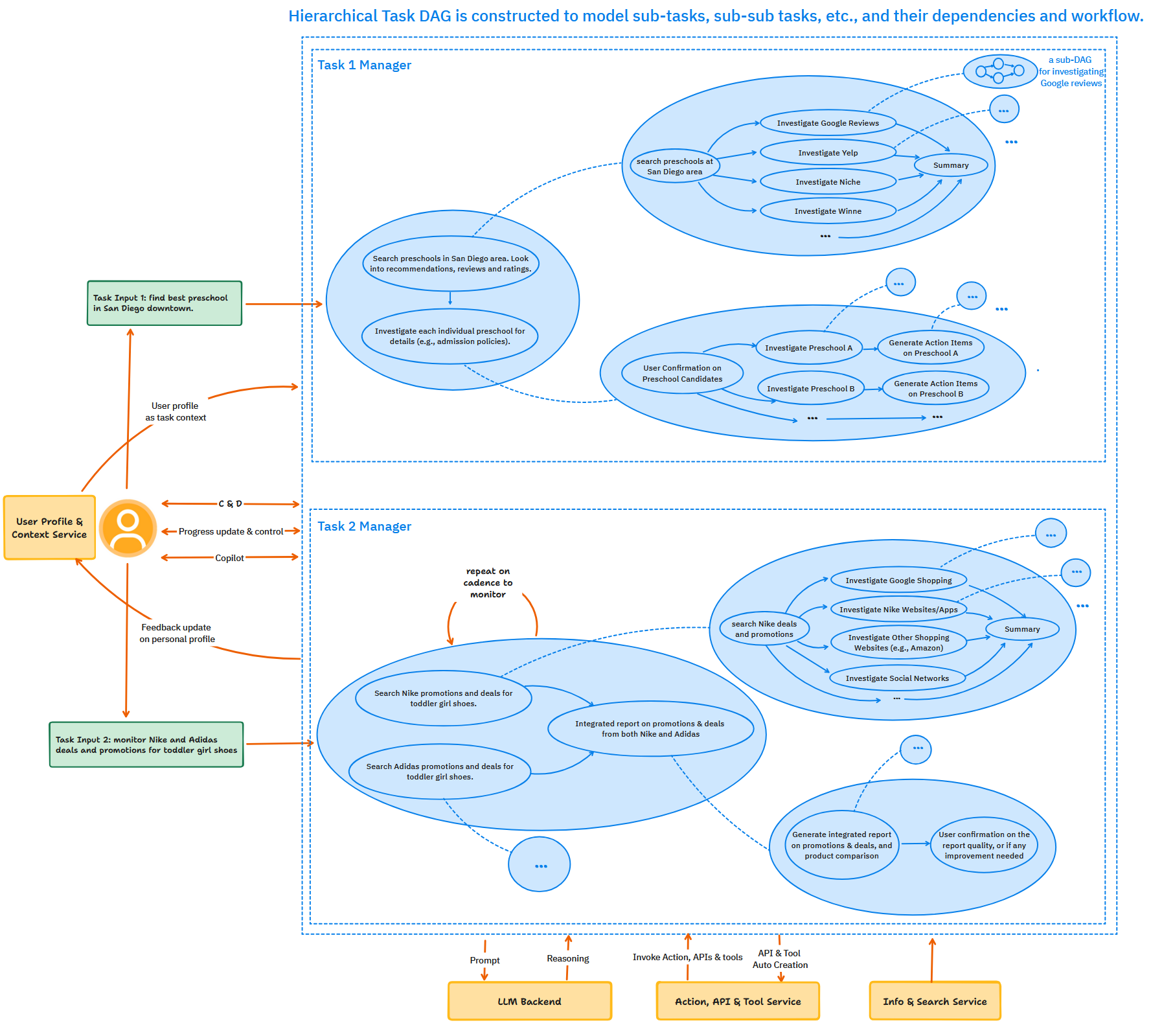

The paper "Autonomous Deep Agent" introduces Deep Agent, an autonomous AI framework designed to manage complex multi-phase tasks through a Hierarchical Task Directed Acyclic Graph (HTDAG). The paper makes three main contributions: a recursive two-stage planner-executor architecture, an Autonomous API and Tool Creation (AATC) system, and components for optimizing LLM prompts. Together, these make the agent highly adaptive and scalable. The system is built to manage dynamic dependencies and task workflows efficiently, using LLMs to adapt and execute tasks continuously as workflows evolve.

Figure 1: Deep Agent system overview illustrating hierarchical task decomposition and workflow management.

Framework Design

Hierarchical Task DAG

The Hierarchical Task DAG (HTDAG) dynamically decomposes high-level objectives into networks of interconnected sub-tasks while keeping execution coherent. Decomposition is recursive and on demand: a sub-task is broken down further only when needed, based on the available context and user input.
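
To make recursive, context-driven decomposition concrete, here is a minimal Python sketch (Python 3.9+ for `graphlib`). It is not the paper's implementation: `TaskNode`, `run`, the planner and executor callback signatures, and the toy decomposition are all hypothetical, and the standard library's `graphlib.TopologicalSorter` stands in for whatever scheduler Deep Agent actually uses. The idea it illustrates is that a node is decomposed only when it is about to run, so its plan can depend on results already stored in `context`.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Optional


@dataclass
class TaskNode:
    name: str
    deps: List[str] = field(default_factory=list)      # sibling tasks that must finish first
    subgraph: Optional[Dict[str, "TaskNode"]] = None   # filled in once the node is decomposed


# Hypothetical planner: returns child tasks (a DAG over siblings), or None if the task is atomic.
Planner = Callable[[str, dict], Optional[Dict[str, TaskNode]]]
# Hypothetical executor: performs an atomic task and returns its result.
Executor = Callable[[str, dict], object]


def run(node: TaskNode, planner: Planner, executor: Executor,
        context: dict, depth: int = 0, max_depth: int = 3) -> List[str]:
    """Execute a task, decomposing it lazily right before it runs.

    Because planning happens at execution time, later sub-tasks are planned
    with whatever earlier sub-tasks have already written into `context`.
    """
    children = planner(node.name, context) if depth < max_depth else None
    if not children:
        context[node.name] = executor(node.name, context)  # atomic task: execute directly
        return [node.name]
    node.subgraph = children
    graph = {name: set(child.deps) for name, child in children.items()}
    executed: List[str] = []
    for name in TopologicalSorter(graph).static_order():   # respect sibling dependencies
        executed.extend(run(children[name], planner, executor, context, depth + 1, max_depth))
    return executed


def toy_planner(task: str, context: dict) -> Optional[Dict[str, TaskNode]]:
    # Made-up decomposition, loosely inspired by the Nike-deals example named in Figure 2.
    if task == "monitor Nike deals":
        return {
            "find deals": TaskNode("find deals"),
            "filter deals": TaskNode("filter deals", deps=["find deals"]),
            "notify user": TaskNode("notify user", deps=["filter deals"]),
        }
    if task == "find deals":
        return {
            "search site": TaskNode("search site"),
            "parse results": TaskNode("parse results", deps=["search site"]),
        }
    return None


context: dict = {}
order = run(TaskNode("monitor Nike deals"), toy_planner, lambda t, c: f"done: {t}", context)
print(order)  # ['search site', 'parse results', 'filter deals', 'notify user']
```

Only leaf tasks reach the executor; intermediate nodes such as "find deals" exist purely as planning structure, and `max_depth` bounds how far the recursion can go.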

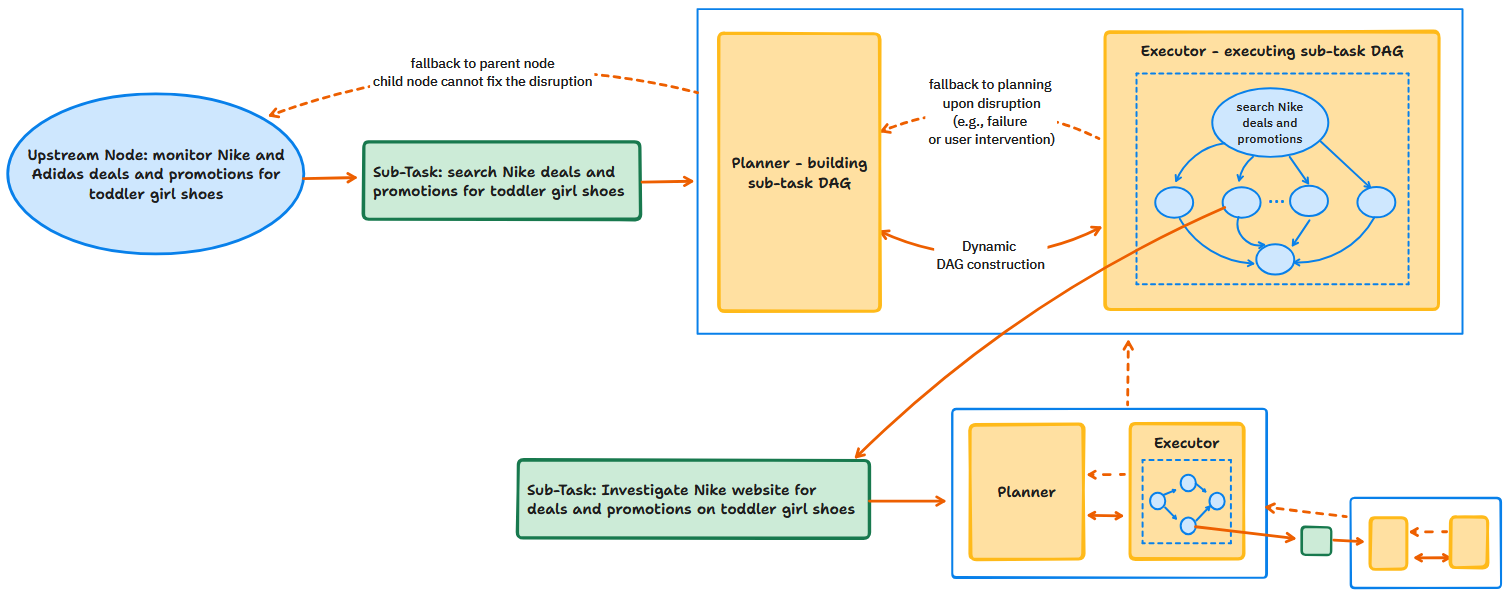

Figure 2: Two-stage planner-executor architecture illustrated through a Nike deals monitoring example.

This architecture is important for tasks whose requirements evolve: because a disruption is handled within the DAG level where it occurs, it does not propagate to the rest of the workflow. The HTDAG structure also supports runtime user intervention, letting users pause, resume, or modify tasks in real time, which is essential in dynamic environments.
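
Runtime intervention and containment can be sketched at the level of a single DAG layer. In this hypothetical `LevelRunner` (not an API from the paper), tasks run one step at a time. Between steps a user can pause, resume, or add and rewire pending tasks. Each edit is validated so that it cannot introduce a cycle, reference an unknown task, or alter a task that already ran, and nothing outside the level's own `graph` changes.

```python
from dataclasses import dataclass, field
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Optional, Set


@dataclass
class LevelRunner:
    """Hypothetical controller for ONE level of an HTDAG (illustrative only)."""
    graph: Dict[str, Set[str]]                      # task -> prerequisite tasks at this level
    done: List[str] = field(default_factory=list)
    paused: bool = False

    def pending(self) -> List[str]:
        # Remaining tasks, in an order that respects every dependency.
        order = TopologicalSorter(self.graph).static_order()
        return [t for t in order if t not in self.done]

    def step(self) -> Optional[str]:
        """Run the next ready task; return None if paused or finished."""
        if self.paused:
            return None
        todo = self.pending()
        if not todo:
            return None
        task = todo[0]          # all its prerequisites precede it, so they already ran
        self.done.append(task)  # a real executor would call a tool or an LLM here
        return task

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def modify(self, task: str, deps: Set[str]) -> None:
        """Add or rewire a pending task; reject edits that would break this level."""
        if task in self.done:
            raise ValueError(f"{task!r} already ran")
        unknown = deps - set(self.graph)
        if unknown:
            raise ValueError(f"unknown prerequisites: {sorted(unknown)}")
        candidate = {**self.graph, task: set(deps)}
        try:
            tuple(TopologicalSorter(candidate).static_order())  # raises on a cycle
        except CycleError:
            raise ValueError(f"editing {task!r} would create a cycle") from None
        self.graph = candidate


runner = LevelRunner({"search": set(), "filter": {"search"}, "notify": {"filter"}})
runner.step()                         # runs "search"
runner.pause()
paused_result = runner.step()         # None: nothing runs while paused
runner.modify("dedupe", {"search"})   # user inserts a task mid-run ...
runner.modify("filter", {"dedupe"})   # ... and rewires a pending one
runner.resume()
while runner.step():
    pass
print(runner.done)  # ['search', 'dedupe', 'filter', 'notify']
```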

Autonomous API and Tool Creation

Deep Agent's AATC component autonomously generates APIs and composite tools by analyzing target UIs, extending the agent's capabilities through auto-generated, reusable components. It operates as a closed loop: task simulations reveal API gaps, and the system generates new functionality to fill them, which significantly reduces operational costs.

Figure 3: Autonomous API and Tool Creation (AATC) framework and examples.

This generation process improves the system's scalability: once created, new APIs and composite tools remain available for repeated use, which significantly lowers inference overhead and makes the system more practical for industrial applications.
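
The AATC loop (simulate tasks, find API gaps, generate tools, reuse them) can be outlined as follows. This is a rough sketch under simplifying assumptions: simulated tasks are reduced to lists of required capability names, every tool maps a string to a string, and the `generate` callback is a placeholder for the UI analysis and code generation described above. `ToolRegistry`, `fill_gaps`, `compose`, and the capability names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Tool = Callable[[str], str]  # simplification: every tool maps a string to a string


@dataclass
class ToolRegistry:
    tools: Dict[str, Tool] = field(default_factory=dict)

    def gaps(self, traces: List[List[str]]) -> List[str]:
        """Capabilities that simulated task traces need but no registered tool provides."""
        missing: List[str] = []
        for trace in traces:
            for capability in trace:
                if capability not in self.tools and capability not in missing:
                    missing.append(capability)
        return missing

    def fill_gaps(self, traces: List[List[str]], generate: Callable[[str], Tool]) -> List[str]:
        """Create a tool for every gap; `generate` stands in for UI analysis plus codegen."""
        created = self.gaps(traces)
        for capability in created:
            self.tools[capability] = generate(capability)
        return created

    def compose(self, name: str, steps: List[str]) -> None:
        """Register a composite tool that pipes existing tools together for later reuse."""
        missing = [s for s in steps if s not in self.tools]
        if missing:
            raise KeyError(f"cannot compose {name!r}; missing tools {missing}")
        fns = [self.tools[s] for s in steps]

        def composite(x: str) -> str:
            for fn in fns:
                x = fn(x)
            return x

        self.tools[name] = composite


def fake_generate(capability: str) -> Tool:
    # Placeholder for a generated API wrapper around a UI flow.
    return lambda x: f"{capability}({x})"


registry = ToolRegistry({"search_products": lambda q: f"results({q})"})
simulated = [["search_products", "read_price"], ["search_products", "add_to_cart"]]
print(registry.fill_gaps(simulated, fake_generate))   # ['read_price', 'add_to_cart']
registry.compose("check_price", ["search_products", "read_price"])
print(registry.tools["check_price"]("nike"))          # read_price(results(nike))
```

Once a gap is filled, re-running the same simulations finds nothing missing, which is the reuse effect the paragraph describes: generation cost is paid once, not per task.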

Prompt Tweaking Engine

To address prompt complexity in generic AI agents, the Prompt Tweaking Engine (PTE) dynamically optimizes prompts by removing irrelevant instructions, improving inference accuracy and stability. PTE acts as a pre-processing step that streamlines each prompt for its specific task context, which improves LLM performance and avoids unnecessary computation.

Figure 4: The Prompt Tweaking Engine to reduce irrelevant instructions and rules.
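
The sketch below illustrates pruning a prompt down to the rules a task actually needs. This summary does not say how PTE judges relevance, so the sketch uses plain keyword overlap as a stand-in test; a production version might instead rely on an LLM or embedding similarity. `Rule`, `tweak_prompt`, and the example rules are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Rule:
    text: str
    keywords: FrozenSet[str] = frozenset()  # empty means "core rule, always keep"


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def tweak_prompt(rules: List[Rule], task: str) -> str:
    """Keep core rules plus rules whose keywords appear in the task description."""
    words = tokens(task)
    kept = [r.text for r in rules if not r.keywords or r.keywords & words]
    return "\n".join(f"- {text}" for text in kept)


rules = [
    Rule("Answer concisely."),
    Rule("Convert prices to the user's currency.", frozenset({"price", "prices", "deal", "deals"})),
    Rule("Never book a flight without explicit confirmation.", frozenset({"flight", "book"})),
]
print(tweak_prompt(rules, "Find Nike deals under $100"))
# - Answer concisely.
# - Convert prices to the user's currency.
```

The flight-booking rule is dropped for a shopping task, so the model sees fewer competing instructions; the same rule list yields a different prompt for a travel request.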

Test-Time Computation and Reflection

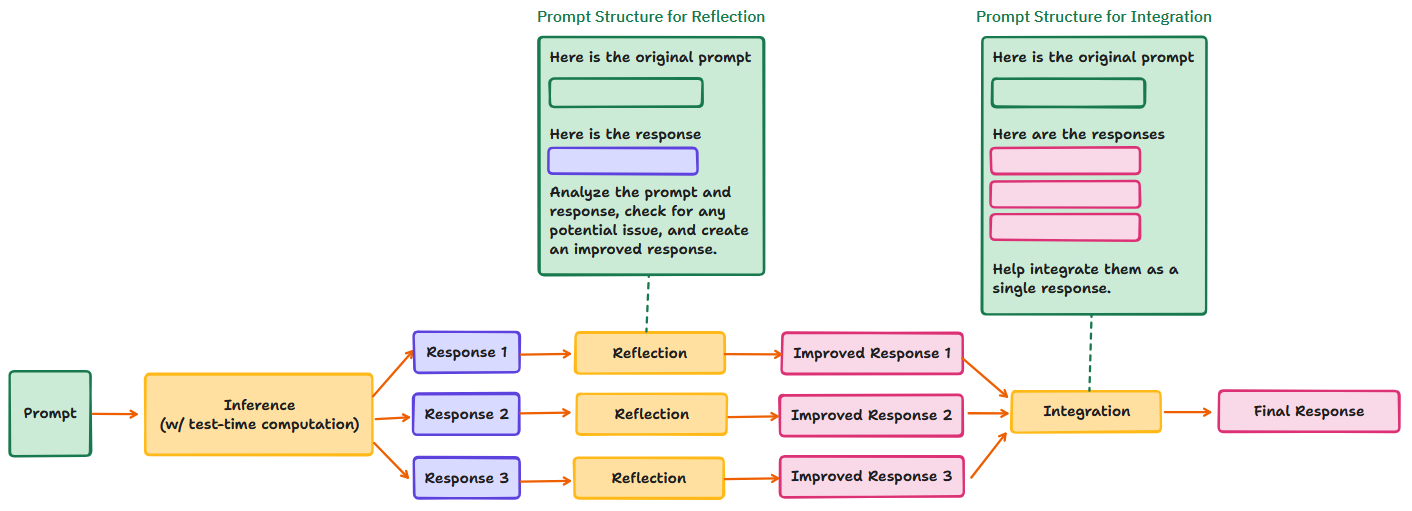

Deep Agent incorporates test-time computation strategies in which the LLM generates multiple responses concurrently, and each response goes through a reflection phase to improve its quality before being integrated into the final output. This improves performance in complex scenarios that require nuanced, user-specific responses.

Figure 5: Starting from a prompt, the system generates multiple responses using test-time computation.
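
The sample, reflect, and select pattern can be sketched with the standard library's `ThreadPoolExecutor`. `generate`, `reflect`, and `score` are hypothetical callbacks standing in for LLM calls. The integration step is simplified here to picking the highest-scoring revision; merging the candidates into one answer would be an equally valid reading of the text.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def sample_reflect_select(
    prompt: str,
    generate: Callable[[str, int], str],   # hypothetical LLM call; the int varies the sample
    reflect: Callable[[str, str], str],    # hypothetical critique-and-revise pass
    score: Callable[[str, str], float],    # hypothetical quality judge
    n: int = 4,
) -> Tuple[str, List[str]]:
    """Draft n responses concurrently, reflect on each, and return the best revision."""

    def draft_and_reflect(i: int) -> str:
        draft = generate(prompt, i)
        return reflect(prompt, draft)      # each candidate is revised independently

    with ThreadPoolExecutor(max_workers=n) as pool:
        revised = list(pool.map(draft_and_reflect, range(n)))  # map preserves input order
    best = max(revised, key=lambda r: score(prompt, r))
    return best, revised


best, candidates = sample_reflect_select(
    "Summarize today's Nike deals",
    generate=lambda p, i: f"draft{i}",
    reflect=lambda p, d: d + " (checked)",
    score=lambda p, r: int(r[5]),          # toy judge: prefers higher draft numbers
    n=4,
)
print(best)  # draft3 (checked)
```

Threads suit this pattern because real `generate` and `reflect` calls would mostly wait on network I/O to a model endpoint.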

In addition, a Validator checks execution reliability by analyzing snapshots taken after each action. When it detects an error, it triggers targeted re-planning, which makes execution more robust.
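
A minimal sketch of validator-driven re-planning, assuming each step can be checked by comparing a snapshot taken before the action with one taken after it. `Step`, `run_validated`, `replan`, and the shopping-cart example are hypothetical. The point is that a failed check re-plans only the failing step rather than the whole workflow.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Snapshot = Dict[str, object]  # hypothetical: observable state captured around an action


@dataclass
class Step:
    name: str
    action: Callable[[Snapshot], Snapshot]        # returns the post-action snapshot
    check: Callable[[Snapshot, Snapshot], bool]   # validator: (before, after) -> looks right?


def run_validated(
    steps: List[Step],
    state: Snapshot,
    replan: Callable[[Step, Snapshot, Snapshot], Step],
    max_replans: int = 1,
) -> Tuple[Snapshot, List[str]]:
    log: List[str] = []
    for step in steps:
        current, replans = step, 0
        while True:
            before = dict(state)
            after = current.action(before)
            if current.check(before, after):
                state = after
                log.append(f"{current.name}: ok")
                break
            # Simplification: a failed action is assumed to leave `state` unchanged.
            log.append(f"{current.name}: failed validation")
            if replans >= max_replans:
                raise RuntimeError(f"{step.name!r} still failing after {replans} re-plan(s)")
            # Targeted re-planning: only the failing step is replaced; earlier steps stand.
            current = replan(current, before, after)
            replans += 1
    return state, log


def cart_grew(before: Snapshot, after: Snapshot) -> bool:
    return after["cart"] == before["cart"] + 1


def replan(step: Step, before: Snapshot, after: Snapshot) -> Step:
    # Stand-in for asking the planner for another way to reach the same goal.
    return Step(f"{step.name} (re-planned)", lambda s: {**s, "cart": s["cart"] + 1}, step.check)


flaky = Step("add_to_cart", lambda s: dict(s), cart_grew)  # silently does nothing
final, log = run_validated([flaky], {"cart": 0}, replan)
print(final)  # {'cart': 1}
print(log)    # ['add_to_cart: failed validation', 'add_to_cart (re-planned): ok']
```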

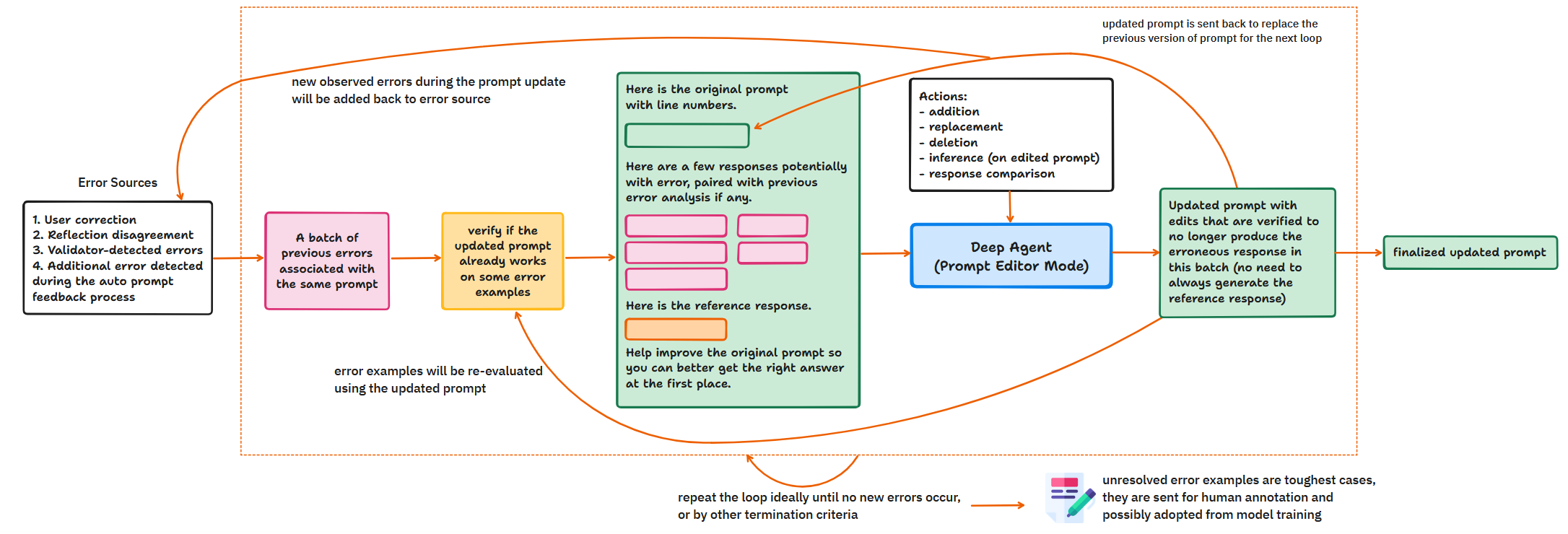

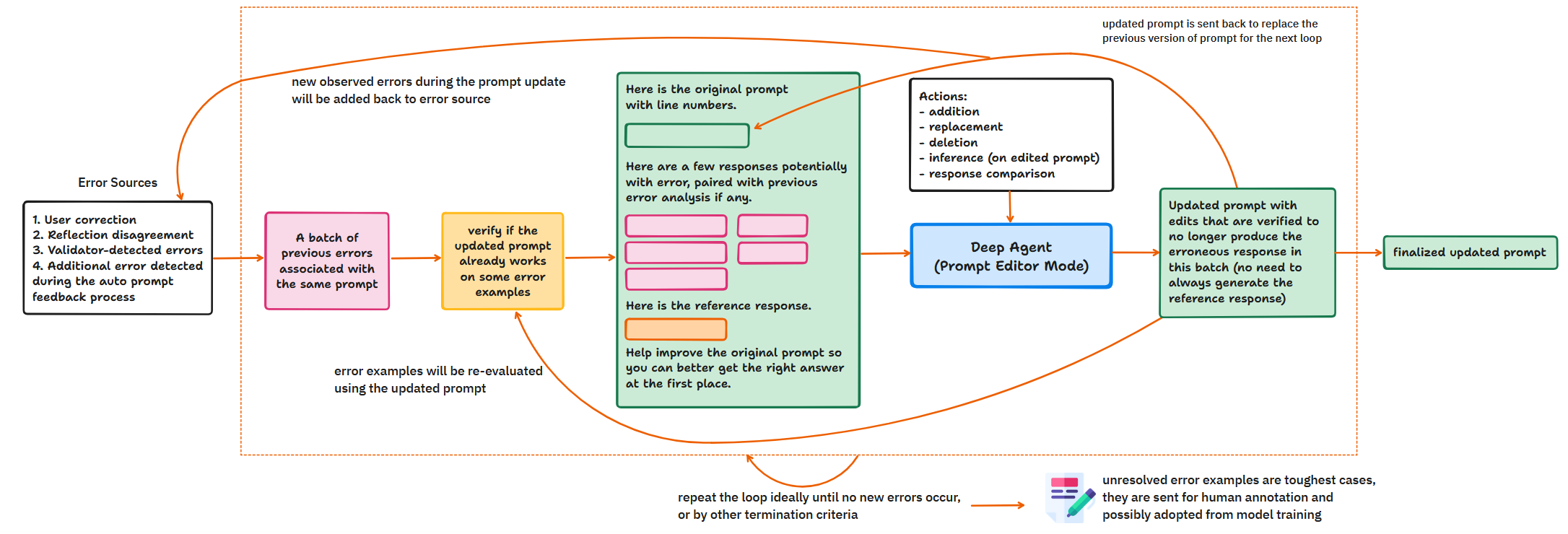

Autonomous Prompt Feedback Learning

The paper also describes an autonomous feedback learning pipeline that compiles error cases from various sources and uses them to refine prompts iteratively. This lets the agent's prompts improve continuously without retraining the model.

Figure 6: Autonomous prompt feedback learning pipeline illustrating the closed-loop process for continuous prompt improvement.

This closed-loop learning mechanism acts as a self-improving cycle that adapts over time, ensuring scalability and adaptability to new task requirements.
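
The closed loop can be sketched as follows: collect failing cases, ask for a revised prompt, and keep the revision only if it scores better on the accumulated cases. `feedback_loop`, `run`, `propose`, and the toy model are hypothetical. In practice `run` would be the agent executing under the prompt and `propose` an LLM that rewrites the prompt from the failures. Only the prompt text changes between rounds, mirroring the point that no model retraining is needed.

```python
from typing import Callable, List, Tuple

Case = Tuple[str, str]  # (input, expected output), e.g. from logs, user reports, or validators


def accuracy(prompt: str, cases: List[Case], run: Callable[[str, str], str]) -> float:
    if not cases:
        return 1.0
    return sum(run(prompt, x) == y for x, y in cases) / len(cases)


def feedback_loop(
    prompt: str,
    cases: List[Case],
    run: Callable[[str, str], str],              # hypothetical: the agent under this prompt
    propose: Callable[[str, List[Case]], str],   # hypothetical: LLM that rewrites the prompt
    rounds: int = 3,
) -> Tuple[str, List[float]]:
    """Iteratively revise the prompt from its failures; the model is never retrained."""
    history = [accuracy(prompt, cases, run)]
    for _ in range(rounds):
        failures = [(x, y) for x, y in cases if run(prompt, x) != y]
        if not failures:
            break
        candidate = propose(prompt, failures)
        # Accept only if accuracy on the full case set improves, so fixing some
        # failures cannot come at a net loss on cases that already passed.
        new_score = accuracy(candidate, cases, run)
        if new_score > history[-1]:
            prompt = candidate
            history.append(new_score)
    return prompt, history


def toy_run(prompt: str, x: str) -> str:
    return x.upper() if "UPPERCASE" in prompt else x


def toy_propose(prompt: str, failures: List[Case]) -> str:
    return prompt + " Reply in UPPERCASE."


final_prompt, history = feedback_loop("Be helpful.", [("hi", "HI"), ("ok", "OK")], toy_run, toy_propose)
print(final_prompt)  # Be helpful. Reply in UPPERCASE.
print(history)       # [0.0, 1.0]
```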

Conclusion

The "Autonomous Deep Agent" paper introduces an AI agent framework built around a Hierarchical Task DAG, autonomous API and tool creation, and advanced prompt optimization techniques. It represents a methodical evolution in autonomous agent design, emphasizing scalable and efficient management of complex, interdependent tasks. Through dynamic task decomposition, continuous self-improvement, and runtime adaptability, Deep Agent is a significant step toward multi-task AI systems that can manage intricate real-world workflows. Future research may expand its API generation capabilities and further refine its adaptive prompt strategies to improve operational efficiency.