Exploring Efficient Full Ranking with Long-Context LLMs

In the paper "Sliding Windows Are Not the End: Exploring Full Ranking with Long-Context LLMs," the authors examine whether LLMs can perform listwise passage ranking without the conventional sliding window strategy. That strategy incurs considerable computational redundancy, because overlapping windows re-evaluate the same passages, and it is slow, because the windows must be processed one after another. Instead, the authors use long-context LLMs that can take the entire candidate list as input in a single inference, with the aim of improving both the efficiency and the effectiveness of passage ranking.

Motivation and Key Insights

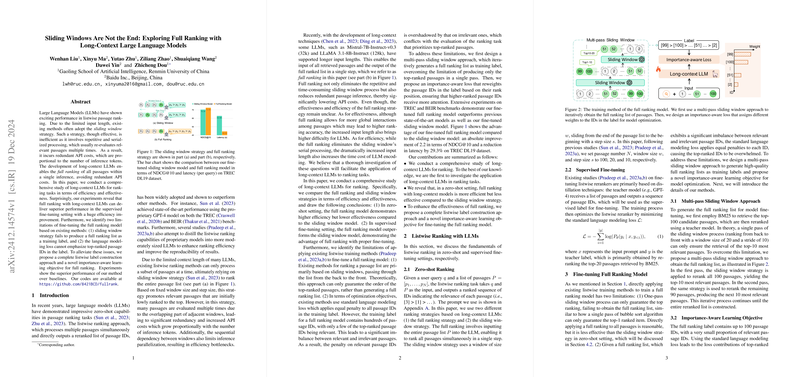

The customary sliding window approach to passage ranking, although effective, wastes computational resources and incurs redundant API costs. Because consecutive windows overlap, the same passages are evaluated multiple times; and because each window operates on the output of the previous one, the windows must be processed serially, which prevents parallel processing. With the advent of models that support much longer contexts, such as Mistral-7B-Instruct-v0.3 with a 32k-token limit and LLaMA 3.1-8B-Instruct with 128k tokens, it becomes possible to feed the complete set of candidate passages to the model at once and rank them in a single pass, an approach termed "full ranking."
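To make the redundancy and the sequential dependency concrete, here is a minimal sketch of back-to-front sliding window reranking. It assumes a window size of 20 and a step of 10 (settings commonly used with RankGPT-style rerankers, not values stated in this summary), and `rank_window` is a hypothetical stand-in for a single LLM call; a toy oracle that sorts integers by value plays that role here.

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")


def sliding_window_rerank(
    candidates: List[T],
    rank_window: Callable[[List[T]], List[T]],
    window_size: int = 20,
    step: int = 10,
) -> Tuple[List[T], int]:
    """Back-to-front sliding window reranking (illustrative sketch).

    `rank_window` stands in for one LLM call that returns its input passages
    reordered from most to least relevant. Returns the reranked list and the
    total number of passages sent to the ranker across all calls.
    """
    ranked = list(candidates)
    passages_sent = 0
    end = len(ranked)
    while True:
        start = max(0, end - window_size)
        # This call consumes the output of the previous window, so the loop
        # cannot be parallelized.
        ranked[start:end] = rank_window(ranked[start:end])
        passages_sent += end - start
        if start == 0:
            return ranked, passages_sent
        # Consecutive windows overlap by window_size - step passages,
        # which are therefore read again.
        end -= step


def oracle(window: List[int]) -> List[int]:
    # Toy ranker: passages are integers and a larger value is more relevant.
    return sorted(window, reverse=True)


candidates = list(range(100))  # worst case: the best passages start at the bottom
ranked, sent = sliding_window_rerank(candidates, oracle)
full = oracle(candidates)      # full ranking: one call over all 100 passages
print(sent, len(candidates))
print(ranked[:10] == full[:10])
print(ranked[10] == full[10])
```

With 100 candidates the sketch makes 9 sequential calls and sends 180 passages to the ranker, versus 100 passages in a single full-ranking call. It also shows that one pass only guarantees the top `window_size - step` positions: the 11th slot holds passage 9 rather than passage 89.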

Methodology

The authors identify two major challenges in fine-tuning LLMs for full ranking with existing methods. First, constructing a complete and accurate ranked list for training is difficult, because a sliding window pass reliably orders only the top-ranked items. Second, existing LLM training losses weight every output token equally, so they do not place extra emphasis on ranking the top passages correctly. To address these, the authors propose a multi-pass sliding window technique that produces a complete ranked list by iteratively re-ranking the passages, together with an importance-aware learning objective that weights the loss according to each passage's rank, so that the model learns to discriminate more carefully among the top-ranked passages.
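Both ingredients can be sketched under clearly stated assumptions. `multi_pass_full_ranking` is a hypothetical reading of the multi-pass idea: each sliding window pass freezes the block of top positions it orders reliably, and the remaining passages are re-ranked in the next pass. `importance_weighted_nll` illustrates a rank-aware objective using a DCG-style weight of 1 / log2(r + 1) for rank r. That weighting, the one-token-per-identifier simplification, the toy teacher, and all function names are assumptions for illustration, not the paper's exact formulation.

```python
import math
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def sliding_window_pass(items: List[T], rank_window: Callable[[List[T]], List[T]],
                        window_size: int = 20, step: int = 10) -> List[T]:
    """One back-to-front sliding window pass over `items`."""
    ranked = list(items)
    end = len(ranked)
    while True:
        start = max(0, end - window_size)
        ranked[start:end] = rank_window(ranked[start:end])
        if start == 0:
            return ranked
        end -= step


def multi_pass_full_ranking(items: List[T], rank_window: Callable[[List[T]], List[T]],
                            window_size: int = 20, step: int = 10) -> List[T]:
    """Hypothetical multi-pass labelling: freeze the reliable top block, re-rank the rest."""
    keep = window_size - step  # positions a single pass orders reliably
    if keep <= 0:
        raise ValueError("window_size must be larger than step")
    remaining, full_list = list(items), []
    while remaining:
        ranked = sliding_window_pass(remaining, rank_window, window_size, step)
        full_list.extend(ranked[:keep])
        remaining = ranked[keep:]
    return full_list


def rank_weights(n: int) -> List[float]:
    # Assumed DCG-style discount: the identifier at rank r (1-based) gets 1 / log2(r + 1).
    return [1.0 / math.log2(r + 1) for r in range(1, n + 1)]


def importance_weighted_nll(target_logprobs: Sequence[float]) -> float:
    """Rank-weighted negative log-likelihood of a target ranking.

    target_logprobs[i] is the model's log-probability of the correct passage
    identifier at output position i (i = 0 is rank 1), assuming one token
    per identifier.
    """
    weights = rank_weights(len(target_logprobs))
    weighted = sum(w * lp for w, lp in zip(weights, target_logprobs))
    return -weighted / sum(weights)


def by_value(window: List[int]) -> List[int]:
    # Toy stand-in for the teacher LLM: a larger integer is more relevant.
    return sorted(window, reverse=True)


labels = multi_pass_full_ranking(list(range(100)), by_value)
print(labels == list(range(99, -1, -1)))

base = [-0.1] * 10
miss_top = [-2.0] + base[1:]     # confident mistake at rank 1
miss_bottom = base[:9] + [-2.0]  # the same mistake at rank 10
print(importance_weighted_nll(miss_top) > importance_weighted_nll(miss_bottom))
```

With a perfect toy ranker the multi-pass procedure recovers the complete ordering, and the same log-probability mistake costs more at rank 1 than at rank 10, which is the behaviour a rank-aware objective is meant to encourage.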

Experimental Analysis

Extensive experiments show that full ranking can be more effective than sliding window approaches, particularly after task-specific supervised fine-tuning. The efficiency gain comes immediately from eliminating redundant passage evaluations, and it grows further in realistic settings where only a subset of results, typically the top ranks, is needed: a full ranking model can stop generating once it has emitted those top-ranked identifiers, whereas the sliding window must still process every window to find them. For example, experiments with Mistral-7B as the base model show notable efficiency improvements, with the full ranking model running faster and at lower overhead cost than the traditional sliding window approach.
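A back-of-the-envelope count shows where these savings come from. The sketch below is a hypothetical cost model, assuming a window of 20, a step of 10, and that each sliding window call emits a full permutation of the passages it reads; `RankingCost`, `sliding_window_cost` and `full_ranking_cost` are illustrative names, not part of any library.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class RankingCost:
    llm_calls: int      # number of LLM invocations
    sequential: bool    # True if each call must wait for the previous one
    passages_read: int  # passages placed in prompts, summed over all calls
    ids_generated: int  # passage identifiers decoded, summed over all calls


def sliding_window_cost(n: int, window: int = 20, step: int = 10) -> RankingCost:
    # Windows move from the bottom of the list to the top in increments of `step`.
    calls = 1 if n <= window else math.ceil((n - window) / step) + 1
    # Every window is full except possibly the topmost one.
    read = (calls - 1) * window + (n - (calls - 1) * step)
    # Assumption: each window emits a full permutation of the passages it read.
    return RankingCost(calls, calls > 1, read, read)


def full_ranking_cost(n: int, top_k: Optional[int] = None) -> RankingCost:
    # One call reads every passage once; decoding can stop after top_k identifiers.
    emitted = n if top_k is None else min(top_k, n)
    return RankingCost(1, False, n, emitted)


print(sliding_window_cost(100))
print(full_ranking_cost(100, top_k=10))
```

For 100 candidates the sliding window needs 9 sequential calls that read 180 passages and emit 180 identifiers, whereas full ranking reads each passage once and, if only the top 10 are required, emits 10 identifiers. These are counts rather than latencies: a single long prompt has its own attention cost, so actual speedups depend on the model, hardware and serving setup.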

Results and Implications

The fine-tuned full ranking model significantly outperforms both proprietary and open-source baselines, with a 2.2-point increase in NDCG@10 on TREC DL19 and a 29.3% reduction in per-query latency under practical conditions. These results suggest that, with appropriate training, full ranking can overcome the accuracy shortfall observed in zero-shot settings, offering a path to better passage ranking in large-scale applications, particularly where efficiency translates directly into computational cost and throughput.
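Since the headline result is stated in NDCG@10, a small reference implementation may help when reading it. This is a minimal sketch that uses linear gain (the graded relevance label itself is the gain; some toolkits use 2^rel - 1 instead), and the judgments below are made-up toy data. Scores in papers are commonly reported multiplied by 100, so a 2.2-point gain corresponds to roughly 0.022 on the 0 to 1 scale used here.

```python
import math
from typing import Dict, List, Sequence


def dcg_at_k(gains: Sequence[float], k: int) -> float:
    # The gain at rank r (1-based) is discounted by log2(r + 1).
    return sum(g / math.log2(r + 1) for r, g in enumerate(gains[:k], start=1))


def ndcg_at_k(ranking: List[str], qrels: Dict[str, int], k: int = 10) -> float:
    """NDCG@k with linear gain: the graded relevance label is used as the gain."""
    gains = [qrels.get(doc_id, 0) for doc_id in ranking]  # unjudged docs count as 0
    ideal = sorted(qrels.values(), reverse=True)          # best possible ordering
    idcg = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / idcg if idcg > 0 else 0.0


# Toy judgments on a 0-3 scale (made up for illustration).
qrels = {"d1": 3, "d2": 2, "d3": 0, "d4": 1}
print(ndcg_at_k(["d1", "d2", "d4", "d3"], qrels))  # ideal order
print(ndcg_at_k(["d2", "d1", "d4", "d3"], qrels))  # top two swapped
```

The ideal ordering scores exactly 1.0, and swapping the two most relevant documents lowers the score, because mistakes near the top of the list are discounted least.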

Speculative Outlook

The research opens further avenues of investigation into the scalability of long-context LLMs, for example pushing beyond their current context limits so that even larger candidate sets can be ranked without loss of effectiveness. Adapting model architectures and developing specialized LLM frameworks that handle ranking-specific tasks more efficiently could mark the next frontier in the field. It may also be worth exploring how these models can be integrated into, or fine-tuned for, hybrid systems that combine retrieval with the broader range of LLM applications beyond traditional ranking.

In conclusion, using long-context LLMs for passage ranking not only removes the inherent inefficiencies of the sliding window approach but also signals a shift toward more holistic, context-aware systems that can make nuanced ranking decisions without the prohibitive costs traditionally associated with large-scale LLM computation.