Investigating LLMs as Passage Re-Ranking Agents

The paper "Is ChatGPT Good at Search? Investigating LLMs as Re-Ranking Agents" examines how well LLMs, specifically ChatGPT and GPT-4, perform on information retrieval tasks, with a focus on passage re-ranking. The authors ask whether LLMs, originally designed for generative tasks, can be used to rank passages by relevance, an area where traditional methods require substantial human annotation and show limited generalizability.

Key Contributions

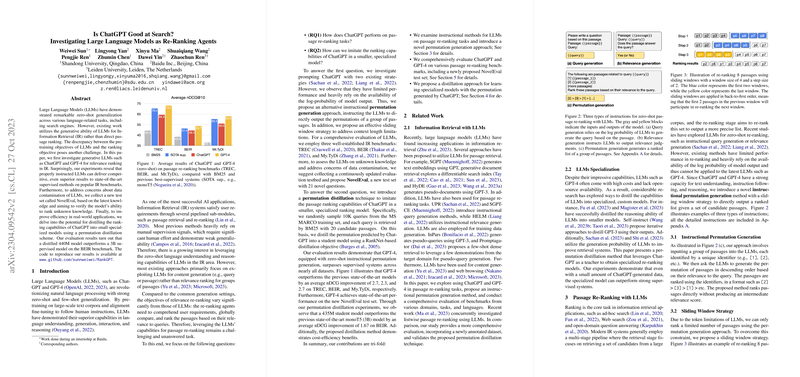

- Instructional Permutation Generation: The paper introduces a novel instructional approach for LLMs, termed permutation generation, which ranks a set of passages by instructing the model to directly generate their permutation in order of relevance. Because the ranking is read from the generated text itself, the approach avoids the heavy reliance of prior methods on the model's log-probabilities and fits more naturally with how LLMs operate.

- Comprehensive Evaluation: The authors evaluate ChatGPT and GPT-4 on established IR benchmarks, including the TREC, BEIR, and Mr.TyDi datasets. In their experiments, GPT-4 outperforms state-of-the-art supervised systems, with an average nDCG improvement of 2.7 on TREC.

- NovelEval Test Set: To address concerns about data contamination, the authors construct a new test set, NovelEval. It tests the LLMs’ ability to rank passages about recent knowledge that the models have not been exposed to before.

- Permutation Distillation Technique: The paper explores distilling the ranking capability of ChatGPT into smaller specialized models, which are more efficient and cost-effective to deploy in real-world applications. Specifically, a distilled 440M model outperforms a 3B monoT5 model on the BEIR benchmark, showing that a specialized model can capture the ranking behavior of a larger LLM with far fewer resources.
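The permutation generation idea from the first contribution can be illustrated with a minimal Python sketch. It is an assumption-laden illustration, not the authors' implementation: the prompt wording, the `[2] > [1] > [3]` answer format, and the helper names `build_prompt` and `parse_permutation` are all hypothetical, and the model reply is hard-coded rather than fetched from an LLM.

```python
import re


def build_prompt(query: str, passages: list[str]) -> str:
    """Number each passage and ask the model for a ranking of identifiers.

    The wording below is illustrative only, not the prompt used in the paper.
    """
    numbered = [f"[{i}] {p}" for i, p in enumerate(passages, start=1)]
    return (
        f"Rank the {len(passages)} passages below by relevance to the query.\n"
        + "\n".join(numbered)
        + f"\nQuery: {query}\n"
        + "Answer with identifiers only, most relevant first, e.g. [2] > [1] > [3]."
    )


def parse_permutation(response: str, n: int) -> list[int]:
    """Turn the model's text into a 0-based ordering that covers all n passages."""
    order: list[int] = []
    for token in re.findall(r"\d+", response):
        idx = int(token) - 1
        # Ignore identifiers that are out of range or already seen.
        if 0 <= idx < n and idx not in order:
            order.append(idx)
    # Passages the model left out keep their original relative order at the end.
    missing = [i for i in range(n) if i not in order]
    return order + missing


passages = ["Paris is in France.", "BM25 is a ranking function.", "Cats sleep a lot."]
prompt = build_prompt("what is BM25", passages)

# Hypothetical model reply with a repeated and an invalid identifier;
# a real system would send `prompt` to an LLM at this point.
reply = "[2] > [1] > [2] > [7]"
ranking = parse_permutation(reply, len(passages))
reranked = [passages[i] for i in ranking]
```

The parser is deliberately tolerant: generated text can repeat or omit identifiers, so the sketch deduplicates and then appends anything missing instead of failing.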

Implications and Future Directions

These findings have substantial practical and theoretical implications for AI and information retrieval:

- Efficiency: Deploying distilled models instead of large LLMs such as GPT-4 in commercial settings can reduce computational overhead and latency while maintaining competitive performance.

- Adaptability: The proposed permutation generation method shows promise for leveraging LLMs in tasks beyond their initial design scope, opening avenues for LLMs to tackle diverse ranking and decision-making tasks with simple instructional paradigms.

- Regular Test Update: The authors’ introduction of continuously updated test sets like NovelEval could set a precedent in maintaining the relevance and robustness of LLM evaluation methodologies.
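To make the distillation idea behind the efficiency point more concrete, the sketch below shows one way a teacher's permutation could become a training signal for a smaller scoring model. It assumes a RankNet-style pairwise logistic loss, which is a common choice for this kind of objective; this summary does not state which loss the authors use, and the function name `ranknet_loss` and the averaging over pairs are choices made here for illustration.

```python
import math


def ranknet_loss(teacher_order: list[int], student_scores: list[float]) -> float:
    """Average pairwise logistic loss against a teacher permutation.

    teacher_order lists passage indices from most to least relevant.
    For every pair (i, j) where the teacher ranks i above j, the student is
    penalised unless student_scores[i] is clearly larger than student_scores[j].
    """
    total = 0.0
    pairs = 0
    for pos, i in enumerate(teacher_order):
        for j in teacher_order[pos + 1:]:
            margin = student_scores[i] - student_scores[j]
            # Numerically stable form of log(1 + exp(-margin)).
            total += max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
            pairs += 1
    return total / pairs if pairs else 0.0


teacher_order = [1, 0, 2]  # hypothetical teacher ranking: passage 1, then 0, then 2
agreeing = ranknet_loss(teacher_order, [1.0, 2.0, 0.0])     # student agrees with teacher
disagreeing = ranknet_loss(teacher_order, [1.0, 0.0, 2.0])  # student reverses the order
```

A student whose scores follow the teacher's order receives a lower loss than one that reverses it, so minimising this quantity pushes the small model toward the teacher's ranking without requiring human relevance labels.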

In terms of future research, further investigation into the stability and robustness of instructional methods across different languages and domains could be beneficial. Additionally, integrating these re-ranking paradigms with the initial retrieval stage could yield insights into building end-to-end retrieval systems that are both efficient and high-performing.

The paper offers a methodical exploration of adapting LLMs for search-related tasks, providing empirically validated strategies that improve ranking performance while keeping a focus on practical applicability for real-world systems.