An Evaluation Framework for Long-Context Understanding in LLMs: The LongIns Benchmark

The paper "LongIns: A Challenging Long-context Instruction-based Exam for LLMs" addresses a gap in how LLMs are evaluated on long-context tasks. Existing benchmarks assess retrieval well but fall short in evaluating comprehensive understanding of long sequences. The paper introduces LongIns, a benchmark designed to evaluate how well LLMs process long contexts beyond simple retrieval.

The Problem Statement

Most current benchmarks focus on retrieval tasks, where models need to identify key information within long contexts. These benchmarks do not adequately test LLMs' reasoning capabilities when faced with extended texts. Moreover, although LLMs advertise context windows ranging from 32k to 10M tokens, these benchmarks do not reveal the maximum length a model can actually comprehend. The gap between claimed context window sizes and realistic processing ability remains largely unexplored.

The LongIns Benchmark

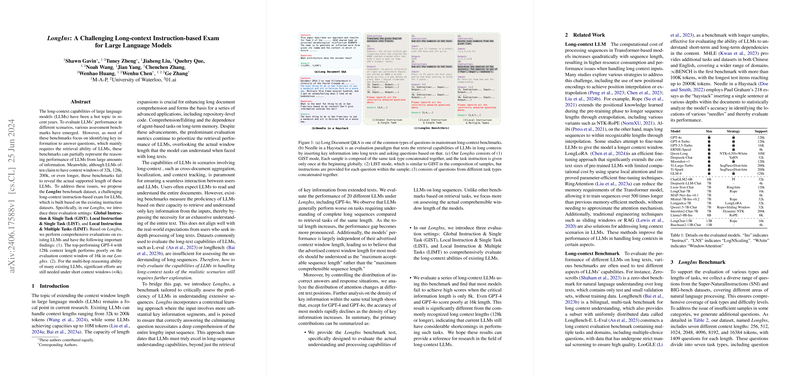

To address these issues, the authors present LongIns, a benchmark dataset synthesizing multiple tasks derived from existing instruction datasets. LongIns evaluates the proficiency of LLMs through three distinct evaluation settings:

- Global Instruction & Single Task (GIST): Samples composed of a single task type with one overarching instruction.

- Local Instruction & Single Task (LIST): Similar to GIST but with instructions provided for each individual task within the sample.

- Local Instruction & Multiple Tasks (LIMT): Samples containing questions from different tasks, each with local instructions.
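
The three settings differ mainly in where the instruction sits relative to the questions. The following is a minimal sketch of that difference, not the paper's construction code: the `Question` record, the function names, and the numbered-list layout are all assumptions made for illustration, and the actual LongIns sample format may differ.

```python
from dataclasses import dataclass


@dataclass
class Question:
    # All fields are hypothetical; they are not taken from the LongIns dataset.
    task: str         # task name, e.g. "ner"
    instruction: str  # instruction text for that task
    text: str         # the question itself


def build_gist(questions: list[Question]) -> str:
    """Global instruction, single task: the instruction appears once at the top."""
    tasks = {q.task for q in questions}
    if len(tasks) != 1:
        raise ValueError("GIST expects questions from exactly one task")
    body = "\n".join(f"{i}. {q.text}" for i, q in enumerate(questions, 1))
    return f"{questions[0].instruction}\n{body}"


def build_local(questions: list[Question], single_task: bool) -> str:
    """Local instructions: each question carries its own instruction.

    single_task=True corresponds to LIST, single_task=False to LIMT.
    """
    tasks = {q.task for q in questions}
    if single_task and len(tasks) != 1:
        raise ValueError("LIST expects questions from exactly one task")
    return "\n".join(
        f"{i}. {q.instruction}\n{q.text}" for i, q in enumerate(questions, 1)
    )
```

In this sketch, a GIST sample states the instruction once no matter how many questions follow, while LIST and LIMT samples repeat an instruction next to every question, so each question stays close to the instruction that governs it.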

Main Findings

The authors tested LongIns on 20 existing LLMs, including state-of-the-art models such as GPT-4. The key findings were:

- Performance Degradation with Increased Context: GPT-4, despite supporting a 128k context length, performed poorly on tasks with a context window of only 16k, highlighting a gap between advertised and effective context length.

- Multi-hop Reasoning Challenges: Many models struggled with multi-hop reasoning even within short context windows (under 4k tokens), indicating that significant improvement is still needed before they can reliably understand and reason over long texts.

Detailed Analysis

- Instruction Dependence: The LIST setting generally led to higher scores compared to GIST, suggesting that proximity to instructions significantly affects model performance. This has practical implications for designing prompts in multi-turn conversations.

- Task Type Variance: Models performed better on tasks with consistent question formats (e.g., Named Entity Recognition) due to enhanced in-context learning ability, but struggled with tasks requiring varied common sense reasoning.

Positional and Density Impact

The paper also examined performance variation across different text positions and key information densities:

- Positional Effects: Models showed higher accuracy for questions placed at the beginning of the context, with performance declining as the text length increased. This aligns with findings from "Needle in a Haystack" and "Lost in the Middle".

- Density of Key Information: Increased question density within texts negatively impacted model performance. Yet, GPT-4 demonstrated robustness, maintaining high accuracy even in high-density scenarios.
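
A positional analysis of this kind can be approximated by grouping per-question results into buckets by relative position and computing accuracy per bucket. The sketch below is illustrative only: the function name, the record layout, and the equal-width bucketing are assumptions, not the paper's evaluation code.

```python
from collections import defaultdict


def accuracy_by_bucket(records, num_buckets=5):
    """Group (relative_position, is_correct) pairs into equal-width buckets.

    relative_position is assumed to be in [0, 1], where 0 is the start of
    the context and 1 is the end. Returns {bucket_index: accuracy} for the
    buckets that contain at least one record.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for position, is_correct in records:
        if not 0.0 <= position <= 1.0:
            raise ValueError(f"position out of range: {position}")
        # A position of exactly 1.0 falls into the last bucket.
        bucket = min(int(position * num_buckets), num_buckets - 1)
        totals[bucket] += 1
        hits[bucket] += int(is_correct)
    return {b: hits[b] / totals[b] for b in sorted(totals)}
```

The same grouping can be applied to density by passing a normalized question-density value in place of the position, which gives a comparable per-bucket accuracy curve.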

Practical and Theoretical Implications

The results have both practical and theoretical implications:

- Practical: For applications requiring long-context understanding, current LLMs may under-deliver despite their advertised capabilities. This suggests a need for more robust models capable of maintaining comprehensive understanding over longer texts.

- Theoretical: The research provides insights into the underlying complexities and challenges of long-context comprehension in LLMs, guiding future improvements in model architectures and training methodologies.

Future Directions

The paper opens avenues for future research and development:

- Improvement in Long-Term Dependency Handling: Developing techniques to better manage long-term dependencies and instruction following in LLMs.

- Enhanced Benchmarks: Creating more diverse and realistic task benchmarks to continuously challenge and improve LLMs’ comprehensive understanding capabilities.

- Efficient Training Methods: Exploring efficient training methods like LongLoRA and RingAttention to extend effective context window sizes without significantly increasing computational demands.

Conclusion

The LongIns benchmark provides a framework for critically evaluating the long-context capabilities of LLMs. By focusing on comprehensive understanding rather than simple retrieval, it exposes deficiencies in current-generation models and points toward more capable ones. The benchmark and its findings are relevant to both academic research and practical applications, and they give a clear direction for improving long-context understanding in LLMs.