Overview of "LLMs are Biased Evaluators but Not Biased for Retrieval Augmented Generation"

The paper "LLMs are Biased Evaluators but Not Biased for Retrieval Augmented Generation" presents an investigation into the biases of LLMs within the context of Retrieval-Augmented Generation (RAG) frameworks. The authors, Chen et al., aim to discern whether LLMs exhibit a preference for self-generated content over human-authored content and how this preference impacts the factuality of outputs in RAG scenarios. The paper is driven by two pivotal research questions: the presence of self-bias in LLMs and the capacity of these models to consistently reference correct, particularly human-authored, answers.

Methodology and Experiments

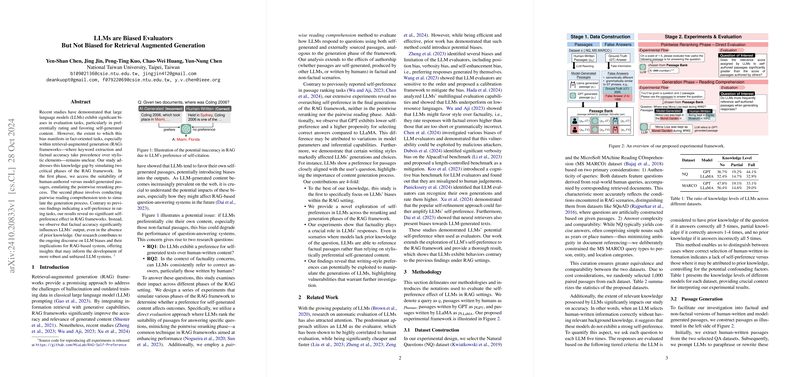

The methodology involves simulating two phases critical to RAG frameworks: the pointwise reranking phase and the generation phase. The pointwise reranking phase evaluates how LLMs rate the relevance of passages for answering questions, while the generation phase assesses self-preference through a pairwise reading comprehension task.

- Pointwise Reranking Phase: This phase identifies potential self-preference biases by directly prompting LLMs to score passages. The results indicate significant self-preference in a normal setting; however, this bias is mitigated within the RAG framework as LLMs showed less preference for model-generated passages compared to human-written ones. This observation holds primarily for GPT models, while LLaMA exhibits dataset-dependent variations.

- Generation Phase: This phase examines the reference selection tendency of LLMs by generating responses based on mixed-source passages. The findings reveal negligible self-preference for both GPT and LLaMA models. Both models displayed a marked ability to discern factual accuracy over self-generated content, illustrating a preference for factually correct passages.

Key Findings and Implications

The paper's findings contribute to the understanding of LLM biases and their implications for RAG systems:

- Factual Accuracy over Self-Preference: A central outcome is the predominant inclination of LLMs to prioritize factual accuracy over self-generated content, even when prior knowledge is absent. This finding suggests that RAG frameworks can mitigate the risk of self-preference biases, particularly those that might affect factual content selection.

- Minimal Bias in RAG Contexts: Contrary to the bias toward self-generated responses in standalone tasks, LLMs exhibit minimal self-preference during the passage selection phases of RAG scenarios. This highlights the robustness of RAG frameworks in utilizing LLM strengths while dampening bias effects.

- Dataset and Model Variability: The observed biases in LLaMA indicate potential model-dependent and dataset-dependent characteristics that merit further exploration. The differing performance between GPT and LLaMA stresses the importance of model-specific evaluations.

Future Directions

The implications of this work point towards substantial avenues for further research. Future studies could expand the dataset diversity and include a broader range of LLM architectures to validate the generality of these findings across different contexts. Additionally, exploring the integration of adaptive prompts to dynamically adjust bias in generation could enhance the robustness of RAG systems.

In sum, the paper provides valuable insights into the balancing of LLM-induced biases in RAG frameworks, underscoring the framework's potential to enhance the factual accuracy and fairness of AI-generated content. The findings encourage the continued development of strategies to optimize and harness LLM capabilities within information retrieval and generation systems.