Enhancing Factual Accuracy in LLMs for Domain-Specific Queries via the Retrieval Augmented Generation Method

Introduction

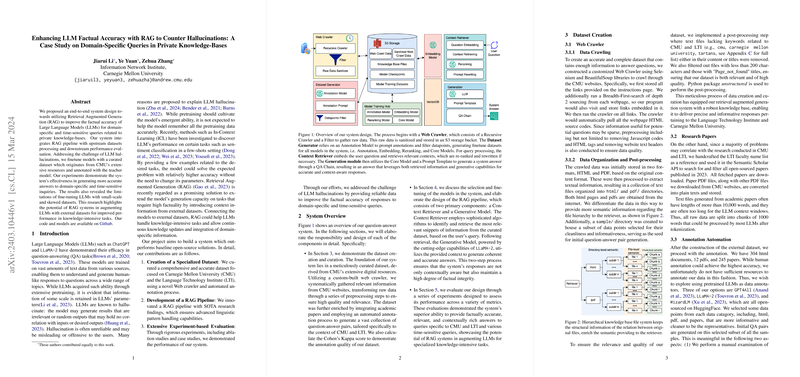

The complexity of deploying LLMs for accurate and reliable question-answering in domain-specific contexts is a significant challenge within the AI research community. Current models, despite their linguistic prowess, are prone to generating responses that either misinterpret the question or provide factually incorrect information—a phenomenon known as "hallucination." Recent efforts to ameliorate this issue have led to the exploration of Retrieval Augmented Generation (RAG) methods. The research presented focuses on developing an end-to-end system that leverages RAG to enhance the factual accuracy of LLM outputs, specifically targeting queries related to Carnegie Mellon University's (CMU) private knowledge bases. Key contributions include the creation of a specialized dataset capturing CMU's expansive digital landscape, the development of a robust RAG pipeline integrating state-of-the-art models, and extensive evaluation through rigorous experiments.

Dataset Creation

Comprehensive Data Curation

The foundation of the research lies in the creation of a dataset meticulously compiled from CMU’s digital repositories. The strategy involved deploying a custom web crawler designed to systematically extract information from pertinent web pages and academic resources. Special attention was given to refining this raw data through preprocessing steps that ensured the final dataset was both relevant and of high quality. Differentiating between HTML and PDF formats allowed for a finer understanding of CMU’s digital footprint.

Furthermore, academic papers associated with CMU faculty were included, providing a rich source of domain-specific knowledge. These documents underwent a process to make them compatible with LLMs by segmenting them into manageable chunks. This dataset not only fuels the RAG system but also serves as a cornerstone for model fine-tuning and validation.

Dataset Annotation and Evaluation

A novel approach was employed to annotate this extensive dataset, utilizing pre-existing LLMs as semi-automated annotators, thus overcoming resource constraints typically associated with manual annotation. An innovative annotation process was formalized, resulting in over 34,000 question-answer pairs. The reliability and consistency of these annotations were verified through calculating the Cohen's Kappa score, ensuring the dataset's utility for training and evaluation purposes.

Question-Answering Pipeline

Advanced System Design

At the core of the proposed system is a RAG pipeline designed for optimal retrieval and generation performance. The pipeline integrates a sophisticated Context Retriever alongside a Generative Model, leveraging the state-of-the-art capabilities of LLaMA-2. This approach enables the system to accurately understand user queries, retrieve relevant information from the curated dataset, and generate answers that are both contextually aware and factually accurate. The intricate design of the retrieval mechanism, coupled with the generative prowess of LLaMA-2, marks a significant step forward in addressing the challenge of LLM hallucinations.

Experimental Evaluation

Rigorous Performance Testing

The system undergoes thorough testing across multiple metrics to assess its effectiveness in enhancing LLM factual accuracy. Fine-tuning strategies for both the embedding model and LLaMA-2 are evaluated, showcasing the benefits and limitations inherent to each approach. The experimental phase includes ablation studies that dissect the contributions of individual components within the RAG pipeline. The findings reveal nuanced insights into the balance between retrieval accuracy and generative fluency, highlighting areas for future improvement.

Impactful Results

The evaluation demonstrates a notable improvement in the system's ability to provide factually correct answers to domain-specific and time-sensitive queries. By addressing the limitations of LLM hallucinations through a RAG approach, the research signifies a pivotal step towards realizing more reliable and accurate AI-driven question-answering systems.

Conclusion

The enhancement of LLM factual accuracy for domain-specific queries through a RAG system represents a meaningful advancement in the field of AI. The comprehensive dataset creation, innovative system design, and rigorous experimental validation collectively underscore the potential of RAG methodologies in overcoming the challenges posed by LLM hallucinations. This research not only contributes to the theoretical understanding of retrieval-augmented models but also paves the way for practical implementations of AI systems capable of delivering precise and reliable responses in knowledge-intensive domains.