Investigating the "Spiral of Silence" in Retrieval-Augmented Generation Systems with LLM-Generated Text

Introduction to the Study

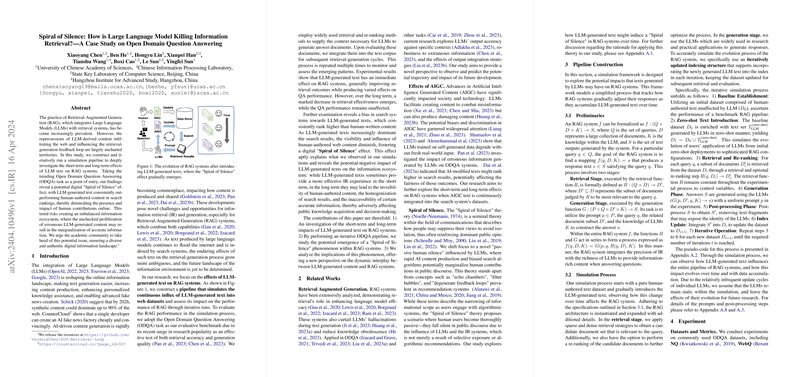

Integrating large language models (LLMs) into Retrieval-Augmented Generation (RAG) systems has significantly advanced online information processing, notably in Open Domain Question Answering (ODQA) tasks. The paper explores what happens when LLM-generated text enters the digital ecosystem and how it affects the performance of RAG systems over time. Through a series of simulations, it outlines the emergence of a digital "Spiral of Silence," in which human-authored content progressively loses visibility in search rankings to LLM-generated text.

Research Methodology

The paper employs a simulation framework that repeats the retrieval and generation steps, gradually adding LLM-generated text to a corpus that initially contains only human-written text. By evaluating ODQA performance at each iteration, the authors observe both the immediate and the long-term impact of LLM-generated content on retrieval effectiveness and on the accuracy of generated answers. The team used a broad range of retrieval and generation methods, along with several different LLMs, to examine how inserting synthetic content affects a RAG system.
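The iterate-retrieve-generate loop described above can be sketched roughly as follows. This is a minimal toy under stated assumptions, not the authors' framework: `Doc`, `overlap_score`, `retrieve`, `toy_generate`, and `simulate` are hypothetical names, the retriever is a simple token-overlap ranker, and the "LLM" is a stub that echoes the query plus the top passage (which by construction favors generated text in this toy ranking).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    text: str
    source: str  # "human" or "llm"


def overlap_score(query: str, doc: Doc) -> int:
    """Toy relevance score: number of distinct query tokens found in the document."""
    return len(set(query.lower().split()) & set(doc.text.lower().split()))


def retrieve(query: str, corpus: list[Doc], k: int = 2) -> list[Doc]:
    """Return the k highest-scoring documents; ties keep corpus order (stable sort)."""
    return sorted(corpus, key=lambda d: overlap_score(query, d), reverse=True)[:k]


def toy_generate(query: str, context: list[Doc]) -> str:
    """Stand-in for an LLM call (assumption): echo the query and the top passage."""
    return f"{query} {context[0].text}"


def simulate(queries: list[str], corpus: list[Doc], iterations: int = 3, k: int = 2):
    """Repeat retrieval and generation, feeding generated text back into the corpus.

    Returns the share of human-written documents among retrieved results at
    each iteration, plus the final corpus.
    """
    corpus = list(corpus)  # work on a copy
    human_share = []
    for _ in range(iterations):
        hits = [retrieve(q, corpus, k) for q in queries]
        n_human = sum(d.source == "human" for h in hits for d in h)
        human_share.append(n_human / (len(queries) * k))
        # Generated answers join the corpus and can be retrieved next round.
        corpus.extend(Doc(toy_generate(q, h), "llm") for q, h in zip(queries, hits))
    return human_share, corpus


queries = ["capital of france"]
seed = [
    Doc("paris is the capital of france", "human"),
    Doc("berlin is the capital of germany", "human"),
]
shares, final_corpus = simulate(queries, seed)
print(shares)
```

Tracking the human share of retrieved results per iteration is one simple way to watch for the displacement effect; a real study would swap in actual retrievers, LLMs, and ODQA datasets.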

Findings and Implications

Short-Term Observations

Introducing LLM-generated text into the corpus initially produces a noticeable improvement in retrieval accuracy, and this holds across different retrieval methods. The improvement does not translate uniformly into better QA performance, however, which indicates a complex interaction between retrieval effectiveness and answer accuracy in the short term.

Long-Term Analysis

Over successive iterations, retrieval performance declines as the system increasingly favors LLM-generated text over human-authored content. This shift does not harm QA performance, which suggests that the system's ability to generate accurate responses is fairly robust to the degraded retrieval.
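Because retrieval accuracy and QA performance can move independently, it helps to see that they are measured by separate checks. The sketch below is illustrative only: this summary does not state which metrics the paper uses, so a top-k answer hit for retrieval and normalized exact match for QA are assumed here as common ODQA choices, and the function names are hypothetical.

```python
import string


def normalize(text: str) -> str:
    """Lowercase, strip ASCII punctuation, and collapse whitespace."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def hit_at_k(passages: list[str], gold_answers: list[str], k: int) -> bool:
    """Retrieval check: does any of the top-k passages contain a gold answer?"""
    top = [normalize(p) for p in passages[:k]]
    return any(normalize(a) in p for a in gold_answers for p in top)


def exact_match(prediction: str, gold_answers: list[str]) -> bool:
    """QA check: does the normalized prediction equal a normalized gold answer?"""
    return normalize(prediction) in {normalize(a) for a in gold_answers}


ranked_passages = ["Berlin is in Germany.", "Paris is the capital of France."]
gold = ["Paris"]

print(hit_at_k(ranked_passages, gold, k=1))   # answer not in the top-1 passage
print(hit_at_k(ranked_passages, gold, k=2))   # answer appears within the top 2
print(exact_match("paris.", gold))            # matches after normalization
```

A retrieval hit does not guarantee a correct generated answer, and a generator can sometimes answer correctly without a hit, which is one way the two curves can diverge.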

Manifestation of the "Spiral of Silence"

The research highlights the digital "Spiral of Silence" effect: human-authored content tends to be overshadowed in search rankings by LLM-generated text, leading to a homogenization of information and a potential marginalization of diverse human perspectives. This effect, supported empirically by the simulation results, points to a future in which the unchecked growth of LLM-generated content could dilute the richness and accuracy of information available online.

Future Directions

Given the implications of the "Spiral of Silence" for RAG systems, subsequent research should explore mechanisms to counterbalance the effect. These include retrieval methods that are sensitive to the diversity of content sources and that validate the accuracy of information, so that LLM-generated and human-authored content are both fairly represented. To address the limitations the paper acknowledges, future studies could also examine how LLMs themselves change over time and what cumulative impact that has on the digital information ecosystem.
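As one hypothetical illustration of a source-sensitive retrieval step, the sketch below caps how many LLM-generated documents may appear in the top-k results. It is not a method proposed in the paper. It assumes each document carries a reliable `source` label, which is a strong assumption in practice, and `rerank_with_source_cap` and its parameters are invented names.

```python
def rerank_with_source_cap(ranked, k, max_llm):
    """Select up to k items from a best-first list of (doc_id, source) pairs.

    At most max_llm items labeled "llm" are taken on the first pass. If fewer
    than k items qualify, the best deferred LLM items backfill the result, so
    the cap is a preference rather than a hard filter.
    """
    selected, deferred = [], []
    llm_count = 0
    for doc_id, source in ranked:
        if len(selected) == k:
            break
        if source == "llm" and llm_count >= max_llm:
            deferred.append((doc_id, source))  # over the cap: hold back
            continue
        if source == "llm":
            llm_count += 1
        selected.append((doc_id, source))
    # Backfill with held-back items if the list is still short.
    selected.extend(deferred[: k - len(selected)])
    return selected


ranked = [("a", "llm"), ("b", "llm"), ("c", "llm"), ("d", "human"), ("e", "human")]
top = rerank_with_source_cap(ranked, k=3, max_llm=1)
print(top)
```

A cap like this trades some raw relevance for source diversity; whether that trade helps answer quality is exactly the kind of question the proposed follow-up research would need to test.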

Conclusion

The paper provides a foundational understanding of the short-term benefits and potential long-term drawbacks of integrating LLM-generated text into RAG systems. By describing the emergence of a "Spiral of Silence" effect, it calls for a nuanced approach to designing and managing RAG systems, with strategies that preserve the diversity and authenticity of the digital information landscape. The trajectory of AI-driven content generation, and its influence on public discourse, knowledge acquisition, and decision-making, remains a critical area for ongoing investigation and ethical consideration.