- The paper introduces a dual approach combining neural program synthesis and task generation to boost abstract reasoning performance on the ARC benchmark.

- It employs a typed DSL and a doubly-recurrent network to generate syntactically valid abstract syntax trees (ASTs) from latent representations.

- By synthesizing 183,282 tasks and leveraging learning-from-mistakes, the framework demonstrates significant improvements in solving complex reasoning tasks.

Learning to Solve Abstract Reasoning Problems with Neurosymbolic Program Synthesis and Task Generation

Introduction

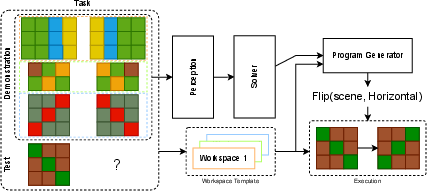

The paper "Learning to Solve Abstract Reasoning Problems with Neurosymbolic Program Synthesis and Task Generation" (2410.04480) presents a framework for improving how machine learning models handle abstract reasoning tasks. The method combines neural program synthesis with the generation of new tasks to address the challenges posed by the Abstract Reasoning Corpus (ARC). Programs are expressed in a typed domain-specific language (DSL), which supplies reusable abstractions and supports feature engineering for solving complex reasoning tasks.

Abstract Reasoning Corpus

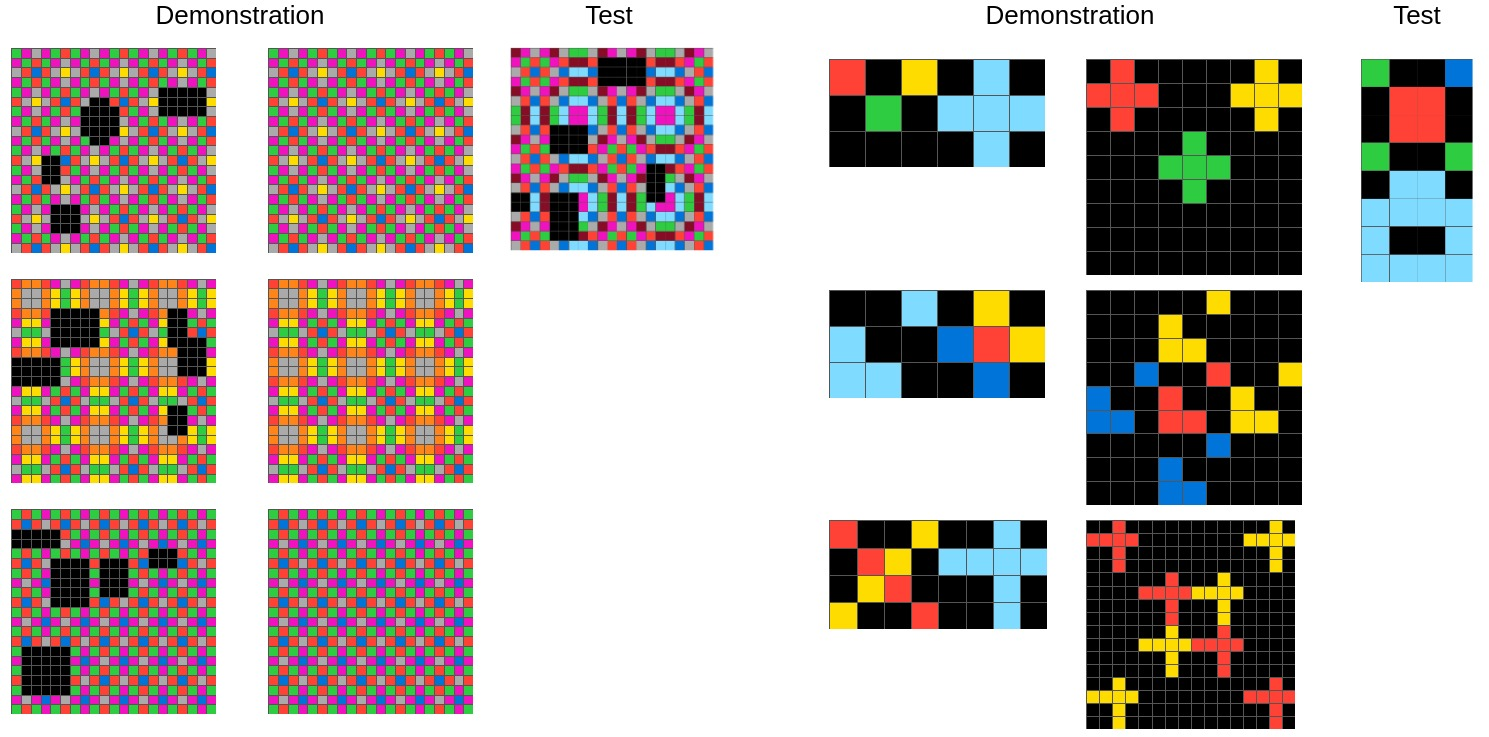

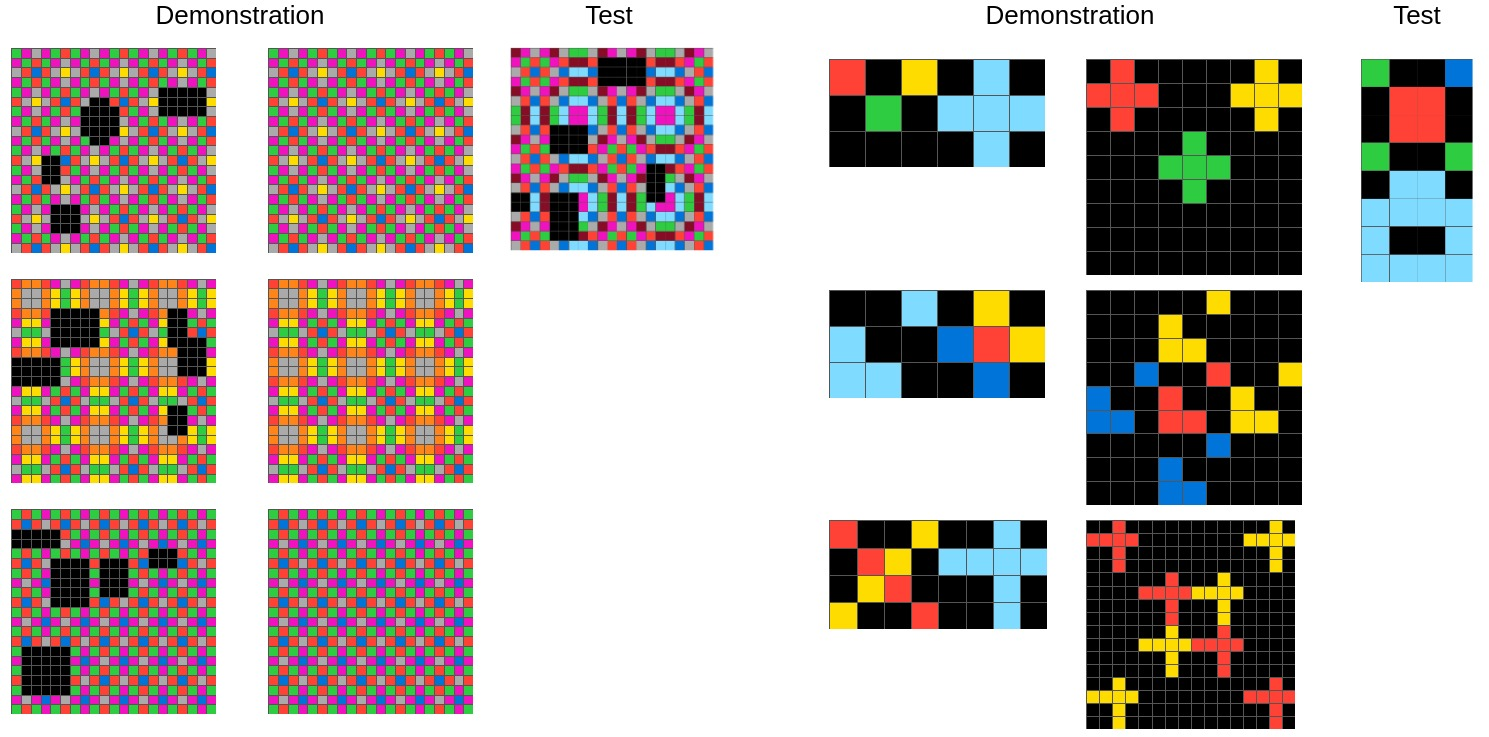

ARC is a benchmark designed to test a model's capacity for abstract reasoning. Its tasks require both symbolic processing and compositional reasoning. The tasks are visual and varied, and their solutions are not straightforward: the underlying rule must be inferred from a limited number of demonstrations. ARC's difficulty stems from the diversity of its tasks, which range from pixel-wise transformations to reasoning based on intuitive physics.

Figure 1: Examples from the Abstract Reasoning Corpus Dataset.
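To make the task format concrete, the following is a minimal sketch of how an ARC-style task could be represented and checked. It assumes grids are nested lists of integer color indices; the `flip_horizontal` rule, the `solves` helper, and the example grids are hypothetical illustrations, not taken from the paper or the dataset.

```python
from typing import Callable, List, Tuple

Grid = List[List[int]]      # each cell holds a color index
Pair = Tuple[Grid, Grid]    # (input grid, expected output grid)


def flip_horizontal(grid: Grid) -> Grid:
    """Hypothetical hidden rule: mirror every row left to right."""
    return [list(reversed(row)) for row in grid]


def solves(program: Callable[[Grid], Grid], pairs: List[Pair]) -> bool:
    """A program solves a task if it reproduces every demonstrated output."""
    return all(program(inp) == out for inp, out in pairs)


# A handful of demonstrations from which the rule has to be inferred.
demos: List[Pair] = [
    ([[1, 0], [2, 0]], [[0, 1], [0, 2]]),
    ([[3, 3, 0]], [[0, 3, 3]]),
]

# The solver must then apply the inferred rule to an unseen input.
test_input: Grid = [[0, 5], [0, 0]]
prediction = flip_horizontal(test_input)
```

The point of the sketch is that a candidate solution is a program, and it counts as correct only if it matches every demonstration exactly, which is why the identity function fails here while the mirroring rule succeeds.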

Proposed Approach

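The framework introduced in the paper combines several components: a typed DSL in which candidate programs are expressed, a doubly-recurrent network that generates syntactically valid abstract syntax trees (ASTs) from latent representations, and a training procedure that learns from mistakes.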

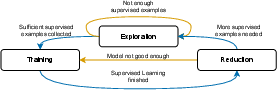
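The summary above notes that the framework uses a typed DSL to produce syntactically valid ASTs. The sketch below illustrates the general idea of type-constrained generation: at every node, only operations whose return type matches the required type are eligible, so every generated tree is well-typed by construction. The operation names and types in `SIGNATURES` are hypothetical, and a random choice stands in for the paper's doubly-recurrent network, which is not reproduced here.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical DSL: operation name -> (argument types, return type).
SIGNATURES: Dict[str, Tuple[Tuple[str, ...], str]] = {
    "input_grid": ((), "Grid"),
    "flip": (("Grid",), "Grid"),
    "recolor": (("Grid", "Color"), "Grid"),
    "red": ((), "Color"),
    "most_common_color": (("Grid",), "Color"),
}


@dataclass
class Node:
    op: str
    children: List["Node"]


def generate(required: str, depth: int, rng: random.Random) -> Node:
    """Build an AST whose root returns `required`, using only type-correct ops."""
    candidates = [n for n, (_, ret) in SIGNATURES.items() if ret == required]
    if depth <= 0:
        # Out of depth budget: allow only zero-argument operations (leaves).
        candidates = [n for n in candidates if not SIGNATURES[n][0]]
    op = rng.choice(candidates)  # stand-in for the learned model's decision
    arg_types, _ = SIGNATURES[op]
    return Node(op, [generate(t, depth - 1, rng) for t in arg_types])


def type_of(node: Node) -> str:
    """Independently type-check a tree and return the type of its root."""
    arg_types, ret = SIGNATURES[node.op]
    if len(arg_types) != len(node.children):
        raise TypeError(f"{node.op}: wrong number of arguments")
    for expected, child in zip(arg_types, node.children):
        if type_of(child) != expected:
            raise TypeError(f"{node.op}: expected {expected}")
    return ret


def render(node: Node) -> str:
    """Print the tree as a nested function call."""
    if not node.children:
        return node.op
    return f"{node.op}({', '.join(render(c) for c in node.children)})"


tree = generate("Grid", 3, random.Random(0))
print(render(tree))
```

Because invalid choices are filtered out before sampling, no generated program needs to be rejected for a type error afterwards; a learned generator would replace `rng.choice` with a distribution over the same filtered candidates.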

Learning from Mistakes

The framework incorporates a "learning from mistakes" strategy. When a generated program fails to solve a presented task, the framework builds a new task from the program's incorrect output, turning the failure into additional training data. The resulting synthetic dataset supports supervised learning and provides a progression of task difficulties, which gives the model a usable learning gradient.

Figure 3: The state diagram of the framework's training, including learning from mistakes.
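One plausible reading of the learning-from-mistakes step can be sketched as follows: when a candidate program fails on a task, the task's inputs are paired with the outputs the program actually produced, giving a new task for which that program is a correct solution by construction. The helper `learn_from_mistake`, the `transpose` program, and the example grids are hypothetical and are not the paper's implementation.

```python
from typing import Callable, List, Optional, Tuple

Grid = List[List[int]]
Pair = Tuple[Grid, Grid]
Program = Callable[[Grid], Grid]


def transpose(grid: Grid) -> Grid:
    """Hypothetical candidate program proposed by the synthesizer."""
    return [list(col) for col in zip(*grid)]


def learn_from_mistake(program: Program, pairs: List[Pair]) -> Optional[List[Pair]]:
    """Return a new synthetic task if `program` fails on `pairs`, else None."""
    if all(program(inp) == out for inp, out in pairs):
        return None  # the task is solved, so there is no mistake to learn from
    # Pair each original input with the (incorrect) output the program produced.
    return [(inp, program(inp)) for inp, _ in pairs]


# Original task: mirror each row. The candidate `transpose` does not solve it.
original_task: List[Pair] = [([[1, 2], [3, 4]], [[2, 1], [4, 3]])]

synthetic_task = learn_from_mistake(transpose, original_task)
```

The synthetic task comes with a known solution (the failed program itself), so it can be added to the supervised training set as a labeled (task, program) example.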

Experimental Results

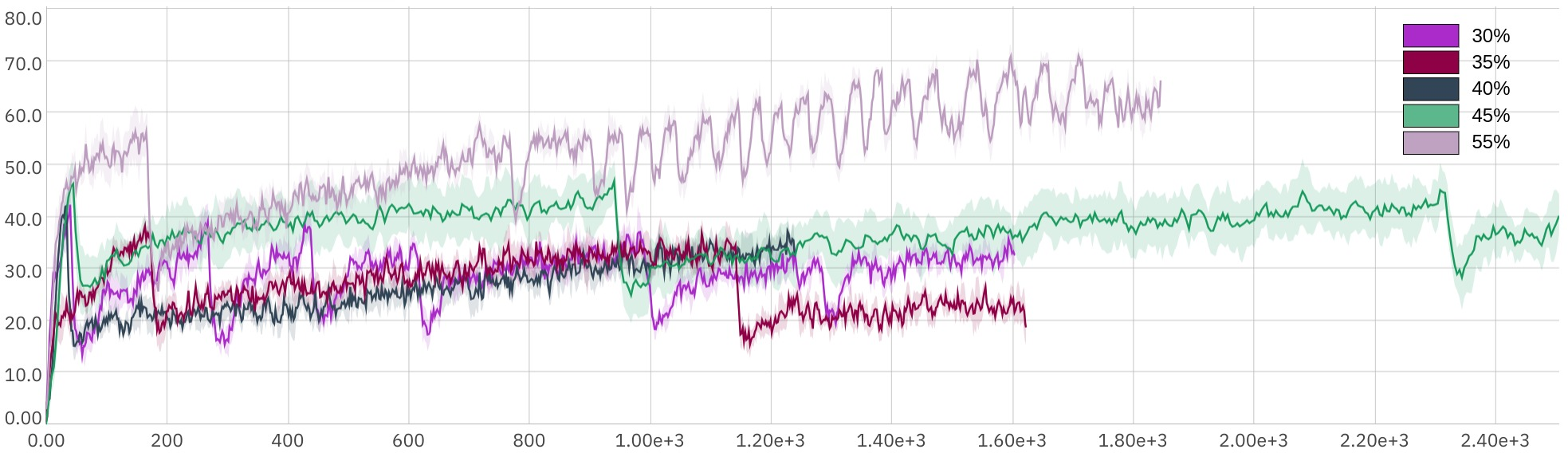

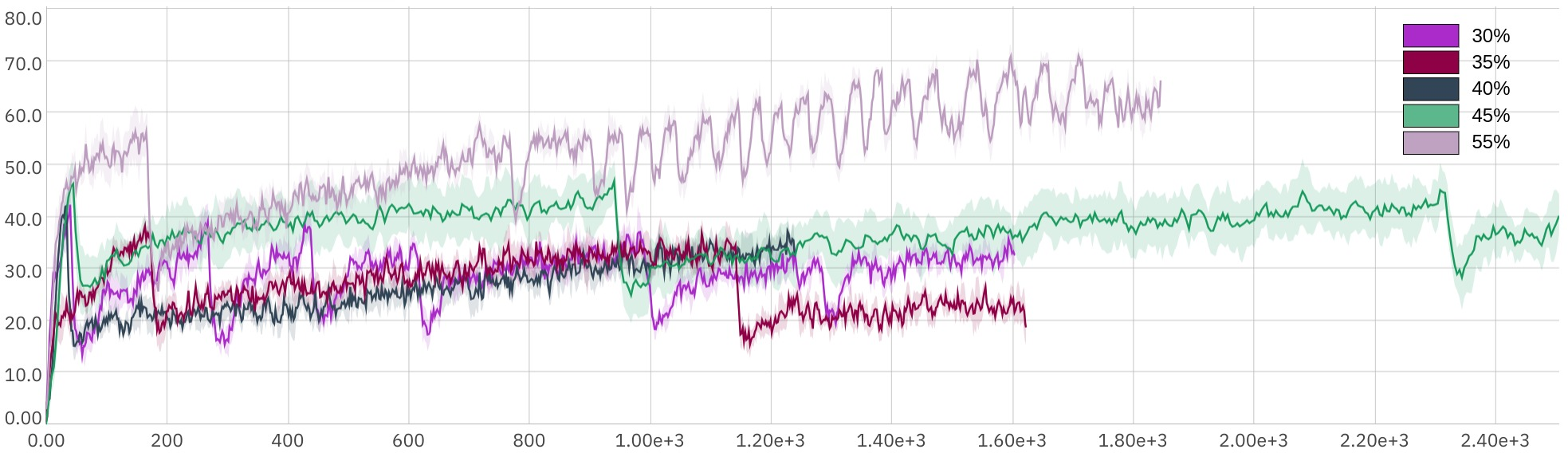

The method was evaluated on the ARC dataset. Over the course of training it synthesized a collection of 183,282 tasks, and the solving rate increased over successive training cycles.

Figure 4: Solving rate after each Reduction phase for runs with different exploration and reduction parameters.

Conclusion

The research advances neurosymbolic systems by merging program generation with task synthesis. This dual approach improves the model's problem-solving capacity through supervised learning on synthetic tasks, and it provides an adaptable framework that could potentially extend to other reasoning benchmarks. Future work might explore different domains or DSL variations to take further advantage of the architecture's modular nature.

Overall, the paper presents a structured method for solving abstract reasoning tasks, one that could develop into a more general approach to complex reasoning problems.