- The paper presents a novel analytical framework capturing user verbal and nonverbal behaviors in LLM-based voice assistant interactions.

- It categorizes the interaction process into exploration, conflict, and integration stages, detailing strategies to overcome communication challenges.

- The study suggests that integrating adaptive multimodal cues can improve voice assistant design, supporting more natural and effective human-machine communication.

Human and LLM-Based Voice Assistant Interaction

Introduction

The paper "Human and LLM-Based Voice Assistant Interaction: An Analytical Framework for User Verbal and Nonverbal Behaviors" presents a framework for analyzing how people interact with voice assistants (VAs) powered by large language models (LLMs). The study uses a task-oriented setting, a cooking scenario, to identify patterns in user behavior during interaction with an LLM-based VA.

Analytical Framework

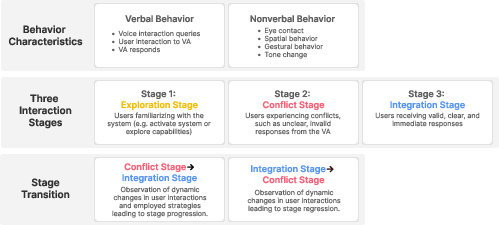

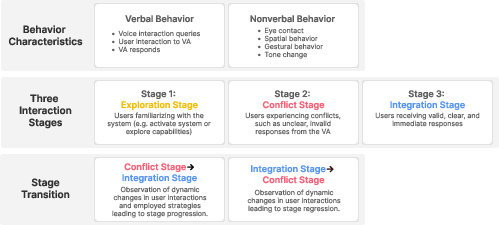

The framework captures three dimensions: behavior characteristics, interaction stages, and stage transitions.

Figure 1: The proposed analytical framework consists of three dimensions: 1) behavior characteristics, 2) the three interaction stages, and 3) stage transition.

Behavior Characteristics

The first dimension maps users' verbal and nonverbal behaviors. Verbal behaviors are categorized into user queries, interactions with VAs, and VA responses. Nonverbal behaviors, including eye contact, gestures, tone variations, and spatial adjustments, reveal how engaged users are and how fluently the interaction proceeds.

Interaction Stages

Three distinct interaction stages emerge from the analysis: exploration, conflict, and integration.

Exploration Stage

In this stage, users familiarize themselves with the system, often experimenting with its capabilities beyond task-specific commands. Typical challenges include remembering the activation phrase (wake word), and users frequently probe functionality beyond what the task requires.

Conflict Stage

In this stage, users encounter misunderstandings and incorrect responses. Miscommunications often stem from speech-to-text errors or from unexpected LLM behavior arising from its broad, sometimes ambiguous knowledge base. To resolve these issues, users combine verbal corrections with nonverbal cues such as raising their voice or adjusting their distance to the device.

Integration Stage

This stage is marked by smooth user-VA interaction in which the VA gives correct, contextually relevant responses. Users work with the VA effectively, and both verbal inquiries and nonverbal affirmations support task completion.

Stage Transition

Stage transitions, particularly from conflict to integration, reveal the strategies users rely on: rephrasing queries, adjusting their position relative to the device, and persisting in the conversation despite initial setbacks.

Figure 2: Scatter-plot timelines giving an overview of the stages each participant experienced over the course of the task.
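To make the stage and stage-transition dimensions concrete, the sketch below shows one way annotated session data could be represented and collapsed into timelines like those in Figure 2. It is a minimal illustration under stated assumptions, not the paper's annotation tooling: the `Event` schema, the cue labels such as `"raised_tone"`, and the helpers `stage_segments` and `count_transitions` are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from itertools import groupby


class Stage(Enum):
    EXPLORATION = "exploration"
    CONFLICT = "conflict"
    INTEGRATION = "integration"


@dataclass(frozen=True)
class Event:
    """One annotated moment in a session (hypothetical schema)."""
    participant: str
    time_s: float                    # seconds since task start
    stage: Stage                     # stage label assigned by an annotator
    verbal: str = ""                 # what the user said, if anything
    nonverbal: tuple[str, ...] = ()  # hypothetical cue labels, e.g. "raised_tone"


def stage_segments(events):
    """Collapse ONE participant's events into (stage, start, end) runs."""
    ordered = sorted(events, key=lambda e: e.time_s)
    segments = []
    for stage, run in groupby(ordered, key=lambda e: e.stage):
        run = list(run)
        segments.append((stage, run[0].time_s, run[-1].time_s))
    return segments


def count_transitions(events):
    """Count stage-to-stage transitions, e.g. (CONFLICT, INTEGRATION)."""
    segs = stage_segments(events)
    return Counter((a[0], b[0]) for a, b in zip(segs, segs[1:]))


# Invented example session for a single participant.
session = [
    Event("P1", 0.0, Stage.EXPLORATION, "what can you do?"),
    Event("P1", 12.5, Stage.CONFLICT, "no, I said two cups", ("raised_tone",)),
    Event("P1", 20.0, Stage.CONFLICT, "two cups of flour", ("moved_closer",)),
    Event("P1", 31.0, Stage.INTEGRATION, "what's the next step?"),
]
print(stage_segments(session))
print(count_transitions(session))
```

Grouping consecutive events with the same label into runs mirrors how a timeline plot shows contiguous stretches of a stage; pooling `count_transitions` across participants would show how often conflict resolves into integration.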

Practical Implications

The study argues that VA design should accommodate multimodal user input to support more intuitive, human-like interaction. It suggests that LLM-based VAs (LLM-VAs) should adapt dynamically to user behavior patterns, potentially predicting and responding to user states in real time to make interactions smoother.
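As a rough illustration of what "reacting to user states" could mean, the sketch below flags a likely conflict when nonverbal cues like those described in the conflict stage accumulate over recent turns, and then switches to a more cautious response style. This is a hypothetical rule-based policy, not a method from the paper: the cue labels, the `threshold` value, and the strategy names are assumptions, and a real system would also need detectors that produce these cues from audio or video.

```python
from dataclasses import dataclass

# Hypothetical cue labels, loosely based on conflict-stage behaviors
# (raised tone, moving closer, rephrasing/repeating a query).
CONFLICT_CUES = {"raised_tone", "moved_closer", "repeated_query"}


@dataclass
class Turn:
    text: str
    cues: frozenset[str] = frozenset()  # cues detected during this user turn


def looks_like_conflict(recent, threshold=2):
    """Flag a likely conflict when enough conflict cues appear in recent turns."""
    hits = sum(len(t.cues & CONFLICT_CUES) for t in recent)
    return hits >= threshold


def choose_strategy(recent):
    """Pick a response style (hypothetical policy, not from the paper)."""
    if looks_like_conflict(recent):
        return "confirm_understanding"  # e.g. restate what was heard before answering
    return "answer_directly"


calm = [Turn("what's next?")]
tense = [
    Turn("two cups", frozenset({"raised_tone"})),
    Turn("TWO cups of flour", frozenset({"raised_tone", "moved_closer"})),
]
print(choose_strategy(calm))   # answer_directly
print(choose_strategy(tense))  # confirm_understanding
```

A fixed threshold keeps the example simple; an adaptive VA of the kind the study envisions would more plausibly learn such a decision from data and weigh cues differently.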

Conclusion

The framework offers insights for designing LLM-VAs that interact more effectively with people. By integrating both verbal and nonverbal signals into VA responses, the research points toward more adaptive, context-aware systems that can anticipate and manage user needs. As LLM-VAs see wider use, these insights can help guide designs toward more natural and efficient human-machine communication.