SE3Set: Harnessing equivariant hypergraph neural networks for molecular representation learning (2405.16511v1)

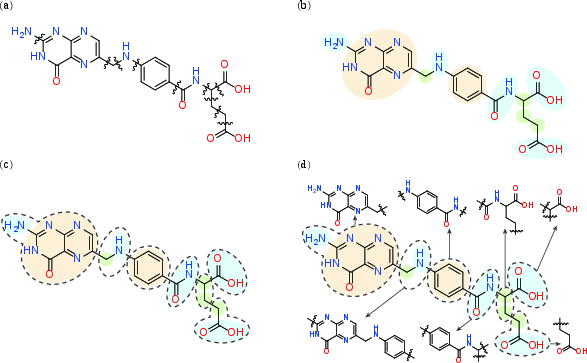

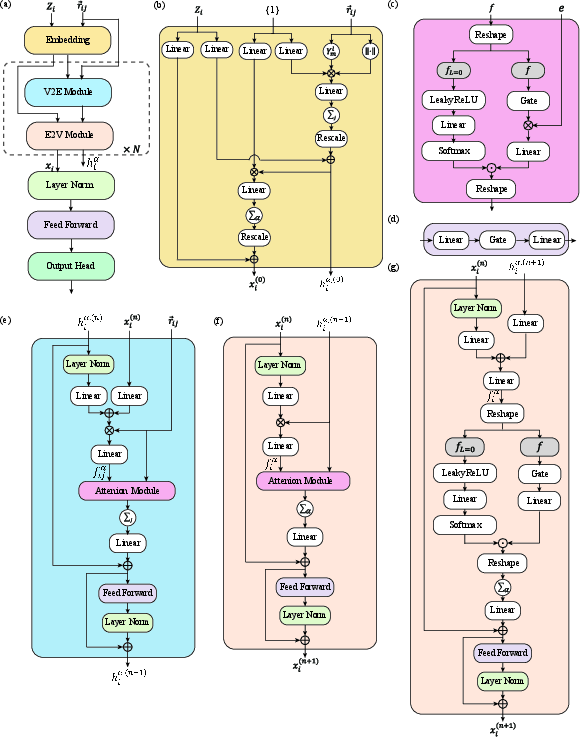

Abstract: In this paper, we develop SE3Set, an SE(3) equivariant hypergraph neural network architecture tailored for advanced molecular representation learning. Hypergraphs are not merely an extension of traditional graphs; they are pivotal for modeling high-order relationships, a capability that conventional equivariant graph-based methods lack due to their inherent limitations in representing intricate many-body interactions. To achieve this, we first construct hypergraphs by proposing a new fragmentation method that considers both the chemical and the three-dimensional spatial information of the molecular system. We then design SE3Set, which incorporates equivariance into the hypergraph neural network. This ensures that the learned molecular representations are invariant to spatial transformations, thereby providing the robustness essential for accurate prediction of molecular properties. SE3Set has shown performance on par with state-of-the-art (SOTA) models for small molecule datasets like QM9 and MD17. It excels on the MD22 dataset, achieving a notable improvement of approximately 20% in accuracy across all molecules, which highlights the prevalence of complex many-body interactions in larger molecules. This exceptional performance of SE3Set across diverse molecular structures underscores its transformative potential in computational chemistry, offering a route to more accurate and physically nuanced modeling.
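To make the terms in the abstract concrete, the following is a minimal NumPy sketch of the general idea, not the SE3Set architecture or its fragmentation method. It assumes a toy five-atom system with two hand-picked hyperedges (hypothetical fragments), and the helper names `random_rotation`, `hyperedge_features`, and `hyperedge_centroids` are illustrative only. It checks that a scalar hyperedge feature built from intra-fragment distances is unchanged by a rotation plus translation (invariance), while a vector quantity such as the fragment centroid transforms along with the coordinates (equivariance).

```python
import numpy as np

def random_rotation(rng):
    """Return a random proper rotation matrix (det = +1) from a QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))      # normalize column signs
    if np.linalg.det(q) < 0:         # flip one axis if we got a reflection
        q[:, 0] = -q[:, 0]
    return q

def hyperedge_features(positions, hyperedges):
    """Mean pairwise distance among the atoms of each hyperedge (a scalar per hyperedge)."""
    feats = []
    for atoms in hyperedges:
        pts = positions[list(atoms)]
        diffs = pts[:, None, :] - pts[None, :, :]
        dists = np.linalg.norm(diffs, axis=-1)
        n = len(atoms)
        feats.append(dists.sum() / (n * (n - 1)))  # average over ordered pairs i != j
    return np.array(feats)

def hyperedge_centroids(positions, hyperedges):
    """Centroid of each hyperedge (a 3D vector per hyperedge)."""
    return np.array([positions[list(atoms)].mean(axis=0) for atoms in hyperedges])

rng = np.random.default_rng(0)
positions = rng.normal(size=(5, 3))   # toy coordinates, not a real molecule
hyperedges = [(0, 1, 2), (2, 3, 4)]   # hypothetical fragments sharing atom 2

R = random_rotation(rng)
t = rng.normal(size=3)
moved = positions @ R.T + t           # apply an SE(3) transformation

# Invariance: scalar features are identical before and after the transformation.
invariant_ok = np.allclose(
    hyperedge_features(positions, hyperedges),
    hyperedge_features(moved, hyperedges),
)
# Equivariance: centroids of the moved system equal the moved centroids.
equivariant_ok = np.allclose(
    hyperedge_centroids(moved, hyperedges),
    hyperedge_centroids(positions, hyperedges) @ R.T + t,
)
print(invariant_ok, equivariant_ok)
```

A hyperedge here is simply a tuple of atom indices, so one atom can belong to several fragments, which is what lets a hypergraph group more than two atoms into a single many-body relation.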
