Exploring In-Context Learning Through the Lens of Information Retrieval

Introduction

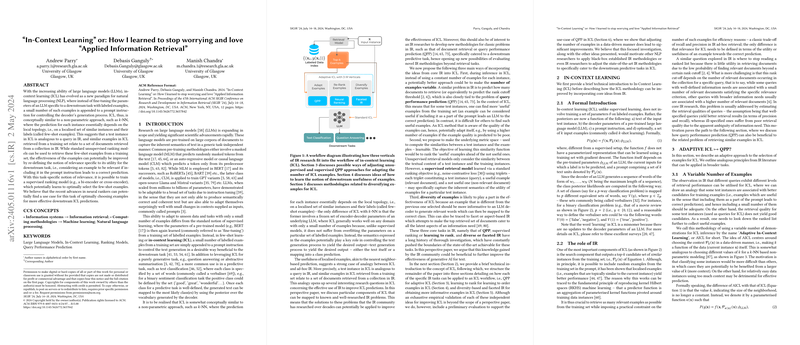

In-context learning (ICL) is evolving as a significant approach in NLP by utilizing LLMs like GPT-3. Unlike traditional machine learning methods that require extensive training on a large dataset, ICL leverages a small number of examples appended into a prompt to guide the LLM in generating useful responses for specific tasks. This methodology is fascinating because it mirrors non-parametric models (like k-NN) where predictions depend on a few, locally similar instances. This paper proposes viewing these few-shot examples in ICL from an information retrieval (IR) perspective, suggesting a potential crossover between IR techniques and ICL.

Understanding In-Context Learning (ICL)

ICL refrains from the traditional model retraining and instead, adjusts the outputs based on a handful of provided examples, making it robust against overfitting and adaptable across various domains. The process involves:

- Employing the fixed parameters of a pre-trained LLM.

- Utilizing the text of the input instance.

- Adding a few labeled examples as part of a prompt (termed few-shot learning).

This method is akin to querying in an IR system, where the few-shot examples are like documents retrieved to answer a query, and the input instance is similar to a search query itself.

Adapting IR Strategies to Enhance ICL

Several IR techniques could enhance the effectiveness of ICL by adapting methods usually applied to search engines:

- Query Performance Prediction (QPP) for Adaptive ICL:

- Employing QPP techniques from IR could predict the utility of few-shot examples more accurately. This would involve dynamically choosing the number of training examples based on predictive performance estimators.

- Learning to Rank for Better Example Selection:

- Just as search engines learn to rank websites, ICL can be optimized by learning to rank few-shot examples based on their relevance or utility, leading to more effective predictions.

- Diversity Techniques for More Informative Examples:

- Just as IR systems aim to provide a diverse set of search results, applying diversity-based retrieval could ensure that the few-shot examples used in ICL cover a broader range of responses, providing the LLM with a richer context for generation.

Implications and Future Directions

Practical Implications:

- Applying IR techniques to select and rank few-shot examples could make ICL much more efficient and effective, reducing computational costs by minimizing the number of necessary examples while maximizing performance.

Theoretical Implications:

- This approach challenges the traditional boundaries between IR and NLP, paving the path for cross-disciplinary methodologies that leverage strengths from both fields.

Speculation on Future Developments:

- As ICL and IR techniques increasingly intersect, we might see the emergence of new, hybrid models that are inherently more robust and versatile across different types of data and tasks.

Conclusion

The paper suggests a compelling new viewpoint on enhancing in-context learning by incorporating proven information retrieval techniques. This intersection not only promises improved performance but also a deeper understanding of how models can be made more adaptable and efficient. Integrating these fields could lead to significant advancements in how we approach machine learning and natural language processing tasks.