The Broader Spectrum of In-Context Learning: A Perspective

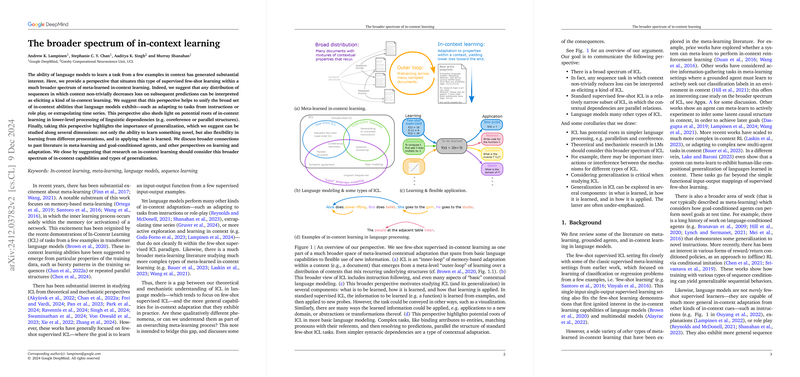

The paper, "The Broader Spectrum of In-Context Learning," presents an expanded view of in-context learning (ICL) in large language models (LLMs). It argues that ICL is not limited to few-shot learning, the setting in which it is most often studied, but is part of a broader spectrum of meta-learned contextual adaptation. The authors propose that any sequence learning task in which context non-trivially reduces prediction loss can be considered a form of ICL. With this framework, the paper seeks to unify various abilities of LLMs and to link ICL to fundamental linguistic processes, while also highlighting the importance of generalization along multiple dimensions.
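The loss-reduction criterion can be made concrete with a toy predictor. The sketch below is an illustration only and is not taken from the paper: it assumes a Laplace-smoothed unigram "cache" model as a stand-in for a sequence model, and the names `nll_given_context` and `context_gain` are hypothetical. It measures how much the negative log-likelihood of each token drops when the preceding tokens are available as context.

```python
import math
from collections import Counter

def nll_given_context(context, target, vocab, alpha=1.0):
    """Negative log-likelihood of `target` under a Laplace-smoothed
    unigram model fit only on `context` (a toy stand-in for a sequence model)."""
    counts = Counter(context)
    total = len(context) + alpha * len(vocab)
    prob = (counts[target] + alpha) / total
    return -math.log(prob)

def context_gain(sequence, vocab, alpha=1.0):
    """Average per-token loss reduction from conditioning on preceding tokens.
    Positive values mean the context helps prediction; values at or below zero mean it does not."""
    gains = []
    for t, target in enumerate(sequence):
        loss_without = nll_given_context([], target, vocab, alpha)
        loss_with = nll_given_context(sequence[:t], target, vocab, alpha)
        gains.append(loss_without - loss_with)
    return sum(gains) / len(gains)

vocab = ["a", "b", "c", "d"]
repetitive = ["a"] * 8          # context is informative about what comes next
non_repeating = list("abcd")    # context is misleading for this toy model

print(context_gain(repetitive, vocab))     # positive
print(context_gain(non_repeating, vocab))  # negative
```

Under this toy assumption, a task counts as in-context learning in the broad sense only when the gain is meaningfully positive, as it is for the repetitive sequence.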

In-Context Learning and Generalization

The paper details how ICL, as displayed most prominently by transformer-based LLMs, is not confined to the traditional few-shot learning setup. Instead, ICL encompasses a broader range of abilities, including task adaptation via instructions, role-playing, and time-series extrapolation. By framing ICL as a form of meta-learned adaptation, the paper builds on past work in meta-learning and goal-conditioned agents. LLMs demonstrate a variety of ICL skills that go beyond supervised few-shot learning.

The authors emphasize that successful ICL involves not only learning new information from context but also generalizing in how that information is acquired and subsequently applied. This multifaceted view of generalization in ICL includes, but is not limited to, typical few-shot supervised tasks in which LLMs learn input-output functions from a limited number of examples.
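As a rough illustration of the few-shot case, the sketch below treats the context as a handful of (input, output) pairs and predicts the output for a new query. It is a simplified analogy rather than a description of what an LLM computes internally: the explicit least-squares fit and the function names `fit_from_context` and `predict_in_context` are assumptions made for this example. The point is that the predictor itself is fixed, and only the examples in the context determine which function it applies.

```python
def fit_from_context(examples):
    """Least-squares fit of y = a * x + b from (x, y) pairs given in context."""
    n = len(examples)
    mean_x = sum(x for x, _ in examples) / n
    mean_y = sum(y for _, y in examples) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in examples)
    if var_x == 0:
        # All inputs identical: fall back to predicting the mean output.
        return 0.0, mean_y
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in examples)
    slope = cov_xy / var_x
    return slope, mean_y - slope * mean_x

def predict_in_context(examples, query):
    """Predict the output for `query` using only the in-context examples."""
    slope, intercept = fit_from_context(examples)
    return slope * query + intercept

# Same query, different contexts: the implied function changes with the examples.
print(predict_in_context([(0, 1), (1, 3), (2, 5)], 4))   # examples follow y = 2x + 1
print(predict_in_context([(0, 0), (1, -1)], 4))          # examples follow y = -x
```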

Roots and Mechanisms of In-Context Learning

The authors explore the potential origins of ICL capabilities, rooting them in the basic processing of linguistic dependencies. ICL abilities are hypothesized to emerge from properties of the training data, such as bursty sequences and recurring parallel structures. These characteristics might induce adaptive behaviors ranging from simple contextual adaptations, such as coreference resolution, to more complex ones, such as task adaptation from instructions.

By extending the understanding of ICL to encompass these fundamental language processing skills, the paper suggests an intrinsic connection between higher-order language modeling tasks and simpler linguistic structures. On this view, behaviors like coreference resolution or recognizing syntactic patterns are more primitive instances of ICL that lay the groundwork for more complex forms of adaptation, such as analogy or meta-learning.

Implications and Future Directions

This perspective on ICL has important implications for future research in artificial intelligence. By broadening the definition of ICL, the paper encourages exploration beyond standard few-shot paradigms and invites investigation into how different forms of in-context adaptation interact. The potential interference among different forms of ICL and the importance of generalization are salient points for further theoretical and mechanistic study. Such investigations could reveal how model architectures, training processes, and data structure influence in-context learning capabilities.

Moreover, the paper underscores that understanding the nuances of ICL (how models learn, generalize, and apply knowledge in flexible ways) could lead to better design of adaptive systems and better alignment of model behaviors with broader learning objectives.

Conclusion

The paper "The Broader Spectrum of In-Context Learning" situates standard few-shot learning within a wider array of in-context capabilities. By highlighting how these capabilities interact and generalize, the authors argue for an enriched research agenda that covers the full spectrum of in-context learning exhibited by advanced LLMs. Adopting this broader perspective could inform both the theoretical understanding and the practical development of artificial intelligence systems.