In-Context Learning: A Comprehensive Survey

The paper "A Survey on In-context Learning" examines in-context learning (ICL), a paradigm in which large language models (LLMs) make predictions from contexts augmented with a few examples. The authors give an organized overview of current progress and open challenges in ICL, emphasizing its growing role in natural language processing (NLP).

Overview and Definitions

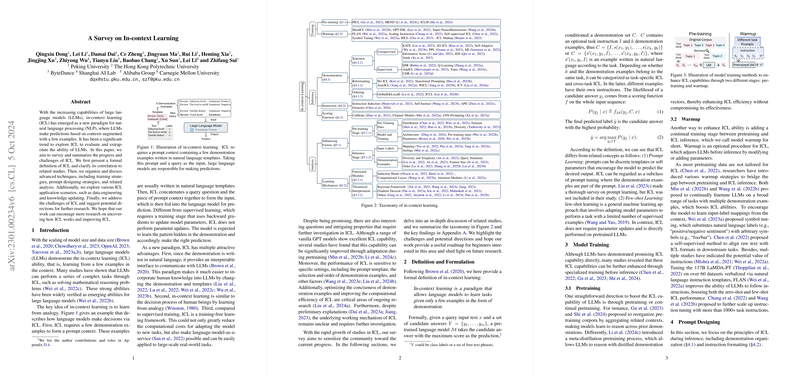

ICL lets LLMs generalize from a few examples supplied in the prompt without updating model parameters, in contrast to traditional machine learning, which relies on extensive supervised training. The paper characterizes ICL as learning by analogy: a pretrained model infers the pattern shared by the demonstrations and applies it to a new query. It also clarifies how ICL differs from related paradigms such as prompt learning and few-shot learning.
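As a rough illustration of what "a few examples in the context" means in practice, the sketch below assembles a prompt from labeled demonstrations and a query. The function name `build_icl_prompt` and the "Input:/Label:" template are assumptions made for this example, not a format prescribed by the survey.

```python
from typing import List, Tuple


def build_icl_prompt(
    demonstrations: List[Tuple[str, str]],
    query: str,
    instruction: str = "",
) -> str:
    """Concatenate (input, label) demonstrations and a query into one prompt.

    The template is hypothetical; any consistent format could be used.
    """
    parts = []
    if instruction:
        parts.append(instruction)
    for text, label in demonstrations:
        parts.append(f"Input: {text}\nLabel: {label}")
    # The query reuses the same template, leaving the label for the model to fill in.
    parts.append(f"Input: {query}\nLabel:")
    return "\n\n".join(parts)


demos = [
    ("The movie was wonderful.", "positive"),
    ("I want my money back.", "negative"),
]
prompt = build_icl_prompt(demos, "A delightful surprise.")
print(prompt)
```

The resulting string would be sent to a frozen LLM as-is; no gradient step is involved, which is the property that separates ICL from finetuning.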

Model Warmup Techniques

The paper reviews methods for strengthening LLMs' ICL capabilities through model warmup, an optional training stage between pretraining and ICL inference that can be supervised or self-supervised. Supervised in-context training, such as MetaICL and instruction tuning, improves adaptability to new tasks by finetuning the model on data formatted as demonstrations or task instructions. Self-supervised methods instead construct training signals from raw corpora so that the training objective matches downstream ICL tasks, which shows promise for improving ICL without extensive labeled data.

Demonstration Designing Strategies

A critical component of ICL performance is the selection and formatting of demonstration examples. The paper discusses several strategies, including selecting semantically similar examples and optimizing the order of presentation. It also highlights the role of instructions and intermediate reasoning steps in improving LLMs' performance on complex reasoning tasks.
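One way to picture similarity-based selection is the minimal sketch below. It ranks a pool of candidate demonstrations by cosine similarity to the query and keeps the top k. Bag-of-words vectors stand in for the sentence embeddings a real retriever would use, and placing the most similar example last (closest to the query) is just one ordering heuristic; both choices are assumptions made for this example.

```python
import math
from collections import Counter
from typing import List, Tuple


def bow(text: str) -> Counter:
    """Bag-of-words vector; a stand-in for a learned sentence embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def select_demonstrations(
    pool: List[Tuple[str, str]], query: str, k: int = 2
) -> List[Tuple[str, str]]:
    """Return the k pool examples most similar to the query.

    Output is ordered least-to-most similar, so the closest example
    ends up adjacent to the query in the final prompt.
    """
    q = bow(query)
    ranked = sorted(pool, key=lambda ex: cosine(bow(ex[0]), q), reverse=True)
    return ranked[:k][::-1]


pool = [
    ("the plot was dull and slow", "negative"),
    ("the acting was superb", "positive"),
    ("stock prices fell sharply today", "negative"),
    ("the plot was clever and fast", "positive"),
]
selected = select_demonstrations(pool, "the plot was slow", k=2)
print(selected)
```

Swapping `bow` for a real embedding model would leave the selection logic unchanged, which is why the vectorizer is kept as a separate function.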

Scoring Functions

The paper compares scoring functions on efficiency, task coverage, and stability. Direct scoring uses the conditional probability of each candidate answer given the input, while PPL scoring uses the perplexity of the whole input sequence. Channel models invert the usual direction and compute the probability of the input given the label, which improves performance in certain scenarios. The choice of scoring function determines how the LLM's output probabilities are turned into a final prediction.
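The three scoring functions named above can be sketched against an abstract language-model interface. Everything here is an assumption made for illustration: `LogProbFn` is a hypothetical callable returning one log-probability per token of a target string given a context, and `uniform_lp` is a toy stand-in that assigns every token the same probability so the example runs without a real model.

```python
import math
from typing import Callable, List

# Hypothetical interface: per-token log-probabilities of `target` given `context`.
LogProbFn = Callable[[str, str], List[float]]


def direct_score(lp: LogProbFn, context: str, x: str, y: str) -> float:
    """Direct: log P(y | context, x). Higher is better."""
    return sum(lp(y, f"{context} {x}"))


def channel_score(lp: LogProbFn, context: str, x: str, y: str) -> float:
    """Channel: log P(x | context, y). Higher is better.

    The caller is assumed to format `context` in label-then-input order.
    """
    return sum(lp(x, f"{context} {y}"))


def ppl_score(lp: LogProbFn, context: str, x: str, y: str) -> float:
    """PPL: perplexity of the whole sequence. Lower is better."""
    token_lps = lp(f"{context} {x} {y}", "")
    if not token_lps:
        raise ValueError("sequence has no tokens")
    return math.exp(-sum(token_lps) / len(token_lps))


def uniform_lp(target: str, context: str, vocab_size: int = 10) -> List[float]:
    """Toy model: every whitespace token has probability 1 / vocab_size."""
    return [math.log(1.0 / vocab_size) for _ in target.split()]


x, y = "great film", "positive"
print(direct_score(uniform_lp, "", x, y))
print(channel_score(uniform_lp, "", x, y))
print(ppl_score(uniform_lp, "", x, y))
```

With a real model, a prediction would be made by evaluating the chosen score for every candidate label and taking the best one. Direct and Channel each score only one side of the pair, whereas PPL scores the full sequence, which is one reason their cost and coverage differ.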

Analytical Perspectives

The paper groups the factors that influence ICL performance by stage: pretraining and inference. Reported findings point to the importance of model size, pretraining data distribution, and demonstration characteristics. Several theoretical analyses, such as those connecting ICL to gradient descent, offer insight into its underlying mechanisms.

Evaluation Frameworks and Applications

Challenging benchmarks and tasks are essential for evaluating ICL's capabilities. The paper discusses both traditional and new, specialized datasets that probe the nuanced abilities of ICL methods. Applications of ICL span various domains such as data engineering, retrieval augmentation, and knowledge updating, showcasing its versatility across traditional and novel tasks.

Challenges and Future Directions

While ICL presents numerous advantages, it also faces challenges in areas such as efficiency, scalability, and robustness. Future research directions include developing new pretraining strategies, distilling ICL abilities to smaller models, and addressing robustness issues. These explorations aim to enhance the practicality and effectiveness of ICL in real-world applications.

In summary, the survey highlights ICL’s potential for applying LLMs to new tasks without task-specific parameter updates. Through its examination of strategies, challenges, and applications, the paper serves as a useful resource for researchers working to advance this paradigm.