LLMs are Parallel Multilingual Learners

Introduction

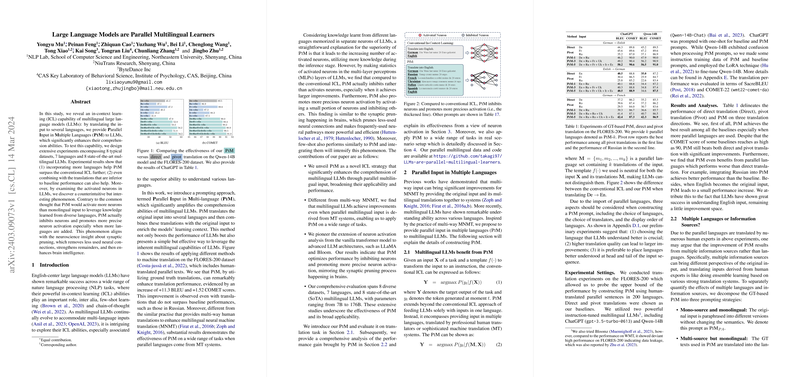

This paper explores the in-context learning (ICL) capabilities of LLMs when the same information is provided in multiple languages simultaneously. It introduces a prompting approach, Parallel Input in Multiple Languages (PiM), which augments the standard input with translations of that input into several languages and thereby improves the comprehension abilities of multilingual LLMs. Through experiments across a diverse set of datasets, languages, and state-of-the-art multilingual LLMs, the paper demonstrates that PiM improves model performance on a variety of tasks, including machine translation, natural language inference, reading comprehension, text simplification, and abstractive summarization.

Parallel Input in Multiple Languages

The paper presents PiM as a method that exploits the inherent ability of multilingual LLMs to process inputs in multiple languages. PiM translates the original input into several languages and presents these translations to the LLM alongside the original. The hypothesis is that the parallel translations enrich the context available to the model and so improve its performance. This hypothesis is supported by significant improvements observed across eight datasets, seven languages, and eight leading multilingual LLMs. Notably, PiM remains effective even when the added translations are themselves of lower quality than the baseline output, which suggests that the gain does not depend on high-quality translations alone.
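The prompt construction described above can be sketched as follows. This is a minimal illustration, not the paper's actual template: the function name `build_pim_prompt`, the `Language: text` line layout, the instruction wording, and the lookup-table translator `toy_translate` are all assumptions made for the example. In practice `translate` would wrap a real machine translation system.

```python
from typing import Callable, Dict, List


def build_pim_prompt(
    source_text: str,
    source_lang: str,
    pivot_langs: List[str],
    translate: Callable[[str, str], str],
    instruction: str,
) -> str:
    """Assemble a prompt that shows the same input in several languages.

    The layout (one "Language: text" line per version, instruction last)
    is an assumed format chosen for illustration.
    """
    lines = [f"{source_lang}: {source_text}"]
    for lang in pivot_langs:
        # Each parallel version is a translation of the ORIGINAL input.
        lines.append(f"{lang}: {translate(source_text, lang)}")
    lines.append(instruction)
    return "\n".join(lines)


# Hypothetical stand-in for a real MT system: a fixed lookup table.
_TOY_TRANSLATIONS: Dict[str, str] = {
    "French": "Le chat dort.",
    "Spanish": "El gato duerme.",
}


def toy_translate(text: str, target_lang: str) -> str:
    """Return a canned translation; ignores `text` (toy example only)."""
    return _TOY_TRANSLATIONS[target_lang]


prompt = build_pim_prompt(
    source_text="Die Katze schläft.",
    source_lang="German",
    pivot_langs=["French", "Spanish"],
    translate=toy_translate,
    instruction="Translate the German sentence into English.",
)
print(prompt)
```

Passing the translator in as a callable keeps the sketch independent of any particular translation service, and an empty `pivot_langs` list reduces the prompt to the conventional single-language input, which makes the two settings easy to compare.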

Insights and Theoretical Implications

An analysis of neuron activations in LLMs yields a counterintuitive result: contrary to the expectation that parallel multilingual input would activate more neurons than monolingual input, PiM does not increase the number of activated neurons. Instead, it inhibits neurons while promoting more precise neuron activation, and the effect grows as more languages are added to the input. This observation points to a possible optimization in how LLMs access and use multilingual knowledge. The authors relate it to synaptic pruning in neuroscience, in which less-used neural connections are removed and the remaining ones are strengthened. These findings suggest that PiM's effectiveness may stem from a more efficient use of the model's network, with quality of neuron activation mattering more than quantity.
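One simple way to quantify "how many neurons are activated" is the fraction of recorded activation values that exceed a threshold. The sketch below assumes that definition (a value counts as activated when it is strictly greater than `threshold`, zero by default); the paper's exact criterion is not stated in this summary, and the function name `activated_proportion` and the toy numbers are illustrative only.

```python
from typing import Sequence


def activated_proportion(
    activations: Sequence[Sequence[float]],
    threshold: float = 0.0,
) -> float:
    """Fraction of (token, neuron) entries whose value exceeds `threshold`.

    `activations` holds one row per token and one column per neuron,
    e.g. the post-activation outputs of a single feed-forward layer.
    """
    total = sum(len(row) for row in activations)
    if total == 0:
        raise ValueError("activations must contain at least one value")
    active = sum(1 for row in activations for value in row if value > threshold)
    return active / total


# Arbitrary toy values (2 tokens x 4 neurons), not measurements from any model.
toy = [
    [0.5, 0.0, -1.2, 2.0],
    [0.0, 0.3, 0.0, -0.1],
]
print(activated_proportion(toy))  # 3 of 8 values are positive -> 0.375
```

To compare settings, the same function would be applied to activations recorded for a monolingual prompt and for a PiM prompt on the same example; a lower proportion under PiM would correspond to the inhibition effect described above.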

Practical Applications and Future Directions

The paper shows that PiM applies broadly across NLP tasks and works with multiple LLMs ranging from 7B to 176B parameters. Its success in improving translation, even when the parallel inputs are machine-translated, makes it practical for real-world scenarios where human translations are unavailable. The paper also points to a direction for future research: understanding neuron activation patterns in LLMs and how they relate to learning processes in the human brain. Given the effectiveness of PiM, further work on prompting strategies tailored to specific tasks and languages could yield additional gains in model performance and efficiency.

Conclusions

This research demonstrates a simple yet effective strategy for improving the performance of multilingual LLMs across a range of tasks. PiM provides a practical method for exploiting the multilingual capabilities of LLMs, and its analysis offers new insight into how these models use their networks for multilingual understanding. The findings on neuron activation patterns hint at possible analogies between artificial and biological learning processes and suggest an avenue for interdisciplinary research bridging AI and neuroscience.