Towards a Multilingual LLM for the Medical Domain

Introduction

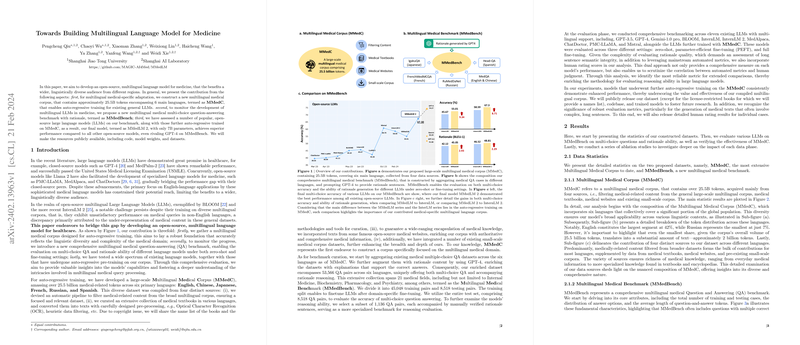

The development of large language models (LLMs) has significantly advanced natural language processing (NLP) applications in the medical domain. Despite notable successes, most LLMs focus predominantly on English, which has hindered their application in linguistically diverse regions. The paper presents MMedC, a large-scale multilingual medical corpus, and MMedBench, a benchmark for evaluating LLMs' medical question-answering capabilities across six primary languages. It also introduces MMedLM 2, a model that is further trained on MMedC and that, in the paper's evaluation, rivals GPT-4 in multilingual medical contexts.

Dataset Construction and Metrics

MMedC: A Multilingual Medical Corpus

MMedC comprises 25.5 billion tokens spanning six languages. It is built from several kinds of sources:

- Medical content filtered from a large-scale multilingual corpus

- Texts from medical textbooks and reputable medical websites

- Existing medical corpora

Together, these sources are intended to support training a model that works across language barriers within the medical domain.
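The first source in the list above, filtering medical content out of a general corpus, can be illustrated with a small sketch. This summary does not describe the paper's actual filtering rule, so the keyword list, the density measure, and the `threshold` value below are all assumptions chosen for illustration.

```python
import re

# Hypothetical keyword list (an assumption for this sketch); a real pipeline
# would need a much larger vocabulary for each of the six languages.
MEDICAL_KEYWORDS = frozenset({"diagnosis", "patient", "symptom", "therapy", "clinical"})


def medical_keyword_density(text, keywords=MEDICAL_KEYWORDS):
    """Return the fraction of word tokens in `text` that are medical keywords."""
    tokens = re.findall(r"\w+", text.lower())
    if not tokens:
        return 0.0
    hits = sum(1 for token in tokens if token in keywords)
    return hits / len(tokens)


def filter_medical(documents, threshold=0.05):
    """Keep documents whose keyword density reaches the (assumed) threshold."""
    return [doc for doc in documents if medical_keyword_density(doc) >= threshold]


docs = [
    "The patient reported a new symptom after therapy.",
    "The stock market closed higher today.",
]
medical_docs = filter_medical(docs)  # keeps only the first document
```

A density threshold rather than a raw keyword count keeps long general-purpose documents with a few incidental medical terms from passing the filter, although the right cutoff would have to be tuned per language.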

MMedBench: Benchmarking Multilingual Medical Understanding

MMedBench fills the need for a comprehensive evaluation tool by aggregating medical question-answering datasets across languages and supplementing each question with a rationale for the correct answer, so that a model's reasoning can be assessed alongside its answers. Standard QA pairs are augmented with detailed rationales generated by GPT-4, and the rationales are then verified by human reviewers to ensure quality and correctness.
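One way to picture the augmentation step is as a record that starts with a question, options, and answer, then gains a rationale and a verification flag. The sketch below is illustrative only: the `RationaleQA` fields, the prompt wording, and the helper names are hypothetical and are not taken from the paper or its codebase.

```python
from dataclasses import dataclass


@dataclass
class RationaleQA:
    """A multiple-choice QA item with an optional rationale (hypothetical schema)."""
    question: str
    options: dict[str, str]       # option label -> option text
    answer: str                   # label of the correct option
    language: str
    rationale: str = ""           # filled in by the rationale generator
    human_verified: bool = False  # set after a reviewer approves the rationale


def build_rationale_prompt(item: RationaleQA) -> str:
    """Format an item as a prompt asking for an explanation of the correct answer."""
    option_lines = "\n".join(
        f"{label}. {text}" for label, text in sorted(item.options.items())
    )
    return (
        f"Question: {item.question}\n"
        f"{option_lines}\n"
        f"Correct answer: {item.answer}\n"
        "Explain step by step why this answer is correct."
    )


def verified_only(items):
    """Keep items that have a rationale and passed human verification."""
    return [item for item in items if item.rationale and item.human_verified]
```

Keeping the verification flag on the record itself makes it easy to exclude unreviewed rationales from the benchmark without deleting them.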

Model Evaluation and Insights

Evaluation on MMedBench yielded several findings. As expected, models trained on MMedC outperformed their counterparts across various metrics in the zero-shot, parameter-efficient fine-tuning (PEFT), and full fine-tuning settings. Notably, MMedLM 2 proved a strong contender in multilingual medical question-answering and rationale generation, with performance close to that of GPT-4.
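For the multiple-choice part of such an evaluation, a natural summary is accuracy per language plus an unweighted average across languages. The sketch below assumes predictions are available as `(language, predicted, gold)` tuples; that record format and the function name are assumptions for illustration, not the paper's evaluation code.

```python
from collections import defaultdict


def accuracy_by_language(records):
    """Compute per-language accuracy and the unweighted mean across languages.

    `records` is an iterable of (language, predicted_label, gold_label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for language, predicted, gold in records:
        total[language] += 1
        correct[language] += int(predicted == gold)
    per_language = {lang: correct[lang] / total[lang] for lang in total}
    macro_average = (
        sum(per_language.values()) / len(per_language) if per_language else 0.0
    )
    return per_language, macro_average


records = [("en", "A", "A"), ("en", "B", "C"), ("zh", "D", "D")]
per_language, macro_average = accuracy_by_language(records)
# per_language == {"en": 0.5, "zh": 1.0}; macro_average == 0.75
```

Averaging over languages rather than over questions prevents a language with many test items from dominating the overall score.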

Theoretical and Practical Implications

Enhancing Multilingual Medical AI Research

By creating MMedC and MMedBench, the paper supports research on general medical artificial intelligence (GMAI) and retrieval-augmented generation, and facilitates the development of LLMs that are robust across languages and able to integrate comprehensive medical knowledge.

Broader Clinical and Educational Outreach

The practical implications are substantial: such models could help reduce language barriers in healthcare, be tailored to recognize cultural nuances, and broaden access to medical education worldwide. The work opens avenues for deploying LLMs in diverse medical settings and supports more equitable access to quality healthcare information.

Future Directions and Challenges

Despite its achievements, the paper acknowledges limitations, including the limited language coverage of the corpus and the computational constraints on the final model. Future work will aim to extend language coverage, scale up the model architecture, and refine the model to mitigate hallucination. The continued development of MMedC and MMedBench is meant to support LLMs that are both linguistically inclusive and well grounded in medical knowledge.

Data and Resources Availability

To promote transparency and further research, the authors have made the datasets, codebase, and trained models publicly accessible. The release is intended to encourage collaboration and give researchers the resources needed to advance multilingual medical natural language processing.