Overview of "PMC-LLaMA: Towards Building Open-source LLMs for Medicine"

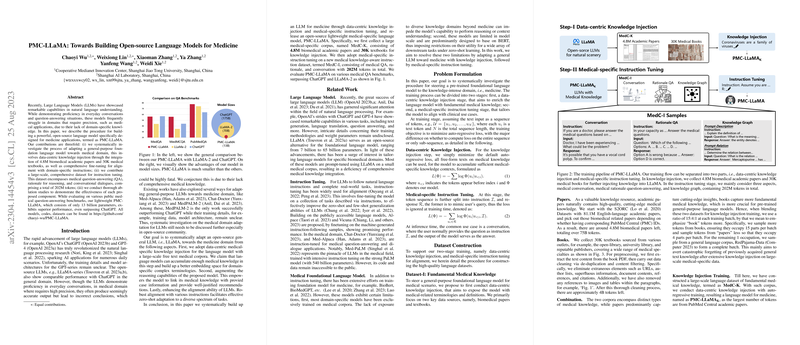

The paper "PMC-LLaMA: Towards Building Open-source LLMs for Medicine" by Chaoyi Wu et al. presents a systematic approach to adapting open-source LLMs for medical applications. The authors introduce PMC-LLaMA, a specialized LLM for the medical domain, characterized by its data-centric approach to knowledge injection and comprehensive instruction tuning.

Key Contributions

The research makes three significant contributions:

- Domain-specific Knowledge Injection: The authors adapted a general-purpose LLM by incorporating domain-specific knowledge through an extensive corpus of 4.8 million biomedical academic papers and 30,000 medical textbooks. This process enriches the model's understanding of medical terminology and concepts, thereby improving its precision in medical contexts.

- Comprehensive Instruction Tuning Dataset: A large-scale dataset, MedC-I, was created to facilitate instruction tuning. Encompassing 202 million tokens, the dataset includes medical question-answering, rationale for reasoning, and conversational dialogues. This contributes to the model's ability to adapt across various medical tasks without task-specific training.

- Empirical Evaluation and Performance: Through rigorous ablation studies, the effectiveness of each proposed component was validated. Remarkably, PMC-LLaMA, with only 13 billion parameters, surpassed ChatGPT in performance across several public medical QA benchmarks.
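To make the three kinds of instruction data mentioned above more concrete, the following is a minimal sketch of how a multiple-choice question, a question with a rationale, and a consultation turn could be normalized into one record type. The `InstructionRecord` schema, the helper names, the instruction wording, and the prompt template are all illustrative assumptions; they are not the actual MedC-I format.

```python
from dataclasses import dataclass


@dataclass
class InstructionRecord:
    """One supervised example. Hypothetical schema, not the MedC-I one."""
    instruction: str
    input: str
    output: str


def from_multiple_choice(question, options, answer_key, rationale=None):
    """Build a record from a multiple-choice item, optionally with a rationale."""
    option_text = "\n".join(f"{key}. {text}" for key, text in sorted(options.items()))
    answer = f"{answer_key}. {options[answer_key]}"
    if rationale:
        instruction = "Answer the question and explain your reasoning."
        output = f"{rationale} Answer: {answer}"
    else:
        instruction = "Answer the question by choosing one option."
        output = f"Answer: {answer}"
    return InstructionRecord(instruction, f"{question}\n{option_text}", output)


def from_dialogue(patient_message, doctor_reply):
    """Build a record from a single consultation turn."""
    return InstructionRecord(
        "Respond to the patient's message as a medical consultant.",
        patient_message,
        doctor_reply,
    )


def render_prompt(record):
    """Flatten a record into one training string (the template is an assumption)."""
    return (
        f"### Instruction:\n{record.instruction}\n\n"
        f"### Input:\n{record.input}\n\n"
        f"### Response:\n{record.output}"
    )


# Toy item used only to exercise the helpers.
example = from_multiple_choice(
    "Deficiency of which vitamin causes scurvy?",
    {"A": "Vitamin A", "B": "Vitamin C", "C": "Vitamin D"},
    "B",
)
print(render_prompt(example))
```

Keeping every source in one schema means a single training loop can consume all three data types, which is one plausible way to get the cross-task behaviour described above.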

Methodology

The methodology involves two main stages:

- Knowledge Injection: The model was trained on a corpus blending biomedical papers and textbooks, with the aim of building a robust medical knowledge base. Each training batch was mixed at a ratio that weights book tokens more heavily than paper tokens, so that the model sees more of the systematic, foundational material found in textbooks.

- Instruction Tuning: Drawing on medical consulting conversations, rationale QA, and knowledge graph prompting, this stage aligns the model's responses with the kinds of instructions medical professionals give.
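The batch ratio used in the knowledge-injection stage can be pictured as a sampler that draws a fixed number of book chunks and paper chunks for every batch. The sketch below assumes pre-chunked text and uses a placeholder 3:1 ratio; the summary above does not state the actual ratio, and the function name `mixed_batches` is hypothetical.

```python
import random


def mixed_batches(book_chunks, paper_chunks, books_per_batch, papers_per_batch,
                  num_batches, seed=0):
    """Yield batches with a fixed count of book and paper chunks.

    Chunks are drawn with replacement, so the smaller source (typically
    books) is revisited more often than the larger one.
    """
    rng = random.Random(seed)
    for _ in range(num_batches):
        batch = (rng.choices(book_chunks, k=books_per_batch)
                 + rng.choices(paper_chunks, k=papers_per_batch))
        rng.shuffle(batch)  # avoid a fixed book-then-paper order inside a batch
        yield batch


# Toy corpora: each chunk is a (source, text) pair.
books = [("book", f"textbook chunk {i}") for i in range(5)]
papers = [("paper", f"paper chunk {i}") for i in range(50)]

# Placeholder ratio of 3 book chunks to 1 paper chunk per batch (an assumption).
for batch in mixed_batches(books, papers, books_per_batch=3, papers_per_batch=1,
                           num_batches=2):
    print([source for source, _ in batch])
```

Fixing the counts per batch, rather than sampling from the pooled corpus, keeps the book share constant even though papers vastly outnumber textbooks.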

Training used Fully Sharded Data Parallel (FSDP) and gradient checkpointing, which reduce per-GPU memory use and make it practical to train a model of this size on a large corpus.
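A rough back-of-the-envelope calculation shows why sharding matters at this scale. The sketch below assumes mixed-precision Adam at about 16 bytes of training state per parameter (half-precision weights and gradients plus full-precision master weights and two optimizer moments), and a hypothetical 8 devices; neither figure is taken from the paper. It ignores activation memory, which is the part gradient checkpointing reduces by recomputing activations during the backward pass.

```python
def training_state_gib(num_params, bytes_per_param=16, num_shards=1):
    """Approximate per-device memory (GiB) for weights, gradients, and optimizer state.

    With full sharding, each device holds roughly 1/num_shards of that state.
    """
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    return num_params * bytes_per_param / num_shards / 2**30


PARAMS_13B = 13_000_000_000  # model size stated in the text

unsharded = training_state_gib(PARAMS_13B)             # every device holds everything
sharded = training_state_gib(PARAMS_13B, num_shards=8)  # hypothetical 8-device setup

print(f"unsharded: {unsharded:.1f} GiB per device")
print(f"sharded over 8 devices: {sharded:.1f} GiB per device")
```

Under these assumptions the unsharded state alone comes to roughly 194 GiB, more than a single current accelerator holds, while an 8-way shard brings it to about 24 GiB per device.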

Results and Implications

PMC-LLaMA was evaluated on standard medical QA benchmarks, including PubMedQA, MedMCQA, and USMLE. With a reported average accuracy of 64.43% across these benchmarks, it outperformed existing models, including ChatGPT.
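For readers unfamiliar with how such a headline number is typically formed, the sketch below computes per-benchmark accuracy and then an unweighted mean across benchmarks. Treating the average as an unweighted mean is an assumption here, and the predictions and answers are toy values invented for illustration, not results from the paper.

```python
def accuracy(predictions, answers):
    """Percentage of predictions that exactly match the reference answers."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    if not answers:
        raise ValueError("cannot score an empty benchmark")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return 100.0 * correct / len(answers)


def average_accuracy(results):
    """Return per-benchmark accuracy and the unweighted mean across benchmarks.

    `results` maps a benchmark name to a (predictions, answers) pair.
    """
    per_benchmark = {name: accuracy(p, a) for name, (p, a) in results.items()}
    mean = sum(per_benchmark.values()) / len(per_benchmark)
    return per_benchmark, mean


# Toy data only: two made-up benchmarks with made-up answers.
toy_results = {
    "benchmark_a": (["A", "B", "C", "D"], ["A", "B", "C", "A"]),
    "benchmark_b": (["yes", "no"], ["yes", "yes"]),
}
per_benchmark, mean = average_accuracy(toy_results)
print(per_benchmark, mean)
```

An unweighted mean gives each benchmark equal influence regardless of its size, so a small benchmark affects the headline figure as much as a large one.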

The findings indicate that open-source models can be effectively tailored for specialized domains, potentially democratizing access to powerful AI tools in medicine. They also lay a foundation for future research on fine-tuning LLMs for other domain-specific applications and underline the importance of integrating domain knowledge.

Conclusion and Future Work

The paper successfully demonstrates the viability of building efficient, open-source LLMs for the medical domain, addressing the current limitations of general LLMs in precision-critical fields. As AI continues to evolve, such approaches can be extended to other specialized domains. Future work could explore further scaling of LLMs for more sophisticated medical applications, enhancing their applicability in real-world clinical settings.

The release of all models, code, and datasets at the provided GitHub repository underscores a commitment to transparency and collaboration, encouraging further exploration and refinement in the open-source community.