Elevating Medical NLP with Multilingual LLM: Insights from Medical mT5 Development

Introduction

Recent advances in AI and NLP have significantly improved the capabilities of LLMs in various domains, including medicine. However, most of these developments have been confined to the English language, leaving a notable gap in resources and tools for non-English medical texts. Addressing this imbalance, the paper presents Medical mT5, a pioneering open-source text-to-text multilingual model fine-tuned on medical domain data across English, Spanish, French, and Italian. This model is an encoder-decoder framework based on the mT5 architecture, demonstrating state-of-the-art performance in multilingual sequence labeling for the medical domain.

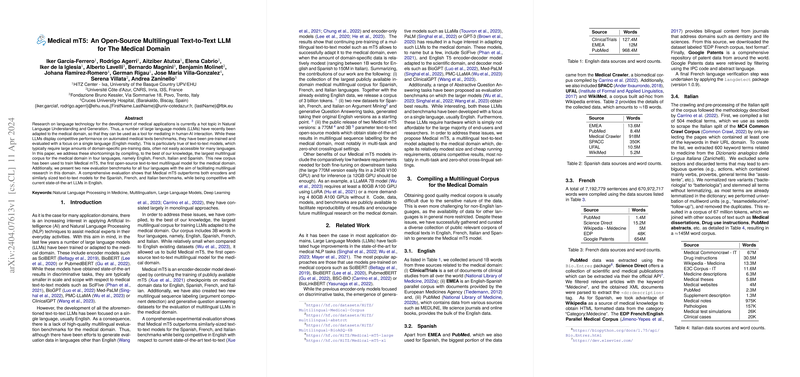

Multilingual Corpus Compilation

The foundation of Medical mT5's success lies in the assembly of a diverse and extensive multilingual corpus tailored to the medical domain. This corpus, touted as the largest of its kind, encompasses 3 billion words across four languages. It blends data from various sources, including clinical trials, PubMed articles, and medical instructions, ensuring a comprehensive representation of the medical lexicon. This corpus not only facilitates the training of Medical mT5 but also sets a new benchmark for multilingual medical NLP research.

Medical mT5 Model Development

Building upon the mT5 framework, Medical mT5 underwent continued pre-training on the assembled multilingual medical corpus. This process involved adapting the model to recognize and process medical terminology consistently across the covered languages. The development yielded two versions of Medical mT5, one with 770M parameters and another with 3B parameters, to cater to different computational capabilities and use cases. The model's architecture and training approach are carefully designed, ensuring it remains accessible to a broad range of researchers and practitioners, especially considering the comparatively low hardware requirements for both training and inference.

Benchmark Creation and Evaluation

To effectively gauge Medical mT5's performance, the research contributes two novel multilingual datasets for sequence labeling and generative question answering in the medical domain. These benchmarks challenge the model across multiple tasks, including Argument Mining and Abstractive Question Answering, facilitating a rigorous and comprehensive evaluation. Medical mT5 demonstrated exemplary performance, surpassing similarly-sized models on non-English benchmarks and achieving competitive results in English, showcasing its robustness and versatility across languages.

Implications and Future Directions

The implications of this research extend far beyond its immediate achievements. Medical mT5 paves the way for more inclusive and equitable medical NLP applications, breaking the English-centric mold that has dominated the field. It highlights the importance of developing multilingual tools that can support medical professionals and patients across diverse linguistic backgrounds. Looking ahead, this work could inspire further efforts to expand the corpus to include more languages and refine the model to tackle a broader range of medical NLP tasks.

Conclusion

The development of Medical mT5 marks a significant step forward in multilingual NLP for the medical domain. By leveraging a vast multilingual medical corpus, this model not only achieves state-of-the-art results in sequence labelling and question answering but also demonstrates the feasibility and importance of extending NLP research and applications to non-English languages in the medical field. Future research will undoubtedly build on this foundation, further enhancing the capabilities of NLP technologies to serve global medical communities.