Towards Evaluating and Building Versatile LLMs for Medicine - An Analysis

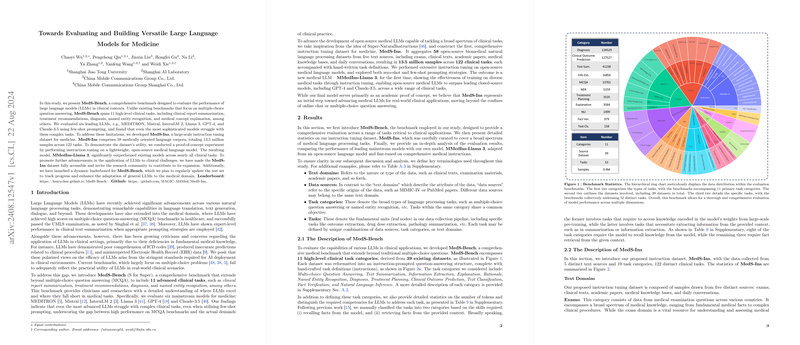

The paper "Towards Evaluating and Building Versatile LLMs for Medicine," authored by Chaoyi Wu, Pengcheng Qiu, and their colleagues, introduces MedS-Bench, a comprehensive benchmark designed to evaluate LLMs in clinical contexts. MedS-Bench covers 11 high-level clinical tasks, addressing a significant gap in existing benchmarks, which focus primarily on multiple-choice question answering (MCQA). To improve LLMs on these tasks, the authors also introduce MedS-Ins, a large-scale instruction-tuning dataset built specifically for the medical domain. MedS-Ins draws on 58 medically oriented corpora, totaling 13.5 million samples across 122 clinical tasks.

Analyzing the Performance of Leading Models

The authors evaluated six leading LLMs on MedS-Bench using few-shot prompting: MEDITRON, Mistral, InternLM 2, Llama 3, GPT-4, and Claude-3.5. The results indicated that even the most sophisticated LLMs currently struggle with complex clinical tasks, underlining the gap between benchmark performance and practical requirements in clinical settings.
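As a rough illustration of the few-shot setup, the sketch below assembles a prompt from a task instruction, a handful of worked examples, and the query to be answered. The `build_few_shot_prompt` helper and the `Input:`/`Output:` layout are assumptions made for illustration; the exact prompt template used in the paper is not given here.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Assemble a few-shot prompt: instruction, worked examples, then the query.

    Hypothetical layout; the paper's actual template may differ.
    """
    parts = [instruction.strip(), ""]
    for source, target in examples:
        # Each demonstration shows the model one solved instance of the task.
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")
    # The final block leaves the output empty for the model to complete.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)
```

Calling `build_few_shot_prompt("Summarize the note.", [("note A", "summary A")], "note B")` returns a single string that ends with an empty `Output:` slot for the model to fill.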

Key results for MMedIns-Llama 3, the authors' model obtained by instruction tuning on MedS-Ins:

- Multilingual MCQA: MMedIns-Llama 3 achieved an average accuracy of 63.9, surpassing other LLMs including GPT-4 and Llama 3.

- Text Summarization: MMedIns-Llama 3 significantly outperformed other models with average BLEU/ROUGE scores of 46.82/48.38.

- Information Extraction: MMedIns-Llama 3 led with an average score of 83.18, outperforming baseline models such as InternLM 2.

- Concept Explanation: MMedIns-Llama 3 achieved the highest BLEU/ROUGE scores, at 33.89/37.68.

- Answer Explanations: MMedIns-Llama 3 led with average BLEU/ROUGE scores of 47.17/34.96.

- NER: MMedIns-Llama 3 reached an average F1 score of 68.58, leading across the NER benchmarks.

- Diagnosis, Treatment Planning, and Clinical Outcome Prediction: MMedIns-Llama 3 reached accuracy scores of up to 98 in treatment planning and 95 in diagnosis.
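The scores above rely on standard metrics: accuracy, F1, and BLEU/ROUGE. The following is a minimal sketch of how three of them might be computed, assuming exact-match entity spans for F1 and whitespace tokenization for a simplified ROUGE-1. The function names and these simplifications are assumptions; the precise metric variants used in the paper are not specified here. Each function returns a value in [0, 1], which can be multiplied by 100 to match the scale reported above.

```python
from collections import Counter
from typing import Iterable, Sequence, Tuple

Entity = Tuple[int, int, str]  # (start offset, end offset, label)


def accuracy(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Fraction of predictions that exactly match the gold labels."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    if not gold:
        return 0.0
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def entity_f1(gold: Iterable[Entity], pred: Iterable[Entity]) -> float:
    """Entity-level F1: a prediction counts only if span and label both match."""
    gold_set, pred_set = set(gold), set(pred)
    true_positives = len(gold_set & pred_set)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(pred_set)
    recall = true_positives / len(gold_set)
    return 2 * precision * recall / (precision + recall)


def rouge1_f(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1 F-measure based on clipped unigram overlap."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)
```

Established evaluation libraries apply additional steps such as stemming or smoothing, so numbers from this sketch would not be directly comparable to the published scores.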

Implications and Future Directions

Practical Implications

- Clinical Decision Support Systems (CDSS): MMedIns-Llama 3's performance in tasks like diagnosis and treatment planning shows promise for deploying AI in real-time CDSS, potentially enhancing diagnostic accuracy and treatment recommendations.

- Automating Medical Documentation: The success in text summarization tasks suggests that LLMs can effectively automate the summarization of large clinical documents, improving efficiency in healthcare environments.

- Medical NER and Information Extraction: High performance in NER tasks reflects the capability of MMedIns-Llama 3 to structure extensive clinical texts into usable data, crucial for maintaining accurate electronic health records and facilitating advanced analytics.

Theoretical Implications

- Knowledge Representation: Strong performance across diverse tasks highlights the importance of incorporating domain-specific medical knowledge into the pre-training and fine-tuning steps.

- Task Diversity in Training: The breadth of the MedS-Ins dataset suggests that including varied task categories can significantly boost a model's adaptability to nuanced clinical scenarios, pointing toward more comprehensive training regimes.

- Instruction Tuning: The process of instruction tuning demonstrated in MedS-Ins could be pivotal in realizing LLMs that can generalize across tasks with minimal examples, a feature that could be further leveraged for other highly specialized domains.
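To make the instruction-tuning idea more concrete, the sketch below shows one way records from different task types might be cast into a shared instruction/input/output shape and then into prompt/completion pairs for fine-tuning. The field names, the `to_instruction_sample` and `to_training_text` helpers, and the example texts are all hypothetical; the actual MedS-Ins schema is not described here.

```python
from typing import Dict, List


def to_instruction_sample(task: str, instruction: str,
                          source: str, target: str) -> Dict[str, str]:
    """Wrap one raw record in a task-agnostic instruction format (assumed schema)."""
    return {"task": task, "instruction": instruction,
            "input": source, "output": target}


def to_training_text(sample: Dict[str, str]) -> Dict[str, str]:
    """Turn a sample into a prompt/completion pair for supervised fine-tuning."""
    prompt = f"{sample['instruction']}\n\nInput: {sample['input']}\nOutput:"
    # A leading space separates the completion from the "Output:" marker.
    return {"prompt": prompt, "completion": " " + sample["output"]}


# Made-up examples from two different task types sharing one format.
samples: List[Dict[str, str]] = [
    to_instruction_sample(
        "ner",
        "List the drug names mentioned in the text.",
        "The patient was started on metformin.",
        "metformin",
    ),
    to_instruction_sample(
        "summarization",
        "Summarize the note in one sentence.",
        "Patient reports three days of fever and cough.",
        "Three days of fever and cough.",
    ),
]

pairs = [to_training_text(s) for s in samples]
```

Because every task is reduced to the same shape, one training loop can mix samples from many task categories, which is the property the task-diversity point above depends on.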

Future Directions

- Expansion of Benchmarks and Datasets: Extending both MedS-Bench and MedS-Ins to cover more clinical tasks and languages can increase the utility of LLMs in global health contexts, helping AI advances benefit diverse populations equitably.

- Development of Multilingual Models: Integrating multilingual capabilities to better interpret and generalize medical information across different languages will be crucial for international collaborations in health informatics.

- Community Collaboration: Open-sourcing MedS-Ins and inviting contributions from the research community can lead to more robust and comprehensive datasets, driving forward the evolution of LLMs for healthcare applications.

Conclusion

The paper by Chaoyi Wu et al. makes a significant contribution to the field of AI in medicine through the introduction of MedS-Bench and MedS-Ins. The evaluation of current LLMs underscores the gap between existing capabilities and the demands of clinical practice, while the development and results of MMedIns-Llama 3 illustrate a promising direction for future work. By providing a more diverse and realistic range of tasks, this work paves the way for more effective and reliable medical AI systems. Open-sourcing the datasets and collaborating on expanded benchmarks should further amplify the impact of this research, fostering ongoing progress in the application of AI to healthcare.