- The paper introduces a Multiple Memory System (MMS) that processes short-term memory into keyword, cognitive-perspective, episodic, and semantic fragments to improve the long-term recall of LLM-based agents.

- It draws on cognitive psychology theories to design separate retrieval and contextual memory units, and it outperforms existing memory frameworks such as MemoryBank and A-MEM, particularly on complex reasoning questions.

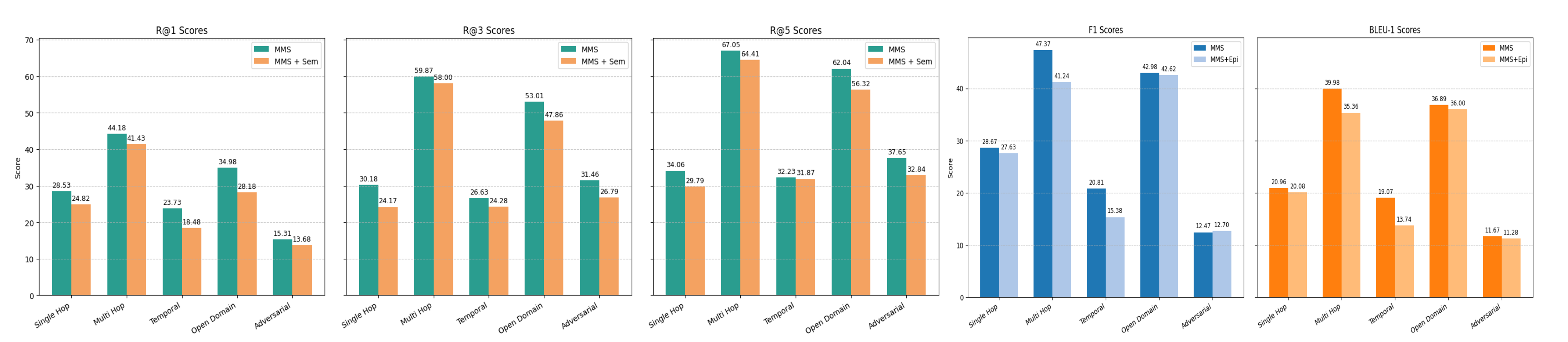

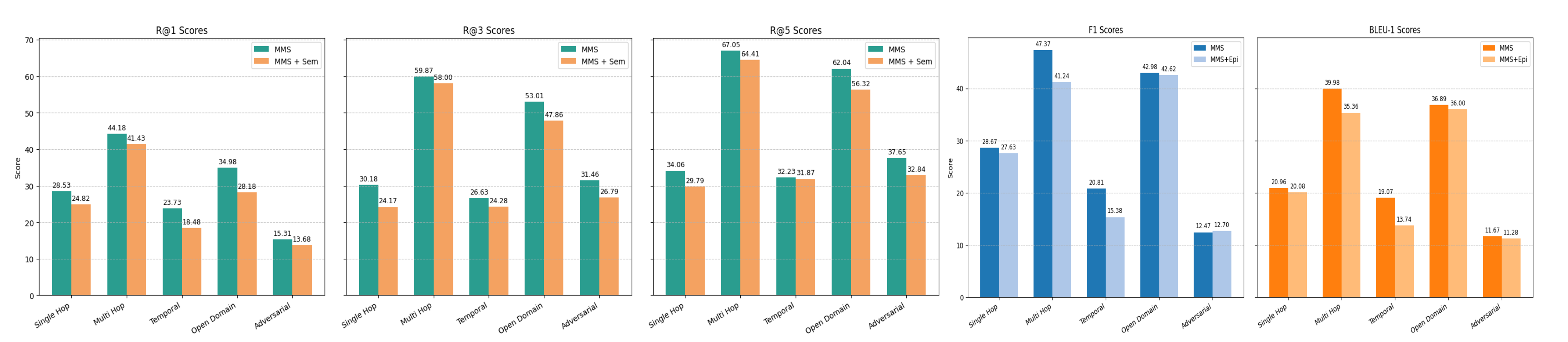

- Experiments on the LoCoMo dataset show gains in Recall@N, F1 score, and BLEU-1, and a separate robustness analysis indicates that retrieval quality holds up when the memory fragments are noisy.

Multiple Memory Systems for Enhancing the Long-term Memory of Agent

Introduction

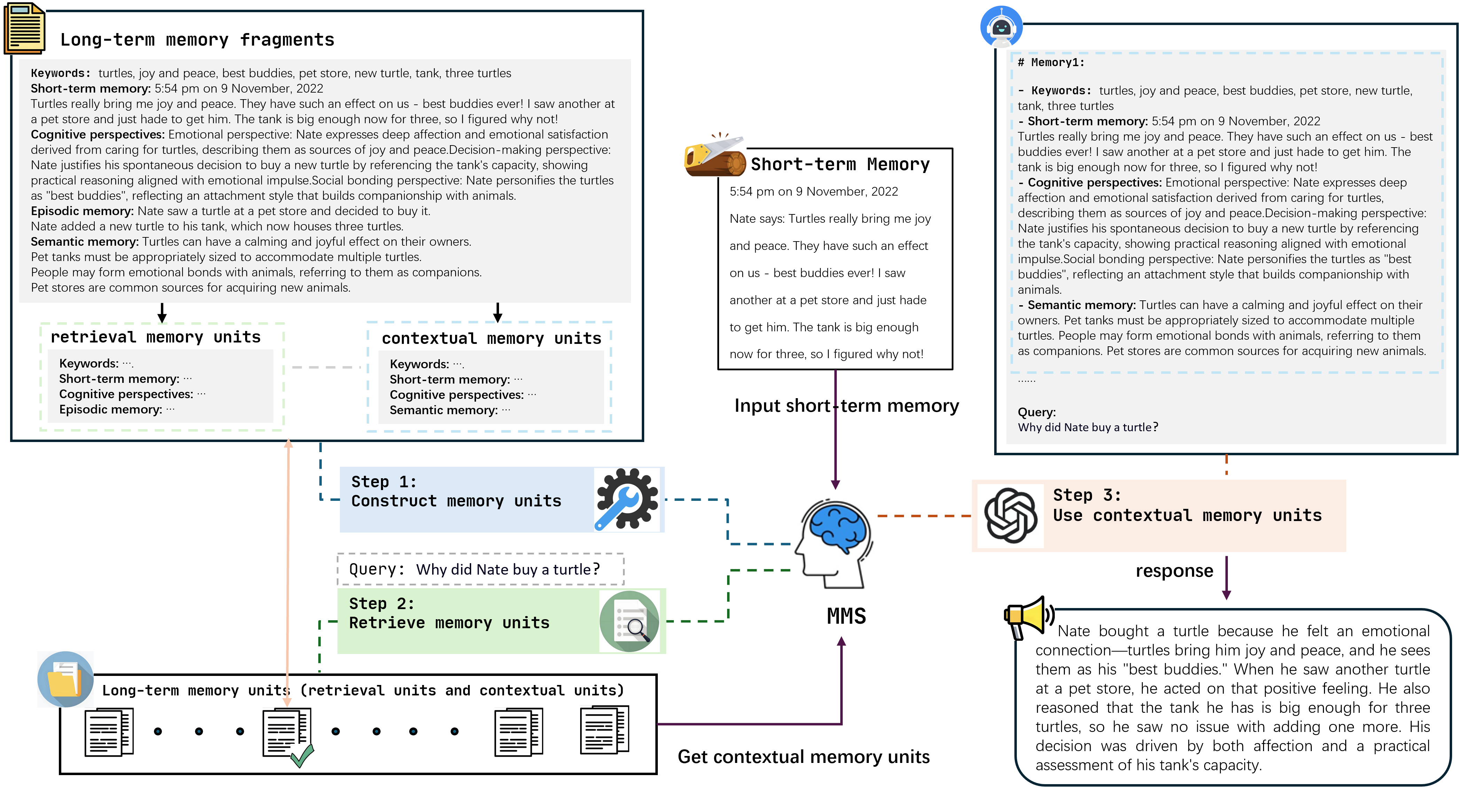

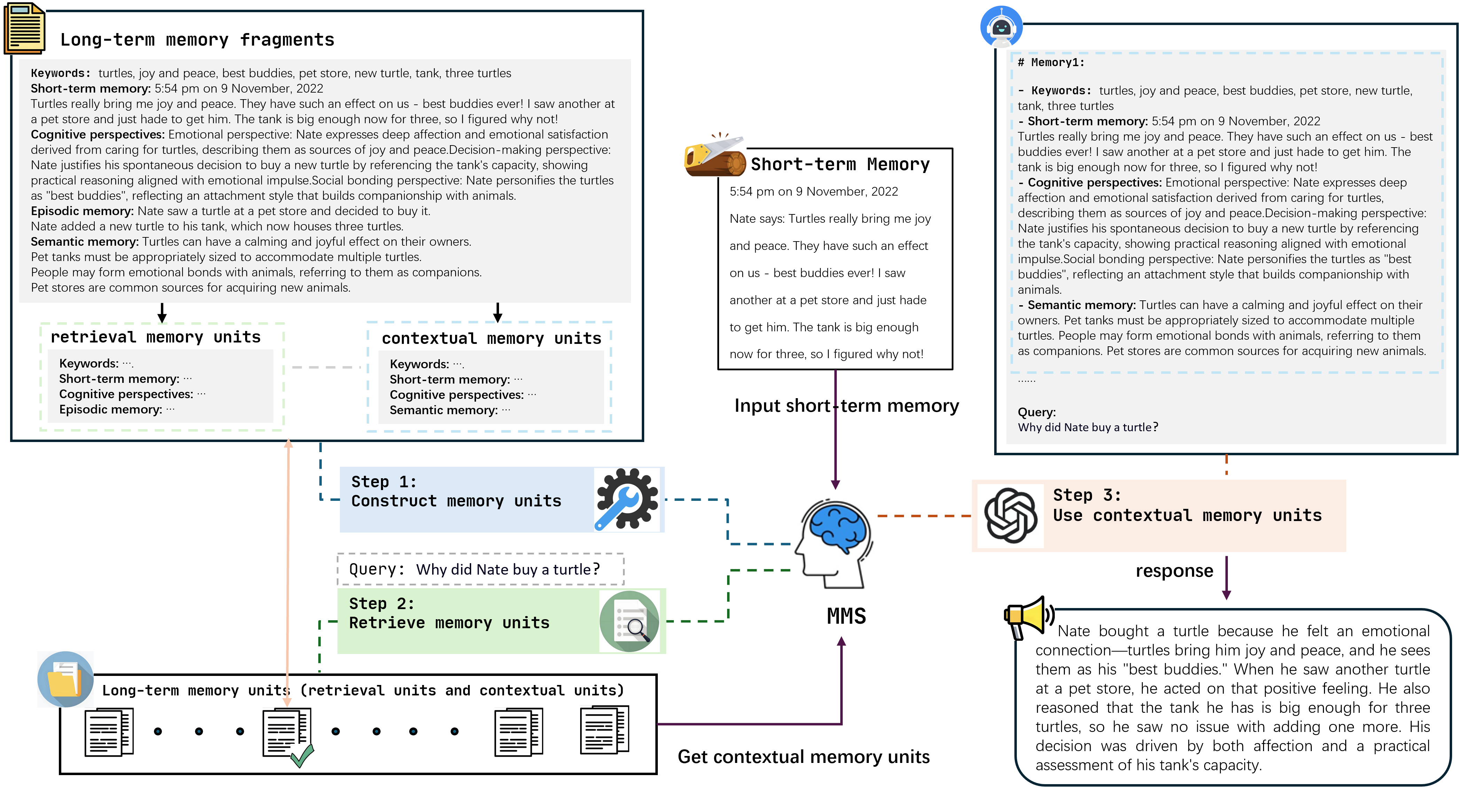

This paper introduces an approach to enhancing long-term memory (LTM) in LLM-based agents by designing a Multiple Memory System (MMS). The design is inspired by cognitive psychology theories which hold that memory is not a monolithic structure but a set of subsystems, each responsible for a different kind of information processing. Existing memory frameworks such as MemoryBank and A-MEM suffer from low-quality stored memories; MMS instead processes short-term memory (STM) into several kinds of long-term memory fragments, including episodic and semantic memories. These fragments are then organized into retrieval memory units and contextual memory units, which improves both the recall of relevant history and the quality of the agent's responses.

Figure 1: Schematic of the multi-memory system process. After acquiring short-term memory, MMS processes it into memory fragments and uses them to construct retrieval memory units and contextual memory units.

Theoretical Framework

Cognitive Psychology and Memory Systems

Drawing on Tulving's theory of multiple memory systems, MMS treats human memory as comprising procedural, semantic, and episodic components, each engaged by different cognitive tasks. The Levels of Processing theory holds that how well information is remembered depends on how deeply it is processed, and the Encoding Specificity Principle holds that retrieval works best when the cues available at recall match the context present at encoding. MMS puts these ideas into practice by generating high-quality LTM fragments (keywords, cognitive perspectives, episodic memory, and semantic memory) that make retrieval more effective and supply richer knowledge during generation.

Existing architectures such as MemoryBank store dialogue content as memory units built from extracted keywords and summaries, and A-MEM likewise builds its knowledge store through keyword extraction and summarization. These coarse units often fail to capture the nuanced context a query needs, which limits recall quality. MMS instead derives several complementary fragments from the same content, so that at least one retrieval unit is more likely to align closely with a given query, widening semantic coverage and improving recall.
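The alignment argument can be illustrated with a toy example. The sketch below is only an illustration under stated assumptions: it uses a lowercase bag-of-words vector as a stand-in for a learned embedding, and the dialogue text, the fragment contents, and the helper names `bow` and `cosine` are all hypothetical. It compares a query's similarity to a single summary-style memory unit with its best similarity across several finer-grained fragments derived from the same content.

```python
import math
from collections import Counter


def bow(text: str) -> Counter:
    """Lowercased bag-of-words counts: a toy stand-in for a learned embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical dialogue content stored two ways.
# (a) One summary-style unit covering the whole exchange.
summary = "melanie talked about her weekend with the kids and a new hobby she started"
# (b) Several finer-grained fragments derived from the same content.
fragments = {
    "key": "pottery class",
    "epi": "melanie signed up for a pottery class last weekend",
    "cog": "melanie enjoys creative hobbies with her kids",
}

query = bow("when did melanie start the pottery class")
single_unit = cosine(query, bow(summary))
# Each fragment is scored separately; the memory is as reachable as its best match.
multi_unit = max(cosine(query, bow(text)) for text in fragments.values())
print(f"summary only: {single_unit:.3f}, best fragment: {multi_unit:.3f}")
```

With this data the query shares only common words with the summary, while the short keyword fragment matches its most specific terms, so the best-fragment score is higher. Learned embeddings behave less crisply, but the intuition carries over: scoring each fragment separately gives a query more than one chance to match.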

The MMS Framework

Construction of Long-term Memory Units

MMS processes the content of a dialogue (STM) into four long-term memory representations: keywords ($M_{key}$), cognitive perspectives ($M_{cog}$), episodic memory ($M_{epi}$), and semantic memory ($M_{sem}$). These fragments are used to build retrieval memory units ($MU_{ret}$), which support precise relevance matching against queries, and contextual memory units ($MU_{cont}$), which supply richer knowledge to the model during generation. The architecture thus combines low-level keyword extraction with higher-level semantic analysis so that stored memories align well with incoming queries.
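The unit construction described above can be sketched as plain data structures. This is a minimal sketch, not the paper's implementation: the class and function names (`MemoryFragments`, `RetrievalUnit`, `build_units`) are hypothetical, the LLM-driven extraction of fragments from STM is not shown (fragments are written by hand), and the default assignment of fragments to units (keywords, cognitive perspective, and episodic memory as retrieval units; episodic plus semantic memory as the contextual unit) is an assumption. The `retrieval_fields` parameter makes it easy to try a variant that also indexes semantic memory.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class MemoryFragments:
    """LTM fragments extracted from one STM segment (extraction step not shown)."""
    key: str  # M_key: keywords
    cog: str  # M_cog: cognitive perspective
    epi: str  # M_epi: episodic memory
    sem: str  # M_sem: semantic memory


@dataclass
class RetrievalUnit:
    text: str        # content embedded and matched against queries (one MU_ret)
    context_id: int  # index of the MU_cont this unit maps to


def build_units(
    all_frags: Sequence[MemoryFragments],
    retrieval_fields: Tuple[str, ...] = ("key", "cog", "epi"),  # ASSUMED split
    context_fields: Tuple[str, ...] = ("epi", "sem"),           # ASSUMED split
) -> Tuple[List[RetrievalUnit], List[str]]:
    """Build retrieval units and contextual units from extracted fragments."""
    ret_units: List[RetrievalUnit] = []
    cont_units: List[str] = []
    for i, frags in enumerate(all_frags):
        # One contextual unit per STM segment, concatenating the chosen fragments.
        cont_units.append("\n".join(getattr(frags, f) for f in context_fields))
        # One retrieval unit per chosen fragment, all pointing at the same context.
        for f in retrieval_fields:
            ret_units.append(RetrievalUnit(getattr(frags, f), i))
    return ret_units, cont_units


# Hypothetical fragments for a single dialogue segment.
example = [
    MemoryFragments(
        key="pottery class",
        cog="Melanie values creative time with her kids",
        epi="Melanie signed up for a pottery class last weekend",
        sem="Pottery classes typically teach wheel throwing and glazing",
    )
]
ret_units, cont_units = build_units(example)
for unit in ret_units:
    print(unit.context_id, unit.text)
```

Keeping several retrieval units per segment but only one contextual unit means a query can match through any fragment while generation always receives the same consolidated context.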

Figure 2: Impact on performance of adding other memory segments. For the recall metrics, MMS is compared with MMS+Sem.

Memory Retrieval and Utilization

In the retrieval phase, the user query is converted into a vector, and the top-k retrieval memory units ($MU_{ret}$) are selected by cosine similarity. Each selected $MU_{ret}$ is then mapped to its corresponding contextual memory unit ($MU_{cont}$), and these contextual units are passed to the LLM to ground its response. Because matching and generation use different units, retrieval can target precise matches while generation still receives the fuller context it needs.
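A minimal sketch of this retrieval step, assuming embeddings have already been computed (the embedding model is not shown, and the toy 3-dimensional vectors below are hypothetical). The function name `retrieve_context` and the `ret_to_cont` index list are illustrative, not the paper's API. The function ranks retrieval units by cosine similarity, keeps the top k, and returns the contextual units they point to.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def retrieve_context(
    query_vec: Sequence[float],
    ret_vecs: Sequence[Sequence[float]],  # one embedding per MU_ret
    ret_to_cont: Sequence[int],           # MU_ret index -> MU_cont index
    cont_units: Sequence[str],            # MU_cont texts
    k: int = 2,
) -> List[str]:
    """Select the top-k MU_ret by cosine similarity and return their MU_cont."""
    ranked = sorted(
        range(len(ret_vecs)),
        key=lambda i: cosine(query_vec, ret_vecs[i]),
        reverse=True,  # highest similarity first; ties keep original order
    )
    contexts: List[str] = []
    for i in ranked[:k]:
        ctx = cont_units[ret_to_cont[i]]
        if ctx not in contexts:  # several MU_ret may share one MU_cont
            contexts.append(ctx)
    return contexts


# Toy 3-d "embeddings" (hypothetical): units 0 and 1 both map to context A.
ret_vecs = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
ret_to_cont = [0, 0, 1, 2]
cont_units = ["ctx A", "ctx B", "ctx C"]
print(retrieve_context([1.0, 0.0, 0.0], ret_vecs, ret_to_cont, cont_units, k=3))
# prints ['ctx A', 'ctx B']
```

Because several retrieval units can point to the same contextual unit, the sketch collapses duplicates and may return fewer than k contexts; whether the original system deduplicates this way is an assumption. The returned contexts would then be placed into the LLM prompt alongside the user query.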

Experimental Evaluation

Setup and Results

Evaluations were run on the LoCoMo dataset using Recall@N, F1 score, and BLEU-1, across question categories that include single-hop, multi-hop, temporal reasoning, and open-domain questions. MMS outperformed the baselines (NaiveRAG, MemoryBank, and A-MEM), with the clearest gains on multi-hop and open-domain questions, a result attributed to its multi-level integration of memory fragments. The advantage was most pronounced on the more complex reasoning tasks.
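For readers unfamiliar with these metrics, the sketch below shows common textbook definitions: token-level F1 (as in SQuAD-style QA evaluation), unigram BLEU with clipped counts and a brevity penalty, and Recall@N as the fraction of gold evidence items found among the top N retrieved items. This is an assumption about how the metrics are computed; the paper's exact tokenization and normalization (for example, punctuation stripping or stemming) may differ, and the example answers and evidence IDs are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence


def tokens(text: str) -> List[str]:
    """Simple whitespace tokenization after lowercasing (an assumption)."""
    return text.lower().split()


def f1_score(pred: str, gold: str) -> float:
    """Token-level F1 between a predicted and a gold answer."""
    p, g = tokens(pred), tokens(gold)
    common = sum((Counter(p) & Counter(g)).values())  # multiset overlap
    if common == 0:
        return 0.0
    precision = common / len(p)
    recall = common / len(g)
    return 2 * precision * recall / (precision + recall)


def bleu1(pred: str, gold: str) -> float:
    """Unigram BLEU: clipped unigram precision times a brevity penalty."""
    p, g = tokens(pred), tokens(gold)
    if not p:
        return 0.0
    clipped = sum((Counter(p) & Counter(g)).values())
    precision = clipped / len(p)
    brevity = 1.0 if len(p) > len(g) else math.exp(1 - len(g) / len(p))
    return brevity * precision


def recall_at_n(retrieved: Sequence[str], gold: Sequence[str], n: int) -> float:
    """Fraction of gold evidence IDs that appear in the top-n retrieved IDs."""
    gold_set = set(gold)
    if not gold_set:
        return 0.0
    return len(set(retrieved[:n]) & gold_set) / len(gold_set)


print(f1_score("in May 2023", "May 2023"))
print(bleu1("in May 2023", "May 2023"))
print(recall_at_n(["d1", "d4", "d2"], ["d2", "d3"], n=3))
```

F1 and BLEU-1 both reward word overlap with the reference answer, while Recall@N scores the retrieval step on its own, which is why the three together separate memory quality from generation quality.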

Discussion

Ablation and Robustness Analysis

Ablation studies show that each type of memory segment contributes to answering queries, confirming that LTM construction benefits from multiple cognitive perspectives. In addition, robustness tests that vary the memory fragments show that MMS maintains high-quality content retrieval in the presence of noise.

Implications for AI Memory Systems

By combining insights from cognitive psychology with structured LTM construction, MMS opens directions for future work on memory system design for AI agents. Such a multi-dimensional approach could serve as a foundation for more human-like, adaptive learning mechanisms in agents, improving interaction quality and autonomy over time.

Conclusion

The MMS approach substantially improves the recall and generation capabilities of LLM-based agents, showing that memory systems grounded in cognitive theory can raise LTM quality. By splitting memories into complementary fragments and separating retrieval units from contextual units, MMS turns ideas from cognitive psychology into a practical computational design for agent memory. Future work will explore larger-scale integration of memory operations to bring AI memory closer to models of human cognition.