Introduction

Large language models (LLMs) have shown impressive capabilities on complex tasks that involve extensive text, such as summarization, question answering, and coding. To perform well on such tasks, a model must be aligned to follow instructions over long contexts, which typically requires instruction fine-tuning on lengthy input sequences. Current work on long-context LLMs centers mainly on extending context windows, but context extension alone is insufficient for instruction alignment over long texts.

Related Work

Long-context scaling methods fall into two primary categories: those that require fine-tuning and those that do not. Methods without fine-tuning use techniques such as sliding window attention or neighboring token compression to address the positional out-of-distribution issue in attention computations over lengthy contexts; however, they still fall short of the performance achieved by fine-tuned models. Fine-tuned approaches, by contrast, typically rely on position encoding extension and continual pretraining on extended sequences. Once the context window has been extended, the model must still be aligned on long instruction-following data during supervised fine-tuning so that it can handle diverse user requests in a chat interface.

LongAlign

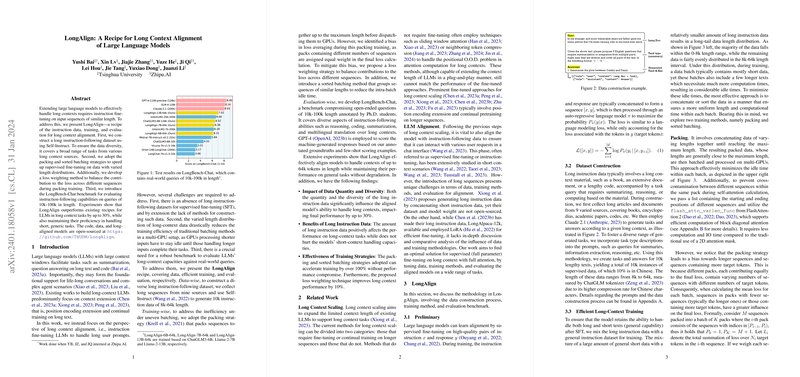

To train LLMs for extended interactions with users, LongAlign introduces an integrated recipe covering data construction, efficient training, and evaluation. For data, it builds a dataset of 10k long instruction-following examples, ranging from 8k to 64k tokens in length, drawn from nine diverse sources and generated with Self-Instruct. For training, packing and sorted batching strategies improve efficiency by reducing idle time in multi-GPU setups. Because these strategies can bias the loss, a loss weighting method is added so that sequences of varying lengths contribute in a balanced way to the loss calculation. For evaluation, the LongBench-Chat benchmark provides a series of realistic open-ended questions, annotated by Ph.D. students, over inputs of 10k to 100k tokens.
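
The two batching strategies described above can be illustrated with a small sketch. It is not the LongAlign implementation: the function names `sorted_batches` and `pack_sequences`, the first-fit-decreasing heuristic, and the example lengths are all assumptions chosen for illustration. Sorted batching groups sequences of similar length so that GPUs finish at about the same time, while packing concatenates several sequences into one pack of at most `max_len` tokens.

```python
from typing import List


def sorted_batches(lengths: List[int], batch_size: int) -> List[List[int]]:
    """Group sequence indices so each batch holds sequences of similar length."""
    order = sorted(range(len(lengths)), key=lambda i: lengths[i])
    return [order[i:i + batch_size] for i in range(0, len(order), batch_size)]


def pack_sequences(lengths: List[int], max_len: int) -> List[List[int]]:
    """Pack sequence indices into packs of at most max_len tokens.

    Uses a first-fit-decreasing heuristic (an illustrative assumption,
    not necessarily what LongAlign does).
    """
    packs: List[List[int]] = []   # sequence indices in each pack
    free: List[int] = []          # remaining token capacity of each pack
    for i in sorted(range(len(lengths)), key=lambda i: -lengths[i]):
        if lengths[i] > max_len:
            raise ValueError(f"sequence {i} exceeds max_len")
        for p, capacity in enumerate(free):
            if lengths[i] <= capacity:
                packs[p].append(i)
                free[p] -= lengths[i]
                break
        else:
            # No existing pack has room, so open a new one.
            packs.append([i])
            free.append(max_len - lengths[i])
    return packs


# Hypothetical token lengths for six training examples.
lengths = [60_000, 8_000, 30_000, 12_000, 50_000, 4_000]

print(sorted_batches(lengths, batch_size=2))  # [[5, 1], [3, 2], [4, 0]]
print(pack_sequences(lengths, max_len=64_000))  # [[0, 5], [4, 3], [2, 1]]
```

In a real training loop, a packed sequence would also need an attention mask that prevents tokens from attending across sequence boundaries within the same pack; that detail is omitted here.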

LongAlign outperforms standard baselines on long-context tasks by up to 30%, without degrading performance on short, general tasks.

Findings and Contributions

LongAlign scales beyond the 64k token length mark while remaining compatible with shorter contexts. The experiments yield several findings:

- The quantity and diversity of long instruction data strongly influence a model's performance, improving results by as much as 30%.

- The packing and sorted batching training strategies speed up training by more than 100% relative to traditional methods, with no loss in performance.

- The loss weighting strategy improves long-context performance by 10% by addressing potential biases in the training loss calculation.
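
The bias that loss weighting addresses can be seen in a toy calculation. The sketch below is one plausible reading of "balanced contributions across sequences of varying lengths", not the exact LongAlign formula: the function names and the per-token loss values are hypothetical. When each pack is averaged over its tokens and packs are then averaged, a long sequence that fills a pack alone carries more weight than short sequences that share a pack. Averaging each sequence's mean token loss instead gives every sequence the same weight.

```python
from typing import List

Pack = List[List[float]]  # a pack is a list of sequences of per-token losses


def pack_averaged_loss(packs: List[Pack]) -> float:
    """Naive loss: mean over tokens within each pack, then mean over packs."""
    per_pack = []
    for pack in packs:
        tokens = [loss for seq in pack for loss in seq]
        per_pack.append(sum(tokens) / len(tokens))
    return sum(per_pack) / len(per_pack)


def sequence_balanced_loss(packs: List[Pack]) -> float:
    """Balanced loss: mean over sequences of each sequence's mean token loss."""
    seq_means = [sum(seq) / len(seq) for pack in packs for seq in pack]
    return sum(seq_means) / len(seq_means)


# Hypothetical per-token losses: one long sequence alone in a pack,
# and two short sequences sharing a second pack.
packs = [
    [[1.0, 1.0, 1.0, 1.0]],
    [[3.0, 3.0], [5.0, 5.0]],
]

print(pack_averaged_loss(packs))      # 2.5 (long sequence weighted 1/2, short ones 1/4 each)
print(sequence_balanced_loss(packs))  # 3.0 (each sequence weighted 1/3)
```

In a tensor framework the same effect would typically be obtained by scaling each token's loss by a per-sequence weight before summation, rather than by regrouping tokens as done here.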

Conclusion

LongAlign is a robust and efficient approach to aligning LLMs with long-context tasks. Its contributions range from data collection and training methods to an evaluation benchmark that measures how well a model handles realistic long-context interactions. This work opens avenues for emerging tasks that require LLMs to understand extended texts in depth.