Overview of LongLoRA: Efficient Fine-tuning of Long-Context LLMs

The paper presents LongLoRA, a novel approach to efficiently fine-tune LLMs for extended context lengths while minimizing computational overhead. This method addresses the prohibitive computational costs traditionally associated with training LLMs on long-context sequences, such as those required for processing extensive documents or handling complex queries.

Contributions and Techniques

LongLoRA combines two main techniques to make long-context fine-tuning both efficient and effective:

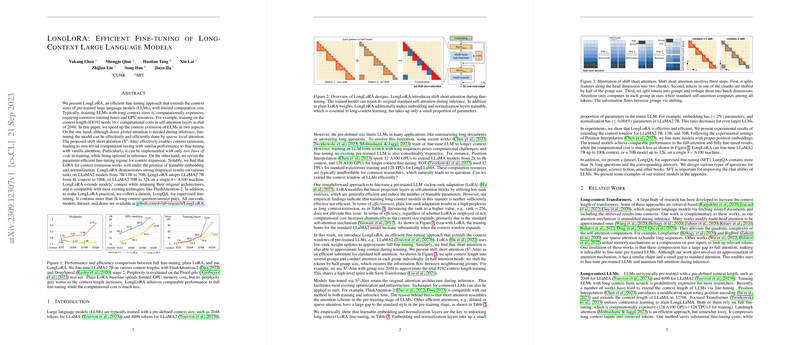

- Shifted Sparse Attention (S-Attn): To reduce the cost of fine-tuning, LongLoRA uses Shifted Sparse Attention (written S²-Attn in the paper). The context is split into groups of tokens, and attention is computed only within each group. In half of the attention heads, the groups are shifted by half a group size, so information can flow between neighboring groups. This approximates full attention at a much lower cost. S-Attn is used only during training, so the fine-tuned model still runs standard full attention at inference. It is also simple to implement, requiring only two lines of code in training.

- Parameter-Efficient Fine-Tuning: The authors extend LoRA (Low-Rank Adaptation) for long-context fine-tuning by also making the embedding and normalization layers trainable. This variant, called LoRA+ in the paper, is crucial for effective long-context adaptation. It substantially narrows the performance gap between LoRA and full fine-tuning, while the extra trainable layers make up only a small fraction of the model's parameters.
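The shift-and-group mechanism in the S-Attn bullet can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes a single sequence with tensors shaped (N, H, D), causal masking inside each group, and hypothetical function names (`group_attention`, `shifted_sparse_attention`). The two marked steps mirror the "shift half the heads, attend in groups, shift back" idea described above.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def group_attention(q, k, v, group_size):
    """Causal attention computed independently inside each group of tokens.

    q, k, v have shape (N, H, D); N must be divisible by group_size.
    """
    n, h, d = q.shape
    g = group_size
    assert n % g == 0, "sequence length must be a multiple of the group size"
    # (N, H, D) -> (N/G, G, H, D): each group is treated as its own short sequence
    qg, kg, vg = (x.reshape(n // g, g, h, d) for x in (q, k, v))
    # Scores per group and head: (N/G, H, G, G)
    scores = np.einsum("bihd,bjhd->bhij", qg, kg) / np.sqrt(d)
    # Causal mask: position i may only attend to positions j <= i in its group
    future = np.triu(np.ones((g, g), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    out = np.einsum("bhij,bjhd->bihd", softmax(scores), vg)
    return out.reshape(n, h, d)


def shifted_sparse_attention(q, k, v, group_size):
    """Hypothetical S2-Attn sketch: half of the heads use groups shifted by G/2."""
    half = q.shape[1] // 2
    shift = group_size // 2

    def roll_second_half(x, s):
        # Roll the token axis for the second half of the heads only.
        # np.roll wraps around, so one shifted group mixes the last and first tokens.
        x = x.copy()
        x[:, half:] = np.roll(x[:, half:], s, axis=0)
        return x

    # Step 1: shift tokens by -G/2 in half the heads, then attend within groups
    qs, ks, vs = (roll_second_half(x, -shift) for x in (q, k, v))
    out = group_attention(qs, ks, vs, group_size)
    # Step 2: roll the shifted heads back so outputs line up with the original tokens
    return roll_second_half(out, shift)


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((8, 4, 16)) for _ in range(3))
print(shifted_sparse_attention(q, k, v, group_size=4).shape)  # (8, 4, 16)
```

With a group size of 4, token 4 in a shifted head sits in the shifted group made of original tokens 2 to 5, so it can attend to tokens 2 and 3 even though they belong to a different unshifted group. That cross-group path is what the shifted heads contribute.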
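To see why making the embedding and normalization layers trainable (the variant the paper calls LoRA+) stays parameter-efficient, the following back-of-the-envelope count may help. The defaults are Llama-2-7B-like sizes (hidden size 4096, vocabulary 32000, 32 layers). The LoRA rank of 8 and the choice of four adapted projections per layer (for example q, k, v, o) are illustrative assumptions, not settings taken from the paper. Only the input embedding is counted.

```python
def lora_adapter_params(d_in, d_out, rank):
    """One LoRA adapter adds A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


def trainable_params(hidden=4096, vocab=32000, layers=32, rank=8, adapted_per_layer=4):
    """Count trainable parameters for plain LoRA vs. LoRA + embedding + norms.

    Sizes are Llama-2-7B-like; rank and adapted_per_layer are assumptions.
    """
    lora = layers * adapted_per_layer * lora_adapter_params(hidden, hidden, rank)
    embedding = vocab * hidden         # input token embedding matrix
    norms = (2 * layers + 1) * hidden  # two norm weight vectors per layer + final norm
    return {"lora": lora, "lora_plus": lora + embedding + norms}


print(trainable_params())  # {'lora': 8388608, 'lora_plus': 139726848}
```

Under these assumptions the embedding matrix accounts for almost all of the extra trainable parameters, and the normalization weights add very little. Even so, the total stays at a couple of percent of a roughly 7B-parameter model.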

Empirical Evaluation

The paper provides extensive empirical evaluations demonstrating the efficacy of LongLoRA. Key results include:

- Context Extension: LongLoRA extends the context window of Llama2 7B from 4k to 100k tokens, and of Llama2 70B to 32k tokens, on a single 8× A100 machine. The fine-tuned models keep their original architectures and remain compatible with existing optimizations such as Flash-Attention2.

- Performance Metrics: Evaluation on datasets like PG19 and proof-pile shows that models fine-tuned with LongLoRA achieve perplexity values comparable to fully fine-tuned models. For instance, a Llama2 7B model fine-tuned to 32k context length achieves a perplexity of 2.50 on proof-pile, closely matching full attention fine-tuned models.

- Efficiency: Fine-tuning Llama2 7B to a 100k context length with LongLoRA costs up to 1.8× less memory and fewer training hours than conventional full fine-tuning.
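The perplexity figures in the Performance Metrics bullet use the standard definition: the exponential of the average negative log-likelihood per token. The short sketch below assumes natural-log token probabilities as input, and the function name is hypothetical. It also shows that a perplexity of 2.50 corresponds to a geometric-mean probability of 0.4 for each correct next token.

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp(average negative log-likelihood per token), natural logs."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that assigns probability 0.4 to every correct next token
print(perplexity([math.log(0.4)] * 10))  # about 2.5
```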
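Part of the savings comes from the grouped attention in S-Attn. Full attention computes N × N query-key scores per head, while N/G groups of size G compute only N × G, a reduction by a factor of N/G. Shifting half the heads does not change this count, because the shifted heads still use groups of size G. The sketch below sets the group size to a quarter of the context purely for illustration and uses a hypothetical helper name. It counts score computations, not memory. Kernels such as Flash-Attention2 avoid storing the full score matrix, so this ratio is not a derivation of the 1.8× memory figure, which comes from measured training runs.

```python
def attention_score_entries(seq_len, group_size=None):
    """Query-key score entries per head per layer (causal masking ignored).

    Full attention: N * N. Grouped attention: (N / G) groups of G * G = N * G.
    """
    if group_size is None:
        return seq_len * seq_len
    if seq_len % group_size:
        raise ValueError("seq_len must be a multiple of group_size")
    return (seq_len // group_size) * group_size * group_size


n = 100_000
full = attention_score_entries(n)
grouped = attention_score_entries(n, group_size=n // 4)  # G = N/4 is an assumption
print(full // grouped)  # 4
```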

Implications and Future Directions

LongLoRA represents a significant advancement in the domain of efficient fine-tuning for LLMs. Its ability to handle much longer context lengths with reduced computational resources opens doors for various practical applications. These include summarizing extensive documents, handling long-form question answering, and other tasks requiring substantial context comprehension.

Conceptually, the introduction of S-Attn and the enhancements to the LoRA framework suggest promising avenues for further research into efficient attention mechanisms and parameter-efficient training strategies. Future work could apply LongLoRA to other LLM architectures and position encoding schemes, further broadening its utility and impact.

Conclusion

The LongLoRA method offers a pragmatic solution to the challenge of extending the context lengths of LLMs while balancing computational efficiency and performance. The combination of S-Attn for efficient attention and the improved LoRA framework exemplifies a thoughtful approach to addressing the limitations of conventional fine-tuning methods. This work lays a solid foundation for future research aimed at optimizing LLMs for long-context applications, ensuring scalability and accessibility for broader research communities.