LongBench: A Bilingual, Multitask Benchmark for Long Context Understanding

Overview

The paper "LongBench: A Bilingual, Multitask Benchmark for Long Context Understanding" introduces LongBench, a benchmark designed to evaluate the long context understanding capabilities of large language models (LLMs). It targets a known limitation of current LLMs, which often struggle to process and understand texts longer than a few thousand tokens. LongBench provides a framework for assessing how well LLMs handle the extended sequences found in books, reports, and codebases, across two languages and a range of tasks.

Benchmark Design

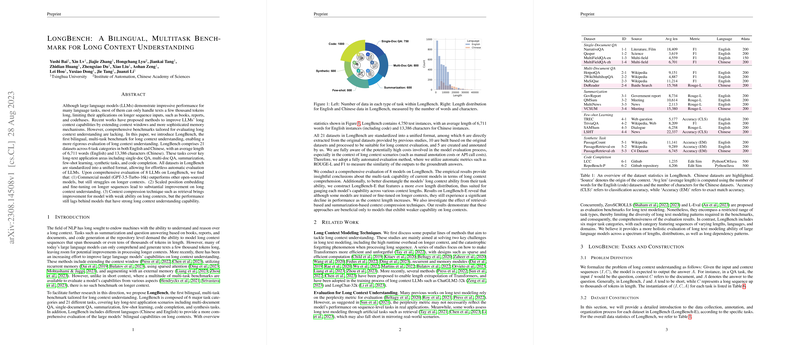

LongBench stands out for its breadth and structure: it comprises 21 datasets across six task categories: Single-Document QA, Multi-Document QA, Summarization, Few-Shot Learning, Synthetic Tasks, and Code Completion. The datasets cover both English and Chinese, so the evaluation is not limited to a single language.

- Single-Document QA and Multi-Document QA: These tasks evaluate how well models can extract and integrate information from one or several documents.

- Summarization: This category tests a model's ability to condense detailed documents into concise summaries, which requires understanding the global context.

- Few-Shot Learning: These tasks test how well LLMs can use a small number of in-context examples to perform various tasks, simulating practical constraints.

- Synthetic Tasks: These controlled tasks isolate specific long-context dependencies, which helps show how model behavior changes as the context grows.

- Code Completion: With tasks at both the file and repository level, LongBench examines how well models understand program code over extended contexts.

All datasets are standardized into a unified format for automatic evaluation, using metrics such as ROUGE-L and F1.
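
As a rough illustration of how such scores work, the sketch below computes a token-level F1 and a ROUGE-L F-measure based on the longest common subsequence. It is a minimal sketch that assumes lowercased whitespace tokenization. The function names are hypothetical, and this is not the benchmark's official scoring code, which may normalize text differently.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-level F1 over lowercased, whitespace-split tokens (an assumption)."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return 0.0
    # Multiset intersection: each shared token counts min(count_pred, count_ref) times.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def lcs_length(a: list, b: list) -> int:
    """Length of the longest common subsequence, via row-by-row dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(prediction: str, reference: str) -> float:
    """ROUGE-L F-measure: precision and recall are LCS length over each length."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return 0.0
    lcs = lcs_length(pred_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

Token-level F1 ignores word order, while ROUGE-L rewards tokens that appear in the same order as the reference, which is one reason the two are typically paired with different kinds of tasks.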

Experimental Evaluation

The paper reports an assessment of eight LLMs, spanning open-source models and commercial models such as GPT-3.5-Turbo-16k. Notable findings include:

- GPT-3.5-Turbo-16k consistently outperforms its peers, yet still encounters challenges with longer contexts.

- Techniques like scaled positional embeddings and fine-tuning on long sequences (as seen in models such as LongChat and ChatGLM2) significantly enhance long context performance.

- Context compression methods improve results for models that are weak on long contexts, but those models still lag behind models with strong native long context ability.
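
To make the idea of context compression concrete, here is a minimal sketch of one possible approach, retrieval by word overlap: the context is split into chunks, and only the chunks that share the most words with the query are kept. The choice of retrieval as the compression method, the overlap scoring, and all function names and parameters are illustrative assumptions, not the setup evaluated in the paper.

```python
def split_into_chunks(text: str, chunk_words: int = 200) -> list:
    """Split text into consecutive chunks of at most chunk_words words."""
    words = text.split()
    return [
        " ".join(words[i:i + chunk_words])
        for i in range(0, len(words), chunk_words)
    ]


def overlap_score(query: str, chunk: str) -> int:
    """Number of distinct lowercased words shared by the query and the chunk."""
    return len(set(query.lower().split()) & set(chunk.lower().split()))


def compress_context(context: str, query: str,
                     chunk_words: int = 200, top_k: int = 3) -> str:
    """Keep the top_k chunks most similar to the query, in document order."""
    chunks = split_into_chunks(context, chunk_words)
    # Rank chunk indices by overlap with the query, highest first.
    ranked = sorted(
        range(len(chunks)),
        key=lambda i: overlap_score(query, chunks[i]),
        reverse=True,
    )
    # Re-sort the selected indices so the kept chunks follow the original order.
    keep = sorted(ranked[:top_k])
    return "\n".join(chunks[i] for i in keep)


if __name__ == "__main__":
    context = "red green blue pink paris is the capital one two three four"
    print(compress_context(context, "capital paris", chunk_words=4, top_k=1))
```

A real system would more likely score chunks with a learned embedding model than with raw word overlap; the sketch only shows the overall shape of the technique, in which the model sees a shortened context.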

The analysis also uses LongBench-E, a variant of LongBench with a more even distribution of sequence lengths, to measure the models' sensitivity to length independently of task complexity.
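
One way to picture this kind of length-controlled analysis is to group per-sample scores by input length and average within each group. The sketch below assumes hypothetical bucket boundaries of 4,000 and 8,000 words and a hypothetical (length, score) sample format; neither is taken from the summary above.

```python
from collections import defaultdict
from statistics import mean


def length_bucket(n_words: int, boundaries=(4000, 8000)) -> str:
    """Return a label for the length range that n_words falls into."""
    lower = 0
    for upper in boundaries:
        if n_words < upper:
            return f"{lower}-{upper}"
        lower = upper
    return f"{lower}+"


def mean_score_by_length(samples, boundaries=(4000, 8000)) -> dict:
    """Average scores per length bucket; samples are (n_words, score) pairs."""
    grouped = defaultdict(list)
    for n_words, score in samples:
        grouped[length_bucket(n_words, boundaries)].append(score)
    return {label: mean(scores) for label, scores in grouped.items()}


if __name__ == "__main__":
    # Made-up scores purely to demonstrate the grouping, not real results.
    demo = [(1200, 0.5), (3500, 1.0), (9100, 0.25)]
    print(mean_score_by_length(demo))
```

If a model's average score drops sharply from the shortest bucket to the longest while the task mix stays comparable, that drop points to length sensitivity and not to task difficulty.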

Implications and Future Directions

LongBench has both practical and theoretical implications:

- Practical Impacts: The benchmark supports developers in identifying strengths and weaknesses in model designs, particularly for applications requiring comprehensive document understanding, code analysis, and multilingual capabilities.

- Theoretical Insights: Exploring the impact of context length on model performance could reveal deeper insights into LLMs' attention mechanisms and potential architectural improvements.

- Innovation in Model Design: The paper's conclusions suggest a need for architectures that handle longer contexts efficiently, potentially through advanced memory mechanisms or new positional encoding techniques.

With its task diversity and bilingual coverage, LongBench is a useful tool for developing more robust and adaptable LLMs that can handle real-world tasks involving long texts.

Overall, LongBench is a step forward in benchmarks tailored to the growing capabilities of LLMs, and it is relevant to both researchers and industry practitioners working on natural language processing and long-form text applications.