LogFormer: A Pre-train and Tuning Pipeline for Log Anomaly Detection

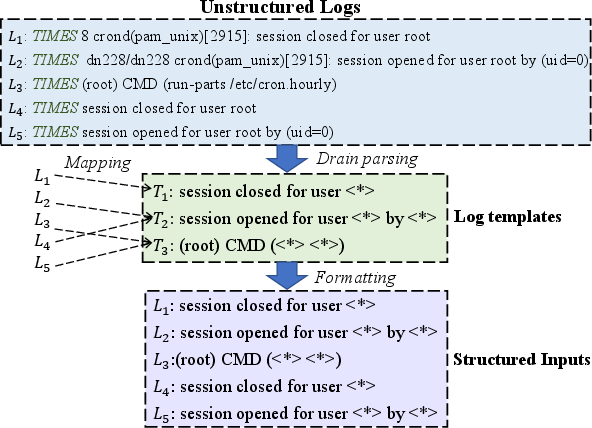

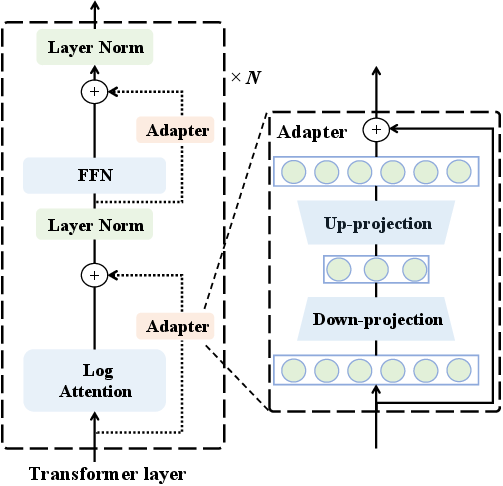

Abstract: Log anomaly detection is a key component in the field of artificial intelligence for IT operations (AIOps). Log data come from many different domains, and retraining the whole network for each unseen domain is inefficient in real industrial scenarios. However, previous deep models focus only on extracting the semantics of log sequences within a single domain, which leads to poor generalization on multi-domain logs. To alleviate this issue, we propose a unified Transformer-based framework for log anomaly detection (LogFormer) that improves generalization across domains through a two-stage process: pre-training followed by adapter-based tuning. Specifically, our model is first pre-trained on the source domain to obtain shared semantic knowledge of log data. Then, we transfer this knowledge to the target domain via shared parameters. In addition, we propose a Log-Attention module to supplement the information discarded by log parsing. The proposed method is evaluated on three public datasets and one real-world dataset. Experimental results on multiple benchmarks demonstrate the effectiveness of LogFormer, which requires fewer trainable parameters and lower training costs.
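To make the parameter-saving argument of the tuning stage concrete, the following is a minimal sketch of a generic bottleneck adapter placed after a frozen layer. It is not the LogFormer implementation: the names `FrozenLayer` and `Adapter`, the dimensions, the ReLU nonlinearity, and the zero initialisation of the up-projection are all assumptions chosen for illustration, and the abstract does not specify any of them.

```python
import random


def matvec(matrix, vec):
    """Multiply a (rows x cols) nested-list matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def relu(vec):
    return [max(0.0, v) for v in vec]


def random_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class FrozenLayer:
    """Hypothetical stand-in for a layer pre-trained on the source domain.

    Its weights are shared with the target domain and never updated.
    """

    def __init__(self, dim, rng):
        self.weight = random_matrix(dim, dim, rng)

    def forward(self, x):
        return relu(matvec(self.weight, x))

    def num_params(self):
        return len(self.weight) * len(self.weight[0])


class Adapter:
    """Generic bottleneck adapter: down-project, ReLU, up-project, residual add."""

    def __init__(self, dim, bottleneck, rng):
        self.down = random_matrix(bottleneck, dim, rng)
        # Assumption: a zero-initialised up-projection, so the adapter
        # starts out as the identity and tuning begins from the frozen model.
        self.up = [[0.0] * bottleneck for _ in range(dim)]

    def forward(self, h):
        z = relu(matvec(self.down, h))
        delta = matvec(self.up, z)
        return [hi + di for hi, di in zip(h, delta)]

    def num_params(self):
        # Down- and up-projection matrices have the same number of entries.
        return 2 * len(self.down) * len(self.down[0])


rng = random.Random(0)
dim, bottleneck = 64, 4  # illustrative sizes only
layer = FrozenLayer(dim, rng)
adapter = Adapter(dim, bottleneck, rng)

x = [rng.uniform(-1, 1) for _ in range(dim)]
h = layer.forward(x)    # frozen, source-domain computation
y = adapter.forward(h)  # only these weights would be tuned on the target domain

frozen = layer.num_params()
trainable = adapter.num_params()
print(f"frozen={frozen} trainable={trainable} ratio={trainable / frozen:.3f}")
```

With these illustrative sizes the adapter holds 512 weights against 4096 frozen ones, so only the small bottleneck matrices would need gradients during tuning. The actual savings in LogFormer depend on its real layer sizes and adapter placement, which are not given here.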

