DISC-FinLLM: A Specialized Chinese Financial LLM

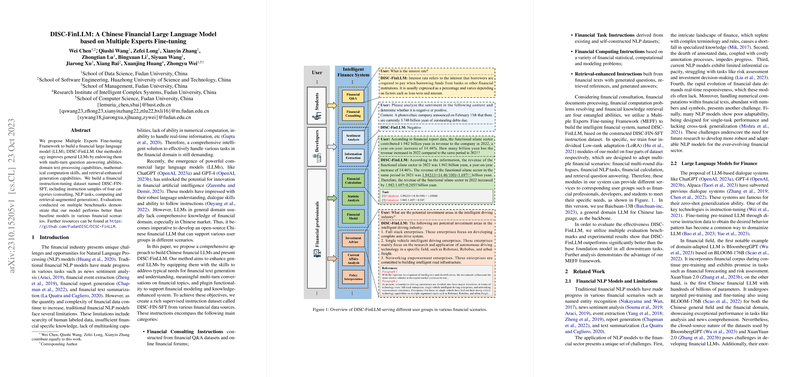

The paper "DISC-FinLLM: A Chinese Financial LLM based on Multiple Experts Fine-tuning" presents a method for building a financial LLM tailored to the Chinese market. Arguing that general-purpose LLMs lack the specialized skills the financial domain demands, the authors propose a Multiple Experts Fine-tuning Framework that equips a general LLM with domain-specific capabilities.

Methodology and Dataset Construction

The research introduces DISC-FinLLM, a financial LLM with four core capabilities: multi-turn question answering, domain-specific text processing, mathematical computation, and retrieval-augmented generation. Central to its development is DISC-FIN-SFT, a financial instruction-tuning dataset divided into four matching categories: financial consulting, financial NLP tasks, financial computation, and retrieval-enhanced generation.

- Financial Consulting Instructions: These are derived from financial Q&A datasets, forums, and ChatGPT-generated content. The goal is to mimic real-world consulting scenarios and enhance the model's conversational fluency.

- Financial Task Instructions: This category covers tasks such as sentiment analysis and event extraction. It is built from existing Chinese financial NLP datasets, which are converted into instructions with hand-crafted prompt templates in both zero-shot and few-shot formats.

- Financial Computing Instructions: This subset targets numerical computation within financial texts, with questions that train the model to call computational tools such as calculators and equation solvers.

- Retrieval-enhanced Instructions: This subset pairs generated questions with retrieved financial documents, such as research reports and news articles, training the model to answer with reference to the supplied texts.
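To make three of the instruction types more concrete, the sketch below shows one way such samples could be assembled: a prompt template rendered in zero-shot or few-shot form, a retrieval-augmented prompt that places numbered reference passages before the question, and a calculator call embedded in a computing answer. It is a minimal sketch under stated assumptions, not the DISC-FIN-SFT pipeline: the template wording, the `[Calculator(expr)->result]` call syntax, and every function name (`build_task_prompt`, `build_rag_prompt`, `calculate`, `fill_tool_calls`) are hypothetical.

```python
import ast
import operator
import re

# Hypothetical prompt template for a financial sentiment task (not from the paper).
SENTIMENT_TEMPLATE = (
    "Classify the sentiment of this financial text as positive, negative or neutral.\n"
    "Text: {text}\nAnswer:"
)


def build_task_prompt(template, text, examples=()):
    """Zero-shot if `examples` is empty; otherwise prepend solved (text, label) shots."""
    shots = "".join(template.format(text=t) + f" {label}\n\n" for t, label in examples)
    return shots + template.format(text=text)


def build_rag_prompt(question, documents):
    """Number the retrieved passages and place them before the question."""
    refs = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(documents, start=1))
    return f"Reference documents:\n{refs}\n\nQuestion: {question}\nAnswer:"


# Arithmetic operators the stand-in calculator accepts.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
    ast.Div: operator.truediv, ast.Pow: operator.pow, ast.USub: operator.neg,
}


def calculate(expr):
    """Evaluate a plain arithmetic expression without calling eval()."""
    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError(f"unsupported expression: {expr!r}")
    return ev(ast.parse(expr, mode="eval").body)


# Hypothetical call syntax: the model writes [Calculator(expr)] and the tool
# result is spliced in as [Calculator(expr)->result].
_CALL = re.compile(r"\[Calculator\((.+?)\)\]")


def fill_tool_calls(text):
    """Replace every calculator call in `text` with the call plus its rounded result."""
    def run(match):
        expr = match.group(1)
        return f"[Calculator({expr})->{round(calculate(expr), 2)}]"
    return _CALL.sub(run, text)


# Usage
print(build_task_prompt(SENTIMENT_TEMPLATE, "Q3 net profit rose 12% year on year."))
print(build_rag_prompt("What drove the profit increase?",
                       ["Excerpt from a research report.", "Excerpt from a news article."]))
print(fill_tool_calls("After two years at 5%: [Calculator(10000*(1+0.05)**2)]"))
```

Here `calculate` walks the expression with Python's `ast` module instead of using `eval`, so only arithmetic is accepted; a fuller plug-in would also need the equation solver and any other tools the dataset relies on.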

Multiple Experts Fine-tuning Framework

The Multiple Experts Fine-tuning Framework trains the model on these datasets with Low-Rank Adaptation (LoRA), building a separate LoRA module for each dataset category. DISC-FinLLM can then switch between these specialized experts as the task requires without compromising performance on the others. Because each expert is a small set of low-rank matrices trained on top of shared, frozen base weights, the design is parameter-efficient and keeps task-specific improvements isolated, so the model can be tuned for different financial scenarios independently.
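The expert-switching idea can be illustrated with the standard LoRA formulation, in which a frozen weight matrix W0 is augmented by a trainable low-rank update (alpha / r) * B @ A. The sketch below keeps one such adapter per expert on a single layer and lets the caller choose which one is active. It is a minimal pure-Python illustration that assumes a single linear layer, four hypothetical expert names mirroring the dataset categories, and arbitrary rank and alpha values; it is not DISC-FinLLM's training code.

```python
import random


def matvec(m, v):
    """Multiply matrix `m` (a list of rows) by vector `v`."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRAAdapter:
    """Low-rank update delta_W = (alpha / r) * B @ A with A: r x d_in, B: d_out x r."""

    def __init__(self, d_in, d_out, r, alpha, seed=0):
        rng = random.Random(seed)
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        # B starts at zero, so a fresh adapter leaves the base layer's output unchanged.
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def delta(self, x):
        return [self.scale * y for y in matvec(self.B, matvec(self.A, x))]


def lora_param_count(d_in, d_out, r):
    """Trainable parameters in one adapter: r * d_in (A) + d_out * r (B)."""
    return r * (d_in + d_out)


class MultiExpertLinear:
    """One frozen base weight W0 shared by several named LoRA experts."""

    def __init__(self, weight):
        self.weight = weight  # frozen: only adapter matrices would be trained
        self.experts = {}
        self.active = None    # None means "base model only"

    def add_expert(self, name, adapter):
        self.experts[name] = adapter

    def switch(self, name):
        if name is not None and name not in self.experts:
            raise KeyError(f"unknown expert: {name}")
        self.active = name

    def forward(self, x):
        h = matvec(self.weight, x)
        if self.active is None:
            return h
        d = self.experts[self.active].delta(x)
        return [a + b for a, b in zip(h, d)]


# Usage: a 4x4 identity layer with four hypothetical experts, one per category.
layer = MultiExpertLinear([[float(i == j) for j in range(4)] for i in range(4)])
for i, name in enumerate(["consulting", "task", "computing", "retrieval"]):
    layer.add_expert(name, LoRAAdapter(d_in=4, d_out=4, r=2, alpha=4, seed=i))
layer.switch("computing")
print(layer.forward([1.0, 2.0, 3.0, 4.0]))  # equals the base output until B is trained

# Parameter budget for one 4096 x 4096 projection at rank 8.
full, lora = 4096 * 4096, lora_param_count(4096, 4096, 8)
print(f"{lora} / {full} = {lora / full:.2%}")
```

Because each expert is a separate (A, B) pair over the same frozen W0, adding or retraining one expert leaves the others untouched, and each adapter in this example costs well under one percent of the full matrix's parameters.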

Evaluation and Results

The model's performance is evaluated against several benchmarks:

- Financial NLP Tasks: Using the FinCUGE benchmark, DISC-FinLLM exhibits significant performance improvements across six evaluated tasks compared to baseline models. This underscores the effectiveness of the task-specific datasets.

- Human Tests: On the FinEval benchmark of financial multiple-choice questions, the model is more accurate than the other LLMs evaluated, trailing only GPT-4 and ChatGPT.

- Computation and Retrieval: The model performs well on computation-intensive questions and retrieval-based evaluations, indicating that its computational and retrieval plug-ins are well integrated and that it adapts to varied financial contexts.
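Multiple-choice benchmarks such as FinEval are typically scored by accuracy: extract the option the model chose and compare it with the gold answer. The sketch below shows that computation under a deliberately simple, hypothetical extraction rule (the first capital letter A to D not adjacent to another Latin letter); FinEval's own scoring script may parse answers differently, and the function names are illustrative only.

```python
import re

# Hypothetical rule: first capital A-D not adjacent to another Latin letter,
# so "答案是B" and "B. 4%" both yield "B" but "Bond" does not.
_CHOICE = re.compile(r"(?<![A-Za-z])([A-D])(?![A-Za-z])")


def extract_choice(response):
    """Return the chosen option letter, or None if no option is found."""
    match = _CHOICE.search(response)
    return match.group(1) if match else None


def accuracy(responses, gold):
    """Fraction of responses whose extracted option matches the gold option."""
    if len(responses) != len(gold):
        raise ValueError("responses and gold answers must have the same length")
    if not gold:
        return 0.0
    correct = sum(extract_choice(r) == g for r, g in zip(responses, gold))
    return correct / len(gold)


# Usage with made-up responses: the third has no recognisable option letter.
responses = ["答案是B", "C. Convertible bond", "I am not sure"]
print(accuracy(responses, ["B", "A", "D"]))
```

A rule this simple will misread answers that open with an English article such as "A bond is...", which is one reason published benchmarks usually constrain the answer format or ship their own parser.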

Implications and Future Directions

DISC-FinLLM marks a substantial step forward in building specialized LLMs for financial applications in non-English markets. It strengthens capabilities that matter to financial professionals, with potential applications in customer support, investment advisory, and financial analysis. Its modular architecture also suggests ways to customize the model further and to carry the approach into other specialized domains.

Future research may focus on broadening the dataset's scope and integrating real-time financial data, which would make the model more useful in fast-moving financial environments. Cross-lingual adaptation could also extend the reach of such domain-specific LLMs beyond Chinese. More broadly, the paper lays a foundation for further work on expert-based fine-tuning in specialized fields.