DISC-LawLLM: Advancing Legal Services with LLMs

The paper introduces DISC-LawLLM, a system designed to use LLMs for a wide range of intelligent legal services. The model is fine-tuned with a legal syllogism prompting strategy to improve legal reasoning in the Chinese judicial context. A retrieval module lets DISC-LawLLM draw on external legal knowledge, so that its answers can keep pace with laws and legal databases that change over time.
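A legal syllogism applies a major premise (the governing rule) to a minor premise (the facts of the case) to reach a conclusion. The sketch below is a minimal illustration, assuming a simple plain-text template, of how a prompt and a reference answer might be laid out in that order. `SyllogismSample`, `build_syllogism_prompt`, `format_target`, and the template wording are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class SyllogismSample:
    """Hypothetical container for one legal-syllogism training example."""
    facts: str       # minor premise: the facts of the case
    provision: str   # major premise: the applicable legal rule
    conclusion: str  # outcome derived by applying the rule to the facts


def build_syllogism_prompt(question: str, provision: str) -> str:
    """Assemble an instruction asking the model to reason as a legal syllogism.

    The wording is an illustrative assumption, not the paper's actual prompt.
    """
    return (
        f"Question: {question}\n"
        f"Major premise (applicable law): {provision}\n"
        "Minor premise (facts): identify the legally relevant facts.\n"
        "Conclusion: apply the major premise to the facts and state the outcome."
    )


def format_target(sample: SyllogismSample) -> str:
    """Render a reference answer in major premise, minor premise, conclusion order."""
    return (
        f"Major premise: {sample.provision}\n"
        f"Minor premise: {sample.facts}\n"
        f"Conclusion: {sample.conclusion}"
    )
```

Fixing the order of the three parts in the target text is what nudges a fine-tuned model to state the rule and the facts before committing to an answer.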

Methodology and Dataset Construction

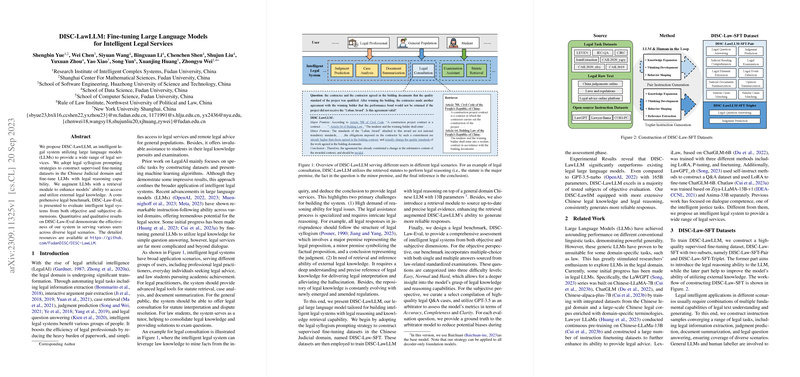

The authors construct a supervised fine-tuning dataset, DISC-Law-SFT, consisting of two subsets: one targeting legal reasoning and one targeting the integration of domain-specific legal knowledge. The data are drawn from several sources, including public legal NLP task datasets, raw legal text, and open-source instruction datasets. The authors use GPT-3.5-turbo to rewrite outputs so that they follow the structure of a legal syllogism, and to build instruction samples for tasks such as legal information extraction, judgment prediction, and text summarization.
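To make the construction step concrete, here is a minimal sketch of turning one raw task record into an instruction sample. The schema (`instruction`, `input`, `output`, optional `reference`), the `TASK_INSTRUCTIONS` wording, and the `to_instruction_sample` helper are all hypothetical. The `rewrite` argument is a placeholder for the GPT-3.5-turbo restyling step; it defaults to returning the answer unchanged, so no API is called.

```python
from typing import Callable, Optional

# Hypothetical task instructions; the paper's actual wording is not reproduced here.
TASK_INSTRUCTIONS = {
    "judgment_prediction": "Based on the case facts, predict the charge.",
    "information_extraction": "Extract the key legal entities from the text.",
    "summarization": "Summarize the following legal document.",
}


def to_instruction_sample(
    text: str,
    answer: str,
    task: str,
    rewrite: Callable[[str], str] = lambda s: s,
    reference: Optional[str] = None,
) -> dict:
    """Turn one raw (text, answer) record into a hypothetical SFT sample.

    `rewrite` stands in for an LLM call that restyles the answer as a legal
    syllogism; by default it is the identity function. When `reference` is
    given, the sample also carries supporting legal text, one way to teach
    the model to ground answers in external knowledge.
    """
    if task not in TASK_INSTRUCTIONS:
        raise ValueError(f"unknown task: {task}")
    sample = {
        "instruction": TASK_INSTRUCTIONS[task],
        "input": text,
        "output": rewrite(answer),
    }
    if reference is not None:
        sample["reference"] = reference
    return sample
```

Passing the rewriting step in as a function keeps the data pipeline testable offline; in practice it would wrap a chat-completion call with a syllogism-style instruction.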

Training and Model Architecture

DISC-LawLLM is built in two stages: supervised fine-tuning (SFT) and retrieval augmentation. The base model is Baichuan-13B-Base, with 13.2 billion parameters, which is fine-tuned on DISC-Law-SFT. Retrieval augmentation adds an external retrieval module that queries a legal knowledge base when answering. Because that knowledge base can be updated without retraining the model, DISC-LawLLM can draw on current legal provisions instead of relying only on what it learned during training.
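The retrieval step can be illustrated with a small, self-contained sketch. It assumes the knowledge base is a plain list of provision texts and ranks them by bag-of-words cosine similarity; this is a stand-in chosen for brevity, not the retriever used in the paper, and `retrieve` and `augment_prompt` are hypothetical names.

```python
import math
from collections import Counter


def _vector(text: str) -> Counter:
    # Whitespace tokenization is a simplification; Chinese legal text would
    # need a word segmenter or character n-grams instead.
    return Counter(text.lower().split())


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, knowledge_base: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query."""
    q = _vector(query)
    ranked = sorted(knowledge_base, key=lambda doc: _cosine(q, _vector(doc)), reverse=True)
    return ranked[:k]


def augment_prompt(query: str, knowledge_base: list[str], k: int = 2) -> str:
    """Prepend the retrieved passages so the model can ground its answer in them."""
    refs = retrieve(query, knowledge_base, k)
    context = "\n".join(f"[{i + 1}] {r}" for i, r in enumerate(refs))
    return f"Reference provisions:\n{context}\n\nQuestion: {query}"
```

Because the knowledge base is just data, new or amended provisions can be appended to the list and become retrievable immediately, with no change to the model weights. A production system would more likely use dense embeddings and a vector index.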

Evaluation Framework

The authors also propose an evaluation framework, DISC-Law-Eval, with both objective and subjective components. The objective evaluation tests legal knowledge and reasoning with multiple-choice questions drawn from various legal exams. The subjective evaluation uses a question-answering format in which GPT-3.5 acts as a judge, scoring each answer for accuracy, completeness, and clarity.
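The two kinds of scores can be sketched as follows, assuming multiple-choice answers are represented as sets of option letters and the judge's ratings have already been collected as one dictionary per answer. Exact set matching for multiple-answer questions, and the helper names `mcq_accuracy` and `average_judge_scores`, are assumptions for illustration rather than the benchmark's published scoring code.

```python
from statistics import mean


def mcq_accuracy(predictions: list[set[str]], answers: list[set[str]]) -> float:
    """Fraction of questions whose predicted option set exactly matches the key.

    Exact set matching (no partial credit) is an assumption in this sketch.
    """
    if len(predictions) != len(answers) or not answers:
        raise ValueError("predictions and answers must be non-empty and aligned")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


def average_judge_scores(scores: list[dict[str, float]]) -> dict[str, float]:
    """Average the LLM judge's per-dimension scores over all answers."""
    if not scores:
        raise ValueError("no scores to average")
    dims = scores[0].keys()
    return {d: mean(s[d] for s in scores) for d in dims}
```

Keeping the two metrics separate mirrors the framework's split: accuracy measures what the model knows, while the averaged judge scores summarize answer quality along each dimension.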

Results and Implications

In the objective evaluation, DISC-LawLLM substantially outperforms existing general-purpose and legal LLMs, and even surpasses GPT-3.5-turbo in several legal domains. These results point to stronger legal reasoning, particularly on complex legal tasks. In the subjective evaluation, DISC-LawLLM achieves higher average scores across the assessed dimensions, suggesting that it is applicable to real-world scenarios.

Practical and Theoretical Contributions

From a practical perspective, DISC-LawLLM offers clear advantages over traditional legal service systems: it can simplify tasks for legal professionals, make legal consultation more accessible to the public, and support law students in their studies. Theoretically, the paper contributes to LegalAI by showing that fine-tuning on legal syllogism data, combined with retrieval, can strengthen LLM capabilities in a specialized domain.

Future Directions

This paper opens avenues for extending DISC-LawLLM to other legal systems and languages, with the potential to integrate even broader repositories of legal knowledge. Future developments could explore multi-modal inputs and deeper integration with court databases to further enrich the system's applicability and reliability in diverse legal contexts.

Overall, DISC-LawLLM represents a significant step forward in utilizing LLMs for legal applications, setting a robust foundation for future advancements in AI-driven legal services.