Overview of FinLLMs: Evolution and Evaluation in Financial NLP

The paper "A Survey of Large Language Models in Finance (FinLLMs)" surveys the development and current state of financial large language models (FinLLMs). Focusing on the transition from general-domain LLMs to models specific to the financial domain, the paper examines emerging techniques, evaluates performance on financial tasks, and discusses the opportunities and challenges facing FinLLMs.

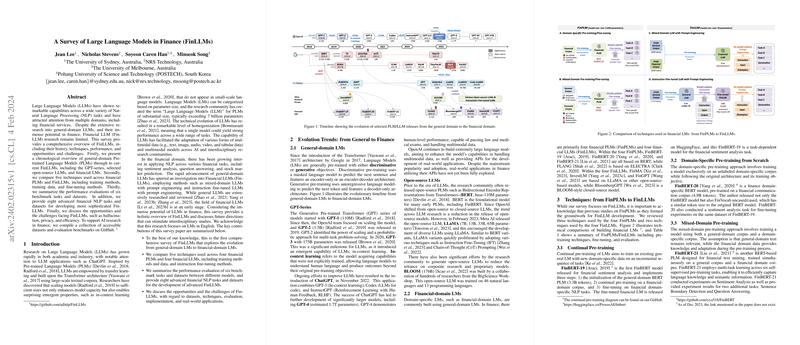

Evolutionary Trends in FinLLMs

FinLLMs are built on the foundations of general-domain LLMs. The evolution begins with the popularization of the Transformer architecture, which enabled pre-training for either discriminative or generative language modeling. The survey highlights the development of the GPT-series models, noting capability improvements such as in-context learning, which was not possible with their smaller predecessors. It also emphasizes the role of open-source initiatives, despite a trend towards closed-source models, and marks the introduction of models such as LLaMA and BLOOM as pivotal for domain-specific adaptation.

Financial-domain models are typically built on these general LLMs. The survey identifies key financial PLMs and LLMs such as FinBERT, FLANG, FinMA, and BloombergGPT, which are distinguished by the finance-specific datasets used in their training. The techniques employed include continual pre-training, mixed-domain pre-training, and instruction fine-tuning, alongside prompt engineering, which helps improve the performance of these models on financial tasks.

Techniques for Developing FinLLMs

The paper categorizes the techniques used for FinLLM development into distinct approaches:

- Continual Pre-training and Domain-Specific Pre-training: Both rely on domain-specific data. Continual pre-training further trains an existing LM on financial text to integrate knowledge incrementally, whereas domain-specific pre-training trains a model from scratch on financial text.

- Mixed-Domain Pre-training and Instruction Fine-Tuning: Mixed-domain models are pre-trained on both general and domain-specific data, whereas instruction fine-tuning uses instruction-formatted datasets to steer the model towards financial tasks.
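Mixed-domain pre-training can be pictured as a sampling problem: each training document is drawn from either the general or the financial corpus. The sketch below is only an illustration under that assumption. The function `mix_corpora` and the parameter `financial_ratio` are hypothetical names, and the sketch does not reproduce the data pipeline of any model named in the survey.

```python
import random


def mix_corpora(general_docs, financial_docs, financial_ratio, n_samples, seed=0):
    """Build a training stream mixing two corpora.

    Each sample comes from `financial_docs` with probability
    `financial_ratio` (a hypothetical knob), otherwise from `general_docs`.
    """
    if not 0.0 <= financial_ratio <= 1.0:
        raise ValueError("financial_ratio must be between 0 and 1")
    if not general_docs or not financial_docs:
        raise ValueError("both corpora must be non-empty")

    rng = random.Random(seed)  # local generator keeps the stream reproducible
    stream = []
    for _ in range(n_samples):
        source = financial_docs if rng.random() < financial_ratio else general_docs
        stream.append(rng.choice(source))
    return stream


if __name__ == "__main__":
    general = ["The weather was mild.", "The team won the match."]
    financial = ["Shares rose after earnings.", "The bond yield fell."]
    for doc in mix_corpora(general, financial, financial_ratio=0.5, n_samples=4):
        print(doc)
```

Setting `financial_ratio` to 1.0 reduces this to sampling financial text only, and setting it to 0.0 yields a purely general stream.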

The paper illustrates how these methods support diverse applications within finance, such as sentiment analysis and stock prediction. These applications also benefit from strategies such as prompt engineering, which improves model outputs without updating model weights.
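As a rough illustration of prompt engineering for sentiment analysis, the sketch below assembles a few-shot prompt from labeled examples. The function name `build_few_shot_prompt`, the label set, and the prompt wording are assumptions made for this example, not a format prescribed by the survey.

```python
def build_few_shot_prompt(examples, query, labels=("positive", "negative", "neutral")):
    """Assemble a few-shot sentiment prompt.

    `examples` is a list of (sentence, label) pairs shown to the model
    as demonstrations; `query` is the sentence to classify.
    """
    lines = [
        "Classify the sentiment of each financial sentence as "
        + ", ".join(labels) + ".",
        "",
    ]
    for text, label in examples:
        if label not in labels:
            raise ValueError(f"unknown label: {label}")
        lines.append(f"Sentence: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final block is left open so the model completes the label.
    lines.append(f"Sentence: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


if __name__ == "__main__":
    demos = [
        ("Operating profit rose compared with last year.", "positive"),
        ("The company cut its full-year guidance.", "negative"),
    ]
    print(build_few_shot_prompt(demos, "The board meets on Tuesday."))
```

The resulting string can be passed to any text-completion model. No weights change, so the demonstrations alone steer the output.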

Benchmark Evaluation

Consistent evaluation frameworks are essential for gauging the performance of FinLLMs. The survey evaluates models on six financial NLP benchmark tasks, including Sentiment Analysis (SA), Text Classification (TC), and Named Entity Recognition (NER), using datasets such as Financial PhraseBank and FiQA, as well as conversational datasets such as ConvFinQA.

Benchmarking results indicate that mixed-domain FinPLMs perform strongly on less complex tasks such as SA and TC, while task-specific models may still outperform LLMs on more complex tasks such as numerical reasoning and stock movement prediction. Current FinLLMs such as FinMA and BloombergGPT do well on certain tasks but remain limited relative to more specialized solutions.
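For classification-style tasks such as SA and TC, accuracy and macro-averaged F1 are commonly reported metrics. The sketch below computes both in plain Python. It is a minimal illustration with made-up labels, not the survey's evaluation code, and it does not restate which metric the survey reports for each task.

```python
from collections import Counter


def accuracy(gold, pred):
    """Fraction of predictions that match the gold labels."""
    if len(gold) != len(pred) or not gold:
        raise ValueError("gold and pred must be non-empty and equal in length")
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def macro_f1(gold, pred):
    """Unweighted mean of per-class F1 over all labels seen in gold or pred."""
    if len(gold) != len(pred) or not gold:
        raise ValueError("gold and pred must be non-empty and equal in length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[g] += 1  # gold class gains a false negative
    scores = []
    for label in sorted(set(gold) | set(pred)):
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    gold = ["positive", "negative", "neutral", "positive"]
    pred = ["positive", "negative", "positive", "positive"]
    print(accuracy(gold, pred), macro_f1(gold, pred))
```

Macro averaging weights every class equally, which matters when one sentiment class dominates the dataset.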

Challenges and Future Directions

Significant challenges remain in improving FinLLMs. These include ensuring data privacy and minimizing hallucinations while improving performance in multi-modal contexts. Techniques such as Retrieval-Augmented Generation (RAG) offer a promising route to mitigating these issues by dynamically retrieving data that the model did not see during pre-training.
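The retrieval step of RAG can be sketched with a toy example: score each document against the query, keep the top matches, and place them in the prompt as context. The bag-of-words cosine similarity used here is a deliberate simplification chosen to keep the example self-contained. Practical systems typically use learned embeddings, and all function names and prompt wording below are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_rag_prompt(query, documents, k=2):
    """Prepend retrieved context to the question before it reaches the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    docs = [
        "Acme Corp reported quarterly revenue of 5 million dollars.",
        "The central bank left interest rates unchanged.",
    ]
    print(build_rag_prompt("What revenue did Acme report?", docs, k=1))
```

Because the context is fetched at query time, the document store can be updated without retraining the model.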

The paper highlights the need for domain-relevant evaluation metrics and validation by human experts, proposing a shift from traditional NLP metrics to metrics that better reflect the judgment of financial experts. It also stresses the importance of cross-disciplinary integration, which could open pathways to hybrid models that draw on legal, economic, and financial datasets.

Conclusion

The survey serves as a reference for researchers seeking to understand the current scope and future potential of FinLLMs. By reviewing both existing models and the challenges they face, the paper outlines a roadmap for future FinLLM research and development, emphasizing the need for adaptive techniques and robust evaluation metrics. The additional datasets and novel financial NLP tasks collected in the paper could stimulate interdisciplinary studies and practical applications in AI-driven finance.