DISC-MedLLM: Bridging LLMs and Medical Consultation

The paper presents DISC-MedLLM, an approach that fine-tunes large language models (LLMs) to give reliable and accurate medical responses in conversational healthcare settings. The research aims to close the gap between the capabilities of general-purpose LLMs and the nuanced requirements of medical consultation.

Methodology

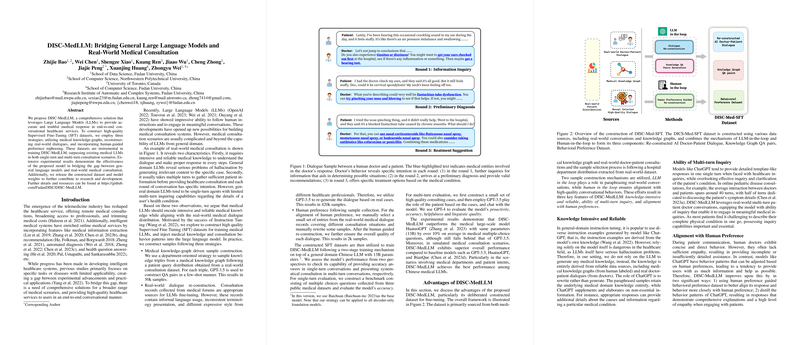

DISC-MedLLM employs a two-stage Supervised Fine-Tuning (SFT) process to enhance LLM performance in medical contexts. Training uses a custom dataset built with three strategies:

- Medical Knowledge Graphs: The researchers utilize department-oriented sampling from medical knowledge graphs to create knowledge-intensive QA pairs. This ensures that the dataset is grounded in reliable medical information.

- Dialogue Re-Construction: Real-world dialogues from medical forums are adapted using GPT-3.5 for better linguistic alignment. This re-construction removes informal language and inconsistencies, enhancing data quality for effective model training.

- Human Preference Rephrasing: A carefully curated subset of real-world dialogues is refined according to human preferences, aligning the model's behavior with the desired conversational standards.
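The first strategy above, department-oriented sampling, can be illustrated with a minimal sketch. The summary does not describe the exact procedure, so everything here is an assumption made for illustration: the toy triples, the `TEMPLATES` mapping, and the helper names `sample_by_department` and `to_qa_seed` are hypothetical. The idea shown is simply that drawing a fixed number of facts per department keeps the resulting QA seeds spread across specialties rather than dominated by the largest one.

```python
import random
from collections import defaultdict

# Hypothetical toy triples: (department, head entity, relation, tail entity).
TRIPLES = [
    ("cardiology", "hypertension", "symptom", "headache"),
    ("cardiology", "hypertension", "treatment", "lifestyle changes"),
    ("cardiology", "angina", "symptom", "chest pain"),
    ("dermatology", "eczema", "symptom", "itchy skin"),
    ("dermatology", "eczema", "treatment", "moisturizer"),
]

# Hypothetical question templates keyed by relation type.
TEMPLATES = {
    "symptom": "What are common symptoms of {head}?",
    "treatment": "How is {head} usually treated?",
}


def sample_by_department(triples, per_department, seed=0):
    """Draw up to `per_department` triples from each department."""
    rng = random.Random(seed)  # seeded for reproducible sampling
    by_dept = defaultdict(list)
    for triple in triples:
        by_dept[triple[0]].append(triple)

    sampled = []
    for dept in sorted(by_dept):  # sorted so the order is deterministic
        pool = by_dept[dept]
        k = min(per_department, len(pool))
        sampled.extend(rng.sample(pool, k))
    return sampled


def to_qa_seed(triple):
    """Turn one triple into a question plus the fact an answer should cover."""
    department, head, relation, tail = triple
    return {
        "department": department,
        "question": TEMPLATES[relation].format(head=head),
        "answer_fact": tail,
    }


qa_seeds = [to_qa_seed(t) for t in sample_by_department(TRIPLES, per_department=2)]
for seed_item in qa_seeds:
    print(seed_item)
```

In a real pipeline, each seed would presumably be expanded into a full question-answer pair, for example by a language model, before being used for fine-tuning; that step is outside this sketch.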

Evaluation

The paper assesses DISC-MedLLM in both single-turn and multi-turn scenarios. For single-turn evaluation, a benchmark of multiple-choice questions is drawn from public medical datasets and scored by accuracy. For multi-turn evaluation, GPT-3.5 simulates the patient, and GPT-4 rates the model’s responses on proactivity, accuracy, helpfulness, and linguistic quality.
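The two evaluation modes reduce to simple aggregations, sketched below under stated assumptions. The function names, the `CRITERIA` keys, and the example scores are hypothetical and chosen only for illustration; the summary does not specify the rating scale the judge uses or how scores are combined, so a plain per-criterion mean is assumed here.

```python
from statistics import mean

# The four criteria named in the evaluation; key spellings are an assumption.
CRITERIA = ("proactivity", "accuracy", "helpfulness", "linguistic_quality")


def multiple_choice_accuracy(predictions, answers):
    """Fraction of questions where the predicted option matches the key."""
    if not answers or len(predictions) != len(answers):
        raise ValueError("predictions and answers must be non-empty and equal in length")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


def average_judge_scores(dialogue_scores):
    """Average each criterion over all judged dialogues."""
    if not dialogue_scores:
        raise ValueError("at least one judged dialogue is required")
    return {c: mean(scores[c] for scores in dialogue_scores) for c in CRITERIA}


# Single-turn: three of four made-up predictions match the answer key.
accuracy = multiple_choice_accuracy(["A", "B", "C", "D"], ["A", "B", "D", "D"])
print(accuracy)  # 0.75

# Multi-turn: made-up judge ratings for two simulated consultations.
judged = [
    {"proactivity": 4, "accuracy": 5, "helpfulness": 4, "linguistic_quality": 5},
    {"proactivity": 2, "accuracy": 3, "helpfulness": 4, "linguistic_quality": 5},
]
averages = average_judge_scores(judged)
print(averages)
```

Reporting each criterion separately, rather than a single pooled number, keeps it visible when a model is, say, fluent but not proactive.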

Results

DISC-MedLLM shows a notable improvement over existing medical models, such as HuatuoGPT, in both multiple-choice accuracy and multi-turn dialogue evaluation. However, it still lags behind GPT-3.5 in some areas, indicating room for further refinement.

Implications and Future Work

This research illustrates the potential of combining domain-specific knowledge with LLMs for practical use in the medical field. The release of both the dataset and the model weights provides valuable resources for future work on AI in healthcare.

Future work could explore retrieval-augmented techniques to draw on broader knowledge bases when answering complex medical inquiries. Refining how well LLMs align with human-like empathy and response strategies could also increase their usefulness in medical consultations.

In conclusion, DISC-MedLLM is a step towards bridging the gap between general language understanding and domain-specific application, and it lays a foundation for future work on AI-driven medical consultation.