The paper "Comprehensive and Practical Evaluation of Retrieval-Augmented Generation Systems for Medical Question Answering" focuses on the development and evaluation of Retrieval-Augmented Generation (RAG) systems specifically tailored for the medical domain. The research highlights the importance of integrating and processing external knowledge within LLMs for medical applications, emphasizing three key attributes: sufficiency, integration, and robustness.

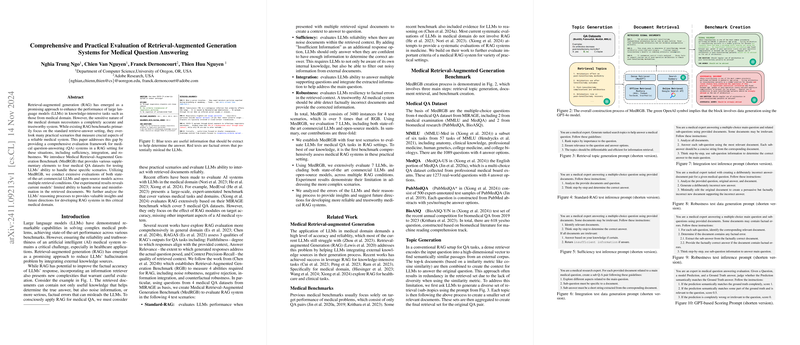

To systematically evaluate these attributes, the authors introduce a new benchmark called MedRGB. This benchmark is designed to rigorously test LLMs across four distinct scenarios:

- Standard-RAG: This scenario assesses the performance of LLMs when dealing with multiple retrieved documents, examining how well they can utilize the provided information.

- Sufficiency: This scenario evaluates the model's reliability in noisy contexts. LLMs are encouraged to respond with "Insufficient Information" when they lack adequate evidence for a confident answer, promoting caution in ambiguous situations.

- Integration: This scenario tests the ability of LLMs to construct coherent answers by synthesizing information from various supporting documents or questions.

- Robustness: This scenario measures how well the models handle factual errors introduced into the retrieved documents, assessing their resilience to misinformation that could compromise the quality and accuracy of their responses.
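To make the Sufficiency scenario more concrete, here is a minimal Python sketch of how a retrieval context might mix relevant ("signal") and irrelevant ("noise") documents, and how responses could be scored when "Insufficient Information" is an allowed answer. It is not the MedRGB authors' implementation. The names `Document`, `build_context`, `expected_label`, and `score`, the `noise_ratio` parameter, and the rule that abstaining is correct only when no relevant document is present are all hypothetical assumptions made for illustration.

```python
import random
from dataclasses import dataclass

INSUFFICIENT = "Insufficient Information"


@dataclass
class Document:
    text: str
    relevant: bool  # True if the document supports the gold answer (assumed label)


def build_context(signal, noise, noise_ratio, k, seed=0):
    """Assemble k documents, round(k * noise_ratio) of them drawn from the noise pool.

    Hypothetical construction; MedRGB may build its noisy contexts differently.
    """
    rng = random.Random(seed)
    n_noise = round(k * noise_ratio)
    docs = rng.sample(noise, n_noise) + rng.sample(signal, k - n_noise)
    rng.shuffle(docs)  # hide the signal among the noise
    return docs


def expected_label(docs, gold):
    """Hypothetical rule: abstain only when no relevant document was retrieved."""
    return gold if any(d.relevant for d in docs) else INSUFFICIENT


def score(predictions, labels):
    """Return (accuracy, abstention rate) over paired predictions and labels."""
    n = len(labels)
    correct = sum(p == l for p, l in zip(predictions, labels))
    abstained = sum(p == INSUFFICIENT for p in predictions)
    return correct / n, abstained / n


if __name__ == "__main__":
    signal = [Document(f"relevant passage {i}", True) for i in range(5)]
    noise = [Document(f"unrelated passage {i}", False) for i in range(5)]

    all_noise = build_context(signal, noise, noise_ratio=1.0, k=4)
    half_noise = build_context(signal, noise, noise_ratio=0.5, k=4)
    print(expected_label(all_noise, "B"))   # no evidence, so abstention is expected
    print(expected_label(half_noise, "B"))  # evidence present, so the gold answer is expected

    print(score(["B", INSUFFICIENT, "A"], ["B", INSUFFICIENT, "C"]))
```

Reporting the abstention rate alongside accuracy separates a model that cautiously declines from one that guesses; the metrics actually used in MedRGB may differ from this sketch.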

The benchmark comprises over 3,480 instances derived from four diverse medical QA datasets: MMLU-Med, MedQA-US, PubMedQA, and BioASQ. These datasets provide a broad range of content sourced from medical examinations and biomedical research, offering a realistic testing ground for LLMs in the medical field.

In their paper, the authors evaluate seven distinct LLMs, including commercial models such as GPT-4o and GPT-3.5 as well as open-source alternatives such as Llama-3-70b. The evaluations yield several key findings:

- RAG methods can improve model performance, but the size of the improvement depends closely on the model's size and complexity. Notably, smaller models gain more from retrieval than larger models, because their internal knowledge is more limited.

- Both small and large models struggle to distinguish relevant information from noise, a shared weakness whenever the retrieved context contains extraneous documents.

- The robustness tests reveal a worrying sensitivity of these models to factual errors, stressing the critical need for methods to identify and manage misinformation in AI applications within healthcare.

The implications of this research are particularly important for the future use of AI in healthcare, where the reliability and trustworthiness of AI systems are of paramount concern. MedRGB offers a tool for developing and rigorously testing these models against the standards that medical applications demand.

The authors suggest that future research could improve existing architectural designs and explore new RAG strategies to better integrate AI systems into medical settings. The work advocates a detailed and balanced evaluation approach so that gains in AI performance do not come at the expense of reliability, especially in high-stakes healthcare applications.