CRUD-RAG: A Comprehensive Chinese Benchmark for Retrieval-Augmented Generation of LLMs

Authors: Yuanjie Lyu, Zhiyu Li, Simin Niu, Feiyu Xiong, Bo Tang, Wenjin Wang, Hao Wu, Huanyong Liu, Tong Xu, Enhong Chen, Yi Luo, Peng Cheng, Haiying Deng, Zhonghao Wang, Zijia Lu

The paper "CRUD-RAG: A Comprehensive Chinese Benchmark for Retrieval-Augmented Generation of LLMs" examines how to evaluate Retrieval-Augmented Generation (RAG) systems, which augment LLMs with knowledge retrieved from external sources. The paper proposes a new benchmark for RAG systems that addresses a key shortcoming of current evaluation methods: they focus narrowly on question answering (QA) tasks.

Motivation and Contribution

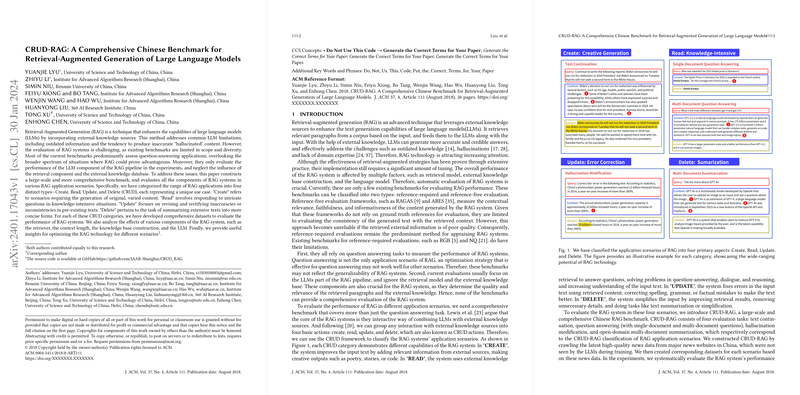

RAG helps LLMs overcome limitations such as outdated information and hallucinations, but existing RAG benchmarks are narrow in scope, focusing mainly on QA and on the LLM alone. To address this, the authors constructed CRUD-RAG, a benchmark that evaluates all components of RAG systems, including the retriever and the external knowledge base as well as the LLM, across different application scenarios. CRUD-RAG uses the CRUD actions (Create, Read, Update, and Delete) to categorize RAG applications, which ensures a wide-ranging evaluation. The paper's main contributions are:

- A Comprehensive Benchmark: CRUD-RAG is designed not only for QA but for various RAG applications categorized by CRUD actions.

- High-Quality Datasets: It includes diverse datasets for different evaluation tasks: text continuation, multi-document summarization, single and multi-document QA, and hallucination modification.

- Extensive Experiments: Evaluations of RAG systems with multiple metrics, yielding practical insights for optimizing RAG pipelines.

Methodology and Dataset Construction

News Collection and Dataset Construction

The benchmark is built from recent, high-quality Chinese news. Because these articles are unlikely to appear in the LLMs' pretraining data, the models cannot fall back on memorized knowledge, so RAG systems must rely on retrieval to generate their responses. The datasets include 86,834 documents and cover tasks across the CRUD spectrum:

- Text Continuation (Create): Evaluates creative content generation.

- Question Answering (Read; questions that require 1, 2, or 3 documents to answer): Evaluates knowledge-intensive applications.

- Hallucination Modification (Update): Focuses on error correction.

- Multi-Document Summarization (Delete): Evaluates condensing multiple long documents into a concise summary.
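The CRUD grouping of the tasks listed above can be captured in a small lookup table. The sketch below is illustrative only: the task identifiers are hypothetical labels chosen for this example, not file or field names from the CRUD-RAG release.

```python
from enum import Enum


class CrudAction(Enum):
    CREATE = "Create"
    READ = "Read"
    UPDATE = "Update"
    DELETE = "Delete"


# Hypothetical task labels mapped to the CRUD action each one represents.
TASKS = {
    "text_continuation": CrudAction.CREATE,
    "qa_1_document": CrudAction.READ,
    "qa_2_document": CrudAction.READ,
    "qa_3_document": CrudAction.READ,
    "hallucination_modification": CrudAction.UPDATE,
    "multi_document_summarization": CrudAction.DELETE,
}


def tasks_for(action: CrudAction) -> list[str]:
    """Return the tasks grouped under one CRUD action, in insertion order."""
    return [name for name, act in TASKS.items() if act is action]


print(tasks_for(CrudAction.READ))
# ['qa_1_document', 'qa_2_document', 'qa_3_document']
```

Grouping by action makes it easy to report scores per CRUD category rather than per individual task.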

Evaluation Metrics

To measure the effectiveness of RAG systems, this benchmark employs both traditional overlap and semantic-similarity metrics (BLEU, ROUGE-L, BERTScore) and a tailored key-information metric, RAGQuestEval. Inspired by QuestEval, RAGQuestEval generates questions from the reference text, answers them using both the reference and the generated text, and compares the answers to measure the factual consistency between the two texts.
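To make the two families of metrics concrete, here is a minimal Python sketch. It computes ROUGE-L F1 from the longest common subsequence and aggregates RAGQuestEval-style scores. It assumes that question generation and answering (done by a model in the paper) have already produced, for each question, an answer from the reference text and an answer from the generated text, or `None` when the generated text cannot answer it. Character-level tokenization and the names `rouge_l_f1`, `token_f1`, and `quest_scores` are assumptions for illustration, and the paper's exact aggregation may differ.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple


def chars(text: str) -> list[str]:
    # Assumption: character-level tokens (common for Chinese); whitespace dropped.
    return [c for c in text if not c.isspace()]


def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    # Longest common subsequence via dynamic programming, one row at a time.
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F1: harmonic mean of LCS-based precision and recall."""
    c, r = chars(candidate), chars(reference)
    lcs = lcs_length(c, r) if c and r else 0
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def token_f1(pred: str, gold: str) -> float:
    """Bag-of-characters F1 between two short answers."""
    p, g = chars(pred), chars(gold)
    common = sum((Counter(p) & Counter(g)).values())
    if common == 0:
        return 0.0
    precision, recall = common / len(p), common / len(g)
    return 2 * precision * recall / (precision + recall)


def quest_scores(pairs: Sequence[Tuple[str, Optional[str]]]) -> Tuple[float, float]:
    """pairs: (answer from reference, answer from generated text or None).

    Returns (recall, f1): the share of questions the generated text can answer,
    and the mean answer F1 over those answerable questions (assumed aggregation).
    """
    if not pairs:
        return 0.0, 0.0
    answered = [(gold, pred) for gold, pred in pairs if pred is not None]
    recall = len(answered) / len(pairs)
    if not answered:
        return recall, 0.0
    f1 = sum(token_f1(pred, gold) for gold, pred in answered) / len(answered)
    return recall, f1
```

Character-level tokens avoid the need for a Chinese word segmenter; a word-level variant would run a segmenter before computing overlaps.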

Experimental Analysis

The authors extensively analyze the impact of various RAG components:

- Chunk Size and Overlap: Chunks sized to preserve the text's structure are crucial for creative and QA tasks, and overlap between adjacent chunks maintains semantic coherence across chunk boundaries.

- Retriever and Embedding Models: Dense retrieval algorithms generally outperform BM25. The relative performance of embedding models varies by task, and some models excel specifically at error correction (hallucination modification).

- Top-k Values: Increasing top-k (the number of retrieved chunks passed to the LLM) adds diversity and can improve accuracy, but it can also introduce redundant content, so top-k should be tuned per task to strike a balance.

- LLMs: The choice of LLM significantly affects performance. GPT-4 performs best across tasks, though other models such as Qwen-14B and Baichuan2-13B are also competitive, particularly on specific tasks.
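The chunking and top-k effects above can be explored with a small harness. The sketch below splits text into fixed-size character chunks with a configurable overlap and ranks the chunks with BM25, the sparse baseline mentioned above, so it runs without an embedding model; a dense retriever would replace the scoring function with embedding similarity. The function names, character-level tokens, and the BM25 parameters `k1=1.5` and `b=0.75` (common defaults) are assumptions, not the paper's configuration.

```python
import math
from collections import Counter


def chunk_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Fixed-size character windows; consecutive chunks share `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # last window reached the end
            break
    return chunks


def bm25_top_k(query: str, chunks: list[str], k: int = 3,
               k1: float = 1.5, b: float = 0.75) -> list[tuple[int, float]]:
    """Return (chunk index, BM25 score) for the k highest-scoring chunks."""
    docs = [Counter(c for c in chunk if not c.isspace()) for chunk in chunks]
    n = len(docs)
    if n == 0:
        return []
    avgdl = sum(sum(d.values()) for d in docs) / n
    df = Counter(term for d in docs for term in d)  # documents containing each term
    q_terms = [c for c in query if not c.isspace()]
    scores = []
    for i, d in enumerate(docs):
        dl = sum(d.values())
        score = 0.0
        for term in q_terms:
            tf = d.get(term, 0)
            if tf == 0:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            # Length normalization: longer chunks are penalized relative to avgdl.
            score += idf * tf * (k1 + 1) / (tf + k1 * (1 - b + b * dl / avgdl))
        scores.append((i, score))
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]
```

Sweeping `chunk_size`, `overlap`, and `k` over a fixed set of queries is one way to reproduce the kind of ablation described above.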

Implications and Future Developments

The research presented in this paper provides a robust framework for evaluating and optimizing RAG systems, which hold promise for various natural language generation applications. The findings underscore the importance of context-specific tuning of RAG components. Future developments in AI can build upon these insights to enhance LLMs' capabilities in real-world applications, ensuring they generate more accurate, relevant, and coherent content.

Researchers and practitioners can leverage the CRUD-RAG benchmark to:

- Develop more contextual and accurate generative models by refining retrieval mechanisms.

- Explore domain-specific applications, especially where factual accuracy is paramount.

- Enhance the robustness of RAG systems against common pitfalls like outdated information and hallucinations.

In conclusion, CRUD-RAG establishes a new standard for evaluating RAG systems, pushing the boundaries of what is achievable with the integration of retrieval and LLMs. The authors' work lays a foundation for future exploration and advancements in this exciting field of AI.