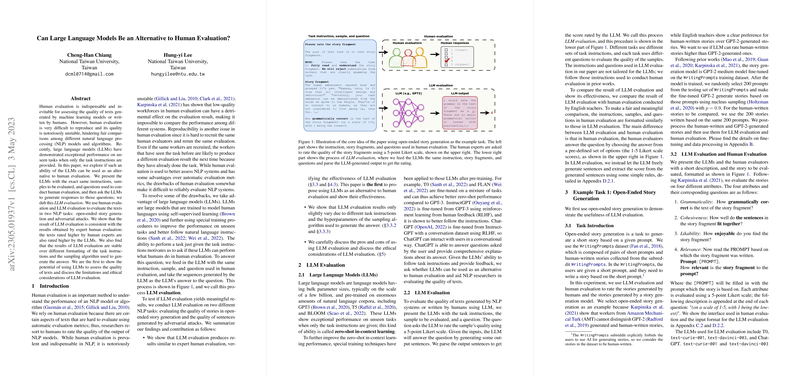

The paper introduces a systematic framework that repurposes large language models (LLMs) for text quality assessment in natural language generation tasks, positioning LLM-based evaluation as a potential alternative, or at least a complement, to expert human evaluation. The authors run experiments on two distinct tasks (open-ended story generation and adversarial example generation) to test whether evaluations produced by LLMs align with expert human judgments under comparable conditions.

The core methodology feeds the same task instructions, samples (stories or news titles), and questions used in the expert human evaluation directly into several LLMs. In the open-ended story generation task, both human-written stories and stories generated by a GPT-2 model fine-tuned on the WritingPrompts dataset are rated on four attributes:

- Grammaticality: assessing sentence-level grammatical correctness.

- Cohesiveness: evaluating the logical inter-sentence flow.

- Likability: capturing how enjoyable the story is, a largely subjective judgment.

- Relevance: determining the alignment between story content and the initial prompt.
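A minimal sketch of the query-and-parse loop described above follows: one query per attribute, then a numeric rating extracted from the model's free-text reply. The question wording is paraphrased for illustration, and the 1 to 5 scale, the prompt layout, and the helper names `build_prompt` and `parse_rating` are assumptions rather than the paper's exact template; the actual call to an LLM is deliberately left out.

```python
import re
from typing import Optional

# Hypothetical per-attribute questions (paraphrased, not the paper's verbatim text).
QUESTIONS = {
    "grammaticality": "How grammatically correct is the text of the story fragment?",
    "cohesiveness": "How well do the sentences in the story fragment fit together?",
    "likability": "How enjoyable do you find the story fragment?",
    "relevance": "How relevant is the story fragment to the prompt?",
}


def build_prompt(instructions: str, prompt: str, story: str, attribute: str) -> str:
    """Assemble one query: shared task instructions, the sample, then a single question."""
    return (
        f"{instructions}\n\n"
        f"Story prompt: {prompt}\n"
        f"Story: {story}\n\n"
        f"Question: {QUESTIONS[attribute]} "
        "(on a scale of 1 to 5, with 1 being the lowest)"
    )


def parse_rating(reply: str, low: int = 1, high: int = 5) -> Optional[int]:
    """Return the first integer in [low, high] that appears in the reply, else None."""
    for match in re.finditer(r"\d+", reply):
        value = int(match.group())
        if low <= value <= high:
            return value
    return None


# Usage: build one query string per attribute; each would be sent to an LLM separately.
queries = {
    attr: build_prompt("Please rate the story fragment.", "A dragon learns to read.",
                       "Once upon a time...", attr)
    for attr in QUESTIONS
}
```

Parsing stops at the first in-range integer, which is brittle: a reply such as "On a scale of 1 to 5, I give 3" yields 1, so a real pipeline would need a stricter output format or a more careful parser.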

Key findings from this experiment include:

- Alignment with Human Judgments:

Evaluators built on stronger models (e.g., text-davinci-003 and ChatGPT) consistently rate human-written stories higher than GPT-2-generated ones, and Welch’s t-test confirms that the differences are statistically significant. In contrast, T0 and text-curie-001 show a much weaker preference, suggesting that only some LLMs are capable enough to produce ratings aligned with expert standards.
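Welch’s t-test suits this comparison because the two groups of ratings need not share a variance. A minimal standard-library sketch of the statistic and its Welch-Satterthwaite degrees of freedom, run on made-up ratings rather than the paper's data:

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom (unequal variances)."""
    var_mean_a = variance(a) / len(a)  # squared standard error of mean(a)
    var_mean_b = variance(b) / len(b)  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / sqrt(var_mean_a + var_mean_b)
    df = (var_mean_a + var_mean_b) ** 2 / (
        var_mean_a ** 2 / (len(a) - 1) + var_mean_b ** 2 / (len(b) - 1)
    )
    return t, df


# Made-up 1-5 ratings from one LLM evaluator (not the paper's data).
human_ratings = [4, 5, 4, 4, 5, 3]
gpt2_ratings = [3, 2, 3, 4, 2, 3]
t, df = welch_t(human_ratings, gpt2_ratings)
print(f"t = {t:.3f}, df = {df:.1f}")  # t = 3.068, df = 10.0
```

For a p-value, `scipy.stats.ttest_ind(human_ratings, gpt2_ratings, equal_var=False)` performs the same Welch test; the standard library has no t-distribution CDF, so this sketch stops at the statistic.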

- Consistency and Stability:

The paper reports that varying the evaluation instructions (e.g., adding a persona or asking for an explanation) or the sampling temperature used during decoding shifts the absolute ratings only marginally. More importantly, the relative ranking of human-written and model-generated outputs stays the same, suggesting that the LLM evaluation is robust to these choices.
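The stability claim amounts to checking that the human-versus-model ordering survives every configuration even when the size of the gap moves. A small sketch of that check follows; the setting names and ratings are hypothetical placeholders for instruction and temperature variants.

```python
from statistics import mean


def mean_gap(ratings, a="human", b="gpt2"):
    """Mean rating of source a minus mean rating of source b within one setting."""
    return mean(ratings[a]) - mean(ratings[b])


def ordering_is_stable(settings, a="human", b="gpt2"):
    """True if source a outscores source b under every evaluation setting."""
    return all(mean_gap(r, a, b) > 0 for r in settings.values())


# Made-up ratings; each key stands in for one instruction or decoding variant.
settings = {
    "temperature=0": {"human": [4, 5, 4], "gpt2": [3, 3, 2]},
    "temperature=1": {"human": [4, 4, 5], "gpt2": [3, 4, 2]},
    "ask for explanation": {"human": [4, 4, 4], "gpt2": [3, 3, 3]},
}
for name, ratings in settings.items():
    print(f"{name}: gap = {mean_gap(ratings):.2f}")
print("ordering stable:", ordering_is_stable(settings))
```

Here the gap shrinks from about 1.67 to 1.00 across settings while the ordering never flips, which is the pattern the paper describes.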

- Correlation Analysis:

The authors compute Kendall’s τ to measure the rank correlation between LLM ratings and those of expert human evaluators. Correlations differ across attributes; for instance, relevance correlates more strongly than grammaticality. One possible explanation is that semantic alignment with the prompt is easier for an LLM to judge than finer-grained grammatical quality.
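Likert-style ratings produce many ties, so the tie-corrected tau-b variant is a sensible default. This summary does not say which τ variant the authors used; tau-b is an assumption here. A quadratic pure-Python sketch for illustration (`scipy.stats.kendalltau` computes tau-b by default):

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b: rank correlation corrected for ties in either variable."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    concordant = discordant = ties_x = ties_y = 0
    for i, j in combinations(range(len(x)), 2):
        dx, dy = x[i] - x[j], y[i] - y[j]
        if dx == 0 and dy == 0:
            ties_x += 1  # a pair tied in both counts toward both tie totals
            ties_y += 1
        elif dx == 0:
            ties_x += 1
        elif dy == 0:
            ties_y += 1
        elif (dx > 0) == (dy > 0):
            concordant += 1
        else:
            discordant += 1
    n0 = len(x) * (len(x) - 1) // 2  # total number of pairs
    denom = sqrt((n0 - ties_x) * (n0 - ties_y))
    return (concordant - discordant) / denom if denom else float("nan")


# Made-up per-story ratings from an LLM and from an expert (not the paper's data).
llm_scores = [1, 2, 2, 3]
expert_scores = [1, 2, 3, 3]
print(kendall_tau_b(llm_scores, expert_scores))  # 0.8
```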

In the adversarial attack evaluation task, the paper focuses on synonym substitution attacks (SSAs) such as TextFooler, PWWS, and BAE, which are commonly used against BERT-based classifiers. Here, evaluation covers two criteria:

- Fluency: How naturally the text reads after adversarial perturbations.

- Meaning Preservation: The ability of the adversarial example to retain the original semantic content compared to its benign counterpart.

Both human evaluators and LLMs rate benign news titles significantly higher than adversarial ones on fluency and meaning preservation. However, the LLMs (including text-davinci-003 and ChatGPT) tend to give adversarial examples slightly higher ratings than human evaluators do. Despite this shift in absolute scores, the overall ranking of the attacks is preserved; for example, adversarial examples from BAE are consistently rated as higher quality than those from TextFooler and PWWS.
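Preserving the ranking while shifting the scores can be checked separately: rank the systems by mean rating for each rater group, then look at the per-system difference. A minimal sketch on made-up fluency ratings; the relative order of TextFooler and PWWS in this toy data is arbitrary and not taken from the paper.

```python
from statistics import mean


def rank_by_mean(scores):
    """Order systems from highest to lowest mean rating."""
    return sorted(scores, key=lambda name: mean(scores[name]), reverse=True)


def mean_shift(llm, human):
    """Per-system LLM mean minus human mean (positive means the LLM is more lenient)."""
    return {name: mean(llm[name]) - mean(human[name]) for name in human}


# Made-up 1-5 fluency ratings for benign titles and three attacks.
human = {"benign": [5, 5, 4], "BAE": [4, 3, 4], "TextFooler": [3, 2, 3], "PWWS": [2, 2, 3]}
llm = {"benign": [5, 5, 4], "BAE": [4, 4, 4], "TextFooler": [3, 3, 3], "PWWS": [3, 2, 3]}

print(rank_by_mean(llm))  # ['benign', 'BAE', 'TextFooler', 'PWWS']
print(rank_by_mean(human) == rank_by_mean(llm))  # True
```

In this toy data, `mean_shift(llm, human)` is zero for the benign titles and positive for every attack, while both groups produce the same ranking.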

The paper also provides a detailed discussion on the following aspects:

- Comparative Advantages:

  - Reproducibility: Human evaluation is sensitive to evaluator fatigue and calibration effects (e.g., prior exposure to samples shifting an evaluator's rating criteria). LLM evaluation, by contrast, is more reproducible when the model version, random seeds, and decoding parameters are fixed.

  - Cost and Speed: The LLM-based method substantially reduces both cost and time compared with recruiting and compensating human experts.

  - Ethical Considerations: The approach reduces human exposure to objectionable content, and with it potential evaluator distress.

- Limitations and Ethical Implications:

The paper acknowledges that LLMs have limitations, including potential biases introduced during pre-training and reinforcement learning from human feedback, as well as problems with factual accuracy. Moreover, attributes that depend on subjective affective judgments (e.g., likability) may be harder for an LLM to evaluate, since it lacks genuine emotional responses. The authors therefore caution that LLM evaluation should complement, rather than replace, human judgment.
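The reproducibility advantage listed among the comparative advantages holds only if every setting that affects the output is recorded. A minimal sketch of one way to pin and fingerprint those settings; the `EvalConfig` class, its field names, and the 16-character hash prefix are hypothetical choices, not something the paper prescribes.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class EvalConfig:
    """Hypothetical record of the settings that determine an LLM evaluation run."""
    model: str          # exact model name or version string
    temperature: float  # decoding temperature
    top_p: float        # nucleus-sampling threshold
    seed: int           # random seed, where the serving API supports one
    instructions: str   # full evaluation instruction text

    def fingerprint(self) -> str:
        """Short, stable hash of all settings, for logging alongside the ratings."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:16]


cfg = EvalConfig("text-davinci-003", 0.0, 1.0, 0, "Please rate the story fragment.")
print(cfg.fingerprint())
```

A fingerprint documents what was run but cannot guarantee identical outputs: a hosted model may change behind the same name, which is one reason the reproducibility advantage is conditional on fixing model details.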

In summary, the work provides a comprehensive, empirically driven exploration of how prominent instruction-following LLMs can serve as proxies for human evaluators in text quality assessment. Across two tasks and multiple evaluation attributes, the paper offers evidence that certain LLMs (notably text-davinci-003 and ChatGPT) yield ratings that are consistent, reproducible, and largely in line with expert human judgments, opening the possibility of broader use in evaluating natural language generation systems.