The paper "LLMs are Inconsistent and Biased Evaluators" (Stureborg et al., 2 May 2024) investigates the robustness of LLMs used as automatic evaluators, an approach often called "LLM-as-a-judge". While LLM evaluators offer flexibility and reference-free assessment, the paper highlights significant biases and inconsistencies that are often overlooked when evaluators are judged solely by their correlation with human scores. The authors conduct an extensive analysis using GPT-3.5 and GPT-4 on the SummEval dataset, followed by a case study applying the proposed mitigation strategies to the RoSE dataset.

The core problem addressed is the lack of understanding and mitigation of intrinsic flaws in LLM-based evaluation, which can lead to unreliable judgments, especially in sensitive applications. The paper identifies several specific biases and inconsistencies:

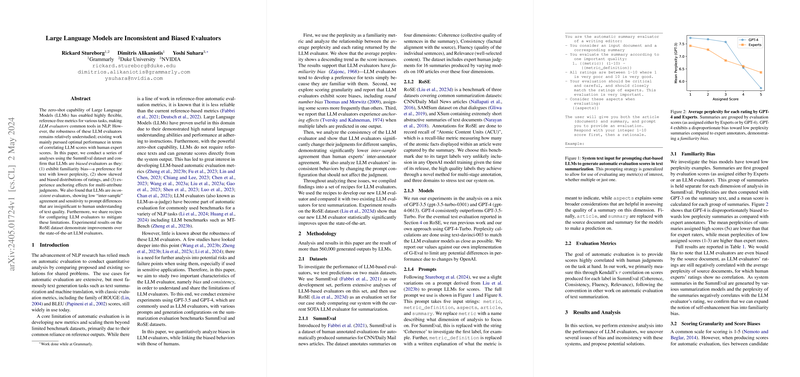

- Familiarity Bias: LLMs show a stronger preference than human experts for text with lower perplexity (more predictable or familiar text). The authors demonstrate this by comparing the average perplexity of summaries at each score level assigned by LLMs and by humans: the drop in perplexity as scores rise is more pronounced for LLM scores, i.e., a stronger negative correlation (Figure 2, Table 1).

- Scoring Granularity and Score Biases:

  - When instructed to use a wide scoring range (e.g., 1-100), LLMs fail to use the full range and cluster scores within a narrow band (e.g., 70-100 in Figure 3).

  - LLMs exhibit a "round number bias," disproportionately assigning scores that are multiples of 5 or 10 (e.g., in Figure 3, 90 and 95 are much more frequent than 92 or 93).

  - Experiments with different scoring scales (1-5, 1-10, 1-100, with modifiers or sample averaging) show varying performance. The 1-10 integer scale achieved the best average correlation with human judgments on SummEval (Table 2), suggesting diminishing returns, or even harm, from forcing higher granularity through complex scales or by averaging multiple samples drawn at high temperature.

- Anchoring Effect in Multiple Judgments: When evaluating multiple attributes in a single generation (e.g., Coherence, Consistency, Fluency, Relevance), the score assigned to one attribute significantly biases the scores assigned to subsequent attributes in the same output. The order of evaluation matters, and later attributes show degraded correlation with human judgments (Figure 4, Table 3). The authors attribute this to the auto-regressive nature of LLMs: each score the model generates becomes part of the context that conditions the next one.

- Self-Inconsistency: LLMs demonstrate lower "inter-sample" agreement (consistency across multiple generations for the same input and prompt) compared to human inter-annotator agreement (Table 4, Table 7). Their judgments can be sensitive to minor prompt variations or temperature settings.

- Sensitivity to Temperature and Chain-of-Thought (CoT): Standard prompt engineering advice (low temperature for deterministic output, CoT for better reasoning) doesn't always translate well to LLM evaluation. Non-CoT prompts perform better at low temperatures (close to 0), while CoT prompts benefit from moderate temperatures (around 0.5), although performance drops off at very high temperatures (Figure 6, Figure 11). Notably, a single generation at temperature 0 with a non-CoT prompt proved effective in their experiments.

- Sensitivity to Source Document: The presence of the source document impacts ratings even for attributes like Fluency that should theoretically only depend on the summary text itself (Table 5). This suggests the model might be relying on spurious correlations with the source rather than a pure assessment of the summary's quality on that dimension.
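To check one's own evaluator for some of these effects, a few simple diagnostics can be computed from its outputs. The sketch below is an illustration under stated assumptions, not the paper's analysis code: it assumes per-token natural-log probabilities for each summary are available from some language model (for the familiarity check), and it measures self-consistency as the mean pairwise exact-agreement rate across repeated generations, a simple stand-in that is not necessarily the statistic reported in the paper's tables. All function names are hypothetical.

```python
import math
import statistics
from collections import defaultdict
from itertools import combinations


def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity from natural-log token probabilities: exp(-mean log p)."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def mean_perplexity_by_score(scores: list[int], ppls: list[float]) -> dict[int, float]:
    """Familiarity check: average perplexity of the summaries given each score."""
    buckets: dict[int, list[float]] = defaultdict(list)
    for score, ppl in zip(scores, ppls):
        buckets[score].append(ppl)
    return {score: statistics.mean(v) for score, v in sorted(buckets.items())}


def round_number_rate(scores: list[int], base: int = 5) -> float:
    """Round-number check: fraction of scores that are multiples of `base`.

    On a 1-100 scale, 20 of the 100 possible values are multiples of 5,
    so a rate far above 0.2 suggests clustering on round numbers.
    """
    return sum(s % base == 0 for s in scores) / len(scores)


def inter_sample_agreement(samples: list[list[int]]) -> float:
    """Self-consistency check: mean pairwise exact-agreement rate.

    samples[i] holds the scores from repeated generations for item i
    (same input, same prompt).
    """
    rates = []
    for item_scores in samples:
        pairs = list(combinations(item_scores, 2))
        if not pairs:
            raise ValueError("each item needs at least two samples")
        rates.append(sum(a == b for a, b in pairs) / len(pairs))
    return statistics.mean(rates)
```

For instance, the scores 90, 95, 92, 85, 100, 70 give a round-number rate of 5/6, well above the 0.2 that a uniform spread over 1-100 would produce.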

Based on these findings, the paper proposes a set of practical recipes to mitigate these issues when using LLMs for evaluation (Table 6):

- Scoring Scale: Use a 1-10 integer scale instead of 1-5, 1-100, or scales with complex modifiers.

- Temperature and CoT: Use non-CoT prompting and set the temperature to 0 for deterministic single-sample generation.

- Source Document: Always include the source document, even for attributes that might seem independent of it.

- Attribute Judgment: Predict only one attribute (e.g., Coherence, Consistency, Fluency, Relevance) per generation call to avoid anchoring effects.
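The recipes can be bundled into a single configuration and a prompt builder. This is a minimal sketch: the `JudgeConfig` fields and the prompt wording are hypothetical, loosely modeled on the "Evaluation Form (Scores ONLY): {metric}:" format described under Implementation Considerations. The function only assembles chat-style messages (a list of role/content dicts) and does not call any API; `temperature` and `n_samples` are meant to be passed to whichever client is used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JudgeConfig:
    """Settings following the recipes above (field names are hypothetical)."""
    scale_min: int = 1
    scale_max: int = 10
    temperature: float = 0.0   # deterministic decoding
    n_samples: int = 1         # single generation, no averaging
    use_cot: bool = False      # ask for the score only, no reasoning
    include_source: bool = True


def build_messages(metric: str, definition: str, source: str, summary: str,
                   cfg: JudgeConfig = JudgeConfig()) -> list[dict[str, str]]:
    """Build a chat prompt that asks for exactly one attribute."""
    system = (
        "You will be given a summary to evaluate. "
        f"Rate the summary on one metric only: {metric}.\n\n"
        f"{definition}\n\n"
        f"Respond with an integer score from {cfg.scale_min} to {cfg.scale_max}."
    )
    parts = []
    if cfg.include_source:
        # Included even for attributes like Fluency (see the source-document recipe).
        parts.append(f"Source Text:\n{source}")
    parts.append(f"Summary:\n{summary}")
    if cfg.use_cot:
        parts.append("Explain your reasoning step by step, then give the score.")
    # End with the form so the next tokens the model emits are the score itself.
    parts.append(f"Evaluation Form (Scores ONLY):\n{metric}:")
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": "\n\n".join(parts)},
    ]
```

Each call to `build_messages` covers exactly one metric, so evaluating four attributes means four separate prompts, which is what keeps one score from anchoring the next.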

The authors conducted a case study on the RoSE dataset to evaluate the effectiveness of these recipes. They implemented their approach with GPT-4-Turbo using the proposed recipes (1-10 scale, non-CoT, temperature 0, single generation, source document included, one attribute per call, evaluation steps/definition included) and compared it against re-implementations of two previous LLM evaluation methods, G-Eval [2023b] and Chiang and Lee [2023]. The results on RoSE (Figure 7) showed that their method statistically significantly outperformed the previous state-of-the-art method (Chiang and Lee [2023]) on the in-domain CNNDM partition and G-Eval on both the CNNDM and SAMSum partitions. This empirical study indicates that applying these simple mitigation strategies can improve correlation with human judgments.
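The case study measures success as correlation with human judgments. As a hedged illustration of that kind of comparison, here is a dependency-free Spearman rank correlation between an evaluator's scores and human scores for the same summaries; it does not reproduce the paper's exact correlation statistic or its significance testing. Ties receive averaged ranks, which matters here because a 1-10 scale produces many tied scores.

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (sx * sy)


def spearman(llm_scores: list[float], human_scores: list[float]) -> float:
    """Spearman correlation = Pearson correlation of the average ranks."""
    return pearson(average_ranks(llm_scores), average_ranks(human_scores))
```

Ranking before correlating means only the ordering of summaries matters, so an evaluator that compresses its scores into a narrow band is not penalized for that alone.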

Implementation Considerations:

- Prompting: The described prompt structure (a system prompt defining the task and scale; a user prompt providing the document and summary and asking for the score in a fixed format, e.g., "Evaluation Form (Scores ONLY): {metric}:") is crucial. Parsing the exact numerical score from the LLM's output requires careful handling, for example using a regular expression to extract the first integer. The authors mention stopping generation early and parsing the output to find the score.

- API Calls: Implementing single-attribute evaluation per generation means making multiple API calls per summary if evaluating multiple dimensions. This increases cost and latency compared to predicting all attributes in one call, but is necessary to avoid anchoring bias.

- Model Choice: The analysis primarily uses GPT models. While the findings and recipes are based on these models, the extent to which they generalize to other open-source or proprietary LLMs requires further investigation. The paper notes this as a limitation.

- Dataset Dependency: The analysis relies heavily on SummEval. While the case study on RoSE confirms some findings, the specific optimal settings or the severity of biases might vary across tasks and datasets.

- Cost: Generating a large volume of evaluations, especially with multi-sample averaging or multiple API calls per summary, can incur substantial costs when using paid APIs like OpenAI's. The choice of temperature 0 and single generation helps manage cost.
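The parsing and one-attribute-per-call pattern described above can be sketched as follows. `call_llm` is a hypothetical callable standing in for a real API client: it receives one attribute plus the source and summary and returns the model's raw text. `parse_score` takes the first integer in that text and rejects values outside the 1-10 range, and `num_calls` is plain arithmetic for the cost consideration, since calls scale with summaries times attributes times samples.

```python
import re
from typing import Callable, Optional

ATTRIBUTES = ["Coherence", "Consistency", "Fluency", "Relevance"]


def parse_score(text: str, lo: int = 1, hi: int = 10) -> Optional[int]:
    """Return the first integer in the output if it lies in [lo, hi], else None."""
    match = re.search(r"\d+", text)
    if match is None:
        return None
    score = int(match.group())
    return score if lo <= score <= hi else None


def judge_summary(source: str, summary: str,
                  call_llm: Callable[[str, str, str], str]) -> dict[str, Optional[int]]:
    """One call per attribute, so no generated score can anchor another."""
    return {attr: parse_score(call_llm(attr, source, summary)) for attr in ATTRIBUTES}


def num_calls(n_summaries: int, n_attributes: int = 4, n_samples: int = 1) -> int:
    """API calls needed: one per summary, per attribute, per sample."""
    return n_summaries * n_attributes * n_samples
```

For example, with four attributes and a single sample at temperature 0, 100 summaries need 400 calls; averaging 20 samples per attribute would need 8,000. Returning `None` for unparseable or out-of-range outputs keeps bad generations visible instead of silently coercing them to a score.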

In summary, the paper provides a critical look at the reliability of LLMs as evaluators, detailing specific biases and inconsistencies. It offers actionable implementation recipes, backed by experimental results, showing how to configure LLM evaluators to achieve better alignment with human judgments, particularly advocating for a 1-10 scale, low-temperature non-CoT prompting, and evaluating attributes separately.