Overview of OpenICL: An Open-Source Framework for In-Context Learning

The paper introduces OpenICL, an open-source toolkit for in-context learning (ICL) and LLM evaluation. OpenICL addresses the complexity of implementing ICL with a flexible architecture that accommodates diverse retrieval and inference methods. This essay examines the paper, focusing on the framework's design principles, its architecture, and its application across various NLP tasks.

Design Principles

OpenICL is built on three foundational principles:

- Modularity: The framework's loosely-coupled design enables users to interchange components, facilitating integration of the latest ICL methods and customization for specific tasks.

- Efficiency: Because current LLMs are very large, OpenICL implements data and model parallelism to keep inference efficient.

- Generality: The framework's adaptable interface supports a broad spectrum of models, tasks, retrieval, and inference methods.
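The data-parallel side of the efficiency principle can be illustrated with a minimal sketch. It is not OpenICL's implementation: the names `shard` and `parallel_infer` are hypothetical, and threads stand in for what would, in practice, be model replicas on separate devices. The idea shown is only that the test set is split into shards, each shard is processed independently, and the predictions are merged back in the original order.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def shard(items: Sequence[str], num_shards: int) -> List[List[str]]:
    """Split items into num_shards contiguous chunks of near-equal size."""
    base, extra = divmod(len(items), num_shards)
    shards, start = [], 0
    for i in range(num_shards):
        # The first `extra` shards take one additional item.
        size = base + (1 if i < extra else 0)
        shards.append(list(items[start:start + size]))
        start += size
    return shards


def parallel_infer(
    items: Sequence[str],
    predict: Callable[[str], object],
    num_workers: int = 2,
) -> List[object]:
    """Run `predict` over each shard in its own worker and merge in order.

    `predict` is a stand-in for a call into a model replica (assumption).
    """
    def run(chunk: List[str]) -> List[object]:
        return [predict(x) for x in chunk]

    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # pool.map returns results in the order the shards were submitted.
        results = list(pool.map(run, shard(items, num_workers)))
    return [y for chunk in results for y in chunk]
```

Model parallelism, which splits a single model across devices, is a separate mechanism and is not covered by this sketch.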

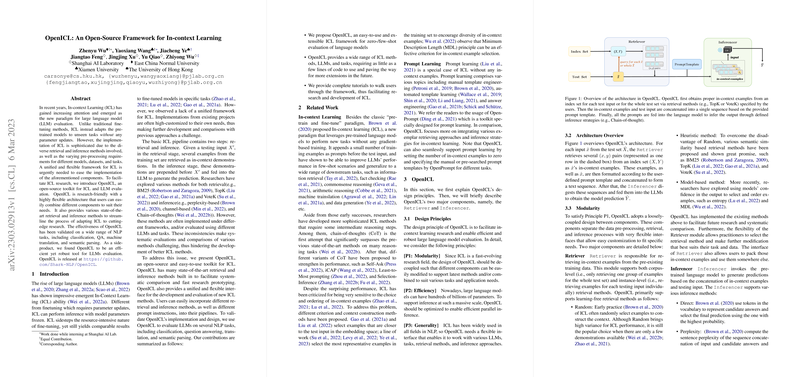

Architecture Overview

OpenICL's architecture is structured around two primary components: the Retriever and the Inferencer.

- Retriever: This component is tasked with selecting in-context examples from the training data. It supports various retrieval methods, including Random selection, Heuristic approaches like BM25 and TopK, and Model-based strategies. These methods cater to diverse requirements, allowing systematic comparison and research exploration.

- Inferencer: This module interfaces with pre-trained LLMs to generate predictions. It supports Direct, Perplexity, and Channel methods, and it can be invoked recursively to support multi-stage ICL processes such as chain-of-thought reasoning.
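The division of labour between the two components can be sketched in plain Python. This is an illustration under stated assumptions, not OpenICL's API: every function name below is hypothetical, token-overlap ranking stands in for the similarity measure a real top-k retriever would use, and `score` is a placeholder for a language model's perplexity on a prompt (lower is better). Only the perplexity-style inference method is shown.

```python
import random
from typing import Callable, List, Sequence, Tuple

Example = Tuple[str, str]  # (input text, label)


def random_retrieve(pool: Sequence[Example], k: int, seed: int = 0) -> List[Example]:
    """Random baseline: sample k demonstrations from the training pool."""
    return random.Random(seed).sample(list(pool), k)


def overlap_topk_retrieve(query: str, pool: Sequence[Example], k: int) -> List[Example]:
    """Rank demonstrations by Jaccard token overlap with the query.

    Assumption: overlap is a cheap stand-in for a real similarity score.
    """
    q = set(query.lower().split())

    def sim(ex: Example) -> float:
        t = set(ex[0].lower().split())
        union = q | t
        return len(q & t) / len(union) if union else 0.0

    return sorted(pool, key=sim, reverse=True)[:k]


def build_prompt(demos: Sequence[Example], query: str, label: str) -> str:
    """Concatenate demonstrations, then the query with a candidate label."""
    blocks = [f"Review: {x}\nSentiment: {y}" for x, y in demos]
    blocks.append(f"Review: {query}\nSentiment: {label}")
    return "\n\n".join(blocks)


def ppl_infer(
    query: str,
    demos: Sequence[Example],
    labels: Sequence[str],
    score: Callable[[str], float],
) -> str:
    """Perplexity-style inference: keep the label whose prompt scores lowest."""
    return min(labels, key=lambda lab: score(build_prompt(demos, query, lab)))


train = [
    ("a moving and funny film", "positive"),
    ("dull plot and flat acting", "negative"),
    ("funny and warm", "positive"),
]
demos = overlap_topk_retrieve("a funny film", train, k=2)


def stub_score(prompt: str) -> float:
    # Stub scorer for illustration only; a real one would query an LM.
    return 0.0 if prompt.endswith("positive") else 1.0


print(ppl_infer("a funny film", demos, ["positive", "negative"], stub_score))
```

Because the retriever only returns demonstrations and the inferencer only consumes them, swapping `overlap_topk_retrieve` for `random_retrieve` changes one line and leaves the inference step untouched, which is the kind of interchangeability the architecture is described as providing.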

Evaluation and Application

The paper demonstrates OpenICL's flexibility through evaluations on several NLP tasks: sentiment analysis with SST-2, commonsense reasoning with PiQA, arithmetic reasoning with GSM8K, machine translation with WMT16, and summarization with Gigaword. By leveraging different LLMs, such as GPT-Neo and GPT-3, the framework accommodates a wide variety of ICL applications with minimal code modifications.

Implications and Future Directions

The implications of OpenICL are twofold:

- Practical: Researchers can rapidly prototype and evaluate ICL algorithms, accelerating the development cycle and enabling robust comparisons across methods.

- Theoretical: The modularity and flexibility of OpenICL may encourage exploration of novel retrieval and inference techniques and integration of recent advancements in LLMs.

Looking ahead, OpenICL's extensibility suggests that it can adapt as ICL methods and models evolve. This adaptability will matter as the field advances, since it lets the framework incorporate new research and stay relevant.

Conclusion

OpenICL represents a significant contribution to ICL research, providing a comprehensive toolkit that simplifies the implementation of complex methodologies. Although ICL and LLM evaluation are still maturing areas, OpenICL lays a strong foundation for continued innovation and exploration. As such, it is likely to become a valuable resource for researchers and practitioners alike.