- The paper introduces humble trust to mitigate misplaced distrust by balancing the costs of false positives and false negatives in AI systems.

- It critiques traditional precautionary methods and proposes variable thresholds and human oversight to improve classification accuracy.

- The study highlights the significant social and psychological impacts on individuals misclassified by biased AI decision-making processes.

Humble Machines: Attending to the Underappreciated Costs of Misplaced Distrust

Introduction to Distrust in AI Systems

The research explores the distrust built into AI systems that make critical decisions directly affecting human lives. The authors argue that public distrust of these systems stems not only from doubts about their technical accuracy but also from a design that prioritizes reducing false positives over reducing false negatives. As a result, these systems often wrongly categorize trustworthy individuals as untrustworthy, which erodes public trust in AI decision-making. The research proposes a shift in design philosophy toward "humble trust" to mitigate the moral and social costs of misplaced distrust.

Decision-Making Dynamics in AI

AI decision-making systems predominantly rely on statistical methods to set a decision threshold: cases on one side of it are classified as positive and cases on the other side as negative. The research critiques the tendency of these systems toward precaution, in which the cost of a false positive is weighted far more heavily than the cost of a false negative. This weighting shifts the threshold and raises the false negative rate.

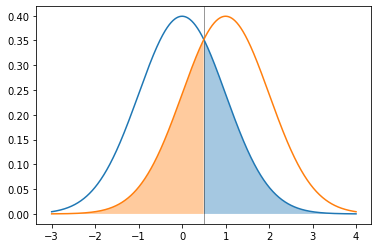

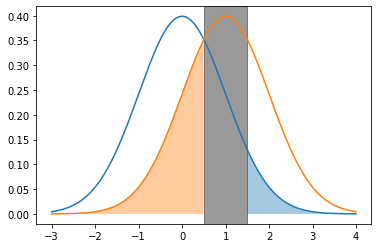

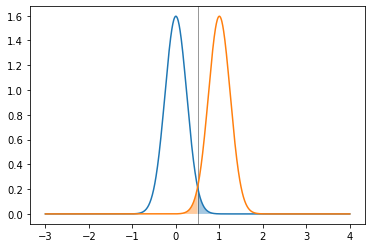

Figure 1: Equal costs for false positives and false negatives.

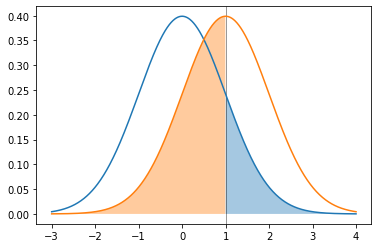

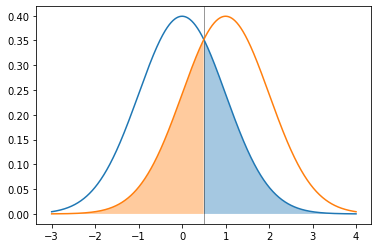

Figure 2: Greater cost for false positives than false negatives yielding a larger false negative rate and smaller false positive rate.

As Figure 1 and Figure 2 illustrate, the relative weighting of these costs determines the decision threshold and, with it, the misclassification rates. Weighting false positives more heavily shifts the threshold so that fewer cases are classified as positive, which lowers the false positive rate at the price of a higher false negative rate.
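The effect of cost weighting on the threshold can be sketched in a few lines of Python. This is a minimal illustration and not the paper's implementation: it assumes the classifier outputs a calibrated probability that a person is trustworthy (the positive class), and the function names, cost values, and sample data are all hypothetical.

```python
def optimal_threshold(cost_fp: float, cost_fn: float) -> float:
    """Probability above which predicting 'positive' has lower expected cost.

    For a case with probability p of being truly positive:
      expected cost of predicting positive = (1 - p) * cost_fp
      expected cost of predicting negative = p * cost_fn
    Predicting positive is cheaper when p > cost_fp / (cost_fp + cost_fn).
    """
    return cost_fp / (cost_fp + cost_fn)


def error_rates(samples, threshold):
    """Return (false_positive_rate, false_negative_rate) for (score, label) pairs."""
    fp = sum(1 for score, label in samples if label == 0 and score >= threshold)
    fn = sum(1 for score, label in samples if label == 1 and score < threshold)
    negatives = sum(1 for _, label in samples if label == 0)
    positives = sum(1 for _, label in samples if label == 1)
    return fp / negatives, fn / positives


# Hypothetical (score, true_label) pairs: 1 = trustworthy, 0 = not trustworthy.
SAMPLES = [
    (0.95, 1), (0.85, 1), (0.75, 1), (0.65, 1), (0.60, 1), (0.45, 1),
    (0.70, 0), (0.55, 0), (0.40, 0), (0.30, 0), (0.20, 0), (0.10, 0),
]

# Equal costs (as in Figure 1) versus a false positive costing four times
# as much as a false negative (the kind of weighting shown in Figure 2).
for cost_fp, cost_fn in [(1, 1), (4, 1)]:
    t = optimal_threshold(cost_fp, cost_fn)
    fpr, fnr = error_rates(SAMPLES, t)
    print(f"cost_fp={cost_fp} cost_fn={cost_fn} "
          f"threshold={t:.2f} FPR={fpr:.2f} FNR={fnr:.2f}")
```

On this toy data, raising the cost of a false positive moves the threshold from 0.5 to 0.8, removes the false positives, and turns most of the trustworthy cases into false negatives.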

Impact of Distrustful AI on Individuals and Society

The paper examines the social and psychological impact of AI systems that default to a position of distrust. Individuals who are wrongly categorized as untrustworthy experience increased alienation, resentment, and demoralization, which in turn further erodes society's trust in AI. The "precautionary" tendencies built into AI decision-making (for example, mistaken prior beliefs about the distribution of the data) exacerbate these harms and increase the emotional and psychological toll on individuals.

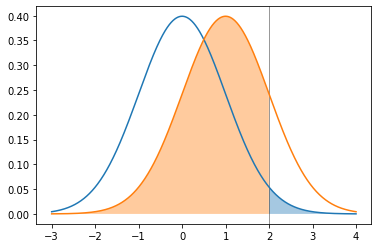

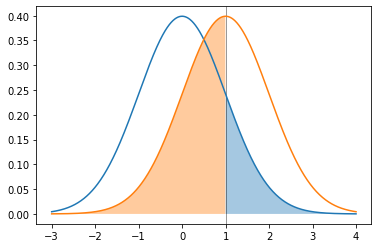

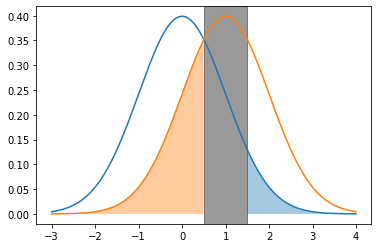

Figure 3: Even greater cost for false positives than false negatives yielding an even larger false negative rate and even smaller false positive rate.

The Concept of Humble Trust

To counteract the negative implications of distrust-oriented AI, the research introduces the concept of "humble trust," which advocates for the equal consideration of the costs of false negatives and false positives. Humble trust involves skepticism about the AI's initial assumptions, curiosity to explore trust-responsiveness among decision subjects, and a commitment to avoiding distrust of the trustworthy.

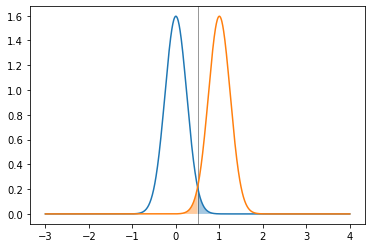

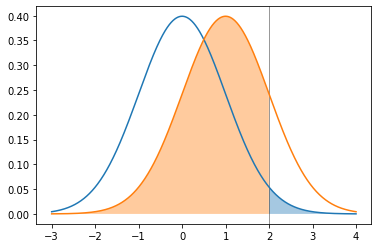

Figure 4: Lowered uncertainty through more informative features.

This approach suggests actively searching for additional data to enrich decision-making processes (Figure 4), thus reducing uncertainty and improving classification accuracy.
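A small numerical sketch can make the link between informative features and lower uncertainty concrete. It is illustrative only and rests on assumptions that are not from the paper: scores for the two classes are modeled as Gaussians with equal variance and equal priors, and "more informative features" is represented simply as a larger separation between the class means. The function name is hypothetical.

```python
from statistics import NormalDist


def midpoint_error_rates(separation: float, sigma: float = 1.0):
    """Return (false_positive_rate, false_negative_rate) at the midpoint threshold.

    Assumed model: negative-class scores ~ N(0, sigma), positive-class
    scores ~ N(separation, sigma), with the threshold halfway between the means.
    """
    negative = NormalDist(mu=0.0, sigma=sigma)
    positive = NormalDist(mu=separation, sigma=sigma)
    threshold = separation / 2
    fpr = 1 - negative.cdf(threshold)  # negatives scoring above the threshold
    fnr = positive.cdf(threshold)      # positives scoring below the threshold
    return fpr, fnr


# Weakly informative features (heavy overlap) versus more informative ones.
for separation in (1.0, 3.0):
    fpr, fnr = midpoint_error_rates(separation)
    print(f"separation={separation} FPR={fpr:.3f} FNR={fnr:.3f}")
```

Under these assumptions, both error rates fall together as the class distributions pull apart, so better features reduce false negatives without buying that reduction with extra false positives.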

Technical Implementation of Humble Trust

In practice, implementing humble trust requires rebalancing algorithmic cost functions so that false positives and false negatives are weighed equally (Figure 1). A variable threshold or gray area (Figure 5 and Figure 6) can additionally set aside cases that fall outside a clear trust/distrust classification, so that they receive human intervention or more careful consideration instead of a purely automated determination.

Figure 5: The gray band around a decision threshold may be used for randomization or to revert to a human decision maker.
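One possible reading of the gray band is sketched below. It is a hedged example, not the authors' method: the band width, the default threshold, the `decide` function, and the rule used for randomization (trusting with probability equal to the estimated score) are all assumptions made for illustration.

```python
import random


def decide(p_trustworthy, threshold=0.5, band=0.1, mode="human", rng=None):
    """Classify a case, treating scores near the threshold as a gray area.

    Outside the band the decision is automated. Inside the band the case is
    either referred to a human or decided by randomization, depending on mode.
    """
    if abs(p_trustworthy - threshold) > band:
        return "trust" if p_trustworthy > threshold else "distrust"
    if mode == "human":
        return "refer_to_human"
    if mode == "randomize":
        # Assumed rule: trust with probability equal to the estimated score.
        rng = rng or random.Random()
        return "trust" if rng.random() < p_trustworthy else "distrust"
    raise ValueError(f"unknown mode: {mode!r}")


print(decide(0.90))  # clearly above the band
print(decide(0.10))  # clearly below the band
print(decide(0.55))  # inside the band, handed to a human reviewer
print(decide(0.45, mode="randomize", rng=random.Random(0)))
```

Widening `band` sends more borderline cases to human review, which trades automation for a lower risk of confidently distrusting someone on thin evidence.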

Conclusion

The research argues that incorporating humble trust into AI systems could restore public confidence by addressing the underappreciated costs of misplaced distrust. Acknowledging the moral and social responsibilities of AI developers is crucial to balancing technical efficiency with trustworthiness. A commitment to redesigning AI systems around trust-equitable design and implementation practices will likely have long-term positive effects on human-AI relations.