A Framework for the Interoperability of Cloud Platforms: Towards FAIR Data in SAFE Environments

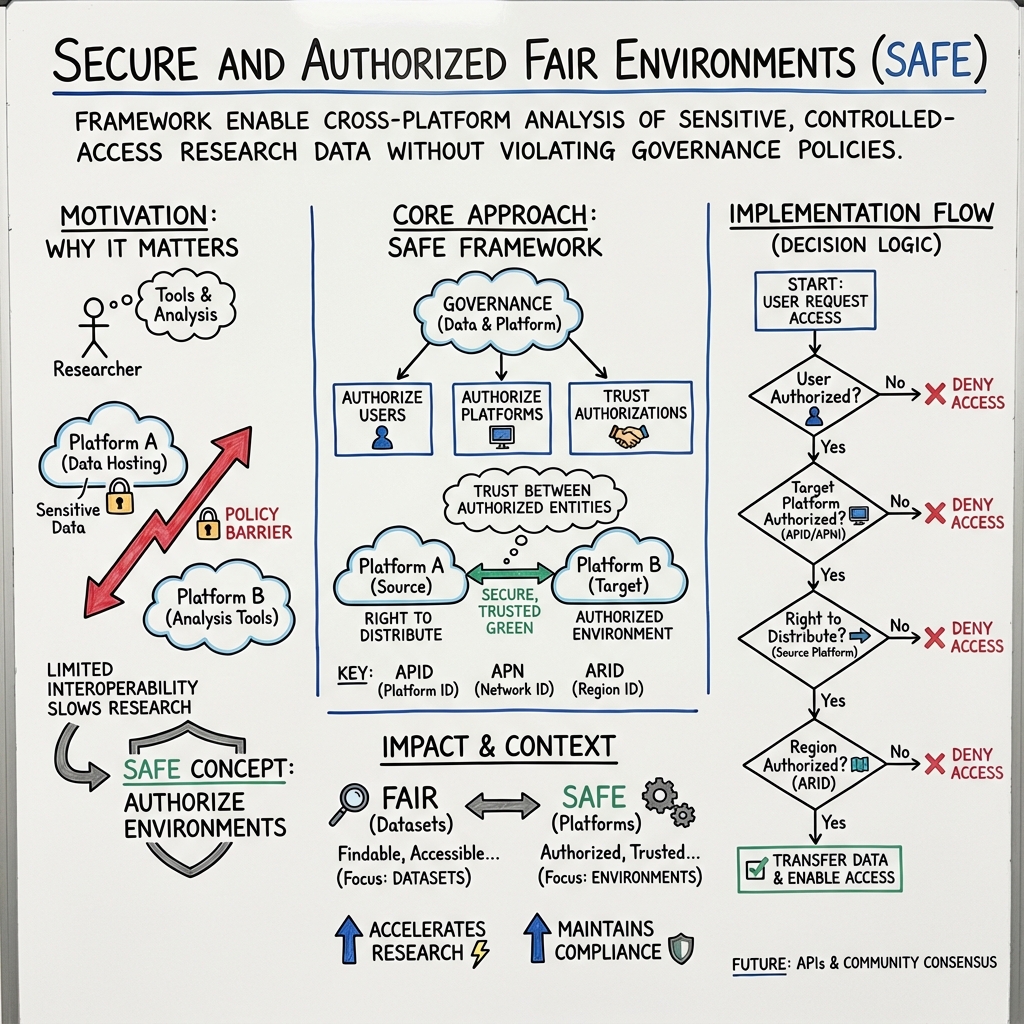

Abstract: As the number of cloud platforms supporting scientific research grows, there is an increasing need to support interoperability between two or more cloud platforms, as a growing amount of data is being hosted in cloud-based platforms. A well accepted core concept is to make data in cloud platforms Findable, Accessible, Interoperable and Reusable (FAIR). We introduce a companion concept that applies to cloud-based computing environments that we call a Secure and Authorized FAIR Environment (SAFE). SAFE environments require data and platform governance structures and are designed to support the interoperability of sensitive or controlled access data, such as biomedical data. A SAFE environment is a cloud platform that has been approved through a defined data and platform governance process as authorized to hold data from another cloud platform and exposes appropriate APIs for the two platforms to interoperate.

Explain it Like I'm 14

What is this paper about?

This paper is about helping different cloud platforms safely work together when they handle sensitive research data (like medical records or DNA data). The authors introduce a new idea called a Secure and Authorized FAIR Environment, or SAFE. It complements the well-known FAIR principles for data (Findable, Accessible, Interoperable, Reusable) by focusing on the environments where that data is analyzed. The goal is to make it easier and safer for researchers to use tools from one cloud to analyze data stored in another, without breaking rules or risking privacy.

What questions does the paper try to answer?

The paper asks:

- How can we let scientists analyze sensitive data across different cloud platforms without copying it everywhere or weakening privacy protections?

- What rules and checks should be in place so that one cloud platform can trust another with controlled data?

- How do we clearly define who is allowed to use the data, where they can use it, and which platforms are allowed to share it?

How did the authors approach the problem?

To explain their approach, think of research clouds like different “libraries” and sensitive datasets like “special books” that only certain people can read in certain rooms.

- FAIR focuses on the “books” (datasets): making them easy to find, access, and reuse.

- SAFE focuses on the “rooms” (cloud environments): making sure the rooms where people read the books are secure, approved, and can work together.

Here are the key ideas, translated into everyday language:

- Authorized user: Someone who has permission to read certain special books.

- Authorized environment: A specific room or workspace that meets security rules and is approved for reading those special books.

- Right to distribute: The permission a library has to lend a special book to a reader, possibly in another approved room.

They also define simple, standard “ID cards” to help platforms recognize each other:

- APID (Authorized Platform Identifier): A unique ID for each cloud platform—like a library’s ID badge.

- APNI (Authorized Platform Network Identifier): A unique ID for a group of platforms that agree to trust and work together—like a club of libraries.

- ARID (Authorized Region ID): A unique ID for the geographic areas where data can be used—like a rule that says “this book can only be read in this country.”
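The three identifiers above can be pictured as small records. The following is only a sketch: the paper summary does not specify an identifier format, so the `apid:`/`apni:`/`arid:` string shapes, the class names, and the field names are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass(frozen=True)
class Platform:
    """A cloud platform; field names and ID formats are hypothetical."""
    apid: str  # Authorized Platform Identifier
    arid: str  # Authorized Region ID where the platform operates


@dataclass
class PlatformNetwork:
    """A group of platforms that agree to trust each other (hypothetical model)."""
    apni: str  # Authorized Platform Network Identifier
    member_apids: Set[str] = field(default_factory=set)

    def includes(self, platform: Platform) -> bool:
        # Membership is decided purely by the platform's APID.
        return platform.apid in self.member_apids


platform_a = Platform(apid="apid:example:platform-a", arid="arid:example:us")
platform_b = Platform(apid="apid:example:platform-b", arid="arid:example:us")
outsider = Platform(apid="apid:example:other", arid="arid:example:eu")

network = PlatformNetwork(
    apni="apni:example:research-net",
    member_apids={platform_a.apid, platform_b.apid},
)

print(network.includes(platform_b))
print(network.includes(outsider))
```

Keeping the network as a set of APIDs means a platform can be added to or dropped from the "club" without touching the platform record itself.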

How platforms talk and check trust:

- APIs are like doorways with rules: they let platforms exchange information safely. Metadata (data about data) is like labels on the books and signs on the doors that clearly state the rules (who can read, where, and how).
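To make the "labels on the books" idea concrete, here is a minimal sketch of a dataset label that one platform could send to another as JSON. The summary does not give a metadata schema, so every field name and value below is an assumption chosen for illustration.

```python
import json

# Hypothetical set of fields a receiving platform would insist on.
REQUIRED_FIELDS = {
    "dataset_id",
    "access",
    "right_to_distribute",
    "authorized_networks",
    "allowed_regions",
}

# Hypothetical label for one controlled-access dataset.
dataset_label = {
    "dataset_id": "dataset:example:001",
    "access": "controlled",
    "right_to_distribute": ["apid:example:platform-a"],
    "authorized_networks": ["apni:example:research-net"],
    "allowed_regions": ["arid:example:us"],
}


def parse_label(raw: str) -> dict:
    """Decode a JSON label and reject it if any required field is absent."""
    label = json.loads(raw)
    missing = REQUIRED_FIELDS - set(label)
    if missing:
        raise ValueError(f"label is missing fields: {sorted(missing)}")
    return label


wire_format = json.dumps(dataset_label)  # what would travel through the API
received = parse_label(wire_format)
print(received["allowed_regions"])
```

Rejecting incomplete labels up front means a platform never has to guess what a missing rule was meant to be.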

Approval and safety checks:

- The paper suggests a practical approval process, similar to a safety checklist. Examples include NIH data access processes and NIST security standards. These are like inspectors confirming a room is secure and issuing an “Authority to Operate” before anyone can read the special books there.

To make a decision about sharing a dataset from Platform A to Platform B, imagine checkpoints:

- Is the user authorized to view this dataset?

- Is Platform B an authorized environment?

- Does Platform A have the right to distribute the dataset?

- Is Platform B in an allowed region (if there are geographic limits)?

If all checkpoints pass, Platform A can provide access to the data for the user in Platform B.
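The checkpoints above amount to a conjunction of four tests. The sketch below is one way to write that down, not the paper's specification: the `ShareRequest` type, its field names, and the checkpoint labels are hypothetical, and it assumes each authorization has already been established elsewhere and arrives as a simple yes/no.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ShareRequest:
    """Inputs to the four checkpoints; all field names are hypothetical."""
    user_authorized: bool            # may the user view this dataset?
    platform_b_authorized: bool      # is Platform B an authorized environment?
    platform_a_may_distribute: bool  # does Platform A hold the right to distribute?
    platform_b_region: str           # region identifier of Platform B
    allowed_regions: Optional[FrozenSet[str]] = None  # None means no geographic limit


def failed_checkpoints(req: ShareRequest) -> List[str]:
    """Return the names of the checkpoints that did not pass, in order."""
    failures = []
    if not req.user_authorized:
        failures.append("user")
    if not req.platform_b_authorized:
        failures.append("environment")
    if not req.platform_a_may_distribute:
        failures.append("right_to_distribute")
    # The region check applies only when the dataset has geographic limits.
    if req.allowed_regions is not None and req.platform_b_region not in req.allowed_regions:
        failures.append("region")
    return failures


def may_share(req: ShareRequest) -> bool:
    """Access is granted only when every checkpoint passes."""
    return not failed_checkpoints(req)


ok = ShareRequest(True, True, True, "arid:example:us", frozenset({"arid:example:us"}))
wrong_region = ShareRequest(True, True, True, "arid:example:eu", frozenset({"arid:example:us"}))

print(may_share(ok))
print(failed_checkpoints(wrong_region))
```

Returning the list of failed checkpoints, rather than a bare yes/no, gives the requesting user or platform a reason for a refusal.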

What did they find or propose, and why it matters?

Main proposal and outcome:

- SAFE is a clear, practical framework that complements FAIR. FAIR makes data good for sharing; SAFE makes cloud environments safe and authorized for analyzing that data.

- The core principle is simple: “Authorize the users, authorize the platforms, and trust the authorizations.” In other words, if the person and the platform are both known and approved, then sharing between platforms should be allowed.

- They propose standard IDs (APID, APNI, ARID) and dataset labels (metadata) to make these decisions easy and consistent.

- They outline who does what: Project Sponsors set rules and approvals; Platform Operators run the secure environments; Users follow the rules to access data.

Why it matters:

- Researchers can use the tools they prefer—even if those tools live in a different cloud than the data—without copying data all over the place.

- It protects privacy by ensuring only approved people and approved platforms handle sensitive data.

- It speeds up discoveries in health and social research because it reduces roadblocks to combining and analyzing data across platforms.

What are the implications or potential impact?

If widely adopted, SAFE could:

- Make scientific research faster and more flexible by letting trusted cloud platforms interoperate safely.

- Reduce duplicated data storage and lower risk, since data doesn’t need to be copied to many places.

- Help sponsors and institutions maintain strong privacy protections while still enabling modern, cross-platform analysis.

- Support large communities (like NIH’s cloud platforms) in building trusted networks where datasets can move securely to approved environments.

In short, combining FAIR data with SAFE environments helps the research world share sensitive data responsibly: people are approved, places are approved, and trusted platforms can work together to accelerate science.

Knowledge gaps, limitations, and open questions

A review of the paper leaves the following issues unresolved. Each bullet identifies a concrete gap or open question that future work could address:

- Minimum security baseline: What specific, testable security and privacy controls must a platform meet to be considered a SAFE environment beyond citing example frameworks (e.g., NIST SP 800-53 Moderate)? Define a normative control profile and assurance levels.

- Trust and attestation mechanism: How is the “chain of trust” established and verified between platforms (e.g., PKI, certificate authorities, mutual TLS, signed attestations, remote attestation, supply-chain attestations)? Who operates trust anchors and how are they governed?

- Revocation and compromise handling: What are the processes and protocols to revoke a platform’s authorization (APID) or network membership (APNI), rotate keys, and disseminate revocation status in near real time after incidents?

- Identity and access federation: How are user identities mapped across platforms (e.g., ORCID, institutional SSO, GA4GH AAI, OIDC/SAML)? How is authorization portable across platforms while honoring least privilege and purpose limitation?

- API specification completeness: The SAFE API is not specified (no endpoints, schemas, authentication flows, error codes, or discovery mechanisms). Provide a concrete API definition (e.g., OpenAPI) for APID/APNI/ARID exchange, authorization checks, and dataset eligibility queries.

- Registry and governance of identifiers: Who operates globally unique registries for APID, APNI, and ARID (naming rules, collision avoidance, lookup services, persistence guarantees), and how are registry operators accredited and overseen?

- Metadata schema and vocabularies: What is the standard schema for dataset-level metadata indicating right to distribute, authorized networks, and regional limits? How does it align with FAIR schemas (e.g., DCAT, schema.org) and GA4GH DUO/Consent codes?

- Policy-as-code and enforcement: How are governance rules (distribution rights, regional constraints, secondary-use restrictions) expressed, versioned, and enforced machine-readably across platforms (e.g., OPA/Rego, Cedar)?

- Enforcement of regional restrictions: How are ARID constraints technically enforced (e.g., cloud region pinning, geofencing, KMS keys, data residency controls) and auditable in multi-cloud contexts and under regulations like GDPR and HIPAA?

- Handling jurisdictional conflicts: How are cross-border legal conflicts resolved when project sponsors, platforms, and users span multiple legal regimes (e.g., Schrems II, data localization laws)?

- Dataset redistribution workflows: What is the exact mechanism for transferring data between platforms (in situ compute vs. data copy vs. federated query), including encryption-in-transit, integrity verification (checksums), and validation of receipt?

- Derived data governance: How are rights and restrictions propagated to derived datasets, intermediate files, and results exported from authorized environments? Who determines whether derivatives remain controlled?

- Provenance and versioning across transfers: How are provenance, version control, and immutability of datasets maintained and synchronized across platforms to prevent divergence and ensure reproducibility?

- Auditing, observability, and compliance: What are the minimum logging requirements, cross-platform audit trails, retention periods, and standardized formats for independent review and breach forensics?

- Incident response coordination: How are incident handling, breach notification, and coordinated vulnerability disclosure managed across platform boundaries and sponsors?

- Authorization lifecycle management: How often are authorized environments re-assessed (recertification), what triggers ad hoc reviews, and how is deauthorization propagated to block access and enforce data deletion?

- Data minimization and least privilege: What controls constrain scope of data access (e.g., row/column-level filtering, dataset shards) to reduce exposure when full transfer isn’t needed?

- Granular use restrictions enforcement: How are DUO/consent-based purpose limitations and secondary-use restrictions enforced at run time and embedded in access decisions and logs?

- Interoperability with GA4GH/EOSC standards: How does SAFE integrate with or extend GA4GH DRS, TES/WES, AAI, Passport/Visas, and EOSC frameworks? Provide mappings or profiles to avoid duplicative standards.

- Software/workflow portability and supply chain: How are containers and workflows moved safely across platforms with provenance (SBOMs, signatures), vulnerability scanning, and reproducibility guarantees (e.g., WDL/CWL portability)?

- Threat modeling and risk taxonomy: What is the explicit threat model (insider threats, credential theft, cross-tenant leakage, supply-chain attacks, exfiltration via results)? How do controls map to threats?

- Multi-tenancy isolation: What technical baselines are required for tenant isolation within authorized environments (e.g., VPC isolation, confidential computing, hardened kernels)?

- Privacy-preserving analytics: When data movement is constrained, what SAFE-compatible approaches (federated learning, secure multiparty computation, differential privacy, confidential computing) are within scope and how are they certified?

- Cost and performance implications: How do egress fees, latency, and compute costs affect SAFE-mediated interoperability, and what economic models or incentives mitigate barriers for researchers and smaller institutions?

- Equity and accessibility: What is the burden for smaller or resource-limited institutions to become SAFE environments (costs, staffing, audits), and what support models reduce disparities?

- Right-to-distribute decision criteria: What concrete criteria and extra safeguards distinguish platforms allowed to redistribute data from those that cannot, and how are these criteria audited?

- APN governance and membership: How are Authorized Platform Networks formed, governed, expanded/contracted, and how are disputes resolved among members with differing policies?

- Identifier ambiguity (ARID vs. AIRD): The manuscript ends by referring to “AIRD identifiers” although it earlier defines ARID. Which identifier is correct, and what are its semantics?

- User experience and workflow: How do users discover authorized environments for a dataset, initiate requests, and track approvals? Provide reference UX flows and integration points with DAC processes.

- Handling composite/multi-sponsor datasets: How are conflicting governance, consent, and distribution rules reconciled for datasets sourced from multiple sponsors or consents?

- Compatibility with on-prem/private clouds: What additional controls or attestations are required to include private clouds or on-prem clusters as SAFE environments, including network peering and identity integration?

- Results egress controls: How are exports of analysis outputs controlled and reviewed to prevent leakage (e.g., privacy checks, output gating, statistical disclosure control)?

- Data deletion and end-of-use: What verifiable deletion methods and attestations are required when access expires or platforms are deauthorized, including cryptographic erasure and log-backed proofs?

- Standardization and stewardship: What body maintains the SAFE specifications, versions them, manages change control, and ensures backward compatibility and conformance testing?

- Conformance testing and certification: How will platforms demonstrate SAFE compliance (test suites, audits, certifications), and who recognizes or accredits assessors?

- Quantitative evaluation plan: What metrics will assess SAFE’s effectiveness (time-to-access, breach rate reduction, interoperability success rate, researcher satisfaction), and what pilot studies or case reports will validate the framework?

- Scope boundaries: The paper states that SAFE is “not a security or compliance level,” yet it prescribes approvals. What is SAFE's precise scope, so that it is not misread as a certification and responsibilities are clearly delineated?

- Integration with consent management: How will dynamic consent updates be propagated across platforms and enforced in real time for already-transferred data?

- Handling edge cases: How are ephemeral notebook environments, BYO-cloud setups, and offline analyses treated within SAFE’s authorization and auditing model?

- Data classification granularity: Beyond open vs. controlled, what sensitivity tiers and corresponding control sets exist (e.g., high-risk genomics + EHR vs. deidentified aggregates) and how does SAFE adapt?

- Conflict resolution in authorization checks: When APN memberships, ARID constraints, or distribution rights partially overlap, what is the decision logic and who arbitrates?

- Legal liability and indemnification: How are liabilities allocated among sponsors, platform operators, and users in the event of breaches or misuse in an authorized environment?

- Education and accountability: What minimum training and attestation requirements exist for users and operators to ensure policy comprehension and accountability?

- Terminology harmonization: How does “authorized environment” relate to existing terms (e.g., ATO, Controlled Access Workspace), and can inconsistencies be resolved to prevent divergent interpretations?
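One item above, dataset redistribution workflows, mentions integrity verification with checksums. The paper leaves the transfer mechanism open, so the following is only a sketch of that single step, assuming the sending platform publishes a SHA-256 digest alongside the data. The function names are hypothetical; the `hashlib` and `hmac` calls are from the Python standard library.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the payload."""
    return hashlib.sha256(data).hexdigest()


def verify_receipt(received: bytes, expected_hex: str) -> bool:
    """True when the received bytes hash to the digest the sender published."""
    # compare_digest performs a constant-time comparison of the two hex strings.
    return hmac.compare_digest(sha256_hex(received), expected_hex)


payload = b"example dataset bytes"      # stand-in for a transferred file
published_digest = sha256_hex(payload)  # computed by the sending platform

print(verify_receipt(payload, published_digest))
print(verify_receipt(payload + b"!", published_digest))
```

A checksum only shows that the bytes arrived unchanged. It says nothing about who sent them or whether the transfer was authorized, which is why the other items in the list remain open.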
