- The paper demonstrates that high probing accuracy does not necessarily imply that linguistic features are functionally relevant to the primary task.

- It uses control datasets and synthetic experiments to separate incidental encoding, including the contribution of pre-trained word embeddings, from task-specific learning.

- The study advocates for refined probing methods, such as causal and adversarial approaches, to better distinguish incidental encoding from practical utility.

Probing the Probing Paradigm: Does Probing Accuracy Entail Task Relevance?

Introduction

This paper examines the widely used probing paradigm for analyzing neural NLP models and asks whether it can reveal which learned features matter for the task a model was trained on. It tests whether high probing accuracy implies task relevance, and how the linguistic properties encoded in a model's representations relate to what the task actually requires. The central claim is that linguistic properties can be encoded incidentally, so probing accuracy is not a reliable indicator of task-specific utility.

Methodology and Experimental Design

The study builds control datasets in which a given linguistic property carries no information about the main-task decision. Models are trained and evaluated both on these deliberately constructed datasets and on the standard data, and the probing results from the two settings are compared.

Figure 1: Illustration of our control dataset methodology for evaluating probing classifiers.

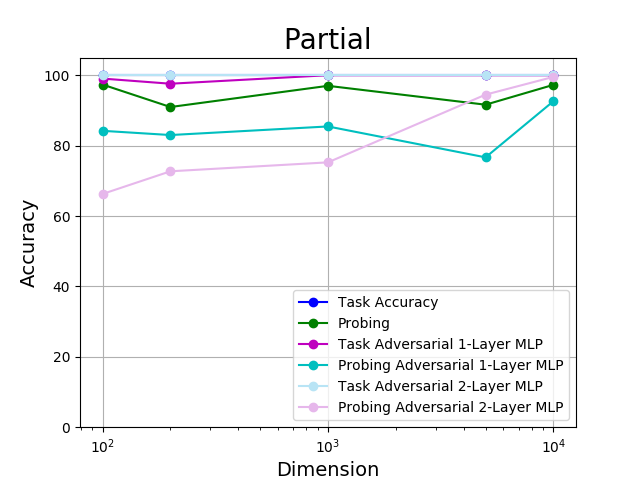

The main task is Natural Language Inference (NLI), and the auxiliary probing tasks target three linguistic features: tense, subject number, and object number. For each auxiliary task, a control dataset is built by holding the property of interest fixed across all data points, so the property cannot help the model solve the main task. Probing then tests whether the property can still be recovered from the model's representations.
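The control-dataset construction can be sketched as a simple filter. This is a minimal illustration under stated assumptions, not the paper's pipeline: the `NLIExample` record, its hypothetical `tense` annotation, and the helpers `control_split` and `is_uninformative` are all illustrative names, and the four sentences are toy data. The key point is that the main-task training split holds the property constant, while the probing data must still let it vary.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class NLIExample:
    premise: str
    hypothesis: str
    label: str  # "entailment", "neutral", or "contradiction"
    tense: str  # hypothetical property annotation, e.g. "past" or "present"


def control_split(examples, prop, value):
    """Keep only examples whose property `prop` equals `value`.

    The property is constant in the result, so it carries no
    information about the NLI label and cannot help the main task.
    """
    return [ex for ex in examples if getattr(ex, prop) == value]


def is_uninformative(examples, prop):
    """True when the property takes at most one value in the split."""
    return len({getattr(ex, prop) for ex in examples}) <= 1


# Toy examples for illustration only.
data = [
    NLIExample("A man ran.", "A person moved.", "entailment", "past"),
    NLIExample("A man runs.", "Nobody runs.", "contradiction", "present"),
    NLIExample("She sang.", "She was loud.", "neutral", "past"),
    NLIExample("She sings.", "A woman sings.", "entailment", "present"),
]

# Main-task training data with tense held fixed.
train_control = control_split(data, "tense", "past")
# Probing data: tense must vary, or there is nothing for the probe to predict.
probe_data = data

print(len(train_control), is_uninformative(train_control, "tense"))
print(Counter(ex.label for ex in train_control))
print(is_uninformative(probe_data, "tense"))
```

Note that the NLI labels still vary inside the control split, so the main task remains learnable; only the probed property has been made useless for it.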

Key Findings

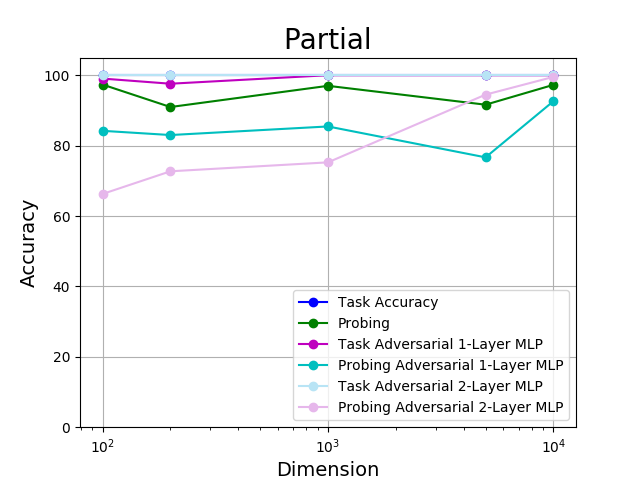

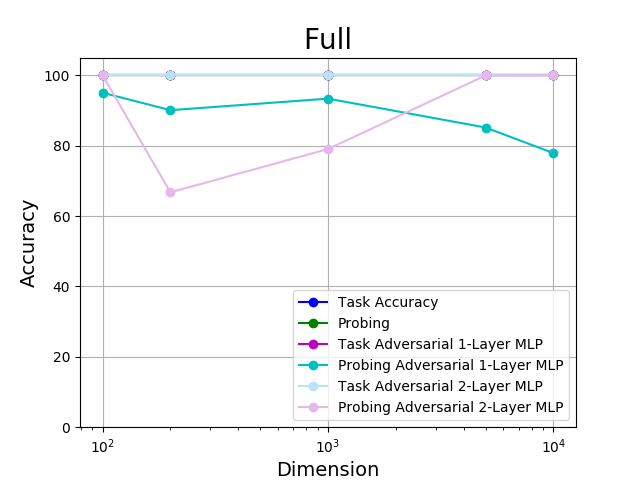

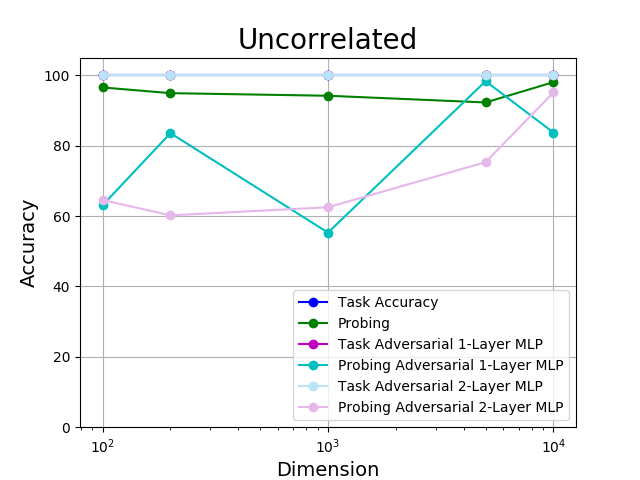

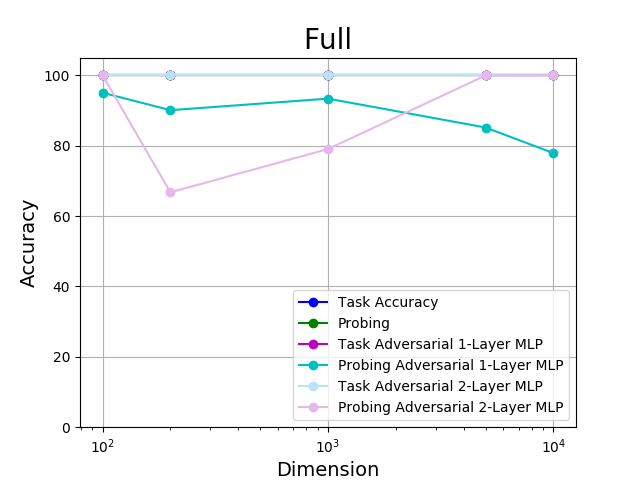

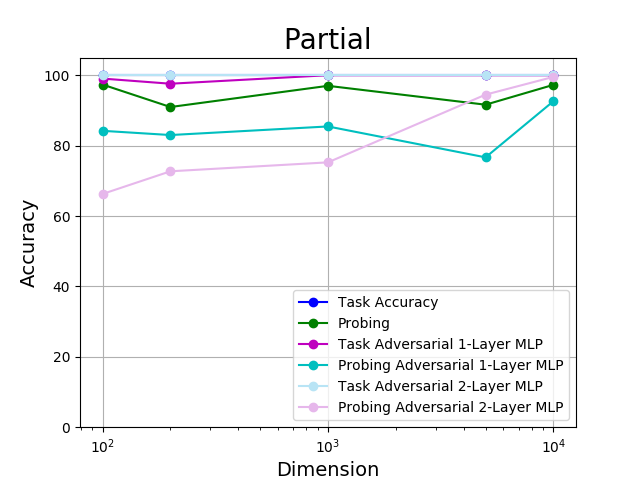

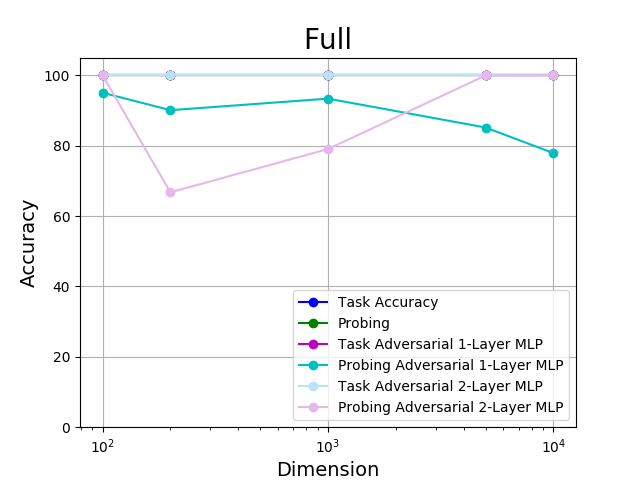

- Probing Accuracy vs. Task Utility: Models consistently achieve high probing accuracy for linguistic properties that the main task does not require. This calls into question inferring task relevance from probing performance alone: a property can be learned incidentally and still be easy to decode.

- Role of Word Embeddings: Pre-trained word embeddings contribute significantly to the encoding of linguistic properties in neural representations, often independently of main-task learning. This confounds the interpretation of probing accuracy and makes it necessary to disentangle the contribution of the embeddings from what the model learns for the task.

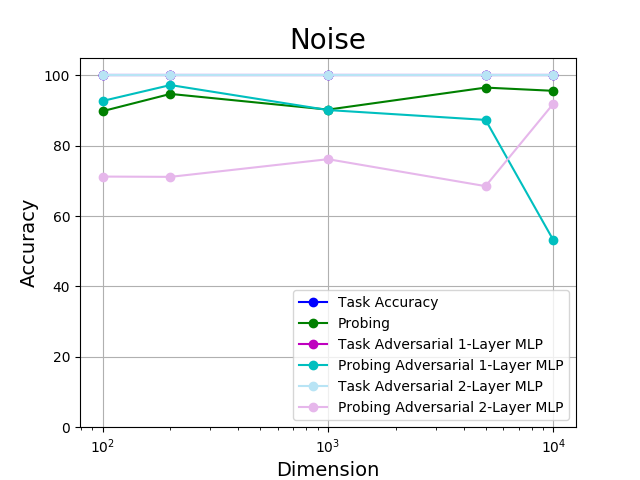

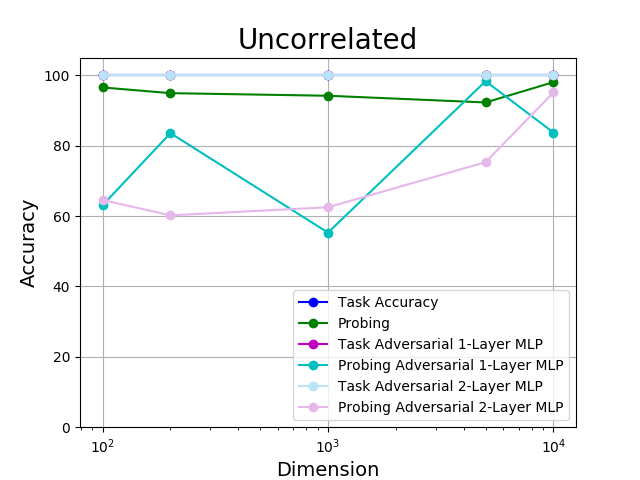

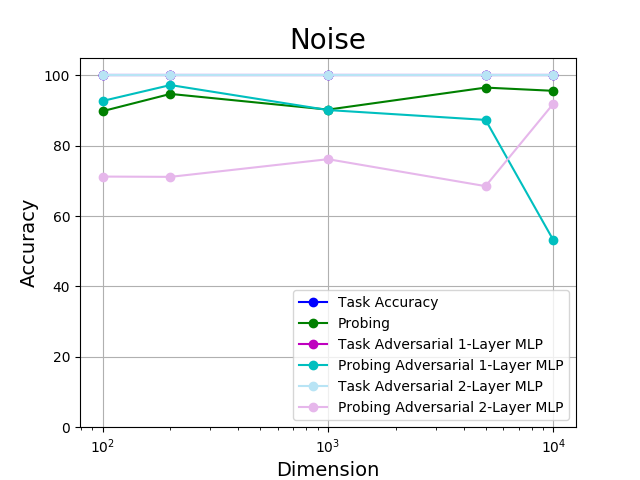

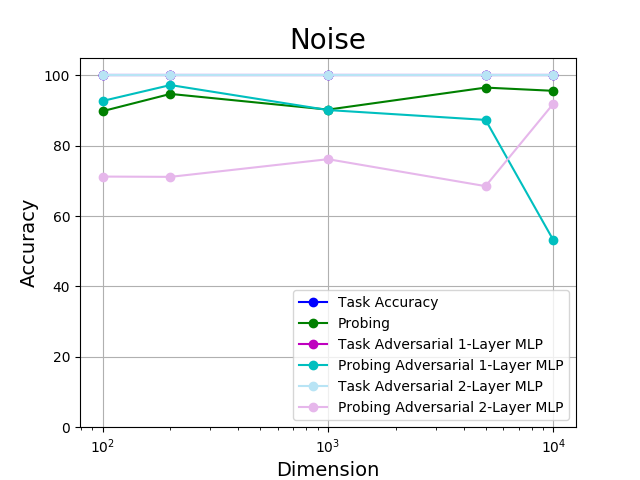

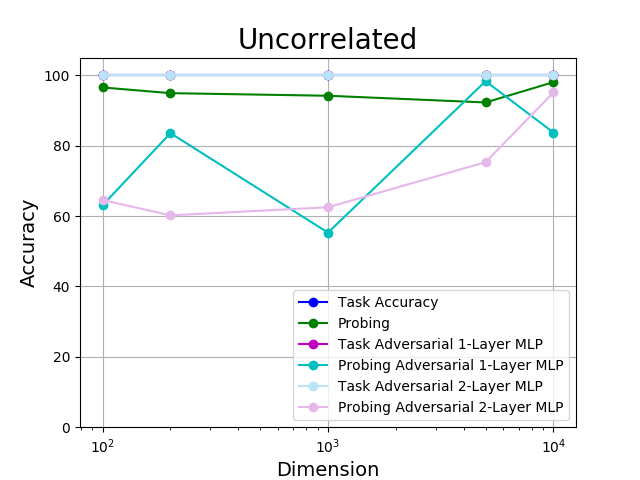

- Synthetic Experiments: Synthetic datasets show properties being encoded incidentally while models are trained on a task. Even random noise could be recovered from the trained representations, which indicates that probed features need not contribute to task performance and may be entirely extraneous.
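The trap described in these findings can be reproduced in a few lines of NumPy. This is a toy simulation under our own assumptions, not the paper's experimental setup: the "representations" are random vectors into which we write a task label on one dimension and an unrelated random property on another, and the probe is a plain logistic-regression classifier fit by gradient descent (`train_linear_probe`, `probe_accuracy`, and `majority_baseline` are hypothetical helper names).

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 2000, 16

# Toy "sentence representations" (an assumption, not real model output):
# the task label is written into dimension 0, and an unrelated random
# binary property is written into dimension 1 (incidental encoding).
task_label = rng.integers(0, 2, n)
noise_prop = rng.integers(0, 2, n)
X = rng.normal(size=(n, d))
X[:, 0] += 3.0 * (2 * task_label - 1)
X[:, 1] += 3.0 * (2 * noise_prop - 1)


def train_linear_probe(X, y, lr=0.1, epochs=300):
    """Logistic-regression probe fit by full-batch gradient descent."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(y = 1)
        grad = p - y                              # d(log loss)/d(logit)
        w -= lr * X.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b


def probe_accuracy(X, y, w, b):
    return float(((X @ w + b > 0).astype(int) == y).mean())


def majority_baseline(y):
    """Accuracy of always predicting the more frequent class."""
    return float(max(y.mean(), 1.0 - y.mean()))


# Train the probe on one half, evaluate on the held-out half.
split = n // 2
w, b = train_linear_probe(X[:split], noise_prop[:split])
acc = probe_accuracy(X[split:], noise_prop[split:], w, b)
corr = np.corrcoef(noise_prop, task_label)[0, 1]

print(f"probe accuracy on noise property: {acc:.2f}")
print(f"majority-class baseline:          {majority_baseline(noise_prop[split:]):.2f}")
print(f"correlation with task label:      {corr:+.2f}")
```

The probe decodes the noise property far above the majority baseline even though that property is essentially uncorrelated with the task label. High probing accuracy here shows only that the information is present in the representation, not that anything downstream uses it.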

Practical Implications and Recommendations

These findings matter for how NLP models are analyzed. Probing results should be interpreted cautiously and backed by carefully constructed baselines and controls. The synthetic-noise experiments further show that models capture irrelevant attributes, so decodable information can easily be mistaken for a learned competence.

Figure 2: Main-task and probing accuracy as a function of the capacity of the sentence representation (number of units).

Future Directions

Advancing this line of inquiry requires probing techniques that better isolate task-relevant properties. One suggestion is to use causal methods to distinguish incidentally encoded properties from those essential to a model's decision process. More broadly, because probing alone cannot establish whether a model relies on a property, methods based on controlled interventions and adversarial suppression could help clarify the real utility of linguistic features in model learning.
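A controlled intervention of the kind suggested here can be sketched with a linear projection. The technique below is swapped in for illustration and is not the paper's method: a single rank-1 nullspace projection, in the spirit of amnesic probing (which iterates such projections via iterative nullspace projection, INLP). The toy data, the class-mean classifier, and the helper names `fit_mean_classifier`, `accuracy`, and `project_out` are all assumptions. In a real study the intervention would be applied inside the trained network and the network's own main-task accuracy measured; here a frozen linear rule stands in for the model.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d = 2000, 16

# Toy setup (an assumption): dimension 0 carries the task label,
# dimension 1 carries a property the task does not need.
task_label = rng.integers(0, 2, n)
prop = rng.integers(0, 2, n)
X = rng.normal(size=(n, d))
X[:, 0] += 3.0 * (2 * task_label - 1)
X[:, 1] += 3.0 * (2 * prop - 1)


def fit_mean_classifier(X, y):
    """Linear classifier: project onto the class-mean difference, threshold at the midpoint."""
    m1, m0 = X[y == 1].mean(axis=0), X[y == 0].mean(axis=0)
    return m1 - m0, (m1 + m0) / 2


def accuracy(X, y, clf):
    direction, midpoint = clf
    pred = ((X - midpoint) @ direction > 0).astype(int)
    return float((pred == y).mean())


def project_out(X, v):
    """Remove each row's component along v (a rank-1 nullspace projection)."""
    u = v / np.linalg.norm(v)
    return X - np.outer(X @ u, u)


# Stand-in for the trained model's decision rule; kept frozen below.
task_clf = fit_mean_classifier(X, task_label)

# Intervention: delete the direction a probe uses to read the property.
prop_dir, _ = fit_mean_classifier(X, prop)
X_amnesic = project_out(X, prop_dir)

for name, Z in [("original", X), ("property removed", X_amnesic)]:
    prop_acc = accuracy(Z, prop, fit_mean_classifier(Z, prop))  # fresh probe
    task_acc = accuracy(Z, task_label, task_clf)                # frozen task rule
    print(f"{name:>16}: property probe {prop_acc:.2f}, task {task_acc:.2f}")
```

After the projection, a freshly fit probe falls to roughly chance on the property, while the frozen task rule keeps its accuracy. In this toy case that is the signature of incidental encoding; a clear drop in task accuracy would instead be evidence that the model relies on the removed information.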

Conclusion

This critique of the probing methodology exposes its limitations as a diagnostic tool for understanding how models work. The paper underscores the importance of distinguishing between the mere presence of a linguistic property in a representation and its functional necessity, and it calls for methodologies that can turn probing results into reliable evidence of task relevance.