BERT (Bidirectional Encoder Representations from Transformers) is a language representation model pre-trained on large unlabeled text corpora using a Transformer-based architecture. Its primary contribution lies in pre-training deep bidirectional representations by jointly conditioning on both left and right context across all layers, addressing limitations of prior unidirectional or shallow bidirectional approaches (Devlin et al., 2018). This allows the pre-trained model to be fine-tuned for a wide array of downstream NLP tasks by adding minimal task-specific layers, and it achieved state-of-the-art results on numerous benchmarks upon its release.

Model Architecture

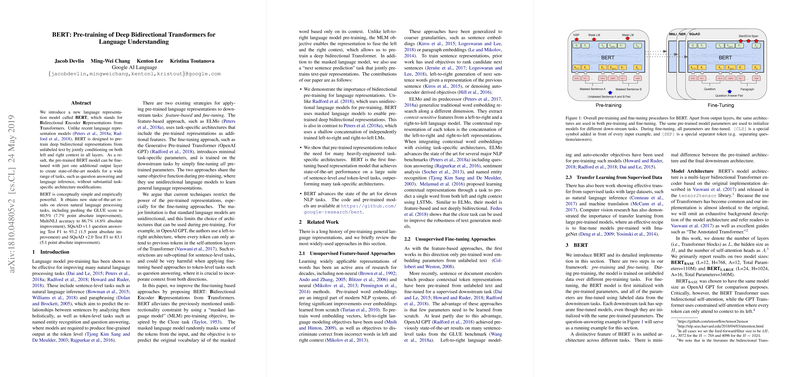

BERT's architecture is fundamentally a multi-layer bidirectional Transformer encoder, based on the original Transformer model proposed by Vaswani et al. (2017). It deviates from the full Transformer by omitting the decoder stack, as its goal is representation learning rather than sequence transduction. Two primary configurations were introduced:

- BERT-Base: L=12, H=768, A=12, Total Parameters=110M

- BERT-Large: L=24, H=1024, A=16, Total Parameters=340M

Here L is the number of Transformer blocks (layers), H is the hidden size, and A is the number of self-attention heads.

Each Transformer block employs a multi-head self-attention mechanism followed by a position-wise feed-forward network. Residual connections and layer normalization are applied around each sub-layer. What enables bidirectionality during pre-training is not an architectural change but the Masked Language Model (MLM) objective, which modifies the standard language modeling task to allow simultaneous conditioning on context from both directions without letting the target token "see itself" indirectly through stacked self-attention layers.
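As a rough sanity check on the two configurations, the parameter totals can be estimated from L and H alone. The sketch below is an approximation under stated assumptions, not the reference implementation: it assumes a vocabulary of exactly 30,000 tokens, 512 positions, 2 segment types, a feed-forward inner size of 4H, and a pooling layer on top of the [CLS] state. The function name `bert_param_count` is illustrative.

```python
def bert_param_count(num_layers, hidden, vocab_size=30000,
                     max_positions=512, num_segments=2):
    """Approximate parameter count of a BERT-style encoder (assumed layout)."""
    # Token, position, and segment embedding tables, plus one LayerNorm
    # (scale and bias) applied to their sum.
    embeddings = (vocab_size + max_positions + num_segments) * hidden + 2 * hidden

    # Self-attention: query, key, value, and output projections, each with a bias.
    attention = 4 * (hidden * hidden + hidden)

    # Position-wise feed-forward network with an assumed inner size of 4H.
    inner = 4 * hidden
    feed_forward = (hidden * inner + inner) + (inner * hidden + hidden)

    # Two LayerNorms per block, one after each sub-layer.
    layer_norms = 2 * (2 * hidden)

    per_layer = attention + feed_forward + layer_norms

    # Dense pooling layer applied to the [CLS] hidden state (assumption).
    pooler = hidden * hidden + hidden

    return embeddings + num_layers * per_layer + pooler


base = bert_param_count(num_layers=12, hidden=768)
large = bert_param_count(num_layers=24, hidden=1024)
print(f"BERT-Base  ~ {base / 1e6:.0f}M parameters")
print(f"BERT-Large ~ {large / 1e6:.0f}M parameters")
```

Under these assumptions the estimates land close to the reported 110M and 340M. Note that the number of attention heads A does not enter the count, since the heads partition the same H-dimensional projections.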

Pre-training Procedure

BERT's effectiveness stems from its novel pre-training phase, which utilizes two unsupervised tasks performed jointly on large text corpora (BookCorpus: 800M words, English Wikipedia: 2,500M words).

Masked Language Model (MLM)

To enable deep bidirectional conditioning, the MLM task modifies the standard language modeling objective. Instead of predicting the next word based on previous words (left-to-right), BERT predicts randomly masked tokens within the input sequence based on their surrounding context (left and right). The masking procedure is as follows:

- Selection: 15% of the WordPiece tokens in each sequence are randomly selected for potential replacement.

- Replacement Strategy:

  - 80% of the selected tokens are replaced with a special [MASK] token.

  - 10% of the selected tokens are replaced with a random token from the vocabulary.

  - 10% of the selected tokens remain unchanged.

This strategy serves two purposes:

- It forces the model to learn contextual representations to predict the original masked tokens.

- It mitigates the mismatch between pre-training (where [MASK] tokens are present) and fine-tuning (where they are typically absent) by occasionally presenting the model with corrupted (random token) or correct tokens at the positions designated for prediction.
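The selection and 80/10/10 replacement rule can be sketched in a few lines of Python. This is a simplified illustration, not the original preprocessing code: it selects each token independently with probability 0.15 rather than choosing a fixed 15% of positions, it skips the special tokens by assumption, and the names `mask_tokens` and `vocab` are hypothetical.

```python
import random

MASK = "[MASK]"
SPECIAL_TOKENS = ("[CLS]", "[SEP]")


def mask_tokens(tokens, vocab, select_prob=0.15, rng=None):
    """Return (corrupted_tokens, labels) following the 80/10/10 rule.

    labels[i] holds the original token at selected positions and None
    everywhere else, so a loss can be restricted to selected positions.
    """
    rng = rng or random.Random()
    corrupted = list(tokens)
    labels = [None] * len(tokens)

    for i, token in enumerate(tokens):
        # Assumption of this sketch: special tokens are never selected.
        if token in SPECIAL_TOKENS:
            continue
        # Independent selection with probability select_prob (simplification).
        if rng.random() >= select_prob:
            continue

        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # Remaining 10%: leave the token unchanged.

    return corrupted, labels


tokens = ["[CLS]", "my", "dog", "is", "hairy", "[SEP]"]
toy_vocab = ["apple", "run", "blue", "dog", "is"]
print(mask_tokens(tokens, toy_vocab, rng=random.Random(0)))
```

Because the model is asked to predict the original token at every selected position, including those left unchanged, it cannot assume that an unmasked token is correct.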

The final hidden vectors corresponding to the masked token positions are fed into an output softmax layer over the vocabulary, identical to a standard LM classifier. The MLM loss is the cross-entropy loss computed only on the prediction of the masked tokens.
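The restriction of the loss to masked positions can be illustrated with a small pure-Python sketch. It assumes the model has already produced one vector of vocabulary scores per position. The names `mlm_loss` and `target_ids` are illustrative, and using `None` to mark unselected positions is a convention of this sketch only.

```python
import math


def log_softmax(scores):
    """Numerically stable log-softmax over a list of scores."""
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_z for s in scores]


def mlm_loss(logits, target_ids):
    """Mean cross-entropy over the selected (masked) positions only.

    logits:     one list of vocabulary scores per input position
    target_ids: vocabulary index of the original token at selected
                positions, None at positions that were not selected
    """
    losses = []
    for scores, target in zip(logits, target_ids):
        if target is None:
            continue  # unselected positions contribute nothing to the loss
        losses.append(-log_softmax(scores)[target])
    return sum(losses) / len(losses) if losses else 0.0


# Three positions over a toy vocabulary of size 3; only the last two were selected.
logits = [[2.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 3.0, 0.0]]
targets = [None, 2, 1]
print(mlm_loss(logits, targets))
```

Since only about 15% of positions contribute to the loss in each sequence, the model receives fewer training signals per batch than a left-to-right language model would.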

Next Sentence Prediction (NSP)

To equip the model with an understanding of sentence relationships, crucial for tasks like Question Answering (QA) and Natural Language Inference (NLI), the NSP task is introduced. During pre-training, the model receives pairs of sentences (A, B) as input and predicts whether sentence B is the actual sentence that follows sentence A in the original corpus (IsNext) or just a random sentence (NotNext).

- Data Generation: For each pre-training example, 50% of the time sentence B is the actual subsequent sentence to A, and 50% of the time it is a random sentence sampled from the corpus.

- Prediction: The output corresponding to the special [CLS] token (prepended to every input sequence) is passed through a simple binary classification layer to predict the IsNext/NotNext label.

The NSP loss is the binary cross-entropy loss from this classification. The total pre-training loss is the sum of the mean MLM loss and the mean NSP loss.
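A minimal sketch of the 50/50 pair generation and the combined loss is given below. It makes assumptions that go beyond the description above: the corpus is a list of documents, each a list of sentence strings, there are at least two non-empty documents, and the random sentence is drawn from a different document so that it cannot accidentally be the true successor. The function names are hypothetical.

```python
import random


def make_nsp_pairs(documents, rng=None):
    """Build (sentence_a, sentence_b, label) examples for NSP.

    documents: list of documents, each a list of sentences.
    Requires at least two non-empty documents.
    """
    rng = rng or random.Random()
    examples = []
    for doc_idx, doc in enumerate(documents):
        for i in range(len(doc) - 1):
            if rng.random() < 0.5:
                # 50%: B is the sentence that actually follows A.
                examples.append((doc[i], doc[i + 1], "IsNext"))
            else:
                # 50%: B is a random sentence from another document (assumption).
                others = [j for j in range(len(documents)) if j != doc_idx]
                random_doc = documents[rng.choice(others)]
                examples.append((doc[i], rng.choice(random_doc), "NotNext"))
    return examples


def pretraining_loss(mean_mlm_loss, mean_nsp_loss):
    """Total pre-training loss: the sum of the two mean losses."""
    return mean_mlm_loss + mean_nsp_loss


corpus = [
    ["the man went to the store", "he bought a gallon of milk", "then he went home"],
    ["penguins are flightless birds", "they live in the southern hemisphere"],
]
for example in make_nsp_pairs(corpus, rng=random.Random(0)):
    print(example)
```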

Input Representation

The input representation for a given sequence (or pair of sequences) in BERT is constructed by summing three types of embeddings:

- Token Embeddings: Learned embeddings for each token in the vocabulary, derived using WordPiece tokenization with a vocabulary size of approximately 30,000.

- Segment Embeddings: Learned embeddings (E_A or E_B) indicating whether a token belongs to the first sentence (A) or the second sentence (B) in a pair. This is crucial for the NSP task and sentence-pair classification tasks during fine-tuning. For single-sentence inputs, only E_A is used.

- Position Embeddings: Learned embeddings representing the position of each token in the sequence. Unlike the sinusoidal positional encodings used in the original Transformer, BERT learns these positional embeddings, supporting sequence lengths up to 512 tokens.

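The final input embedding for a token is the element-wise sum of the three: $E = E_{\text{token}} + E_{\text{segment}} + E_{\text{position}}$.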

Special tokens are used:

- [CLS]: Prepended to every input sequence. Its final hidden state is used as the aggregate sequence representation for classification tasks.

- [SEP]: Used to separate sentences in a pair and appended at the end of each sequence.
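The way a sentence pair is laid out with special tokens, segment ids, and position ids can be sketched as follows. The inputs are assumed to be already tokenized into WordPiece tokens, and `build_inputs` and `sum_embeddings` are illustrative names rather than functions from any library.

```python
def build_inputs(tokens_a, tokens_b=None, max_len=512):
    """Lay out one sequence or a sequence pair in BERT's input format."""
    tokens = ["[CLS]"] + list(tokens_a) + ["[SEP]"]
    segment_ids = [0] * len(tokens)            # segment A, including [CLS] and first [SEP]

    if tokens_b is not None:
        tokens += list(tokens_b) + ["[SEP]"]
        segment_ids += [1] * (len(tokens_b) + 1)  # segment B, including final [SEP]

    if len(tokens) > max_len:
        raise ValueError(f"sequence of {len(tokens)} tokens exceeds {max_len}")

    position_ids = list(range(len(tokens)))
    return tokens, segment_ids, position_ids


def sum_embeddings(token_vec, segment_vec, position_vec):
    """Element-wise sum of the three embedding vectors for one token."""
    return [t + s + p for t, s, p in zip(token_vec, segment_vec, position_vec)]


tokens, segment_ids, position_ids = build_inputs(
    ["my", "dog", "is", "cute"], ["he", "likes", "play", "##ing"]
)
for row in zip(position_ids, segment_ids, tokens):
    print(row)
```

In a full model, each of the three ids would index its own learned embedding table, and `sum_embeddings` would be applied per token to the three looked-up vectors.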

Fine-tuning BERT

A significant advantage of BERT is its applicability to diverse downstream tasks with minimal architectural modifications. The pre-trained parameters provide a strong initialization, and fine-tuning involves updating all parameters end-to-end using task-specific labeled data.

The fine-tuning procedure generally involves:

- Input Formatting: Representing the task-specific inputs as sequences or sequence pairs suitable for BERT (e.g., premise-hypothesis pair for NLI, question-passage pair for QA, single sentence for sentiment classification).

- Output Layer: Adding a simple task-specific output layer on top of the pre-trained BERT model.

  - Sequence-level tasks (e.g., GLUE, sentiment analysis): A classification layer is added on top of the final hidden state corresponding to the [CLS] token, $C \in \mathbb{R}^{H}$. The output probability is $P = \mathrm{softmax}(C W^{T})$, where $W \in \mathbb{R}^{K \times H}$ is the task-specific parameter matrix and $K$ is the number of labels.

  - Token-level tasks (e.g., Named Entity Recognition - NER, SQuAD QA): An output layer is added on top of the final hidden states of each token, $T_i \in \mathbb{R}^{H}$. For NER, this could be a per-token classifier. For SQuAD, start and end span prediction layers are added, calculating dot products between token representations and learned start/end vectors.

- End-to-End Training: Fine-tuning all parameters (BERT's and the added layer's) to minimize the task-specific loss function. Hyperparameters like learning rate (e.g., 5e-5, 3e-5, 2e-5), batch size (e.g., 16, 32), and number of epochs (e.g., 2-4) are typically explored during fine-tuning.
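The two kinds of output layer described in the list above can be sketched without any deep learning framework. The example assumes the encoder has already produced the final hidden vectors. The sequence-level head applies a softmax to the product of the [CLS] vector and a task weight matrix with one row per label, while the span head scores a candidate span as the start vector's dot product with its first token plus the end vector's dot product with its last token. The `max_answer_len` cap and all function names are assumptions of this sketch.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(cls_vector, weight):
    """Sequence-level head: softmax over one logit per label.

    weight has K rows (one per label), each of length H.
    """
    return softmax([dot(row, cls_vector) for row in weight])


def best_span(token_vectors, start_vec, end_vec, max_answer_len=30):
    """Token-level span head: return (start, end) with the highest score.

    The score of a span (i, j) with i <= j is
    dot(start_vec, T_i) + dot(end_vec, T_j).
    """
    start_scores = [dot(start_vec, t) for t in token_vectors]
    end_scores = [dot(end_vec, t) for t in token_vectors]
    best_score, best_pair = float("-inf"), None
    for i, start_score in enumerate(start_scores):
        for j in range(i, min(len(token_vectors), i + max_answer_len)):
            score = start_score + end_scores[j]
            if score > best_score:
                best_score, best_pair = score, (i, j)
    return best_pair


# Toy hidden size H=2 with K=2 labels.
print(classify([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))
print(best_span([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]], [1.0, 0.0], [0.0, 1.0]))
```

In both cases the added parameters are small relative to the encoder: a K x H matrix for classification, or two H-dimensional vectors for span prediction.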

Compared to pre-training, fine-tuning is computationally inexpensive.
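Because each fine-tuning run is cheap, an exhaustive search over the suggested hyperparameter values is practical. The sketch below enumerates the grid from the example values mentioned above, taking the epoch range 2-4 as the values 2, 3, and 4. The `evaluate` callable is a hypothetical stand-in for a function that fine-tunes with a given configuration and returns a development-set score.

```python
from itertools import product

LEARNING_RATES = [5e-5, 3e-5, 2e-5]
BATCH_SIZES = [16, 32]
NUM_EPOCHS = [2, 3, 4]


def fine_tuning_grid():
    """Enumerate every combination of the suggested hyperparameter values."""
    return [
        {"learning_rate": lr, "batch_size": bs, "num_epochs": ep}
        for lr, bs, ep in product(LEARNING_RATES, BATCH_SIZES, NUM_EPOCHS)
    ]


def select_best(configs, evaluate):
    """Pick the configuration with the highest score.

    evaluate is a hypothetical callable: config dict -> dev-set score.
    """
    return max(configs, key=evaluate)


grid = fine_tuning_grid()
print(len(grid), "configurations")

# Placeholder scoring function for illustration only; a real one would
# fine-tune the model and measure dev-set performance.
best = select_best(grid, evaluate=lambda cfg: -abs(cfg["learning_rate"] - 3e-5))
print(best)
```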

Experimental Results and Ablations

BERT demonstrated significant improvements across a wide range of NLP tasks:

- GLUE: Achieved a score of 80.5% on the GLUE benchmark test set, a 7.7% absolute improvement over the previous state-of-the-art.

- MultiNLI: Reached 86.7% accuracy on the matched (MNLI-m) set, a 4.6% absolute improvement.

- SQuAD v1.1: Obtained a Test F1 score of 93.2, improving upon the previous best model by 1.5 points. BERT-Large ensemble achieved 94.4 F1.

- SQuAD v2.0: Achieved a Test F1 score of 83.1, a 5.1 point absolute improvement, demonstrating its capability in handling tasks with "no answer" options.

Ablation studies confirmed the importance of the design choices:

- MLM vs. Left-to-Right: A model pre-trained only with a standard Left-to-Right LM objective (like GPT) but using the BERT architecture performed significantly worse than BERT with MLM, highlighting the benefit of deep bidirectionality. A shallow concatenation approach (like ELMo) also underperformed BERT.

- NSP Task: Removing the NSP task degraded performance significantly on QNLI, MNLI, and SQuAD 1.1, supporting its value for capturing sentence relationships, although later work (e.g., RoBERTa) questioned its necessity and proposed alternatives.

- Model Size: BERT-Large consistently outperformed BERT-Base across tasks, especially those with smaller datasets, indicating the benefits of increased model capacity when sufficient pre-training data is available. Training longer also improved performance.

Implementation Considerations

- Pre-training: Requires substantial computational resources. The paper reports pre-training BERT-Base on 4 Cloud TPUs (16 TPU chips total) took 4 days, while BERT-Large on 16 Cloud TPUs (64 TPU chips total) also took 4 days. Access to large, clean text corpora is essential.

- Fine-tuning: Relatively fast and feasible on standard hardware (e.g., a single modern GPU). Requires careful hyperparameter tuning, though the suggested ranges often work well.

- Model Availability: Google released pre-trained models (Base and Large, cased and uncased) and the TensorFlow implementation. Subsequently, ports and further pre-trained variants became widely available through libraries like Hugging Face's Transformers (PyTorch and TensorFlow).

- Maximum Sequence Length: Limited to 512 tokens due to the learned positional embeddings. Longer documents require truncation or segmentation strategies.

- [MASK] Token Discrepancy: The [MASK] token used during MLM pre-training is absent during fine-tuning, creating a potential mismatch. The 80/10/10 masking strategy aims to alleviate this.

- Static Masking: The original implementation used static masking (each training instance masked the same way across epochs). Dynamic masking (re-masking instances each epoch) was explored later and found beneficial.
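One common segmentation strategy for documents longer than 512 tokens is a sliding window with overlap, so that text near a boundary appears with context in at least one window. The sketch below is one possible approach rather than anything prescribed by the paper. The `overlap` default of 128 tokens is an arbitrary illustrative value, and `split_into_windows` is a hypothetical helper name.

```python
def split_into_windows(tokens, max_len=512, overlap=128):
    """Split a token list into overlapping windows that fit the length limit.

    Each window reserves two slots for [CLS] and [SEP]. Consecutive
    windows share `overlap` tokens.
    """
    body_len = max_len - 2
    if not 0 <= overlap < body_len:
        raise ValueError("overlap must be non-negative and smaller than max_len - 2")

    step = body_len - overlap
    windows = []
    start = 0
    while True:
        chunk = list(tokens[start:start + body_len])
        windows.append(["[CLS]"] + chunk + ["[SEP]"])
        if start + body_len >= len(tokens):
            break  # this window reached the end of the document
        start += step
    return windows


document = [f"tok{i}" for i in range(1000)]
windows = split_into_windows(document)
print(len(windows), [len(w) for w in windows])
```

Predictions from the individual windows then have to be merged by a task-specific rule, for example by taking the highest-scoring answer span across windows.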

Conclusion

BERT represented a significant advancement in transfer learning for NLP by effectively pre-training deep bidirectional representations using the Transformer architecture and the innovative Masked Language Model objective. Its simple yet powerful fine-tuning paradigm allowed a single pre-trained model to achieve state-of-the-art results on a diverse set of downstream tasks with minimal task-specific modifications, paving the way for subsequent developments in large-scale pre-trained language models.