The paper "Attention Is All You Need" (Vaswani et al., 2017) introduced the Transformer architecture, a sequence transduction model based entirely on attention mechanisms, eschewing recurrence and convolution. This approach aimed to improve parallelization and reduce training time while achieving state-of-the-art results in tasks like machine translation.

Architecture Overview

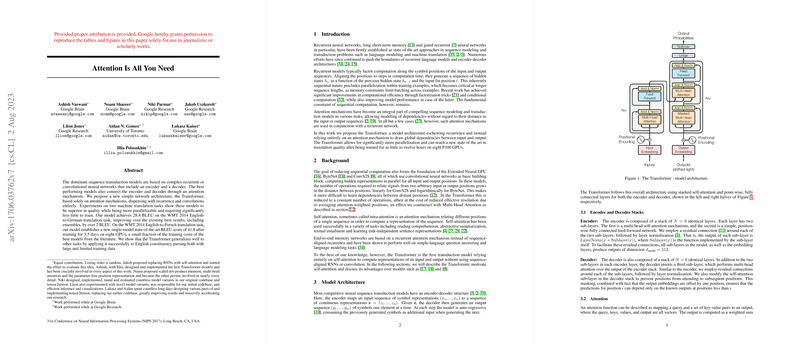

The Transformer follows an encoder-decoder structure, common in sequence-to-sequence tasks. Both the encoder and decoder are composed of a stack of identical layers.

- Encoder: The encoder stack consists of $N = 6$ identical layers. Each layer has two primary sub-layers: a multi-head self-attention mechanism and a position-wise fully connected feed-forward network. A residual connection is employed around each of the two sub-layers, followed by layer normalization. The output of each sub-layer is $\text{LayerNorm}(x + \text{Sublayer}(x))$, where $\text{Sublayer}(x)$ is the function implemented by the sub-layer itself. All sub-layers in the model, as well as the embedding layers, produce outputs of dimension $d_{\text{model}} = 512$.

- Decoder: The decoder stack also consists of $N = 6$ identical layers. In addition to the two sub-layers found in the encoder layer, the decoder inserts a third sub-layer, which performs multi-head attention over the output of the encoder stack. Similar to the encoder, residual connections and layer normalization are applied around each sub-layer. The self-attention sub-layer in the decoder stack is modified to prevent positions from attending to subsequent positions, ensuring the auto-regressive property. This is achieved by masking out (setting to $-\infty$) all values in the input of the softmax corresponding to illegal connections.
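The residual-plus-normalization wrapper described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: it works on a single position vector stored as a plain Python list, it omits the learned gain and bias that layer normalization normally carries, and both `toy_sublayer` and the `eps` value are assumptions made for the example.

```python
import math

def layer_norm(x, eps=1e-6):
    """Normalize one position's feature vector to zero mean and unit variance.

    The learned gain and bias are left out to keep the sketch short.
    """
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def residual_sublayer(x, sublayer):
    """Return LayerNorm(x + Sublayer(x)) for a single position vector x."""
    y = sublayer(x)
    return layer_norm([a + b for a, b in zip(x, y)])

def toy_sublayer(x):
    """Hypothetical stand-in for an attention or feed-forward sub-layer."""
    return [2.0 * v for v in x]

out = residual_sublayer([1.0, 2.0, 3.0, 4.0], toy_sublayer)
print(out)
```

Because the sub-layer output is added back to its input, the sub-layer must preserve the vector length, which is why every sub-layer and embedding in the model shares one output dimension.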

Figure 1: Transformer Model Architecture. On the encoder side, the inputs pass through an input embedding plus positional encoding (PE) into the encoder stack (N layers, each containing multi-head self-attention and a feed-forward network, each followed by Add & Norm). On the decoder side, the output embedding plus PE feeds the decoder stack (N layers, each containing masked multi-head self-attention, multi-head attention over the encoder output, and a feed-forward network, each followed by Add & Norm), whose output passes through a linear layer and a softmax to produce the output probabilities.

Attention Mechanisms

The core of the Transformer lies in its attention mechanisms, specifically Scaled Dot-Product Attention and Multi-Head Attention.

Scaled Dot-Product Attention

The input consists of queries and keys of dimension $d_k$, and values of dimension $d_v$. The attention output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key.

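The formula is:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$

The dot products $QK^T$ compute the compatibility between each query and each key. Scaling by $\frac{1}{\sqrt{d_k}}$ prevents the dot products from growing too large in magnitude, which could push the softmax function into regions with extremely small gradients.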
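As a rough sketch of this computation, the function below applies scaled dot-product attention to small matrices stored as lists of rows. Plain lists are used only to keep the example self-contained; a real implementation would use a tensor library and batched matrix products. The toy `Q`, `K`, and `V` values are assumptions chosen for illustration.

```python
import math

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(K[0])
    d_v = len(V[0])
    output = []
    for q in Q:
        # Compatibility of this query with every key, scaled by 1/sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted sum of the value rows.
        output.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(d_v)])
    return output

Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
out = scaled_dot_product_attention(Q, K, V)
print(out)
```

Each output row is a convex combination of the rows of `V`, weighted toward the value whose key best matches the query.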

Multi-Head Attention

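Instead of performing a single attention function with $d_{\text{model}}$-dimensional keys, values, and queries, the authors found it beneficial to linearly project the queries, keys, and values $h$ times with different, learned linear projections to $d_k$, $d_k$, and $d_v$ dimensions, respectively. Attention is then performed in parallel on each of these projected versions. The outputs of the heads are concatenated and once again projected, resulting in the final values.

$$\text{MultiHead}(Q, K, V) = \text{Concat}(\text{head}_1, \ldots, \text{head}_h)\, W^O$$

where

$$\text{head}_i = \text{Attention}(Q W_i^Q, K W_i^K, V W_i^V)$$

The projection matrices are $W_i^Q \in \mathbb{R}^{d_{\text{model}} \times d_k}$, $W_i^K \in \mathbb{R}^{d_{\text{model}} \times d_k}$, $W_i^V \in \mathbb{R}^{d_{\text{model}} \times d_v}$, and $W^O \in \mathbb{R}^{hd_v \times d_{\text{model}}}$. In the paper's implementation, $h = 8$ heads are used. For each head, $d_k = d_v = d_{\text{model}} / h = 64$. Because each head works in this reduced dimension, the total computational cost is similar to that of single-head attention with full dimensionality.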
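The dimension bookkeeping can be checked with a short calculation. The sketch below counts only the weights of the projection matrices (no bias terms, an assumption of this example) and compares them with a single head that uses the full $d_{\text{model}}$ dimension for its query, key, value, and output projections.

```python
d_model = 512
h = 8
d_k = d_v = d_model // h  # per-head dimension

# Weights in one head: W_i^Q, W_i^K (d_model x d_k) and W_i^V (d_model x d_v).
per_head = d_model * d_k + d_model * d_k + d_model * d_v
# Output projection W^O maps the concatenated heads (h * d_v) back to d_model.
output_proj = h * d_v * d_model
multi_head_params = h * per_head + output_proj

# A single full-width head: four d_model x d_model projections.
single_head_params = 4 * d_model * d_model

print(d_k, multi_head_params, single_head_params)
```

The two counts coincide, which is one way to see why splitting into heads does not increase the cost relative to full-width single-head attention.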

Applications of Attention in the Transformer

Multi-Head Attention is used in three distinct ways:

- Encoder Self-Attention: In the encoder layers, all of the queries, keys, and values ($Q$, $K$, $V$) come from the output of the previous encoder layer. Each position in the encoder can attend to all positions in the previous layer of the encoder.

- Decoder Self-Attention: In the decoder layers, $Q$, $K$, and $V$ all come from the output of the previous decoder layer. However, self-attention is restricted (masked) so that each position can only attend to preceding positions (including itself). This maintains the auto-regressive property.

- Encoder-Decoder Attention: In the third sub-layer of the decoder, $Q$ comes from the previous decoder layer, while $K$ and $V$ come from the output of the encoder stack. This allows every position in the decoder to attend over all positions in the input sequence.
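The masking used for decoder self-attention can be illustrated on a tiny score matrix. This is a hedged sketch: `causal_mask` and `masked_softmax` are names invented for the example, and the all-zero scores are placeholder values that make the effect of the mask easy to read.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, mask):
    """Set disallowed scores to -inf, then apply a row-wise softmax."""
    weights = []
    for row, allowed in zip(scores, mask):
        masked = [s if ok else float("-inf") for s, ok in zip(row, allowed)]
        m = max(masked)  # the diagonal is always allowed, so m is finite
        exps = [math.exp(s - m) for s in masked]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

scores = [[0.0] * 3 for _ in range(3)]  # placeholder scores for 3 positions
weights = masked_softmax(scores, causal_mask(3))
for row in weights:
    print(row)
```

With equal scores, position 0 puts all its weight on itself, position 1 splits its weight over positions 0 and 1, and only the last position spreads weight over the whole sequence. Encoder self-attention and encoder-decoder attention correspond to using no mask at all.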

Position-wise Feed-Forward Networks

In addition to attention sub-layers, each layer in the encoder and decoder contains a fully connected feed-forward network (FFN), applied to each position separately and identically. This consists of two linear transformations with a ReLU activation in between:

$$\text{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2$$

The dimensionality of input and output is $d_{\text{model}} = 512$, and the inner layer has dimensionality $d_{ff} = 2048$. While the linear transformations are the same across different positions, they use different parameters from layer to layer.
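A minimal sketch of the feed-forward computation for a single position is shown below. The tiny dimensions (2 in, 4 hidden, 2 out) and the hand-picked weights are assumptions chosen so the result is easy to verify by hand; with these weights the network happens to compute the element-wise absolute value of its input.

```python
def linear(x, W, b):
    """Compute xW + b for a row vector x; W has one row per input feature."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]

def ffn(x, W1, b1, W2, b2):
    """FFN(x) = max(0, x W1 + b1) W2 + b2, applied to one position."""
    hidden = [max(0.0, v) for v in linear(x, W1, b1)]  # ReLU
    return linear(hidden, W2, b2)

# Toy parameters standing in for learned weights.
W1 = [[1.0, -1.0, 0.0, 0.0],
      [0.0, 0.0, 1.0, -1.0]]
b1 = [0.0, 0.0, 0.0, 0.0]
W2 = [[1.0, 0.0],
      [1.0, 0.0],
      [0.0, 1.0],
      [0.0, 1.0]]
b2 = [0.0, 0.0]

y = ffn([3.0, -2.0], W1, b1, W2, b2)
print(y)  # [3.0, 2.0]
```

Applying the same `ffn` call to every position of a sequence, with the same parameters, is what "position-wise" means here.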

Positional Encoding

Since the model contains no recurrence or convolution, positional information is injected using positional encodings added to the input embeddings at the bottoms of the encoder and decoder stacks. The positional encodings have the same dimension $d_{\text{model}}$ as the embeddings, allowing them to be summed. The paper uses sine and cosine functions of different frequencies:

$$PE_{(pos, 2i)} = \sin\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)$$

$$PE_{(pos, 2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)$$

where $pos$ is the position and $i$ is the dimension. This allows the model to easily learn to attend by relative positions, since for any fixed offset $k$, $PE_{pos+k}$ can be represented as a linear function of $PE_{pos}$.
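The sketch below builds the sinusoidal table and then checks the relative-position property numerically. The helper `shift` is a name introduced for this example: it maps the encoding at one position to the encoding $k$ steps later using a rotation of each (sine, cosine) pair that depends only on $k$, which is one concrete form of the linear function mentioned above. An even `d_model` is assumed.

```python
import math

def positional_encoding(max_len, d_model):
    """Return a max_len x d_model table of sinusoidal positional encodings."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        # `dim` walks the even indices, so it plays the role of 2i in the formula.
        for dim in range(0, d_model, 2):
            angle = pos / (10000 ** (dim / d_model))
            pe[pos][dim] = math.sin(angle)
            pe[pos][dim + 1] = math.cos(angle)
    return pe

def shift(pe_row, k, d_model):
    """Map PE_pos to PE_{pos+k} by rotating each (sin, cos) pair by a k-dependent angle."""
    out = list(pe_row)
    for dim in range(0, d_model, 2):
        theta = k / (10000 ** (dim / d_model))
        s, c = pe_row[dim], pe_row[dim + 1]
        out[dim] = s * math.cos(theta) + c * math.sin(theta)      # sin(a + theta)
        out[dim + 1] = c * math.cos(theta) - s * math.sin(theta)  # cos(a + theta)
    return out

pe = positional_encoding(max_len=10, d_model=8)
shifted = shift(pe[2], k=3, d_model=8)
max_error = max(abs(a - b) for a, b in zip(shifted, pe[5]))
print(max_error)
```

The rotation in `shift` never looks at the absolute position, only at the offset, so the same linear map relates any pair of positions that are `k` apart.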

Training Methodology

The model was trained on the WMT 2014 English-German dataset (~4.5 million sentence pairs) and the larger WMT 2014 English-French dataset (~36 million sentence pairs).

- Optimizer: The Adam optimizer was used with $\beta_1 = 0.9$, $\beta_2 = 0.98$, and $\epsilon = 10^{-9}$.

- Learning Rate: The learning rate was varied according to the formula:

  $$\text{lrate} = d_{\text{model}}^{-0.5} \cdot \min\left(\text{step\_num}^{-0.5},\ \text{step\_num} \cdot \text{warmup\_steps}^{-1.5}\right)$$

  with $\text{warmup\_steps} = 4000$. This increases the learning rate linearly for the first `warmup_steps` training steps, and then decreases it proportionally to the inverse square root of the step number.

- Regularization: Two regularization techniques were employed:

  - Residual Dropout: Dropout ($P_{drop} = 0.1$) was applied to the output of each sub-layer, before it was added to the sub-layer input and normalized. Dropout was also applied to the sums of the embeddings and positional encodings in both the encoder and decoder stacks.

  - Label Smoothing: Label smoothing with $\epsilon_{ls} = 0.1$ was used during training. This hurts perplexity but improves accuracy and BLEU score.

- Hardware and Schedule: Training was performed on 8 NVIDIA P100 GPUs. The base model took 100,000 steps (12 hours). The larger model trained for 300,000 steps (3.5 days).
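The learning rate schedule from the list above is simple enough to write out directly. The function below is a sketch under the base-model settings ($d_{\text{model}} = 512$, 4000 warmup steps); the name `lrate` and the requirement that `step_num` start at 1 are choices made for this example.

```python
def lrate(step_num, d_model=512, warmup_steps=4000):
    """Warmup-then-decay schedule; step_num is assumed to start at 1."""
    return d_model ** -0.5 * min(step_num ** -0.5, step_num * warmup_steps ** -1.5)

for step in (1, 2000, 4000, 8000, 100000):
    print(step, lrate(step))
```

The two arguments of `min` are equal exactly at `step_num == warmup_steps`, so the rate peaks there: it grows linearly before that point and decays as the inverse square root of the step number afterwards.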

Experimental Results

The Transformer demonstrated significant improvements in both translation quality and training efficiency compared to previous recurrent and convolutional models.

- Machine Translation (WMT 2014):

  - English-to-German: Achieved a BLEU score of 28.4, outperforming the best previously reported models (including ensembles) by over 2 BLEU.

  - English-to-French: Established a new single-model state-of-the-art BLEU score of 41.8.

- Training Cost: The models trained significantly faster than alternatives. The big model, trained on 8 P100 GPUs, reached state-of-the-art BLEU scores in just 3.5 days, a fraction of the training cost reported for competitive models at the time (e.g., Google's GNMT). The paper also compared estimated total training FLOPs for the Transformer (base) against those of prior models such as SliceNet.

- Parallelization: The reliance on attention mechanisms rather than recurrence allowed for significantly more parallelization during training, as computations within a layer could largely be performed simultaneously across sequence positions.

- Generalization (English Constituency Parsing): The Transformer was also successfully applied to English constituency parsing using the WSJ dataset, achieving competitive results (F1 score of 91.3 with limited data, 92.7 with semi-supervised learning) and demonstrating its applicability beyond machine translation.

The primary claimed advantages were superior translation quality, significantly enhanced parallelizability leading to reduced training times, and strong generalization capabilities. The self-attention mechanism allows modeling of long-range dependencies more directly than RNNs, while avoiding the sequential computation bottleneck.

Conclusion

The "Attention Is All You Need" paper introduced the Transformer, an architecture that shifted sequence modeling away from recurrent networks towards pure attention mechanisms. Its design facilitated parallel computation, reducing training times while achieving superior performance on benchmark tasks like machine translation. The core components – multi-head self-attention, positional encodings, and position-wise feed-forward networks combined with residual connections and layer normalization – became foundational elements of subsequent large language models and other sequence-processing models.