Hierarchical Transformers for Long Document Classification

The paper presents a method for extending BERT (Bidirectional Encoder Representations from Transformers) to long document classification. BERT accepts at most 512 tokens per input, so it cannot directly process sequences longer than a few hundred words. The research focuses on tasks such as topic identification in spoken conversations and customer satisfaction prediction from call transcripts, where documents often exceed 5000 words.

Methodology

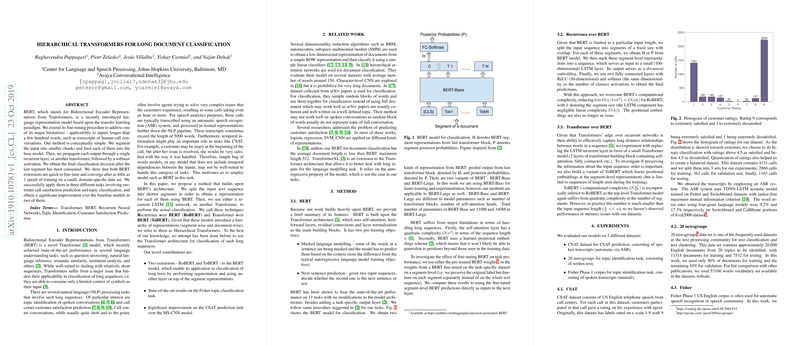

The two proposed methods, Recurrence over BERT (RoBERT) and Transformer over BERT (ToBERT), stack a hierarchical model on top of BERT to overcome this length limitation. Each long document is split into shorter segments, every segment is encoded by BERT, and the resulting segment-level representations are combined into a single document-level prediction.
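
The segmentation step can be sketched as a sliding window over the token sequence. This is a minimal illustration rather than the paper's implementation: the function name `split_into_segments`, the whitespace-style tokens, and the `size` and `shift` values are all assumptions chosen for readability. A real pipeline would apply BERT's own tokenizer and much larger windows.

```python
from typing import List, Sequence


def split_into_segments(tokens: Sequence[str], size: int, shift: int) -> List[List[str]]:
    """Split tokens into windows of `size` tokens, advancing `shift` tokens per step.

    Hypothetical helper: with shift < size, consecutive windows overlap
    by size - shift tokens. The last window may be shorter than `size`.
    """
    if size <= 0 or shift <= 0:
        raise ValueError("size and shift must be positive")
    segments: List[List[str]] = []
    start = 0
    while start < len(tokens):
        segments.append(list(tokens[start:start + size]))
        if start + size >= len(tokens):
            break  # this window already reaches the end of the document
        start += shift
    return segments


# Toy document of 10 tokens; the sizes are illustrative only.
tokens = [f"w{i}" for i in range(10)]
segments = split_into_segments(tokens, size=4, shift=2)
for segment in segments:
    print(segment)
```

Each resulting segment would then be passed through BERT separately. Choosing `shift` smaller than `size` keeps some shared context between neighbouring segments, at the cost of encoding more segments per document.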

- Recurrence over BERT (RoBERT): This technique uses a small LSTM (Long Short-Term Memory) layer to aggregate the sequence of segment-level representations obtained from BERT into a document-level representation. Because the LSTM reads the segments in order, the model can capture temporal dependencies, which matter for tasks like customer satisfaction prediction, where emotion may progress over the course of a call.

- Transformer over BERT (ToBERT): Instead of an LSTM layer, ToBERT places another transformer model on top of BERT. Self-attention lets each segment representation attend directly to every other segment, so long-range dependencies do not have to pass step by step through a recurrence. This is particularly useful when relevant information is spread across distant parts of the document.
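
The two aggregation heads can be sketched in PyTorch as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the class names, layer sizes, the use of the LSTM's final hidden state, and mean pooling over the transformer outputs are all choices made here for brevity. Random tensors stand in for the segment representations that BERT would produce, and padding of documents with different numbers of segments is not handled.

```python
import torch
from torch import nn


class RecurrentAggregator(nn.Module):
    """RoBERT-style head (sketch): an LSTM over segment vectors, then a classifier."""

    def __init__(self, seg_dim: int, hidden_dim: int, num_classes: int):
        super().__init__()
        self.lstm = nn.LSTM(seg_dim, hidden_dim, batch_first=True)
        self.classifier = nn.Linear(hidden_dim, num_classes)

    def forward(self, segments: torch.Tensor) -> torch.Tensor:
        # segments: (batch, num_segments, seg_dim)
        _, (last_hidden, _) = self.lstm(segments)
        # Assumption: the final hidden state summarizes the whole document.
        return self.classifier(last_hidden[-1])


class TransformerAggregator(nn.Module):
    """ToBERT-style head (sketch): a small transformer encoder over segment vectors."""

    def __init__(self, seg_dim: int, num_heads: int, num_layers: int, num_classes: int):
        super().__init__()
        layer = nn.TransformerEncoderLayer(d_model=seg_dim, nhead=num_heads, batch_first=True)
        self.encoder = nn.TransformerEncoder(layer, num_layers=num_layers)
        self.classifier = nn.Linear(seg_dim, num_classes)

    def forward(self, segments: torch.Tensor) -> torch.Tensor:
        encoded = self.encoder(segments)  # (batch, num_segments, seg_dim)
        # Assumption: mean pooling over segments gives the document vector.
        return self.classifier(encoded.mean(dim=1))


# Placeholder input: 2 documents, 5 segments each, 32-dimensional segment vectors.
torch.manual_seed(0)
segment_vectors = torch.randn(2, 5, 32)

robert_head = RecurrentAggregator(seg_dim=32, hidden_dim=16, num_classes=3)
tobert_head = TransformerAggregator(seg_dim=32, num_heads=4, num_layers=2, num_classes=3)

robert_logits = robert_head(segment_vectors)
tobert_logits = tobert_head(segment_vectors)
print(robert_logits.shape, tobert_logits.shape)
```

Both heads take the same input and return one vector of class logits per document, so they are interchangeable on top of the same segment encoder. The transformer head as written has no notion of segment order unless position embeddings are added to its input.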

Experimental Results

The paper evaluates these methods across three datasets: CSAT (customer satisfaction), 20 Newsgroups (topic identification in written text), and the Fisher corpus (spoken conversations). Key findings include:

- Fine-tuning BERT on each task's data improves performance over using features from pre-trained BERT without fine-tuning. The most notable result is on the Fisher corpus, where ToBERT reaches an accuracy of 95.48%, which is reported as state of the art.

- ToBERT outperforms RoBERT on most datasets, suggesting that a transformer over the segment representations handles long sequences better than an LSTM.

- Adding position embeddings has little effect overall, apart from a slight gain on CSAT. This suggests that temporal information matters most for tasks that depend on emotional or conversational progression.

- ToBERT also outperforms baseline models such as MS-CNN and SVM-MCE, indicating that the hierarchical approach copes well with long-form text.

Implications and Future Work

The paper demonstrates that hierarchical transformer approaches like RoBERT and ToBERT can effectively handle long document classification. By building on BERT’s contextual embeddings and adding a hierarchical layer to accommodate long sequences, these methods improve substantially on simpler baselines and on approaches limited to short inputs.

The research opens several directions for future work: training models end-to-end on long documents, optimizing segment size and overlap, and studying positional encoding in greater depth. The methods have practical applications in fields that need to extract insights from extensive textual data, such as automated customer service analytics and large-scale text categorization.

Overall, the paper extends transformer-based models to natural language processing tasks that involve long documents. Its hierarchical approaches offer a foundation for future work on narrowing the gap between advanced language understanding models and practical, real-world text processing.