Privacy Concerns in Pretrained Clinical Models

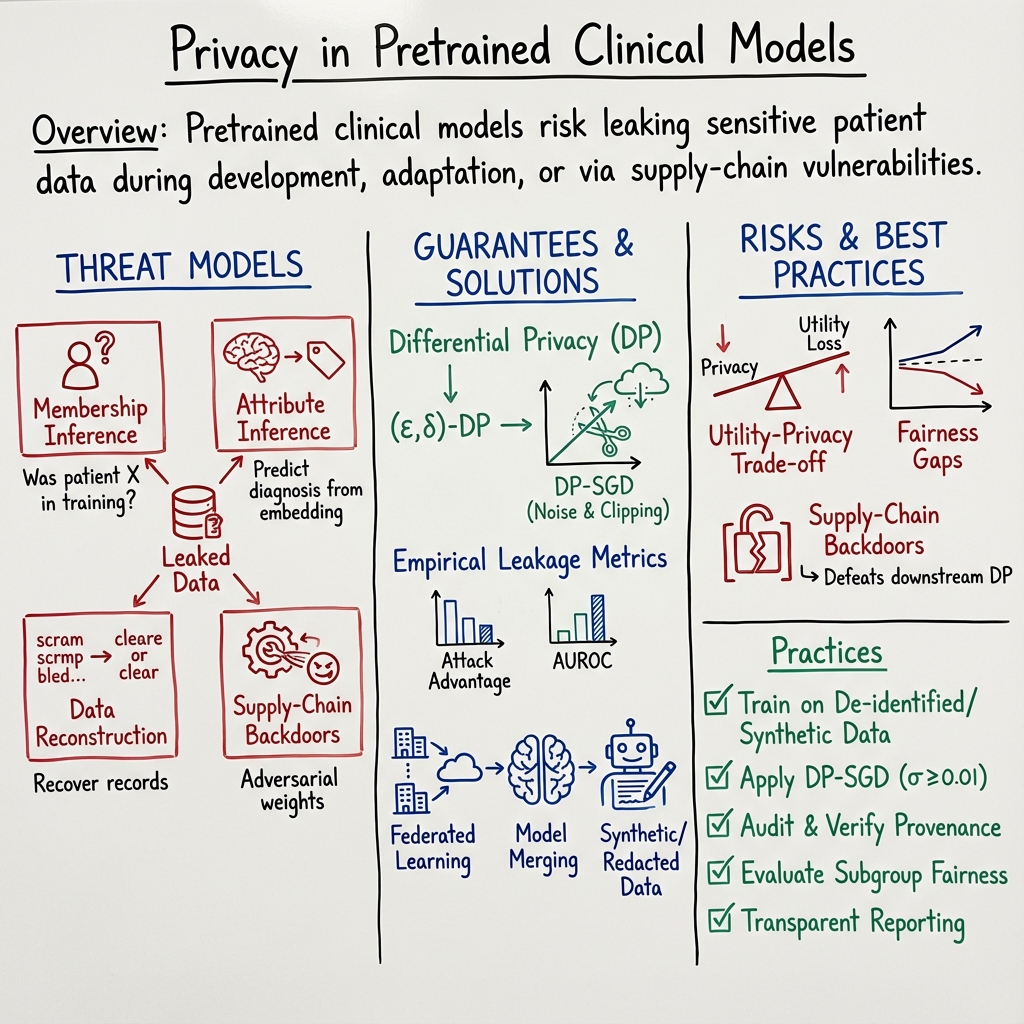

- Privacy concerns in pretrained clinical models encompass risks of data leakage, re-identification, and malicious backdoors due to model memorization of sensitive patient records.

- Mitigation strategies include applying differential privacy, federated learning, synthetic data generation, and algorithmic safeguards to balance privacy and utility.

- Empirical studies and formal metrics reveal trade-offs between model performance and data protection, highlighting challenges in multi-modal and supply-chain settings.

Privacy concerns in pretrained clinical models center on the risk that sensitive patient data incorporated during model development, adaptation, or fine-tuning may be unintentionally memorized, exposed, or compromised through statistical attacks, supply-chain vulnerabilities, or inadequate privacy guarantees. The increased scale and complexity of clinical foundation models—spanning language, multimodal, and structured EHR models—has amplified both empirical and theoretical risks around data leakage and re-identification. This article surveys the technical dimensions underpinning privacy threats, formal guarantees, evaluative frameworks, mitigation strategies, and practical limitations associated with privacy in pretrained clinical models.

1. Technical Threat Models of Privacy Leakage

Privacy attacks on pretrained clinical models fall into several formal categories: membership inference, attribute inference, data reconstruction, and supply-chain backdoors. Membership inference attacks aim to determine whether a specific patient example was present in the training set by exploiting subtle model behaviors, loss values, activations, or output probabilities (Jagannatha et al., 2021). Attribute inference attacks train downstream probes to predict sensitive attributes, such as diagnoses, from embeddings, masked-token outputs, or internal representations (Mandal et al., 1 Feb 2025). Data reconstruction attacks attempt to recover original patient records via gradient inversion, embedding inversion, or generation sampling (Mandal et al., 1 Feb 2025). Privacy backdoors represent a distinct supply-chain vector: adversarially modified pretrained weights can guarantee post hoc reconstruction of fine-tuning data, even when differential privacy is applied in the downstream pipeline (Feng et al., 2024).
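The simplest black-box membership inference attack thresholds each example's loss. The sketch below assumes the attacker sees only the probability the model assigns to each record's true label; the helper names and the threshold of 0.2 are illustrative choices, not values from the cited studies.

```python
import math
from typing import List, Sequence


def example_loss(prob_of_true_label: float) -> float:
    """Cross-entropy loss of one example: -log p(true label)."""
    # Clamp to avoid log(0) for examples the model gets confidently wrong.
    return -math.log(max(prob_of_true_label, 1e-12))


def loss_threshold_attack(losses: Sequence[float], threshold: float) -> List[bool]:
    """Predict 'member' (True) whenever an example's loss falls below the threshold.

    Rationale: models usually fit their training examples more tightly than
    unseen ones, so members tend to have lower loss.
    """
    return [loss < threshold for loss in losses]


# Usage: three records seen in training, then three held-out records.
probs = [0.95, 0.90, 0.70, 0.60, 0.30, 0.85]
guesses = loss_threshold_attack([example_loss(p) for p in probs], threshold=0.2)
# guesses == [True, True, False, False, False, True]
```

In practice the threshold is calibrated on reference data (for example, the mean training loss), and white-box variants replace the loss with richer signals such as gradients or attention patterns.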

Multi-modal clinical models (e.g., those processing speech or facial data) are exposed to additional leakage routes: speaker identity, age, and health states can be recovered from acoustic features and learned embeddings (e.g., MFCC features, self-supervised HuBERT representations, and FaceNet face embeddings), and adversarial attacks on facial keypoint models can reconstruct identifiable images from embeddings (Mandal et al., 1 Feb 2025).
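An attribute-inference probe is simply a small classifier trained on frozen embeddings. This sketch uses synthetic two-dimensional "embeddings" in which a sensitive binary attribute is deliberately encoded, and fits a logistic-regression probe with plain gradient descent; in a real audit the embeddings would come from the speech, face, or text encoder under test, and all names here are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_probe(embeddings: Sequence[Sequence[float]], labels: Sequence[int],
                lr: float = 0.5, epochs: int = 200) -> Tuple[List[float], float]:
    """Fit a logistic-regression probe with full-batch gradient descent."""
    dim, n = len(embeddings[0]), len(labels)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(embeddings, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def probe_accuracy(w: Sequence[float], b: float,
                   embeddings: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    hits = sum(int((sum(wi * xi for wi, xi in zip(w, x)) + b > 0) == bool(y))
               for x, y in zip(embeddings, labels))
    return hits / len(labels)


def synthetic_embeddings(n: int, rng: random.Random):
    """Toy 2-D 'embeddings' whose first coordinate encodes a sensitive attribute."""
    labels = [rng.randint(0, 1) for _ in range(n)]
    xs = [[(2 * y - 1) + rng.gauss(0.0, 0.3), rng.gauss(0.0, 1.0)] for y in labels]
    return xs, labels


rng = random.Random(0)
train_x, train_y = synthetic_embeddings(200, rng)
test_x, test_y = synthetic_embeddings(200, rng)
w, b = train_probe(train_x, train_y)
accuracy = probe_accuracy(w, b, test_x, test_y)  # near 1.0 means the attribute leaks
```

Probe accuracy well above the majority-class rate is the leakage signal; an encoder that hides the attribute would leave the probe near chance.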

2. Formal Privacy Guarantees and Evaluation Metrics

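Differential privacy (DP) constitutes the dominant mathematical framework for protecting individual patient records in deep clinical models (Dadsetan et al., 2024, Beaulieu-Jones et al., 2018, Baumel et al., 2024, Mandal et al., 1 Feb 2025). A randomized mechanism $\mathcal{M}$ satisfies $(\varepsilon, \delta)$-DP if, for all datasets $D$ and $D'$ that differ by the addition or removal of a single patient record and for every set of outputs $S$,

$$\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S] + \delta,$$

so the output distribution changes by at most a factor of $e^{\varepsilon}$ (up to the small additive slack $\delta$) when any one record is added or removed. In practice, DP-SGD modifies minibatch gradients by clipping each per-example gradient to $L_2$ norm at most $C$ and adding isotropic Gaussian noise $\mathcal{N}(0, \sigma^2 C^2 \mathbf{I})$ to their sum (Dadsetan et al., 2024).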
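The clip-then-noise update can be sketched in a few lines. This is a minimal illustration under stated assumptions: per-example gradients are already available as plain lists, the function names are hypothetical, and privacy accounting (tracking the cumulative $\varepsilon$ over many steps) and Poisson batch sampling are omitted. Libraries such as Opacus implement the full procedure.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def clip_to_norm(grad: Sequence[float], clip_norm: float) -> Vector:
    """Scale a per-example gradient so its L2 norm is at most clip_norm (C)."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params: Sequence[float], per_example_grads: Sequence[Sequence[float]],
                clip_norm: float, noise_multiplier: float, lr: float,
                rng: random.Random) -> Vector:
    """One DP-SGD update: clip, sum, add N(0, sigma^2 C^2 I), average, descend."""
    summed = [0.0] * len(params)
    for grad in per_example_grads:
        for i, g in enumerate(clip_to_norm(grad, clip_norm)):
            summed[i] += g
    # Noise standard deviation is sigma * C, i.e. variance sigma^2 C^2 per coordinate.
    noisy = [s + rng.gauss(0.0, noise_multiplier * clip_norm) for s in summed]
    batch_size = len(per_example_grads)
    return [p - lr * g / batch_size for p, g in zip(params, noisy)]


# Usage: with the noise switched off, only the clipping effect remains.
rng = random.Random(0)
no_noise = dp_sgd_step([0.0, 0.0], [[3.0, 4.0], [0.3, 0.4]], clip_norm=1.0,
                       noise_multiplier=0.0, lr=1.0, rng=rng)
# The first gradient is scaled from norm 5 to norm 1, so no_noise is about [-0.45, -0.6].
```

Clipping bounds how much any single patient can move the sum, and the noise scale is tied to that bound, which is why both $C$ and $\sigma$ enter the privacy guarantee.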

Empirical leakage is measured variously as membership-inference attack advantage (commonly defined as $\mathrm{Adv} = \mathrm{TPR} - \mathrm{FPR}$), AUROC/AUPRC in membership inference sweeps, or precision/recall in data reconstruction benchmarks (Jagannatha et al., 2021, Tonekaboni et al., 14 Oct 2025). For generative LLMs, leakage audits use quantitative metrics such as the train-versus-reference perplexity gap and the test-versus-train perplexity difference (Moreno et al., 24 Apr 2025).
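These audit metrics are straightforward to compute once attack outputs are available. The sketch assumes boolean attack predictions, real-valued membership scores (higher means "more likely a member"), and natural-log token probabilities; `perplexity_gap` is a hypothetical formulation and may not match the exact definitions used by Moreno et al.

```python
import math
from typing import Sequence


def membership_advantage(predicted: Sequence[bool], is_member: Sequence[bool]) -> float:
    """Attack advantage = TPR - FPR (0 means no better than random guessing)."""
    n_members = sum(1 for m in is_member if m)
    n_non = len(is_member) - n_members
    tp = sum(1 for p, m in zip(predicted, is_member) if p and m)
    fp = sum(1 for p, m in zip(predicted, is_member) if p and not m)
    return tp / n_members - fp / n_non


def auroc(scores: Sequence[float], is_member: Sequence[bool]) -> float:
    """AUROC as the chance a random member outscores a random non-member (ties count 1/2)."""
    pos = [s for s, m in zip(scores, is_member) if m]
    neg = [s for s, m in zip(scores, is_member) if not m]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def perplexity(token_log_probs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood per token."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def perplexity_gap(heldout_log_probs: Sequence[float],
                   train_log_probs: Sequence[float]) -> float:
    """Hypothetical audit statistic: held-out perplexity minus training perplexity.

    A large positive gap suggests the model fits its training text unusually well.
    """
    return perplexity(heldout_log_probs) - perplexity(train_log_probs)
```

An AUROC of 0.5 or an advantage of 0 corresponds to an attacker who does no better than chance.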

Trade-off curves (utility vs. privacy) chart the decline in model performance (e.g., micro-F1, AUROC) as $\varepsilon$ shrinks, reflecting the adverse impact of increased noise or clipping on predictive accuracy and subgroup fairness (Dadsetan et al., 2024, Beaulieu-Jones et al., 2018). For multi-modal and synthetic-data models, privacy is also measured via reproduction rate, $\varepsilon$-identifiability, and exposure scores.
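Why utility falls as $\varepsilon$ shrinks can be seen from the classic one-shot Gaussian mechanism calibration in Dwork and Roth's textbook, $\sigma = \Delta \sqrt{2 \ln(1.25/\delta)} / \varepsilon$ for $0 < \varepsilon < 1$. This is a simplified stand-in: DP-SGD composes many noisy steps and uses tighter accountants, so the numbers below are illustrative only.

```python
import math


def gaussian_noise_scale(epsilon: float, delta: float, sensitivity: float = 1.0) -> float:
    """Noise std for the classic Gaussian mechanism.

    sigma = sensitivity * sqrt(2 ln(1.25 / delta)) / epsilon, valid for 0 < epsilon < 1.
    """
    if not 0.0 < epsilon < 1.0:
        raise ValueError("the classic bound only covers 0 < epsilon < 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


# Halving epsilon doubles the noise: the utility cost of a tighter budget.
for eps in (0.9, 0.5, 0.25, 0.1):
    print(f"epsilon={eps:<4}  sigma={gaussian_noise_scale(eps, delta=1e-5):.1f}")
```

Because $\sigma$ scales as $1/\varepsilon$, each halving of the budget doubles the noise, which is the mechanism behind the utility losses on the trade-off curves.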

3. Algorithmic Approaches to Privacy Preservation

Diverse algorithmic strategies are deployed to mitigate privacy concerns. DP-SGD is standard for language and tabular models, offering provable bounds on sample-level and group-level leakage when calibrated correctly (Jagannatha et al., 2021, Beaulieu-Jones et al., 2018, Baumel et al., 2024). Recent enhancements address its cost and granularity: ghost clipping speeds up private training by computing per-example gradient norms without materializing every per-example gradient, and group-wise clipping bounds the norm of each parameter partition (e.g., each layer) separately (Dadsetan et al., 2024).
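Group-wise clipping can be sketched as clipping each parameter group to its own threshold $C_g$; the full per-example gradient then has norm at most $\sqrt{\sum_g C_g^2}$, which is the sensitivity the noise must be calibrated to. The layer names and thresholds below are hypothetical, and ghost clipping (a computational shortcut for obtaining the norms) is not shown.

```python
import math
from typing import Dict, List, Sequence


def l2(v: Sequence[float]) -> float:
    return math.sqrt(sum(x * x for x in v))


def group_clip(per_example_grad: Dict[str, Sequence[float]],
               thresholds: Dict[str, float]) -> Dict[str, List[float]]:
    """Clip each parameter group (here: each layer) to its own L2 threshold C_g."""
    clipped = {}
    for name, grad in per_example_grad.items():
        norm, c = l2(grad), thresholds[name]
        scale = min(1.0, c / norm) if norm > 0 else 1.0
        clipped[name] = [g * scale for g in grad]
    return clipped


def overall_sensitivity(thresholds: Dict[str, float]) -> float:
    """After group clipping, the full per-example gradient has norm <= sqrt(sum_g C_g^2)."""
    return math.sqrt(sum(c * c for c in thresholds.values()))


# Usage with two hypothetical layers of one example's gradient.
thresholds = {"encoder": 1.0, "classifier": 2.0}
clipped = group_clip({"encoder": [3.0, 4.0], "classifier": [0.6, 0.8]}, thresholds)
# encoder is scaled from norm 5 to norm 1; classifier (norm 1 <= 2) is unchanged
```

Per-group thresholds let small, sensitive layers (such as a classification head) keep a tighter bound without forcing the same threshold on large encoder blocks.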

Federated learning frameworks permit multi-institution collaboration by localizing data and exchanging only model updates (weights, gradients), effectively eliminating centralized data exposure (Sharma et al., 2019, Beaulieu-Jones et al., 2018). Cyclical weight transfer extends this by cycling global models through sites sequentially, with each site applying DP-SGD locally and passing only noised updates to other participants (Beaulieu-Jones et al., 2018).
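The two collaboration patterns differ mainly in how weights move between sites. The sketch below contrasts sample-size-weighted federated averaging with sequential cyclical transfer; `local_update` is a hypothetical caller-supplied routine (in the cited cyclical setup it would run DP-SGD on the site's private data), and the toy "sites" are placeholders for real institutional datasets.

```python
from typing import Callable, List, Sequence

Model = List[float]


def fed_avg(site_models: Sequence[Sequence[float]], site_sizes: Sequence[int]) -> Model:
    """Average locally trained parameter vectors, weighted by each site's sample count."""
    total = sum(site_sizes)
    return [sum(model[i] * n for model, n in zip(site_models, site_sizes)) / total
            for i in range(len(site_models[0]))]


def cyclical_weight_transfer(init: Sequence[float], sites: Sequence[object],
                             local_update: Callable[[Model, object], Model],
                             cycles: int) -> Model:
    """Pass one model through the sites in turn; each site trains on its own data.

    Only weights leave a site; raw records never do.
    """
    model = list(init)
    for _ in range(cycles):
        for site in sites:
            model = local_update(model, site)
    return model


# Usage with toy sites whose local update moves weights halfway toward a local target.
averaged = fed_avg([[1.0, 2.0], [3.0, 4.0]], site_sizes=[1, 3])  # [2.5, 3.5]
cycled = cyclical_weight_transfer(
    [0.0], sites=[[2.0], [4.0]],
    local_update=lambda m, target: [w + 0.5 * (t - w) for w, t in zip(m, target)],
    cycles=1)  # [2.5]
```

Federated averaging needs a coordinator that aggregates updates from all sites each round, whereas cyclical transfer needs only point-to-point handoffs, at the cost of the model drifting toward whichever site trained it last.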

Model merging (PatientDx) bypasses fine-tuning altogether by fusing the parameters of multiple base models (e.g., a generalist model and a numerical-reasoning model) through arithmetic or spherical linear interpolation (SLERP). Merge hyperparameters are selected by scoring on held-out data, but the weights are never updated with gradients computed on private patient records (Moreno et al., 24 Apr 2025). This approach achieves significant AUROC improvements and near-zero leakage on privacy audits when compared to fine-tuned baselines.
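The two interpolation rules can be written for a single flattened weight tensor as follows. This is a generic sketch of linear and spherical interpolation, not the exact PatientDx merge recipe; merging tools apply such a rule tensor by tensor, and the "generalist" and "numerical" vectors are hypothetical stand-ins.

```python
import math
from typing import List, Sequence


def lerp(a: Sequence[float], b: Sequence[float], t: float) -> List[float]:
    """Arithmetic (linear) interpolation between two flattened weight tensors."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def slerp(a: Sequence[float], b: Sequence[float], t: float, eps: float = 1e-6) -> List[float]:
    """Spherical linear interpolation along the arc between a and b."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    if math.sin(omega) < eps:  # (nearly) parallel vectors: fall back to lerp
        return lerp(a, b, t)
    w_a = math.sin((1.0 - t) * omega) / math.sin(omega)
    w_b = math.sin(t * omega) / math.sin(omega)
    return [w_a * x + w_b * y for x, y in zip(a, b)]


# Usage: merge a hypothetical "generalist" and "numerical" layer at t = 0.5.
generalist, numerical = [1.0, 0.0], [0.0, 1.0]
print(lerp(generalist, numerical, 0.5))   # [0.5, 0.5]: norm shrinks to about 0.707
print(slerp(generalist, numerical, 0.5))  # about [0.707, 0.707]: norm stays 1
```

The interpolation weight `t` is the kind of hyperparameter that would be chosen by held-out scoring; patient data only ranks candidate merges and never produces a gradient.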

Redaction and pseudo-data substitution reduce surface-level risk by replacing PHI with masked segments or synthetic surrogate tokens during pretraining. Models pretrained on pseudo data exhibit negligible privacy leakage, provided the pseudo database itself is correctly curated (Landes et al., 15 Jun 2025).
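The difference between redaction and pseudo-data can be sketched with pattern-based substitution. The regular expressions and surrogate values below are hypothetical and deliberately incomplete; production de-identification relies on trained PHI taggers and much broader coverage, and this is not the pipeline used by Landes et al.

```python
import random
import re
from typing import Dict, List

# Hypothetical, deliberately incomplete PHI patterns for illustration only;
# real de-identification must also cover names, addresses, ages over 89, etc.
PHI_PATTERNS = {
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d{6,10}\b"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}


def mask(text: str) -> str:
    """Redaction: replace each PHI span with a category placeholder such as [DATE]."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def pseudonymize(text: str, surrogates: Dict[str, List[str]], rng: random.Random) -> str:
    """Pseudo-data: replace each PHI span with a realistic surrogate of the same type.

    The surrogate lists act as the 'pseudo database'; they must be audited so
    that they contain no real identifiers.
    """
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(lambda _match, choices=surrogates[label]: rng.choice(choices), text)
    return text


note = "Seen 03/14/2021, MRN: 1234567, call 555-123-4567."
masked = mask(note)  # "Seen [DATE], [MRN], call [PHONE]."
```

Masked text removes identifiers entirely but also removes their realistic surface form, while pseudonymized text keeps natural-looking context; that trade-off is what the de-identification results in Section 4 turn on.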

Synthetic data generation under DP-SGD allows the creation of privacy-guaranteed corpora for further domain adaptation and downstream tasks such as named-entity recognition (NER), maintaining F1 close to that obtained with real corpora while bounding per-sample exposure (Baumel et al., 2024).

4. Empirical Risks, Utility Trade-offs, and Fairness Implications

Empirical studies establish that standard fine-tuning leaks up to 7% under sample-level membership attacks for GPT-2 and up to 3–4% for masked language models (BERT, DistilBERT) (Jagannatha et al., 2021). White-box attacks (attention or gradient inspection) consistently outperform black-box loss thresholding, and group-level leakage (aggregated over a patient's admissions or over rare-disease profiles) increases with group size and disease rarity (Tonekaboni et al., 14 Oct 2025, Jagannatha et al., 2021). Models trained under DP-SGD with a sufficiently small privacy budget $\varepsilon$ reliably suppress leakage below 1% with only marginal F1 or AUROC loss; more noise strengthens privacy further at the cost of predictive performance (Jagannatha et al., 2021, Dadsetan et al., 2024, Beaulieu-Jones et al., 2018).
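Group-level membership inference can be sketched by pooling per-record evidence for each patient. The scoring rule here (negative mean loss across a patient's records) is an assumption chosen for simplicity, not the statistic used in the cited studies, and the patient IDs are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def patient_level_scores(record_losses: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Aggregate per-record losses into one membership score per patient.

    Higher score = more member-like. Averaging over several admissions reduces
    the noise in the per-record signal, which plausibly makes patients with
    many records easier to flag.
    """
    by_patient: Dict[str, List[float]] = defaultdict(list)
    for patient_id, loss in record_losses:
        by_patient[patient_id].append(loss)
    return {pid: -mean(losses) for pid, losses in by_patient.items()}


scores = patient_level_scores([("p1", 0.1), ("p1", 0.3), ("p2", 0.9)])
# p1 (two low-loss admissions) scores higher than p2
```

The resulting per-patient scores can be fed to the same thresholding or AUROC analysis used for individual records.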

In medical coding (ICD) tasks, enforcing a strict privacy budget can reduce micro-F1 by 40–60 points and exacerbate fairness gaps between demographic groups: gender recall gaps more than triple, and ethnic recall gaps can widen under privacy constraints (Dadsetan et al., 2024). Models also exhibit increased privacy risk for rare codes, elderly patients, and long-tail subpopulations, necessitating subgroup-aware privacy audits and adaptive budget calibration (Tonekaboni et al., 14 Oct 2025).
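A subgroup fairness audit can start from the recall gap between demographic groups, computed once for a non-private model and once for its DP counterpart. The sketch treats a single binary label for brevity; for multi-label ICD coding it would be applied per code or micro-averaged across codes. Function names and the toy data are hypothetical.

```python
import math
from typing import Dict, Hashable, Sequence, Tuple


def recall(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of true positives that the model recovers (NaN if no positives)."""
    positives = sum(1 for t in y_true if t)
    hits = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    return hits / positives if positives else math.nan


def recall_gap(y_true: Sequence[int], y_pred: Sequence[int],
               groups: Sequence[Hashable]) -> Tuple[float, Dict[Hashable, float]]:
    """Largest recall difference between groups, plus the per-group recalls."""
    per_group = {}
    for g in set(groups):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        per_group[g] = recall([y_true[i] for i in idx], [y_pred[i] for i in idx])
    defined = [r for r in per_group.values() if not math.isnan(r)]
    return max(defined) - min(defined), per_group


gap, per_group = recall_gap([1, 1, 1, 1, 0, 1, 1, 0],
                            [1, 1, 1, 0, 0, 1, 0, 0],
                            ["F", "F", "F", "F", "F", "M", "M", "M"])
# per_group == {"F": 0.75, "M": 0.5}, gap == 0.25
```

Comparing `gap` before and after DP training makes a widening disparity visible even when aggregate micro-F1 looks acceptable.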

For de-identification tasks, training on purely masked data guarantees zero PHI leakage but may weaken entity-boundary detection, because the model never sees realistic PHI in context. Pseudo data restores realistic context, but the surrogate database must be rigorously audited so that it does not itself introduce identifying information (Landes et al., 15 Jun 2025).

5. Supply-Chain Attacks: Privacy Backdoors in Pretrained Models

Recent research demonstrates that privacy backdoors adversarially injected into pretrained models constitute a critical and overlooked supply-chain vulnerability, one that renders DP-SGD applied only during downstream fine-tuning ineffective (Feng et al., 2024). By modifying hidden units (“data traps”) in the initial weights $\theta_0$, attackers guarantee reconstruction of individual fine-tuning examples from observable changes in the final weights $\theta_T$, either via white-box inspection (directly reading the gradient-induced shift $\theta_T - \theta_0$) or via black-box input queries.

Mathematically, the backdoor ensures that fine-tuning steps realize maximal per-example gradient contribution at designated trap neurons, saturating theoretical DP bounds and allowing exact recovery of captured training examples. Empirical evaluations confirm near-perfect recovery rates for diverse architectures (MLP, ViT, BERT), illustrating that DP applied solely at fine-tuning does not defend against malicious initializations.
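The core arithmetic behind a data trap can be illustrated with one ReLU unit $h = \max(0, w \cdot x + b)$. If exactly one example in a step activates it, the gradient with respect to $w$ is that example's input times the same factor that multiplies the gradient with respect to $b$, so dividing the weight shift by the bias shift recovers the input. This single-step, single-neuron sketch assumes a constant upstream gradient and a hand-picked threshold; it only shows the principle, and the published construction adds machinery that is not reproduced here.

```python
from typing import List, Optional, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def sgd_step_through_trap(w: Sequence[float], b: float, batch: Sequence[Sequence[float]],
                          lr: float, upstream_grad: float) -> Tuple[List[float], float]:
    """One SGD step on a single ReLU unit h = max(0, w.x + b) inside a larger model.

    upstream_grad stands for dLoss/dh, assumed constant here for simplicity.
    For an active example: dLoss/dw = upstream_grad * x and dLoss/db = upstream_grad.
    """
    grad_w, grad_b = [0.0] * len(w), 0.0
    for x in batch:
        if dot(w, x) + b > 0:  # the trap fires only for inputs past its threshold
            grad_w = [gw + upstream_grad * xi for gw, xi in zip(grad_w, x)]
            grad_b += upstream_grad
    n = len(batch)
    return [wi - lr * g / n for wi, g in zip(w, grad_w)], b - lr * grad_b / n


def reconstruct(w0: Sequence[float], b0: float,
                w1: Sequence[float], b1: float) -> Optional[List[float]]:
    """If exactly one example fired the trap, (w1 - w0) / (b1 - b0) equals that input."""
    delta_b = b1 - b0
    if delta_b == 0:
        return None  # the trap never fired
    return [(a1 - a0) / delta_b for a0, a1 in zip(w0, w1)]


# Hypothetical trap: fires only when the first feature exceeds 5.
w0, b0 = [1.0, 0.0, 0.0], -5.0
batch = [[1.0, 2.0, 3.0], [7.0, 0.25, -1.5], [2.0, 2.0, 2.0]]
w1, b1 = sgd_step_through_trap(w0, b0, batch, lr=0.1, upstream_grad=1.0)
recovered = reconstruct(w0, b0, w1, b1)  # approximately [7.0, 0.25, -1.5]
```

If two examples fired the same trap, the ratio would return a weighted mix of them, which is why trap designs aim for each unit to capture a single example.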

Defenses demand full-chain DP—protecting both pretraining and downstream adaptation—and cryptographic integrity verification of foundation models. Architectural auditing (random re-initialization, layer sanitization), supply-chain provenance, and post hoc backdoor detection tools (Neural Cleanse, ABS) represent interim mitigations, but remain open research topics.
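The most basic integrity check compares a downloaded checkpoint against a digest published through a trusted channel. The sketch uses only the Python standard library; the file name and payload are placeholders. A matching hash proves only that the file is the one the publisher released: it cannot detect a backdoor the publisher itself inserted, so it complements, rather than replaces, signed provenance and auditing.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path
from typing import Tuple, Union


def sha256_file(path: Union[str, Path], chunk_size: int = 1 << 20) -> str:
    """Stream a (possibly multi-GB) weights file through SHA-256."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_weights(path: Union[str, Path], expected_sha256: str) -> bool:
    """True only if the file matches the digest published through a trusted channel."""
    return hmac.compare_digest(sha256_file(path), expected_sha256.strip().lower())


def demo() -> Tuple[bool, bool]:
    # Stand-in bytes; a real check would hash the downloaded checkpoint.
    payload = b"placeholder model weights"
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "model.bin"
        path.write_bytes(payload)
        good = verify_weights(path, hashlib.sha256(payload).hexdigest())
        tampered = verify_weights(path, "0" * 64)
    return good, tampered
```

Binding the digest to the publisher's identity with a digital signature is what turns this check into provenance verification.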

6. Mitigation Strategies and Practical Recommendations

Effective privacy preservation in pretrained clinical models combines algorithmic, architectural, and procedural safeguards. Model developers should:

- Train only on fully de-identified or masked data, or use synthetic data generation with DP guarantees (Landes et al., 15 Jun 2025, Baumel et al., 2024).

- Apply DP-SGD with empirically validated noise levels (noise multiplier $\sigma$), and confirm through membership-inference audits that empirical leakage remains low (Jagannatha et al., 2021).

- Employ federated or distributed learning so that raw data remains behind institutional firewalls (Sharma et al., 2019, Beaulieu-Jones et al., 2018).

- Audit pseudo databases and redaction pipelines to avoid inadvertent identifier inclusion (Landes et al., 15 Jun 2025).

- Systematically evaluate models under both sample- and group-level membership inference, adversarial prompting, and subgroup perturbation tests (Tonekaboni et al., 14 Oct 2025).

- For high-risk domains (mental health, multi-modal), augment training with autoencoder-based obfuscation, federated local-DP, and encrypted computation, while comprehensively measuring privacy–utility–fairness trade-offs (Mandal et al., 1 Feb 2025).

- Verify and sign the provenance of all model weights before fine-tuning; cryptographically guarantee model integrity to avoid privacy backdoors (Feng et al., 2024).

- Maintain transparency via “data cards” reporting provenance, privacy budgets, attack evaluations, subgroup audit results, and known risks (Lehman et al., 2021).
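A machine-readable card makes the recommended disclosures easy to publish alongside a checkpoint. The schema below is a hypothetical sketch whose field names mirror the items listed above (provenance, privacy budget, attack evaluations, subgroup audits, known risks); it is not a published data-card standard.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class PrivacyDataCard:
    """Hypothetical schema; field names are illustrative, not a published standard."""
    model_name: str
    weight_provenance: str                 # where the pretrained weights came from
    weights_sha256: str                    # digest checked before fine-tuning
    privacy_budget: Dict[str, float]       # e.g. {"epsilon": ..., "delta": ...}
    attack_evaluations: Dict[str, float]   # e.g. membership-inference AUROC
    subgroup_audits: Dict[str, float]      # e.g. recall gap per demographic axis
    known_risks: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)
```

Keeping the card as structured data rather than free text lets audits and release tooling check that every field is filled before a model is shared.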

7. Challenges, Open Issues, and Research Directions

Despite advances, privacy in pretrained clinical models faces unresolved challenges. DP-utility trade-offs are context- and architecture-dependent; rare codes and underrepresented subgroups suffer amplified degradation or residual exposure. Existing supply-chain mitigations for privacy backdoors remain incomplete. For multi-modal clinical models, cross-domain leakage vectors (audio→text, video→attribute) and robust anonymization methods lack theoretical guarantees and standardized metrics (Mandal et al., 1 Feb 2025). Transparent, reproducible, and subgroup-focused evaluation regimes are increasingly essential.

Open areas for further research include formally robust multimodal anonymization, adaptive privacy budget targeting, real-time subgroup audit tools, scalable federated protocols for non-IID distributions, and full-chain cryptographic model integrity verification. The development of universally accepted privacy benchmarking suites for clinical AI remains an ongoing need.

In sum, privacy in pretrained clinical models requires combining formal privacy accounting, empirical risk audits, architectural vigilance, supply-chain provenance, and context-specific algorithmic adaptation. Together, these efforts aim to enable the safe, equitable, and regulation-compliant deployment of advanced clinical AI systems across diverse healthcare environments.