Physics-Informed Neural Operators

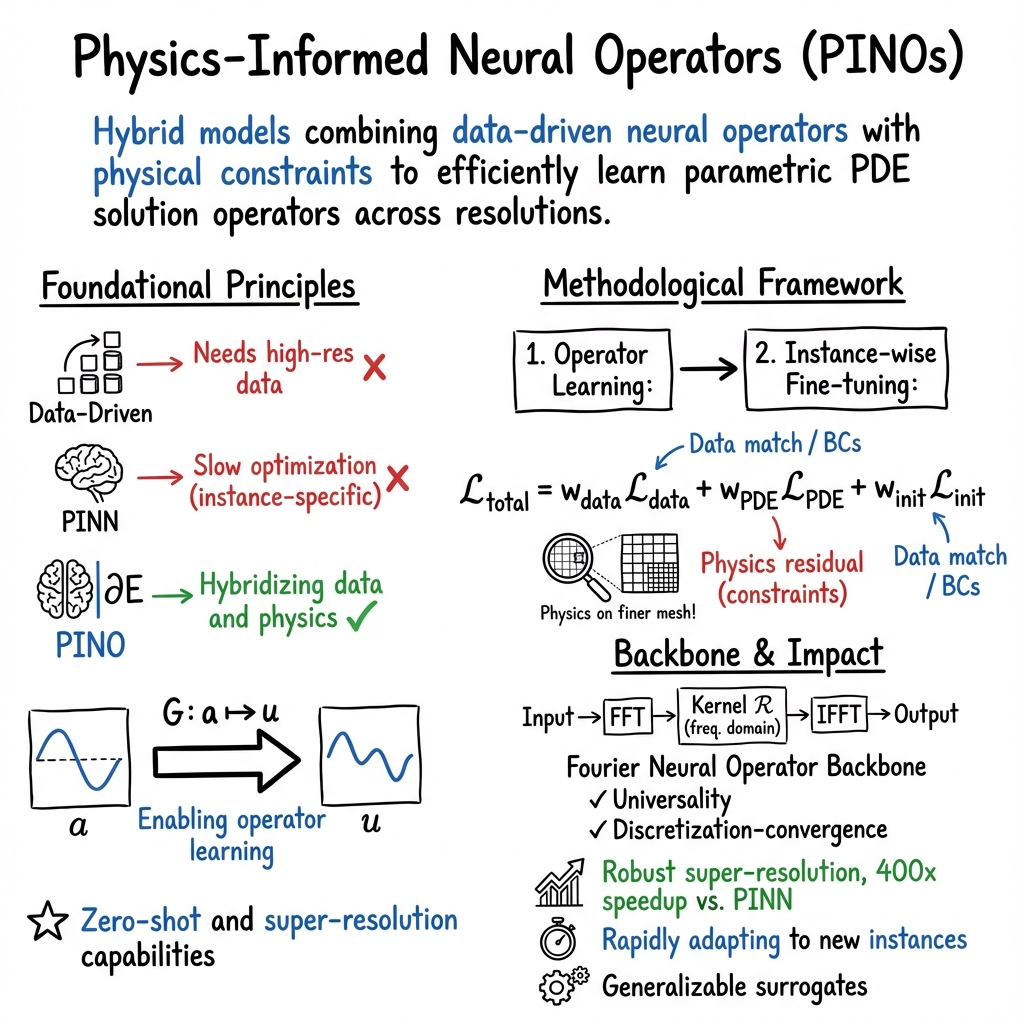

- Physics-Informed Neural Operators are hybrid models that merge data-driven operator learning with strict PDE constraints to robustly map input fields to solution functions.

- They utilize multi-resolution physics loss and Fourier Neural Operator backbones to achieve zero-shot super resolution and high accuracy in modeling parametric PDEs.

- PINOs accelerate simulation tasks by rapidly adapting to new conditions, making them ideal for real-time surrogate modeling in fluid dynamics, inverse problems, and multiphysics applications.

Physics-Informed Neural Operators (PINOs) are a class of hybrid machine learning models that unite data-driven neural operator frameworks with physical constraints, aiming to efficiently learn the solution operators of parametric partial differential equations (PDEs) across diverse families and resolutions. Unlike traditional neural networks or even standard physics-informed neural networks (PINNs), PINOs are designed to map between infinite-dimensional function spaces, such as mapping input fields (e.g., initial or boundary conditions) to solution functions, thus yielding generalizable and discretization-invariant surrogate models for complex, multi-scale systems.

1. Foundational Principles and Motivation

PINOs address key drawbacks inherent in traditional mesh-based solvers, purely data-driven operator learning, and pointwise PINNs. Pure data-driven neural operators require extensive high-resolution data and risk poor extrapolation, while classical PINNs are prone to optimization challenges—especially in multi-scale or long-time dynamics—since they are trained one instance at a time. PINOs resolve these issues by:

- Hybridizing data and physics: Incorporating both available input–output data (even at coarse resolution) and strong enforcement of the governing PDE as a physics-informed loss, often at a much finer resolution than the data alone would support.

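- Enabling operator learning: Training a neural operator (often based on architectures such as the Fourier Neural Operator, FNO) to approximate the solution operator $\mathcal{G}^\dagger: \mathcal{A} \to \mathcal{U}$, with $a \in \mathcal{A}$ denoting input fields and $u = \mathcal{G}^\dagger(a) \in \mathcal{U}$ the corresponding PDE solution.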

- Zero-shot and super-resolution capabilities: Imposing PDE constraints at resolutions higher than that of the training data allows accurate predictions beyond the training grid (zero-shot super-resolution) and more robust extrapolation to new parameter regimes (Li et al., 2021).

This design enables PINOs to serve as fast, generalizable surrogates, particularly potent in parameterized physics simulations, multi-scale modeling, and inverse problem contexts.

2. Methodological Framework

The standard PINO workflow consists of two core phases:

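- Operator Learning: Train a neural operator $\mathcal{G}_\theta$ by minimizing a composite loss:

  $$\mathcal{L}(\theta) = \lambda_{\text{data}}\,\mathcal{L}_{\text{data}} + \lambda_{\text{pde}}\,\mathcal{L}_{\text{pde}} + \lambda_{\text{ic/bc}}\,\mathcal{L}_{\text{ic/bc}}$$

  where the $\lambda$ coefficients weight the three terms:

  - $\mathcal{L}_{\text{data}}$: Supervised loss on available input–output pairs, typically in the $L^2$ norm.

  - $\mathcal{L}_{\text{pde}}$: Physics loss penalizing the PDE residual, often integrated over a finer mesh.

  - $\mathcal{L}_{\text{ic/bc}}$: Initialization or boundary condition loss (especially relevant for time-dependent or coupled PDEs).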

- Instance-wise Fine-tuning: For a particular PDE instance (e.g., a new initial condition), use the trained operator as initialization, then further optimize only the PDE-constrained loss, leading to lower instance-specific error.
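The fine-tuning phase can be illustrated on a deliberately small problem. The sketch below is not taken from any PINO codebase: it assumes the periodic Poisson problem $-u'' = a$ on $[0, 2\pi)$ and a hypothetical toy "operator" that multiplies each Fourier mode of $a$ by a learnable real number `theta`, so the exact operator is `theta = 1 / k**2`. The names `physics_loss`, `physics_grad`, and `fine_tune`, the step size, and the 20% perturbation standing in for an imperfectly pretrained operator are all illustrative assumptions.

```python
import numpy as np

def physics_loss(theta, a_hat, k):
    """Squared residual of -u'' = a in Fourier space, with u_hat = theta * a_hat."""
    residual_hat = (k**2 * theta - 1.0) * a_hat
    return float(np.sum(np.abs(residual_hat) ** 2))

def physics_grad(theta, a_hat, k):
    """Analytic gradient of physics_loss with respect to the real multipliers theta."""
    return 2.0 * k**2 * (k**2 * theta - 1.0) * np.abs(a_hat) ** 2

def fine_tune(theta_pretrained, a_hat, k, lr=0.15, steps=200):
    """Phase 2: gradient descent on the physics loss only, for one instance."""
    theta = theta_pretrained.copy()
    for _ in range(steps):
        theta -= lr * physics_grad(theta, a_hat, k)
    return theta

# One new instance: forcing a(x) sampled on a periodic grid over [0, 2*pi).
n = 64
x = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
a = np.sin(x) + 0.5 * np.sin(3.0 * x)

k = np.arange(1, 5, dtype=float)               # retained wavenumbers 1..4
a_hat = np.fft.rfft(a, norm="forward")[1:5]    # matching Fourier coefficients

# Stand-in for phase 1: multipliers 20% away from the exact values 1 / k**2.
theta_pretrained = 1.2 / k**2

loss_before = physics_loss(theta_pretrained, a_hat, k)
theta_tuned = fine_tune(theta_pretrained, a_hat, k)
loss_after = physics_loss(theta_tuned, a_hat, k)
```

No labeled solution is used during fine-tuning; only the residual of the governing equation drives the update. Modes that are absent from this particular forcing (here $k = 2$ and $k = 4$) receive no gradient and keep their pretrained values, which is one way to see that the fine-tuned parameters are specific to the instance.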

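The neural operator architecture is typically written as:

$$\mathcal{G}_\theta = \mathcal{Q} \circ (W_L + \mathcal{K}_L) \circ \cdots \circ \sigma(W_1 + \mathcal{K}_1) \circ \mathcal{P}$$

where:

- $\mathcal{P}$, $\mathcal{Q}$: Pointwise lifting and projection operators (dense layers).

- $W_l$: Pointwise linear operators.

- $\mathcal{K}_l$: Learned integral (kernel/convolution) operators, most commonly implemented via FFT-based convolution as in FNO.

- $\sigma$: Nonlinear activation (e.g., ReLU or GeLU).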
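The layered composition described above (lifting, then layers combining a pointwise linear map with an integral operator, then projection) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the integral operator here is a hypothetical constant-kernel operator (`mean_kernel`) chosen for brevity rather than the FFT-based kernel of FNO, the activation is omitted after the last layer (one common convention), and the weights are random rather than trained.

```python
import numpy as np

def gelu(x):
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def mean_kernel(weight):
    """Integral operator with a constant kernel: (K v)(x) = weight @ mean_y v(y)."""
    def apply(v):                      # v has shape (n_points, width)
        return np.broadcast_to(v.mean(axis=0) @ weight.T, v.shape)
    return apply

def neural_operator(a, P, layers, Q, sigma=gelu):
    """Lift, apply the (W + K) layers with activations in between, then project."""
    v = a @ P.T                        # pointwise lifting to `width` channels
    for i, (W, K) in enumerate(layers):
        v = v @ W.T + K(v)             # pointwise linear part plus integral part
        if i < len(layers) - 1:        # no activation after the last layer
            v = sigma(v)
    return v @ Q.T                     # pointwise projection to output channels

def make_params(width=8, depth=2, seed=0):
    """Random (untrained) parameters, for illustration only."""
    rng = np.random.default_rng(seed)
    scale = 1.0 / np.sqrt(width)
    P = rng.normal(size=(width, 1))
    Q = rng.normal(size=(1, width)) * scale
    layers = [(rng.normal(size=(width, width)) * scale,
               mean_kernel(rng.normal(size=(width, width)) * scale))
              for _ in range(depth)]
    return P, layers, Q

def sample_input(n):
    """Input field a(x) = sin(x) on an n-point periodic grid, shape (n, 1)."""
    x = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    return np.sin(x)[:, None]

P, layers, Q = make_params()
u_coarse = neural_operator(sample_input(32), P, layers, Q)
u_fine = neural_operator(sample_input(64), P, layers, Q)
```

Because every component acts either pointwise or through an integral over the domain, the same parameters accept inputs sampled on 32 or 64 points, and the two outputs agree closely at the shared grid points.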

A distinguishing feature is multi-resolution enforcement: physics losses can be evaluated at much higher resolution than the available training data, providing robust regularization and enabling super-resolution inference (Li et al., 2021, Rosofsky et al., 2022).
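A small numerical experiment suggests why evaluating the physics loss on a finer grid acts as a regularizer. The sketch assumes the periodic problem $-u'' = a$ with $a(x) = \sin x$ (exact solution $u(x) = \sin x$) and a hypothetical `candidate` prediction carrying a high-frequency error that vanishes at every point of a 16-point data grid. The function names and the choice of PDE are illustrative assumptions; in a real PINO, the candidate would be the operator output queried at the finer resolution.

```python
import numpy as np

def grid(n):
    """Uniform periodic grid with n points on [0, 2*pi)."""
    return np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)

def second_derivative(u):
    """Spectral second derivative of a periodic signal on a uniform grid."""
    n = u.size
    k = np.fft.rfftfreq(n, d=1.0 / n)          # integer wavenumbers 0..n/2
    return np.fft.irfft(-(k**2) * np.fft.rfft(u), n=n)

def data_loss(u_pred, u_true):
    """Mean squared error against labeled solution values."""
    return float(np.mean((u_pred - u_true) ** 2))

def physics_loss(u_pred, a):
    """Mean squared residual of -u'' = a."""
    return float(np.mean((-second_derivative(u_pred) - a) ** 2))

def forcing(x):
    return np.sin(x)

def exact_solution(x):
    return np.sin(x)

def candidate(x):
    """Prediction with an error term that is invisible on a 16-point grid."""
    return np.sin(x) + 0.05 * np.sin(16.0 * x)

x_coarse, x_fine = grid(16), grid(128)

coarse_data_loss = data_loss(candidate(x_coarse), exact_solution(x_coarse))
coarse_physics_loss = physics_loss(candidate(x_coarse), forcing(x_coarse))
fine_physics_loss = physics_loss(candidate(x_fine), forcing(x_fine))
```

On the coarse grid both losses are zero up to rounding, so the spurious oscillation goes undetected. On the 128-point grid the residual is $12.8\,\sin(16x)$, giving a physics loss of about 81.92, which penalizes the error even though no fine-resolution labels are available.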

3. Fourier Neural Operator Backbone and Generalizations

The Fourier Neural Operator (FNO) serves as the backbone for many PINO schemes. In FNO, kernel convolutions are performed efficiently in the frequency domain:

$$(\mathcal{K} v)(x) = \mathcal{F}^{-1}\big(R \cdot \mathcal{F}(v)\big)(x)$$

where $\mathcal{F}$ denotes the Fourier transform and $R$ is a learned multiplication operator in Fourier space. This formulation provides two critical theoretical guarantees:

- Universality: FNOs are universal approximators for continuous operators on function spaces.

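- Discretization-convergence: As the mesh is refined, the outputs of the discretized FNO converge to those of a single continuum operator, so the same trained weights can be applied at different resolutions, provided the PDE is posed with boundary conditions compatible with the Fourier representation.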
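The frequency-domain convolution and its behavior across resolutions can be checked with a short NumPy sketch. It assumes a one-dimensional periodic signal, a single channel, and a hypothetical function `spectral_conv_1d` that keeps only the lowest Fourier modes; the complex weights are random stand-ins for learned parameters. The `norm="forward"` option is used so that Fourier coefficients do not depend on the number of grid points.

```python
import numpy as np

def spectral_conv_1d(v, weights):
    """Multiply the lowest Fourier modes of v by weights, then transform back."""
    n = v.size
    modes = weights.size                       # number of retained modes
    v_hat = np.fft.rfft(v, norm="forward")     # resolution-independent coefficients
    out_hat = np.zeros_like(v_hat)
    out_hat[:modes] = weights * v_hat[:modes]  # higher modes are truncated to zero
    return np.fft.irfft(out_hat, n=n, norm="forward")

def signal(n):
    """Band-limited test input sampled on an n-point periodic grid."""
    x = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    return np.sin(x) + 0.5 * np.cos(3.0 * x)

rng = np.random.default_rng(0)
weights = rng.normal(size=8) + 1j * rng.normal(size=8)   # stand-in for learned R

out_coarse = spectral_conv_1d(signal(64), weights)
out_fine = spectral_conv_1d(signal(128), weights)

# Every second point of the fine grid coincides with a coarse grid point.
max_gap = float(np.max(np.abs(out_fine[::2] - out_coarse)))
```

For this band-limited input, `max_gap` is at the level of floating-point rounding: the same weights define the same function regardless of the sampling grid, which is the property that zero-shot super-resolution relies on.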

Extensions to the FNO family include wavelet neural operators (WNOs), which offer localized representation for multi-scale spatial features (N et al., 2023), and domain-specific PINOs for magnetohydrodynamics (MHD), integral equations, or high-dimensional reliability analysis (see, e.g., Rosofsky et al., 2023, Aghaei et al., 2024, Navaneeth et al., 2024).

4. Applications, Performance, and Empirical Results

PINOs have demonstrated strong empirical performance and practical utility across a wide range of PDE problems:

| Domain | Problem Class | Reported Benefit | Reference |
|---|---|---|---|
| Fluid Dynamics | Burgers, Navier–Stokes, Darcy | <1% relative error, robust super-resolution, 400x speedup vs. PINN | (Li et al., 2021) |
| Shallow Water Systems | 2D coupled PDEs | Accurate field prediction, efficient multi-scale modeling | (Rosofsky et al., 2022) |
| Inverse Problems | Coefficient recovery | 3000x faster than MCMC, PDE-constrained physicality | (Li et al., 2021) |
| Magnetohydrodynamics | 2D incompressible MHD | Prediction errors <10% in laminar settings; large-scale speedup | (Rosofsky et al., 2023) |
| Chaotic Systems | Long-term statistics | 120x faster than FRS, ~5–10% error vs. >180% for closure models | (Wang et al., 2024) |

PINOs have proven especially adept at:

- Learning dynamics from few/sparse data points, outperforming purely data-driven operators in generalization and physical validity.

- Retaining accuracy in settings with high-frequency/chaotic content, where PINNs or closure models either fail or require excessive data.

- Rapidly adapting to new instances with minimal fine-tuning, enabling real-time surrogate inference in engineering applications.

5. Extensions and Architectures Beyond Vanilla PINO

Recent works extend the basic PINO framework in several directions:

- Symmetry-Augmented Training: Incorporating generalized (evolutionary) Lie symmetries in the loss function yields enhanced optimization signals and improved data efficiency. This can alleviate the “no training signal” problem found with conventional symmetry loss augmentation (Wang et al., 1 Feb 2025).

- Self-Improvement and No-Label Learning: Iterative self-training with pseudo-labels closes the accuracy gap between PINOs trained with data and those relying solely on physics loss, leading to significant improvements in both accuracy and training time (Majumdar et al., 2023).

- Multifidelity and Domain Decomposition: Domain and fidelity decomposition (e.g., stacking FBPINN and multifidelity operator networks) allow PINOs to better capture multi-scale dynamics by isolating local features and correcting coarse approximations (Heinlein et al., 2024).

- Operator Surrogates for Integral Terms: Hybrid PINO/PINN frameworks (e.g., opPINN) combine pre-trained operator surrogates for expensive kernels (such as collision operators) with meshfree PINN solvers, increasing efficiency for integro-differential PDEs (Lee et al., 2022).

- Memory and Generalization Efficiency: Layered hypernetwork designs and frequency-domain reduction (e.g., LFR-PINO) drastically cut parameter counts at comparable accuracy, enabling universal pre-trained PDE solvers with optional fine-tuning (Wang et al., 21 Jun 2025).

6. Implementation Considerations, Software, and Future Directions

Careful implementation is required to realize the potential of PINOs:

- Training benefits from multi-resolution enforcement of physics loss, high-frequency spectral regularization, and instance-wise fine-tuning.

- Software supporting PINO research frequently builds on performant machine learning frameworks (notably PyTorch) and makes use of FFT and wavelet libraries for spectral operations (Rosofsky et al., 2022, Rosofsky et al., 2023).

- Open-source tools and tutorials, along with interactive websites, have been provided to enable reproducibility and promote broader adoption (Rosofsky et al., 2022).

Challenges and ongoing research themes include:

- Scalability to complex geometries and high dimensions: While current FNO-based PINOs rely on FFTs (favoring periodic or structured domains), extensions incorporating adaptivity, generalized integral operators, or domain decompositions are active exploration areas.

- Optimization and transfer learning: Adaptive loss-balancing, advanced optimizer selection, and leveraging pretrained operators for rapid transfer across parameter regimes are promising future strategies.

- Theoretical foundations: Continued work on discretization convergence, representation equivalence, and the connection to operator generalization in function space (Li et al., 2021).

- Hybrid PINO/PINN architectures: Combining the strengths of function-space parameterization with fine-grained physics constraints and inverse problem capabilities.

7. Impact and Significance

PINOs represent a flexible, data- and computation-efficient solution class for learning and deploying parametric PDE solution operators. Their ability to incorporate physics at high resolution, generalize across parameter regimes, and rapidly adapt to new problem instances has made them attractive for a spectrum of challenging use cases, including, but not limited to:

- Real-time surrogate modeling in fluid dynamics, multiphysics, and materials design.

- High-throughput inverse modeling and property inference without retraining.

- Efficient uncertainty quantification, reliability analysis, and design optimization under physics constraints.

The continuing evolution of PINOs—through architectural innovations, theoretical advances, and convergence with operator-theoretic machine learning—positions them as a central technology in the deployment of AI-driven scientific computing and digital twins for complex, multi-scale, and nonlinear systems.