Object-Centric Learning

- Object-centric learning is a paradigm that represents complex scenes as discrete slots encoding individual object properties.

- It employs slot attention with iterative competitive assignment to achieve high segmentation accuracy and robust out-of-distribution generalization.

- Recent advancements extend this approach to video, reinforcement learning, and 3D modeling, enhancing scene abstraction and downstream applications.

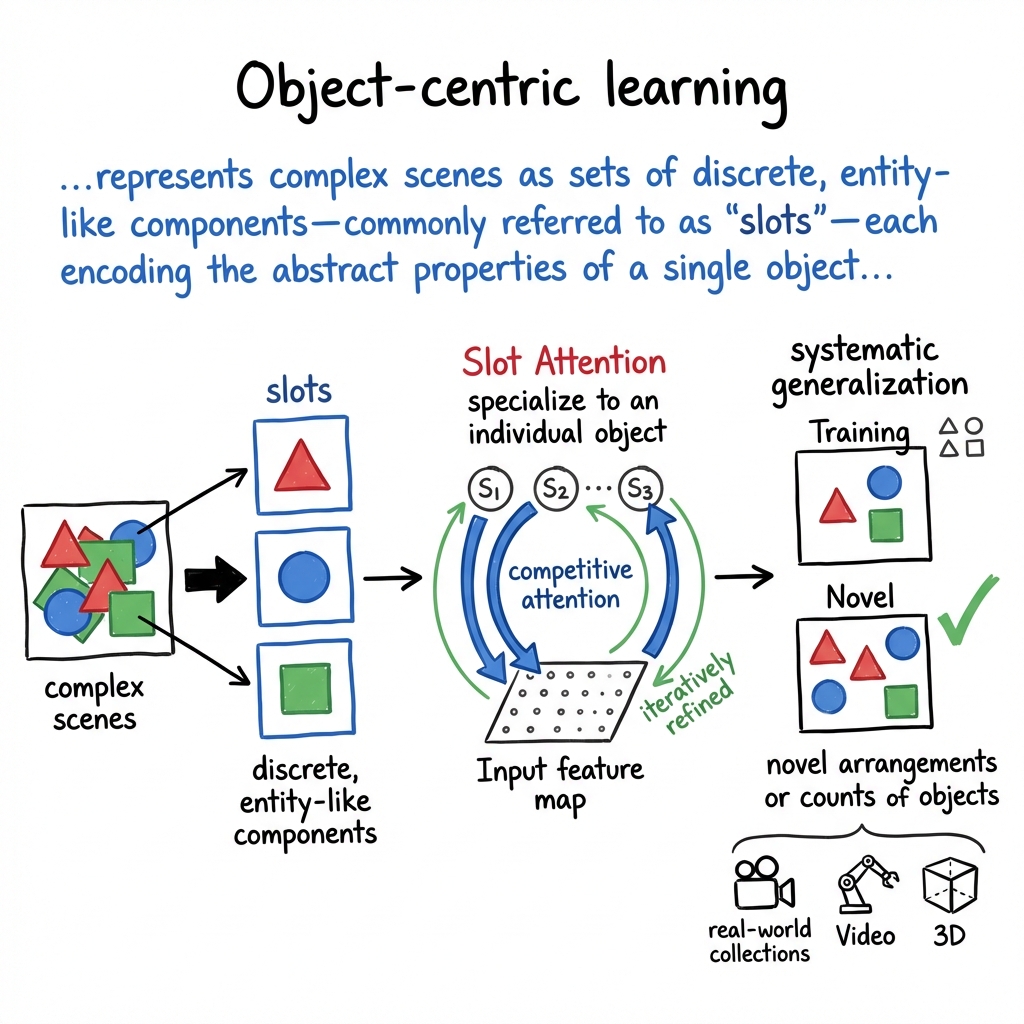

Object-centric learning is a paradigm in machine learning that seeks to represent complex scenes as sets of discrete, entity-like components—commonly referred to as "slots"—each encoding the abstract properties of a single object in an unsupervised, compositional manner. This approach aligns with the natural compositionality of visual environments, where scenes are structured as arrangements of distinct objects that can move, interact, and combine in novel ways. The object-centric framework underpins systematic generalization, efficient abstraction, compositional reasoning, and robust downstream perception, supporting applications ranging from unsupervised discovery to reinforcement learning, video understanding, and semantic reasoning.

1. Principles of Object-Centric Representation

Object-centric representation departs from conventional distributed scene embeddings by explicitly factoring the scene into entity-wise abstractions. Each object-centric "slot" is designed to bind to and encapsulate the appearance, spatial extent, and latent properties of a single object or entity. This compositional abstraction supports consistent generalization across novel arrangements or counts of objects, as evidenced by improved performance on out-of-distribution configurations and downstream reasoning tasks.

For example, Slot Attention (Locatello et al., 2020) achieves this by introducing a fixed set of latent slots, each of which is iteratively refined via competitive attention so that it specializes to an individual object (Section 2). Compositionality in such representations allows systematic recombination: known object slots can be rearranged and manipulated to explain novel scenes without retraining.

2. Slot Attention and Iterative Competitive Assignment

The Slot Attention architecture (Locatello et al., 2020) exemplifies the canonical object-centric pipeline. Given an input feature map flattened as $N$ tokens $x_1, \dots, x_N$, $K$ learnable slots $s_1, \dots, s_K$ are initialized from a Gaussian prior and refined over $T$ attention iterations. Each slot attends to the inputs with softmax-normalized competitive assignment (normalized over slots):

$$\mathrm{attn}_{i,j} = \frac{\exp(M_{i,j})}{\sum_{l=1}^{K} \exp(M_{i,l})}, \qquad M_{i,j} = \frac{1}{\sqrt{D}}\, k(x_i)^\top q(s_j),$$

where $k$ and $q$ are linear projections into a shared $D$-dimensional space; $\mathrm{attn}_{i,j}$ expresses the degree to which slot $j$ "explains" input $i$. Slot updates are computed as weighted sums of value projections $v(x_i)$, normalized per slot so that each update is a weighted mean, followed by a recurrent (GRU) and feedforward update. By design, this process is invariant to permutations of the inputs and equivariant to permutations of the slots.
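The competitive assignment described above can be illustrated with a minimal, dependency-free sketch. It is not the reference implementation: it assumes identity query/key/value projections, replaces the GRU and feedforward update with a direct weighted mean, and fixes the Gaussian prior to zero mean and unit variance. The function names (`slot_attention_step`, `run_slot_attention`) are hypothetical.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def slot_attention_step(inputs, slots, eps=1e-8):
    """One simplified iteration: inputs compete over slots, then slots average.

    Assumption: learned q/k/v projections are identities and the GRU/MLP
    update is replaced by taking the weighted mean directly.
    """
    dim = len(slots[0])
    scale = 1.0 / math.sqrt(dim)
    # attn[i][j]: softmax over slots j for each input i, so every row sums to 1.
    attn = [softmax([scale * dot(x, s) for s in slots]) for x in inputs]
    new_slots = []
    for j in range(len(slots)):
        weights = [attn[i][j] + eps for i in range(len(inputs))]
        total = sum(weights)
        # Weighted mean of the inputs, normalized per slot.
        new_slots.append([
            sum(w * x[d] for w, x in zip(weights, inputs)) / total
            for d in range(dim)
        ])
    return new_slots, attn


def run_slot_attention(inputs, num_slots, num_iters=3, seed=0):
    """Sample slots from a Gaussian prior and refine them for num_iters steps."""
    rng = random.Random(seed)
    dim = len(inputs[0])
    slots = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_slots)]
    attn = []
    for _ in range(num_iters):
        slots, attn = slot_attention_step(inputs, slots)
    return slots, attn
```

Because `num_slots` is only an argument to the sampling step and not tied to any learned parameter, the same routine can be called with a different slot count at inference time. Reordering `inputs` leaves the resulting slots unchanged up to floating-point rounding, since each update is a sum over inputs.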

Emergence of object-centricity occurs without explicit supervision: training end-to-end for scene reconstruction leads each slot to specialize to distinct objects, demonstrably segmenting and reconstructing entity-level components on CLEVR, Multi-dSprites, and Tetrominoes benchmarks. Notably, increasing the number of slots at test time enables generalization to images with more objects than seen during training, reaffirming the compositional principle.

3. Compositionality, Theoretical Guarantees, and Alternative Objectives

Auto-encoding objectives provide only weak compositional bias: mere reconstruction can allow slots to focus on spatial regions or combine parts of multiple objects (Jung et al., 2024). Explicitly regularizing compositionality, for example by enforcing that mixed slots from two images yield a coherent (high-likelihood) composite (the "compositionality objective"), aligns representations more closely with true object identities. Score-distillation or diffusion-based priors can serve as compositional validators of the generated scene (Jung et al., 2024).
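The slot-mixing idea behind such a compositionality objective can be sketched as follows. This is an illustration under stated assumptions, not the procedure of Jung et al. (2024): the per-slot coin flip is an assumed mixing strategy, and `decode` and `negative_log_prior` are hypothetical callables standing in for the slot decoder and a generative prior such as a diffusion model.

```python
import random


def mix_slots(slots_a, slots_b, rng=None):
    """Build a composite slot set by taking each slot from image A or image B."""
    if len(slots_a) != len(slots_b):
        raise ValueError("both images must use the same number of slots")
    rng = rng or random.Random()
    mixed, sources = [], []
    for slot_a, slot_b in zip(slots_a, slots_b):
        take_a = rng.random() < 0.5  # assumed strategy: independent coin flip
        mixed.append(list(slot_a if take_a else slot_b))
        sources.append("a" if take_a else "b")
    return mixed, sources


def compositional_loss(mixed_slots, decode, negative_log_prior):
    """Score how plausible the scene decoded from the mixed slots is.

    Both callables are placeholders: `decode` maps slots to an image-like
    object, and `negative_log_prior` returns a scalar that is lower for
    more plausible images.
    """
    return negative_log_prior(decode(mixed_slots))
```

Minimizing this score with respect to the encoder pushes slots toward units that remain valid when recombined, which plain reconstruction does not require.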

Theoretical foundations have been developed to explain when unsupervised object-centric representations can be identified (Brady et al., 2023). Under broad generative assumptions—compositionality (each pixel influenced by one slot) and irreducibility (each slot cannot be split into independent parts)—it is possible, in principle, to recover ground-truth object slots up to permutation and slot-wise invertible mapping. These identifiability conditions are predictive in practice: unsupervised models with low reconstruction loss and low compositional contrast indeed yield better slot-object alignment.
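The compositionality condition can be made concrete through the compositional contrast. The expression below is a sketch of the definition as recalled from Brady et al. (2023); the notation is an assumption chosen here, with $f$ the decoder mapping latent slots $z = (z_1, \dots, z_K)$ to $N$ pixels and $f_n$ its $n$-th output.

```latex
C_{\mathrm{comp}}(f, z) = \sum_{n=1}^{N} \sum_{k=1}^{K} \sum_{j=k+1}^{K}
  \left\lVert \frac{\partial f_n}{\partial z_k}(z) \right\rVert
  \left\lVert \frac{\partial f_n}{\partial z_j}(z) \right\rVert
```

Each term is a product of gradient norms for two different slots at the same pixel, so the sum is non-negative and vanishes exactly when every pixel has a nonzero gradient with respect to at most one slot, which is the "each pixel influenced by one slot" condition stated above.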

4. Methodological Innovations and Scaling to Real Data

The slot paradigm has evolved to overcome scaling and generalization barriers. Notable innovations include:

- Feature-based objectives: Replacing pixel reconstruction with similarity to frozen self-supervised features (e.g., DINO ViT) enables unsupervised segmentation on complex, real-world collections such as COCO and VOC (Seitzer et al., 2022). The DINOSAUR framework reconstructs patchwise DINO embeddings via slot attention and an MLP/Transformer decoder.

- Alternative supervision: Cyclic walk approaches (Wang et al., 2023) eschew explicit decoders, imposing part–whole consistency by enforcing feature–slot cyclic transitions, thus enabling efficient, memory-light learning on natural images.

- Reverse hierarchy guidance: Augmenting standard slot auto-encoding with top-down, slot-guided feature regularization improves the detection of small or rare objects and reduces intra-object feature variance, as shown in RHGNet (Zou et al., 2024).

- Disentangled slot attention: Separating intrinsic (identity, shape) and extrinsic (pose, scale) properties within slots allows for scene-invariant global prototypes and explicit manipulations (generation, retrieval, editing) (Chen et al., 2024).

- Grounded priors and dictionaries: Learning object-type-specific priors or canonical codebooks (CoSA-GSD) encourages slots to bind to stable, type-invariant entities (Kori et al., 2023).

- Diffusion decoders: Leveraging latent diffusion models (LSD) as slot-conditioned generators significantly improves compositional generation and segmentation under complex scene statistics (Jiang et al., 2023).
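The feature-based objective from the first item in this list can be sketched in a few lines. The sketch assumes a mixture-style decoder in which every slot predicts a feature vector and a mask logit for each patch; the data layout and function names are hypothetical, and the frozen target features would come from a self-supervised encoder in practice.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def combine_slot_predictions(slot_features, slot_logits):
    """Blend per-slot patch predictions using softmax-over-slots masks.

    slot_features[k][p] is the feature vector slot k predicts for patch p;
    slot_logits[k][p] is the matching scalar mask logit.
    """
    num_slots = len(slot_features)
    num_patches = len(slot_features[0])
    dim = len(slot_features[0][0])
    recon, masks = [], []
    for p in range(num_patches):
        alpha = softmax([slot_logits[k][p] for k in range(num_slots)])
        masks.append(alpha)  # masks[p][k]: share of patch p claimed by slot k
        recon.append([
            sum(alpha[k] * slot_features[k][p][d] for k in range(num_slots))
            for d in range(dim)
        ])
    return recon, masks


def feature_reconstruction_loss(recon, target):
    """Mean squared error between reconstructed and frozen target features."""
    count = len(target) * len(target[0])
    return sum(
        (r - t) ** 2
        for rec_vec, tgt_vec in zip(recon, target)
        for r, t in zip(rec_vec, tgt_vec)
    ) / count
```

Taking the argmax over `masks[p]` assigns each patch to a slot, which is how a segmentation can be read off a decoder of this kind without any pixel-level reconstruction.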

Scalable application to real-world domains is further enabled by zero-shot transfer benchmarks; models pre-trained on diverse, natural images generalize more robustly to new datasets (Didolkar et al., 2024), with fine-tuning of self-supervised encoders yielding performance gains across synthetic and real scenes.

5. Quantitative Performance and Generalization

Empirical results show that object-centric models equipped with slot attention and its successors achieve high segmentation accuracy (foreground ARI of 95% on CLEVR and 90% on Multi-dSprites), systematic generalization to more objects than were present during training, and competitive performance in downstream property prediction on CLEVR10. In real-world settings, feature-reconstruction-based slot models have been evaluated on COCO (Seitzer et al., 2022), and further improvements are achieved via fine-tuned encoders (Didolkar et al., 2024).
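Foreground ARI, the segmentation metric quoted above, is the adjusted Rand index computed only over pixels whose ground-truth label is not background. A minimal sketch follows, assuming flat lists of integer labels per pixel and that background is encoded as label `0`; both conventions are assumptions made for the example.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(true_labels, pred_labels):
    """Adjusted Rand index between two flat label assignments."""
    n = len(true_labels)
    if n != len(pred_labels):
        raise ValueError("label lists must have the same length")
    total_pairs = comb(n, 2)
    if total_pairs == 0:
        return 1.0
    # Count co-clustered pairs in the contingency table and in each marginal.
    sum_pairs = sum(comb(c, 2) for c in Counter(zip(true_labels, pred_labels)).values())
    sum_true = sum(comb(c, 2) for c in Counter(true_labels).values())
    sum_pred = sum(comb(c, 2) for c in Counter(pred_labels).values())
    expected = sum_true * sum_pred / total_pairs
    max_index = 0.5 * (sum_true + sum_pred)
    if max_index == expected:
        return 1.0  # degenerate case: both partitions are trivial
    return (sum_pairs - expected) / (max_index - expected)


def foreground_ari(true_labels, pred_labels, background=0):
    """ARI restricted to pixels whose ground-truth label is not background."""
    keep = [i for i, t in enumerate(true_labels) if t != background]
    return adjusted_rand_index(
        [true_labels[i] for i in keep],
        [pred_labels[i] for i in keep],
    )
```

The score is invariant to how cluster ids are numbered, so predicted slot indices never need to be matched to ground-truth object ids. Because background pixels are dropped, a model is not penalized under this metric for how it splits or assigns the background.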

Efficiency considerations favor decoder-free or feature-based approaches such as cyclic walks (Wang et al., 2023), which reduce training time and memory use. Slot-based models also compare well with earlier iterative methods on cost: for example, Slot Attention trains on CLEVR6 in 24 hours versus 7 days for IODINE/MONet.

Robustness to distribution shift is generally high for object-centric methods when shifts affect object properties; however, unstructured or global changes (cropping, occlusion, background alteration) can still present challenges (Dittadi et al., 2021). Controlled studies confirm that slot separation acts locally: OOD perturbations to one object have limited effect on other slots and object-level inference.

6. Extensions: Video, Reinforcement Learning, Multimodal, and 3D

Object-centric learning has been extended to video, reinforcement learning, multimodal, and 3D domains:

- Video and temporal grouping: Recurrent slot architectures (VideoSAUR (Zadaianchuk et al., 2023)) integrate a temporal feature similarity loss to bias grouping toward rigid, co-moving entities, achieving state-of-the-art segmentation on real and synthetic video datasets.

- Reinforcement learning: Object-centric world models and modular slot-based observations enable rapid skill acquisition, transfer, and compositional goal specification (SMORL (Zadaianchuk et al., 2020), OC-STORM (Zhang et al., 2025)), with marked gains in sample efficiency and policy robustness in visually complex domains.

- Language grounding: Integration with neural-symbolic question answering exploits slot representations for referring-expression comprehension and visual QA, outperforming vision-only or slot-only models (Wang et al., 2020).

- 3D object-centric NeRFs: Online, variational inference pipelines (SOOC3D (Wang et al., 2023)) can recover view-invariant per-object radiance fields, scale to large scenes via cognitive maps, and provide a foundation for robotic scene understanding and downstream physical reasoning.

7. Empirical and Conceptual Progress, Remaining Challenges, and Directions

Recent advances have addressed key limitations of unsupervised discovery and generalization. The integration of foundation segmentation models (Segment Anything, HQES), feature-based reconstruction, and compositional regularization enables training-free or training-light object-wise decomposition at scale (Rubinstein et al., 2025). On many benchmarks, segmentation-based pipelines now match or surpass traditional slot-based methods, especially in zero-shot, out-of-distribution regimes.

Open challenges remain:

- Instance counting / slot adaptivity: Most approaches require a fixed, pre-defined number of slots; dynamic or hierarchical slot allocation remains an active area.

- Foreground selection and interpretation: Robustly identifying which segmented regions correspond to true objects (vs. artifacts or partial segments) is nontrivial; mask selection bottlenecks performance in end-to-end applications.

- Downstream task alignment: Evaluation and training objectives are still often discovery- or segmentation-centric. There is a community shift toward benchmarks and objectives based on downstream goals (robust classification, relational reasoning, causal inference).

- Compositional semantics and global prototypes: Learning scene-invariant, reusable object prototypes that disentangle intrinsic and extrinsic properties is an emerging area, with recent progress in disentangled slot models (Chen et al., 2024).

The broader implication is a move from studying object-centric learning as an unsupervised discovery challenge toward its foundational role in scalable, structured perception and reasoning across modalities and domains. Future directions emphasize multimodal entity perception, foundation world modeling, dynamic scene understanding, and integration with foundation models for robust and interpretable machine perception.