MS-ASL: ASL Gesture Recognition Dataset

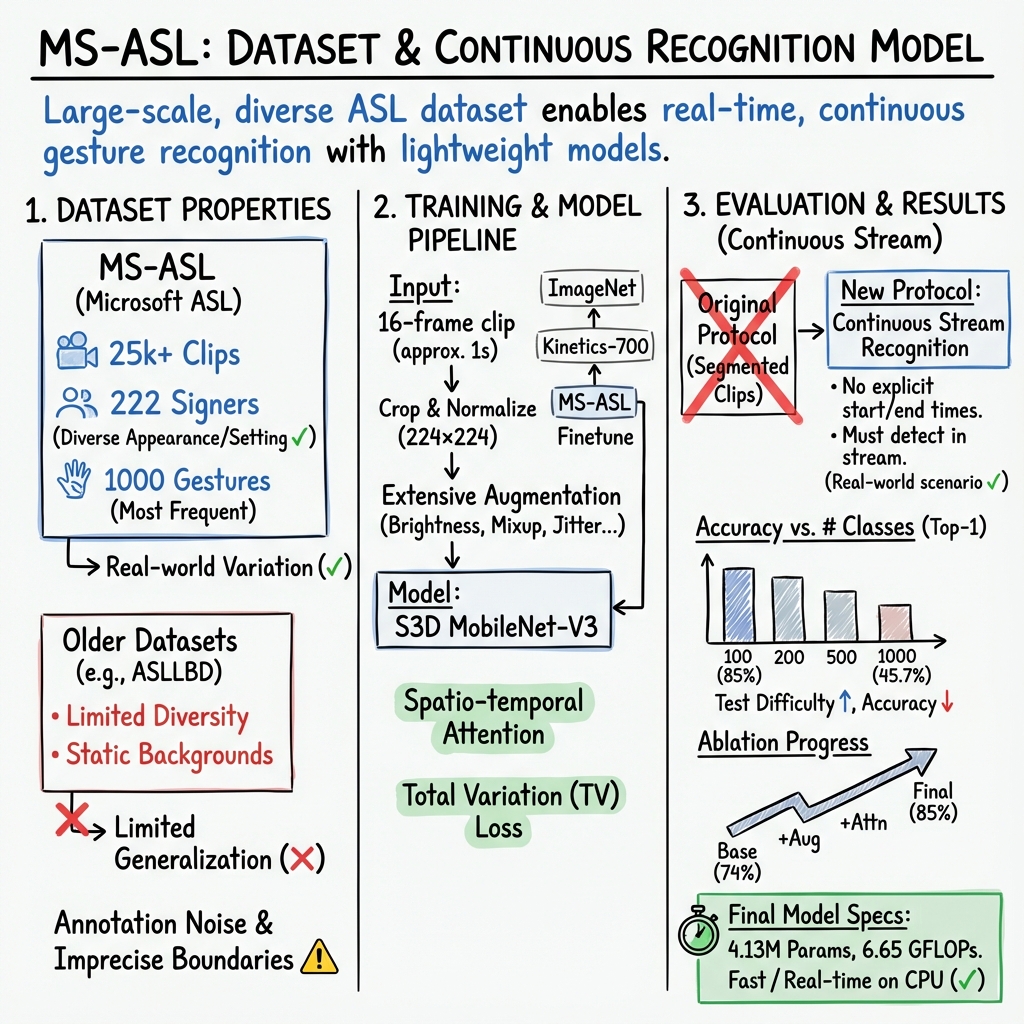

- MS-ASL is a large-scale, multi-signer dataset containing over 25,000 video clips of the 1,000 most frequent ASL gestures, performed by 222 signers.

- The dataset features predefined training, validation, and test splits, which facilitate reproducible research and robust benchmarking of ASL recognition systems.

- Preprocessing and augmentation strategies, combined with spatio-temporal attention and metric learning, have improved model accuracy for real-time continuous gesture recognition.

The MS-ASL (Microsoft American Sign Language) dataset is a large-scale, multi-signer dataset designed to advance automatic ASL gesture recognition. Comprising over 25,000 video clips performed by 222 distinct signers and spanning the 1,000 most frequently used ASL gestures, MS-ASL constitutes a significant resource for training, evaluating, and benchmarking vision-based sign language recognition systems. It includes predefined splits into training, validation, and test sets, thus facilitating reproducible research and rigorous evaluation protocols. MS-ASL's scale, diversity, and realistic signer and background variation distinguish it as a major step forward relative to its predecessors.

1. Dataset Properties and Structure

MS-ASL is referenced as a foundational dataset for ASL gesture recognition [DBLP:journals/corr/abs-1812-01053]. Its core attributes include:

- Composition: Over 25,000 short video clips, 222 unique signers, and coverage of the 1,000 most frequent ASL gestures.

- Splits: Predefined partitions for training, validation, and testing facilitate methodologically consistent experimentation.

- Diversity: Signers vary in appearance and setting, mitigating the background and subject biases present in earlier datasets such as ASLLVD.

- Annotation Scheme: Each video is labeled with a gesture class and annotated with temporal bounds, although the annotations contain a non-negligible amount of noise and sometimes imprecise gesture boundaries.

The scale and diversity of MS-ASL make it better suited than earlier datasets for building models that are robust and generalize to unconstrained, real-world ASL recognition scenarios.

2. Data Preparation and Augmentation Strategies

Model training used the largest available training set, covering all 1,000 gesture classes. Prior work trained on the 100-class subset; here, restricting training to those 100 classes did not improve performance and in some cases led to overfitting. Training on all 1,000 classes while reporting results on the 100-, 200-, 500-, and 1,000-class test splits therefore constitutes a methodological shift.
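The text does not spell out how a single 1,000-way model is scored on the smaller splits. One plausible convention, sketched here under stated assumptions, is to keep only the test clips whose label falls inside the K-class split and take the argmax over those K classes only. The sketch assumes, hypothetically, that class ids are ordered so that the K-class split consists of ids 0 to K-1; `subset_top1` is a hypothetical helper, not part of any MS-ASL tooling.

```python
from typing import Sequence


def subset_top1(logits: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Top-1 accuracy on the k-class split of a model trained on all classes.

    Hypothetical helper. Assumes the k-class split is class ids 0..k-1, keeps only
    test clips whose label is in that range, and takes the argmax over those k logits.
    """
    correct = total = 0
    for row, label in zip(logits, labels):
        if label >= k:
            continue  # clip belongs to a class outside this split
        pred = max(range(k), key=lambda c: row[c])  # argmax restricted to the split
        correct += int(pred == label)
        total += 1
    return correct / total if total else 0.0


# Toy example: 3 classes, 3 test clips.
logits = [[0.1, 0.9, 0.5], [0.8, 0.1, 0.95], [0.2, 0.3, 0.9]]
labels = [1, 0, 2]
print(subset_top1(logits, labels, k=2))  # 1.0
print(subset_top1(logits, labels, k=3))  # about 0.667
```

Under this convention, restricting the argmax removes confusions with classes outside the split, which is one reason accuracy can fall as K grows.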

Data preprocessing included the following steps:

- Temporal Windowing: Each input was a 16-frame segment sampled at 15 Hz, covering about 1.07 seconds of video (16/15 s), which closely matches typical gesture lengths in MS-ASL. During inference, the central segment of each annotated gesture clip was selected; sequences shorter than 16 frames were padded to 16 frames by duplicating the first frame.

- Spatial Cropping and Normalization: For each sequence, the bounding box enclosing the signer's face and both raised hands was computed and averaged over the sequence, and the cropped frames were resized to 224 × 224 pixels, yielding a 16 × 224 × 224 (frames × height × width) input for the network.

- Augmentation: Extensive augmentations included random brightness, contrast, saturation, and hue changes, random cropping and erasing, mixup, and temporal jitter, improving robustness to appearance and timing variation.

- Pretraining Regime: The backbone was first pretrained on ImageNet as a 2D network, then pretrained on Kinetics-700 in its S3D MobileNet-V3 3D configuration, and finally fine-tuned on MS-ASL.
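The windowing and cropping steps above can be sketched in plain Python. This is a minimal sketch under assumptions not stated in the text: frames are taken to be already resampled to 15 Hz, padding is placed at the front of the clip, a jittered window is clamped to stay inside the annotated segment, and boxes are `(x1, y1, x2, y2)` tuples. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def window_indices(start: int, end: int, length: int = 16, jitter: int = 0) -> List[int]:
    """Frame indices of one input clip taken from the annotated frames [start, end).

    jitter == 0 gives the central window used at inference; a nonzero jitter shifts
    it (temporal jitter during training), clamped so the window stays in the segment.
    Segments shorter than `length` are padded by repeating their first frame.
    """
    n = end - start
    if n < length:
        return [start] * (length - n) + list(range(start, end))
    offset = (n - length) // 2 + jitter
    offset = min(max(offset, 0), n - length)
    return list(range(start + offset, start + offset + length))


def enclosing_box(boxes: Sequence[Box]) -> Box:
    """Smallest box containing all given boxes (e.g. face and both hands in one frame)."""
    return (min(b[0] for b in boxes), min(b[1] for b in boxes),
            max(b[2] for b in boxes), max(b[3] for b in boxes))


def mean_box(per_frame: Sequence[Box]) -> Box:
    """Average per-frame boxes over the sequence to get one crop for the whole clip."""
    x1, y1, x2, y2 = zip(*per_frame)
    k = len(per_frame)
    return (sum(x1) / k, sum(y1) / k, sum(x2) / k, sum(y2) / k)


print(window_indices(0, 40))  # central 16 of 40 frames: indices 12..27
frame_box = enclosing_box([(10, 20, 30, 40), (5, 25, 20, 50)])  # (5, 20, 30, 50)
print(mean_box([frame_box, (7, 22, 32, 52)]))  # (6.0, 21.0, 31.0, 51.0)
```

The averaged box would then be used to crop every frame before resizing to 224 × 224, so the crop stays fixed across the 16-frame window.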

3. Evaluation Methodology and Metrics

The evaluation regime was shifted from the original MS-ASL protocol, which provided gesture-segmented clips and thus ground-truth gesture start and end times, to a continuous stream recognition paradigm. In this stricter framework, models must recognize gestures without explicit alignment, mirroring real-world continuous sign language understanding. For benchmarking:

- Test Input: The central temporal window of the annotated gesture was used. No test-time augmentation or over-sampling was applied.

- Metrics:

  - Mean top-1 accuracy (primary metric)

  - Mean Average Precision (mAP)

- Top-5 Metrics: Not reported, as top-5 accuracy was deemed unrepresentative of robustness in live deployment.

- Label Noise: The dataset contains "significant noise in annotation", with mislabelings and temporal boundary mismatches; this likely results in conservative estimates of true live-mode model performance.
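The two metrics can be sketched as follows. The text does not say whether "mean" top-1 accuracy averages over clips or over classes; this sketch assumes a per-class average. It computes mAP as the mean over classes of one-vs-rest average precision, skipping classes with no test clips. Both choices are assumptions, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def argmax(row: Sequence[float]) -> int:
    return max(range(len(row)), key=lambda c: row[c])


def mean_top1_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Top-1 accuracy per class, averaged over the classes present in the test set."""
    hits: Dict[int, List[int]] = defaultdict(lambda: [0, 0])  # class -> [correct, total]
    for row, label in zip(scores, labels):
        hits[label][0] += int(argmax(row) == label)
        hits[label][1] += 1
    return sum(c / t for c, t in hits.values()) / len(hits)


def average_precision(class_scores: Sequence[float], positives: Sequence[bool]) -> float:
    """AP for one class: rank clips by score, average the precision at each positive."""
    order = sorted(range(len(class_scores)), key=lambda i: -class_scores[i])
    found, precisions = 0, []
    for rank, i in enumerate(order, start=1):
        if positives[i]:
            found += 1
            precisions.append(found / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(scores: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    aps = []
    for c in range(len(scores[0])):
        positives = [label == c for label in labels]
        if any(positives):  # skip classes with no test clips
            aps.append(average_precision([row[c] for row in scores], positives))
    return sum(aps) / len(aps)


scores = [[0.9, 0.1, 0.0], [0.2, 0.7, 0.1], [0.6, 0.3, 0.1], [0.1, 0.2, 0.7]]
labels = [0, 1, 1, 2]
print(mean_top1_accuracy(scores, labels))      # (1 + 0.5 + 1) / 3
print(mean_average_precision(scores, labels))  # 1.0
```

In the toy example the third clip is misclassified, yet mAP stays at 1.0 because, for every class, its positive clips still rank above the negatives; this is one way the two metrics can diverge.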

4. Experimental Results and Model Specification

Accuracy falls steadily as the number of gesture classes in the test split grows. Key results under the continuous recognition protocol are given below.

| MS-ASL split | Top-1 (%) | mAP (%) |
|---|---|---|
| 100 signs | 85.00 | 87.79 |
| 200 signs | 79.66 | 83.06 |
| 500 signs | 63.36 | 70.01 |
| 1000 signs | 45.65 | 55.58 |

An ablation on the 100-sign split shows progressive improvement as augmentation and loss strategies are added. The final model combines spatio-temporal attention with a Total Variation (TV) loss:

| Method | Top-1 (%) | mAP (%) |
|---|---|---|
| AM-Softmax (base) | 73.93 | 76.01 |
| + temporal jitter | 76.49 | 78.63 |
| + dropout in each block | 77.11 | 79.45 |
| + continuous dropout | 77.35 | 79.85 |
| + extra metric-learning losses | 80.75 | 82.34 |
| + pr-product | 80.77 | 82.67 |
| + mixup | 82.61 | 86.40 |
| + spatio-temporal attention | 83.20 | 87.54 |
| + hard TV-loss | 85.00 | 87.79 |

The final continuous ASL recognition model is characterized by:

| Spatial size | Temporal size (frames) | Embedding size | Params (M) | GFLOPs |
|---|---|---|---|---|
| 224 × 224 | 16 | 256 | 4.13 | 6.65 |

The model is described as the fastest ASL recognition model with competitive metrics, and it supports real-time deployment on standard Intel® CPUs.

5. Comparative Context and Methodological Advances

Direct comparison with previous MS-ASL baselines is not possible because of the modified, stricter evaluation (continuous stream recognition rather than clip-level segmentation). This protocol is more difficult and closer to how recognition is used in real-world applications. Models trained on earlier datasets such as ASLLVD did not generalize well because of limited signer diversity and static backgrounds. In contrast, MS-ASL's variability is crucial for learning robust, appearance-invariant models.

Even the baseline (AM-Softmax, itself a metric-learning loss) achieved competitive results (73.93% top-1 on the 100-sign test split). Adding further metric-learning losses, additional augmentations, spatio-temporal attention, and TV regularization raised top-1 accuracy to 85.00% on the same split. The model is also compact, with 4.13M parameters and 6.65 GFLOPs, which supports real-time execution.

6. Challenges, Limitations, and Implications

Significant annotation noise remains a challenge: labels and gesture boundaries are inconsistent, so evaluation likely underestimates achievable live-mode accuracy. Despite its scale relative to earlier datasets, MS-ASL averages only about 25 clips per class (over 25,000 clips across 1,000 classes), and this limited per-class data, together with residual class imbalance, makes models prone to overfitting and calls for aggressive data augmentation and metric-learning approaches. The transition to continuous recognition protocols raises the difficulty and better reflects operational use, but further complicates direct benchmarking against prior segmentation-dependent studies. A plausible implication is the need for further research on robust sequence segmentation and label refinement in large-scale, open-world sign language recognition (SLR) settings.

7. Associated Loss Functions and Training Formulations

Relevant mathematical formulations for training recognition models on MS-ASL include:

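- AM-Softmax with max-entropy regularization:

$$\mathcal{L} = \mathcal{L}_{\text{AM}} - \alpha\, H(p), \qquad H(p) = -\sum_{k} p_k \log p_k,$$

$$\mathcal{L}_{\text{AM}} = -\log \frac{e^{s(\cos\theta_y - m)}}{e^{s(\cos\theta_y - m)} + \sum_{k \neq y} e^{s\cos\theta_k}},$$

where $p$ is the predicted distribution, $\alpha$ the balancing coefficient, $y$ the ground-truth class, $\theta_k$ the angle between the embedding and the weight vector of class $k$, $s$ a scale factor, and $m$ the additive margin.

- Total Variation (TV) Loss for spatio-temporal attention masks:

$$\mathcal{L}_{\text{TV}} = \sum_{i} \sum_{j \in \mathcal{N}(i)} \lvert c_i - c_j \rvert,$$

where $c_i$ is the confidence score at spatio-temporal coordinate $i = (t, h, w)$, and $j$ indexes the spatio-temporal neighbors $\mathcal{N}(i)$ of $i$.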
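Both losses can be sketched in plain Python for a single sample. This is a minimal sketch under assumptions: the defaults `s=30`, `m=0.35`, and `alpha=0.1` are illustrative values, not values reported for this model; `p` is taken to be the softmax over the scaled cosines without the margin; and the TV term counts each adjacent pair once on a T × H × W mask. How the "hard" TV variant in the ablation differs is not described, so it is not modeled. Function names are hypothetical.

```python
import math
from typing import List, Sequence


def log_sum_exp(z: Sequence[float]) -> float:
    mx = max(z)  # subtract the max for numerical stability
    return mx + math.log(sum(math.exp(v - mx) for v in z))


def am_softmax_loss(cosines: Sequence[float], y: int, s: float = 30.0, m: float = 0.35) -> float:
    """-log of the margin-adjusted softmax probability of the true class y."""
    logits = [s * (c - m) if k == y else s * c for k, c in enumerate(cosines)]
    return log_sum_exp(logits) - logits[y]


def regularized_am_loss(cosines: Sequence[float], y: int, s: float = 30.0,
                        m: float = 0.35, alpha: float = 0.1) -> float:
    """L_AM - alpha * H(p), with p the softmax of s * cos(theta_k) (no margin; an assumption)."""
    z = [s * c for c in cosines]
    lse = log_sum_exp(z)
    p = [math.exp(v - lse) for v in z]
    entropy = -sum(pk * math.log(pk) for pk in p if pk > 0)
    return am_softmax_loss(cosines, y, s, m) - alpha * entropy


def tv_loss(mask: List[List[List[float]]]) -> float:
    """Sum of |c_i - c_j| over adjacent cells of a T x H x W mask, each pair counted once."""
    T, H, W = len(mask), len(mask[0]), len(mask[0][0])
    total = 0.0
    for t in range(T):
        for h in range(H):
            for w in range(W):
                c = mask[t][h][w]
                if t + 1 < T:
                    total += abs(c - mask[t + 1][h][w])
                if h + 1 < H:
                    total += abs(c - mask[t][h + 1][w])
                if w + 1 < W:
                    total += abs(c - mask[t][h][w + 1])
    return total


print(am_softmax_loss([0.5, 0.1], y=0, s=10.0, m=0.3))  # about 0.313
print(tv_loss([[[0.0, 1.0], [1.0, 0.0]]]))              # 4.0 for a 2 x 2 checkerboard
```

With $s = 10$ and $m = 0.3$ the example loss is $\log(1 + e^{-1}) \approx 0.313$, versus $\log(1 + e^{-4}) \approx 0.018$ without the margin: the margin keeps penalizing predictions that are correct but not well separated. The TV term is zero for a constant mask and grows with every change between neighboring cells, which pushes attention masks toward smooth, contiguous regions.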

MS-ASL represents a pivotal resource for advancing ASL gesture recognition. Its adoption has enabled the development and evaluation of lightweight, real-time models using metric-learning and attention mechanisms, establishing stricter benchmarks via challenging continuous recognition protocols and reinforcing its role as a critical underpinning for current and future ASL research.