Metacognitive Reuse Framework

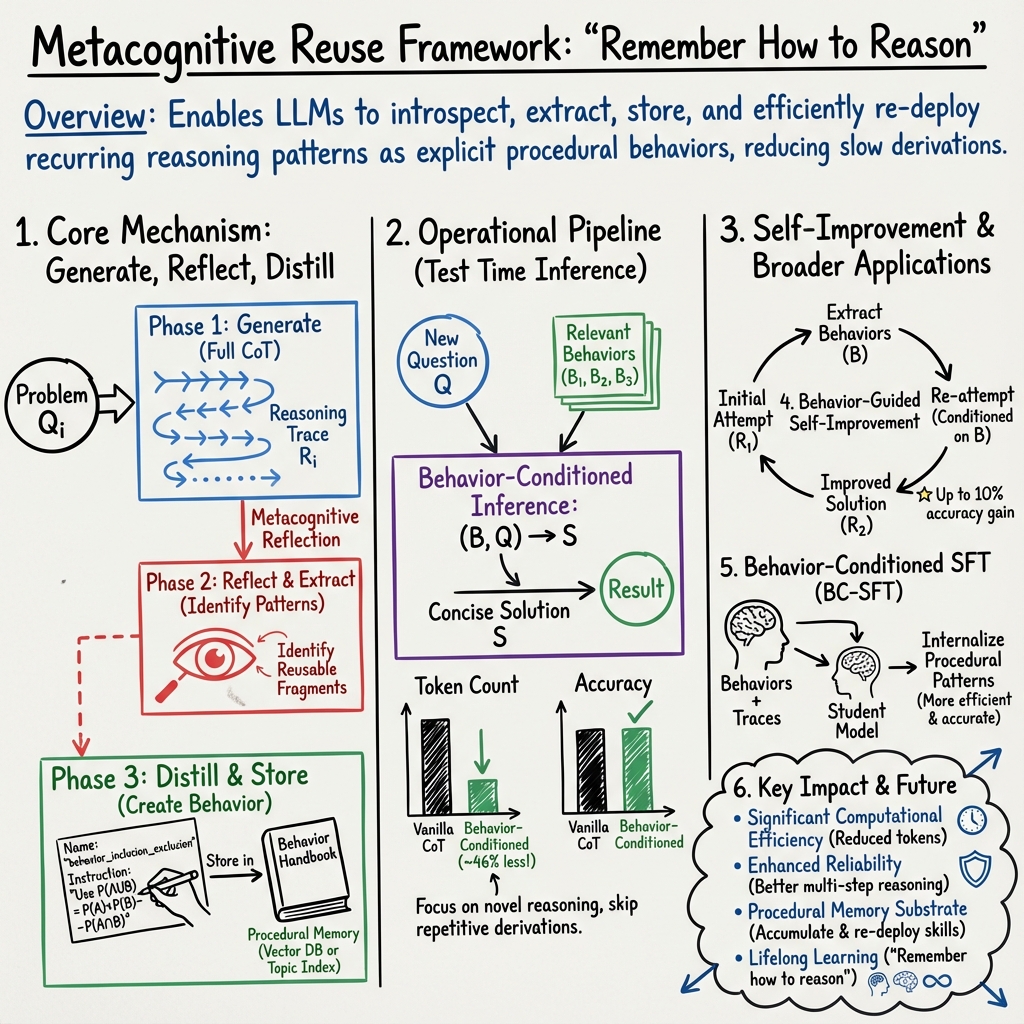

- The metacognitive reuse framework is a formal method that enables AI systems to introspect, extract, and reuse recurring reasoning patterns as concise procedural behaviors.

- It employs a three-phase process: initial full chain-of-thought solution, metacognitive reflection to extract patterns, and distillation into named behaviors for efficient retrieval.

- Reported experiments show up to a 46% reduction in reasoning tokens at inference and up to a 10% accuracy gain in self-improvement settings, indicating improved computational efficiency and a capacity for self-improvement in LLMs.

A metacognitive reuse framework formalizes how an intelligent system can introspectively analyze its reasoning process to extract, store, and efficiently re-deploy recurring inference patterns as explicit procedural behaviors. This paradigm endows LLMs and similar agents with the ability to “remember how to reason,” transitioning from repeatedly emitting lengthy derivations to composing solutions via concise, modular behaviors. Not only does this approach drastically improve computational efficiency, it also enables self-improvement and more reliable, systematic generalization across problem domains (Didolkar et al., 16 Sep 2025). The following sections detail the key methodological components, operational pipeline, theoretical underpinnings, empirical findings, and broader implications.

1. Metacognitive Reuse Mechanism

The core mechanism involves three phases: (1) initial solution generation as a full chain-of-thought (CoT) for a multi-step problem, (2) metacognitive reflection on the generated trace to identify and extract repeated and generalizable reasoning fragments, and (3) distillation of these fragments into succinct, named behaviors that can be reused directly in further problem-solving.

Operationally, for each problem $Q$:

- The model generates a solution, including its full reasoning trace, via an initial "Solution Prompt".

- A subsequent "Reflection Prompt" is used to analyze that trace, identifying sub-sequences that correspond to reusable skills or decision patterns.

- These fragments are transformed into (name, instruction) pairs by a "Behavior Prompt", where each behavior encapsulates a procedural principle (e.g., application of inclusion-exclusion, systematic casework counting, or canonical factorizations in algebra).

- The behavior extraction process is strictly prompt-driven and leverages the model’s own self-explanation capabilities.

The result is an evolving repository of behaviors that is continuously augmented as new problems are solved and reflected upon. Formally, the behavior-conditioned inference step at test time can be expressed as:

$$(B, Q) \rightarrow S$$

where $B$ is the set of relevant behaviors retrieved for the new question $Q$, and $S$ is a concise, guided solution.
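The three prompt-driven phases described in this section can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `LLM` callable, the prompt wording, the `behavior_name: instruction` output format, and the `fake_llm` stub are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical interface: any function that maps a prompt string to a completion.
LLM = Callable[[str], str]


@dataclass(frozen=True)
class Behavior:
    name: str         # e.g. "behavior_inclusion_exclusion"
    instruction: str  # short procedural hint


def parse_behaviors(text: str) -> List[Behavior]:
    """Parse lines of the assumed form 'behavior_name: instruction'."""
    behaviors = []
    for line in text.splitlines():
        name, sep, instruction = line.partition(":")
        if sep and name.strip().startswith("behavior_"):
            behaviors.append(Behavior(name.strip(), instruction.strip()))
    return behaviors


def extract_behaviors(llm: LLM, question: str) -> List[Behavior]:
    # Phase 1: full chain-of-thought solution.
    solution = llm(f"[Solution Prompt]\nQuestion: {question}\nSolve step by step.")
    # Phase 2: reflection on the generated trace.
    reflection = llm(
        f"[Reflection Prompt]\nQuestion: {question}\nSolution: {solution}\n"
        "Which steps are reusable, generalizable patterns?"
    )
    # Phase 3: distillation into named (name, instruction) pairs.
    raw = llm(
        f"[Behavior Prompt]\nReflection: {reflection}\n"
        "List one behavior per line as 'behavior_name: instruction'."
    )
    return parse_behaviors(raw)


def update_handbook(handbook: Dict[str, Behavior], new: List[Behavior]) -> None:
    """Add new behaviors; an existing name keeps its first instruction."""
    for behavior in new:
        handbook.setdefault(behavior.name, behavior)


def fake_llm(prompt: str) -> str:
    """Canned stand-in for a real model, used only to exercise the pipeline."""
    if prompt.startswith("[Behavior Prompt]"):
        return ("behavior_inclusion_exclusion: Subtract the overlap to avoid double-counting\n"
                "this line is ignored")
    return "stub reasoning text"


handbook: Dict[str, Behavior] = {}
question = "How many integers from 1 to 100 are divisible by 2 or 3?"
update_handbook(handbook, extract_behaviors(fake_llm, question))
print(sorted(handbook))
```

Keying the handbook by behavior name is one simple way to keep the repository from accumulating duplicates as more problems are processed; a real system might instead merge or re-rank near-duplicate behaviors.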

2. Behavior Handbook: Structure and Retrieval

Extracted behaviors are systematically stored in a "behavior handbook", a structured procedural memory containing named instructions. Each behavior is indexed either by subject domain annotations (for topic-keyed retrieval) or embedded into dense vector representations (for content-based retrieval with vector databases like FAISS).

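| Behavior Name | Instruction Example |
|---|---|
| behavior_inclusion_exclusion | Use $\lvert A \cup B \rvert = \lvert A \rvert + \lvert B \rvert - \lvert A \cap B \rvert$ to avoid double-counting |
| behavior_completing_square | Rewrite $ax^2 + bx + c$ as $a\left(x + \frac{b}{2a}\right)^2 + c - \frac{b^2}{4a}$ |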

- For topic-annotated datasets (e.g., MATH), behaviors are fetched by matching the problem's topic label against the topic under which each behavior is stored.

- For datasets without topic annotations (e.g., AIME), queries and behaviors are embedded via a pre-trained model (e.g., BGE-M3), and the behaviors most similar to each query are retrieved.

This design enables efficient procedural knowledge recall, acting as a memory substrate for reasoning skills that truncates repetitive, low-level derivations.
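The two retrieval modes can be illustrated with a small, dependency-free sketch. Everything here is an assumption for illustration: the handbook entries and topic labels are invented, and `embed` is a bag-of-words stand-in for a dense encoder such as BGE-M3, with brute-force cosine similarity standing in for a vector index such as FAISS.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

# Toy handbook: behavior name -> (topic label, instruction). Entries are illustrative.
HANDBOOK: Dict[str, Tuple[str, str]] = {
    "behavior_inclusion_exclusion": (
        "counting", "Subtract the overlap when counting a union of sets"),
    "behavior_completing_square": (
        "algebra", "Rewrite a quadratic as a squared term plus a constant"),
}


def retrieve_by_topic(topic: str) -> List[str]:
    """Topic-keyed retrieval: return behaviors stored under the given topic."""
    return [name for name, (t, _) in HANDBOOK.items() if t == topic]


def embed(text: str) -> Counter:
    """Stand-in embedding: word counts (a real system would use a dense encoder)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve_by_similarity(query: str, k: int = 1) -> List[str]:
    """Content-based retrieval: rank behaviors by similarity to the query."""
    q = embed(query)
    ranked = sorted(HANDBOOK,
                    key=lambda name: cosine(q, embed(HANDBOOK[name][1])),
                    reverse=True)
    return ranked[:k]


print(retrieve_by_topic("algebra"))
print(retrieve_by_similarity("counting the union of two sets"))
```

Topic lookup is exact and cheap but needs labeled problems; similarity search needs no labels at the cost of an embedding pass per query.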

3. Behavior-Conditioned Inference

At inference, the model is supplied with a curated set of relevant behaviors along with the problem. The prompt structure is: (Behaviors, Question) → Solution. This guidance allows the model to bypass repetitive internal derivations of well-established steps, focusing on novel or composite reasoning.
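A behavior-conditioned prompt of this shape might be assembled as in the sketch below. The exact template (section labels, bullet format) is an assumption; the source only specifies that behaviors and the question are supplied together.

```python
from typing import List, Tuple


def build_behavior_prompt(behaviors: List[Tuple[str, str]], question: str) -> str:
    """Place retrieved (name, instruction) pairs ahead of the question."""
    lines = ["Behaviors:"]
    lines += [f"- {name}: {instruction}" for name, instruction in behaviors]
    lines += ["", f"Question: {question}", "Solution:"]
    return "\n".join(lines)


prompt = build_behavior_prompt(
    [("behavior_inclusion_exclusion", "Subtract the overlap to avoid double-counting")],
    "How many integers from 1 to 100 are divisible by 2 or 3?",
)
print(prompt)
```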

Experimental results show:

- Up to 46% reduction in test-time reasoning token count compared to vanilla CoT solvers.

- Matched or improved accuracy, indicating that concise behaviors safely replace extended derivations for well-learned skills.

Behavior-conditioned inference thus allows the LLM to optimize its limited context length for exploration and higher-level reasoning, rather than recapitulating known patterns.

4. Behavior-Guided Self-Improvement

The framework supports online self-improvement without parameter updates. After an initial attempt at a solution, the model extracts behaviors from its own chain-of-thought and then re-attempts the problem (or a similar one) while explicitly conditioning on these behaviors. The process is as follows:

- Generate an initial solution for the problem.

- Metacognitively extract behaviors from that solution's reasoning trace.

- Re-solve the problem while conditioning on the extracted behaviors.
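The loop above can be sketched as three model calls with no parameter updates. The `LLM` callable, the prompt wording, and the `recording_llm` stub are hypothetical and exist only to make the control flow concrete.

```python
from typing import Callable, List

# Hypothetical interface: any function that maps a prompt string to a completion.
LLM = Callable[[str], str]


def self_improve(llm: LLM, question: str) -> str:
    # Step 1: initial attempt with full reasoning.
    first_attempt = llm(f"Question: {question}\nSolve step by step.")
    # Step 2: extract behaviors from the model's own trace.
    behaviors = llm(f"Extract reusable behaviors from this solution:\n{first_attempt}")
    # Step 3: re-solve, conditioning on the extracted behaviors.
    return llm(f"Behaviors:\n{behaviors}\n\nQuestion: {question}\nSolution:")


calls: List[str] = []


def recording_llm(prompt: str) -> str:
    """Canned stand-in for a real model that records every prompt it receives."""
    calls.append(prompt)
    if prompt.startswith("Extract"):
        return "behavior_casework: Split into disjoint cases and sum the counts"
    return "stub solution"


final = self_improve(recording_llm, "How many 3-digit numbers have distinct digits?")
print(len(calls), "model calls; final answer:", final)
```

Unlike a critique-and-revise loop, the second attempt here sees only the distilled behaviors rather than the full first attempt, which is the distinction the comparison below turns on.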

Empirical comparisons with a naive "critique-and-revise" baseline reveal that behavior-conditioned self-refinement yields up to a 10% accuracy gain, especially under relaxed token budgets. This demonstrates that LLMs can "learn from themselves" by preserving and reusing their own extracted strategies.

5. Behavior-Conditioned Supervised Fine-Tuning (BC-SFT)

The framework also integrates with supervised fine-tuning (SFT) to distill procedural reasoning capabilities into model parameters. The pipeline is:

- For each training instance, generate behaviors and behavior-conditioned solution traces.

- Use these pairs to fine-tune a student model, with the teacher providing annotated behaviors as context.

- After training, the student model internalizes these procedural patterns and can produce concise, effective reasoning—even for initially non-reasoning (or "calculator-style") models.
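One way to picture the data-construction step is the sketch below. It assumes that the teacher sees the behaviors in its prompt while the student's training input contains only the question; the source does not spell out the exact record format, so the field names, the `get_behaviors` and `teacher` callables, and the JSONL output are all illustrative.

```python
import json
from typing import Callable, Dict, List


def build_bc_sft_records(
    questions: List[str],
    get_behaviors: Callable[[str], List[str]],  # hypothetical retrieval function
    teacher: Callable[[str], str],              # hypothetical teacher model
) -> List[Dict[str, str]]:
    """Pair each question with a teacher solution written under behavior guidance."""
    records = []
    for question in questions:
        behaviors = get_behaviors(question)
        teacher_prompt = (
            "Behaviors:\n" + "\n".join(behaviors)
            + f"\n\nQuestion: {question}\nSolution:"
        )
        # Assumption: the student input omits the behaviors so that the
        # procedural patterns are absorbed into its parameters.
        records.append({"prompt": question, "completion": teacher(teacher_prompt)})
    return records


records = build_bc_sft_records(
    ["What is the minimum of x^2 + 4x + 7?"],
    get_behaviors=lambda q: ["behavior_completing_square: Rewrite as a squared term plus a constant"],
    teacher=lambda p: "x^2 + 4x + 7 = (x + 2)^2 + 3, so the minimum is 3.",
)
for record in records:
    print(json.dumps(record))
```

The resulting records could then be fed to any standard SFT trainer; that step is omitted here because it depends on the training stack.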

The reported experiments indicate that BC-SFT outperforms vanilla SFT in both accuracy and token efficiency, and is more effective at converting base models into robust CoT reasoners.

6. Impact and Implications

The metacognitive reuse framework constitutes a principled methodology for converting slow, resource-intensive derivations into fast procedural hints, resulting in:

- Significant improvements in computational efficiency (reduced token usage at inference and during training).

- Enhanced solution quality (better accuracy and reliability in mathematical and multi-step reasoning benchmarks).

- A procedural memory substrate, in which LLMs systematically accumulate and re-deploy their reasoning skills across examples and domains.

- Scalable applicability across a spectrum of tasks (mathematics, programming, theorem proving, dialogue) by abstracting domain-agnostic reasoning behaviors.

- A path toward LLMs and neural agents that do not merely "know what" to conclude, but "remember how" to reason, promoting compositionality, generalization, and lifelong learning.

This paradigm demonstrates the critical role of metacognitive processes—reflection, proceduralization, and memory—in enabling efficient, systematic, and adaptive reasoning in LLMs, advancing the field toward more autonomous and self-improving AI systems (Didolkar et al., 16 Sep 2025).