Logical Leakage in Backtesting

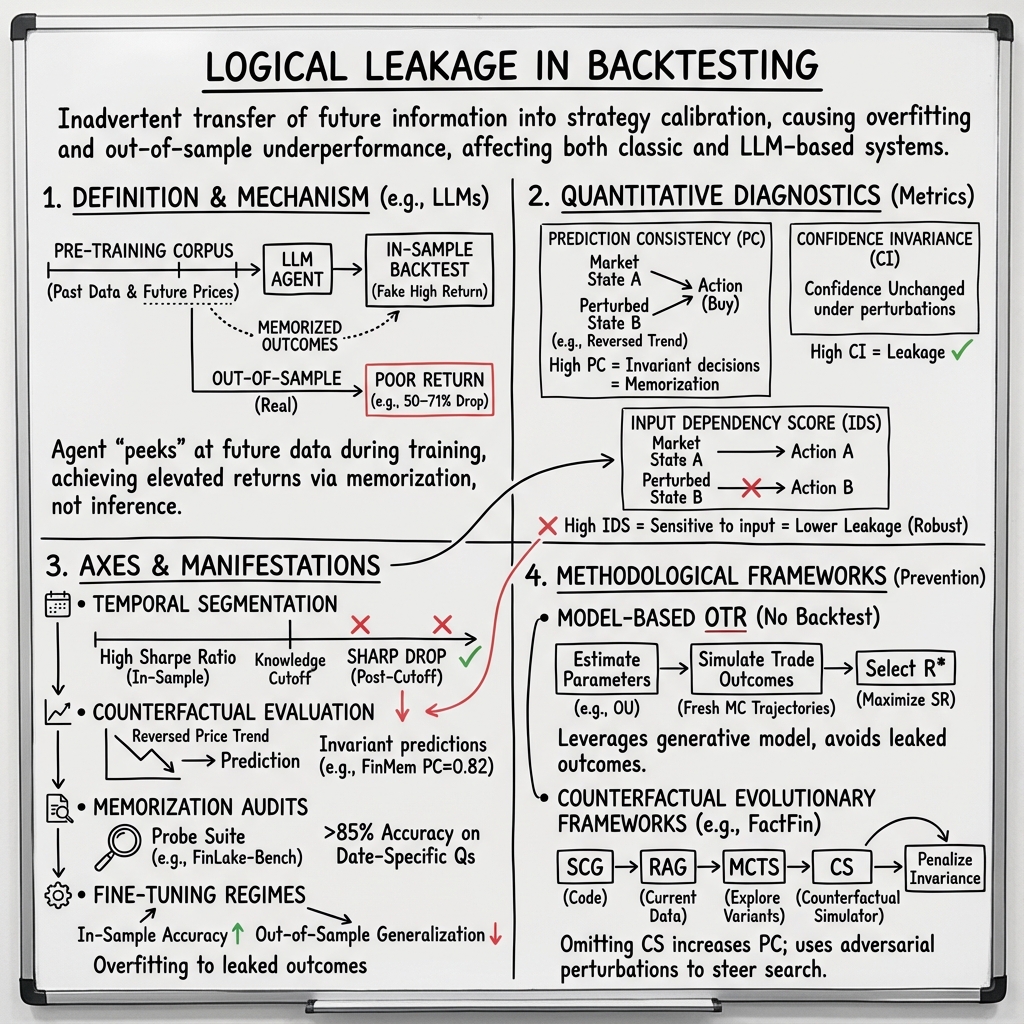

- Logical leakage in backtesting is the inadvertent use of future or realized outcomes when calibrating trading strategies, which causes overfitting and reduces out-of-sample performance.

- It is diagnosed with metrics such as the gap between in-sample and out-of-sample Sharpe ratios and, for LLM agents, Prediction Consistency, Confidence Invariance, and Input Dependency Score, which reveal inflated performance.

- Preventative methodologies include simulation-based selection of the optimal trading rule (OTR), counterfactual optimization, and adversarial fine-tuning to ensure strategies generalize well beyond the test period.

Logical leakage in backtesting refers to the inadvertent transfer of information about realized outcomes—often unavailable in real-world, out-of-sample scenarios—into the calibration or evaluation of trading strategies. This phenomenon results in overfitting, where the backtested strategy’s parameters (chosen post hoc) capture idiosyncratic in-sample features rather than robust signals, thereby causing subsequent out-of-sample underperformance. Recent research has underscored long-standing risks of logical leakage not only in classic rule-based trading but also in the context of LLMs and other machine learning-driven financial agents.

1. Formal Definition and Mechanisms of Logical Leakage

Logical leakage emerges when parameter selection “peeks” at outcomes that would not be known ex ante, effectively embedding future information into predictive or decision-making processes. Formally, consider a trading rule $R = (\bar{\pi}, \underline{\pi})$, e.g., a profit-taking threshold $\bar{\pi}$ paired with a stop-loss threshold $\underline{\pi}$, and a backtest that computes its Sharpe ratio as

$$SR_R = \frac{\mathrm{E}[\pi_i \mid R]}{\sqrt{\mathrm{V}[\pi_i \mid R]}},$$

where $\pi_i$ denotes the realized profit of trade $i$ over $i = 1, \dots, N$ trades. Maximizing $SR_R$ within a candidate set $\Omega$,

$$R^* = \arg\max_{R \in \Omega} SR_R,$$

leverages in-sample returns that incorporate randomness or noise unique to the data window. This process “leaks” historical outcomes into the construction of $R^*$, making it tailored to the particularities of the backtest period instead of the underlying data-generating process. Bailey et al. define $R^*$ as overfit if its expected out-of-sample Sharpe ratio falls below the median across $\Omega$, i.e. $\mathrm{E}[SR^{\text{oos}}_{R^*}] < \mathrm{Me}_{\Omega}\big[\mathrm{E}[SR^{\text{oos}}_{R}]\big]$ (Carr et al., 2014).
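A small simulation makes the selection effect concrete. The sketch below is illustrative and not taken from Carr et al.: it assumes 200 hypothetical candidate rules whose per-trade profits are pure zero-mean noise, picks the rule with the highest in-sample Sharpe ratio, and then applies the median-based overfitting criterion, using the realized out-of-sample Sharpe ratio as a stand-in for its expectation.

```python
import numpy as np


def sharpe(returns: np.ndarray) -> float:
    """Per-period Sharpe ratio: mean over sample standard deviation (not annualized)."""
    sd = returns.std(ddof=1)
    return float(returns.mean() / sd) if sd > 0 else 0.0


def is_overfit(sr_oos: np.ndarray, best_idx: int) -> bool:
    """Overfit if the selected rule's OOS Sharpe is below the median OOS Sharpe of the set."""
    return bool(sr_oos[best_idx] < np.median(sr_oos))


rng = np.random.default_rng(0)
n_rules, n_is, n_oos = 200, 250, 250
# Hypothetical candidate set: no rule has real skill, every profit stream is N(0, 1) noise.
pnl_is = rng.normal(0.0, 1.0, size=(n_rules, n_is))
pnl_oos = rng.normal(0.0, 1.0, size=(n_rules, n_oos))

sr_is = np.array([sharpe(p) for p in pnl_is])
sr_oos = np.array([sharpe(p) for p in pnl_oos])

best = int(np.argmax(sr_is))  # the selection step "peeks" at in-sample outcomes
print(f"in-sample SR of selected rule:     {sr_is[best]:.3f}")
print(f"out-of-sample SR of selected rule: {sr_oos[best]:.3f}")
print(f"median out-of-sample SR:           {np.median(sr_oos):.3f}")
print("overfit:", is_overfit(sr_oos, best))
```

Because every rule is noise, the winner's in-sample Sharpe ratio is inflated purely by selection, while its out-of-sample Sharpe ratio is just another draw around zero.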

In LLM-based financial agents, leakage manifests when an agent’s knowledge—acquired from broad-corpus pretraining—encompasses information about future prices, news, or events that fall within the same period as the backtest. Here, the agent achieves elevated backtest returns not via genuine inference but by reciting memorized outcomes from its training data (Li et al., 9 Oct 2025).

2. Quantitative Diagnostics and Leakage Metrics

Quantifying logical leakage requires measurements that can robustly distinguish genuine predictive skill from performance attributable to leaked information. In the classic context, the gap between in-sample and out-of-sample Sharpe ratios is a direct indicator of overfitting induced by logical leakage (Carr et al., 2014). The shape of the Sharpe ratio across the parameter grid is also informative: a flat Sharpe “landscape” implies that no robust optimal trading rule (OTR) exists, while a sharp, isolated maximum typically signals overfitting to in-sample fluctuations.
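The contrast between a flat landscape, a broad optimum, and an isolated spike can be summarized numerically. The heuristic below is a hypothetical illustration, not a metric from Carr et al.: it compares the peak of a Sharpe-ratio grid with the mean of its immediate neighbours (a large drop suggests an isolated spike) and with the grid median (a spread near zero suggests a flat landscape).

```python
import numpy as np


def landscape_shape(sr_grid: np.ndarray) -> dict:
    """Hypothetical heuristic describing the shape of a 2-D Sharpe-ratio grid."""
    if sr_grid.ndim != 2 or sr_grid.size < 2:
        raise ValueError("need a 2-D grid with at least two cells")
    i, j = np.unravel_index(np.argmax(sr_grid), sr_grid.shape)
    peak = float(sr_grid[i, j])
    # 3x3 window centred on the peak, clipped at the grid borders.
    window = sr_grid[max(i - 1, 0):i + 2, max(j - 1, 0):j + 2]
    neighbour_mean = (window.sum() - peak) / (window.size - 1)
    return {
        "peak": peak,
        "local_drop": peak - float(neighbour_mean),         # large: isolated spike
        "global_spread": peak - float(np.median(sr_grid)),  # near zero: flat landscape
    }


x = np.linspace(-1.0, 1.0, 9)
hill = 1.0 - (x[:, None] ** 2 + x[None, :] ** 2)  # broad, smooth optimum
spike = np.zeros((9, 9))
spike[4, 4] = 1.0                                  # single isolated maximum
flat = np.full((9, 9), 0.2)                        # no optimum at all

for name, grid in [("hill", hill), ("spike", spike), ("flat", flat)]:
    print(name, landscape_shape(grid))
```

Only the hill combines a small local drop with a large global spread, which is the profile one would hope to see around a robust OTR.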

In LLM-driven systems, Li et al. introduce counterfactual-based metrics:

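- Prediction Consistency (PC): how often the agent's decision stays the same when its input is counterfactually perturbed. High PC indicates decisions invariant to substantive input perturbations, evidencing memorization.

- Confidence Invariance (CI): how stable the agent's confidence is across original and perturbed inputs. High CI implies the agent's confidence remains unchanged under input changes, again implicating leakage.

- Input Dependency Score (IDS): how much the agent's action distribution shifts between original and perturbed inputs. Higher IDS indicates that the action distribution is sensitive to input variation, reflecting lower leakage (Li et al., 9 Oct 2025).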

Empirically, LLM agents exhibit PC > 0.7 and CI > 0.7 on counterfactually perturbed market states, a signature of memorization, whereas robust systems target low PC and CI and high IDS.
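Li et al. define these metrics formally, but the exact formulas are not reproduced here, so the functions below are hypothetical stand-ins that only follow the qualitative descriptions: PC as the share of unchanged decisions, CI as one minus the mean absolute change in confidence, and IDS as the mean total-variation distance between action distributions on original and perturbed inputs.

```python
import numpy as np


def prediction_consistency(actions_orig, actions_cf) -> float:
    """Share of cases whose discrete decision is unchanged under perturbation."""
    return float(np.mean(np.asarray(actions_orig) == np.asarray(actions_cf)))


def confidence_invariance(conf_orig, conf_cf) -> float:
    """1 - mean absolute change in confidence; confidences assumed to lie in [0, 1]."""
    c0 = np.asarray(conf_orig, dtype=float)
    c1 = np.asarray(conf_cf, dtype=float)
    return float(1.0 - np.mean(np.abs(c0 - c1)))


def input_dependency_score(probs_orig, probs_cf) -> float:
    """Mean total-variation distance between action distributions (each row sums to 1)."""
    p = np.asarray(probs_orig, dtype=float)
    q = np.asarray(probs_cf, dtype=float)
    return float(np.mean(0.5 * np.abs(p - q).sum(axis=1)))


# Toy agent outputs on original vs. counterfactual inputs (made-up numbers).
acts, acts_cf = ["buy", "buy", "sell", "hold"], ["sell", "buy", "buy", "hold"]
conf, conf_cf = [0.9, 0.8, 0.7, 0.6], [0.5, 0.8, 0.3, 0.6]
probs = [[0.8, 0.1, 0.1], [0.2, 0.7, 0.1]]
probs_cf = [[0.1, 0.8, 0.1], [0.2, 0.7, 0.1]]

print("PC :", prediction_consistency(acts, acts_cf))
print("CI :", confidence_invariance(conf, conf_cf))
print("IDS:", input_dependency_score(probs, probs_cf))
```

A fully memorizing agent would return identical outputs for both inputs, giving PC = CI = 1 and IDS = 0 under these definitions.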

3. Axes and Manifestations of Leakage

Logical leakage through backtesting surfaces along multiple dimensions:

- Temporal Segmentation: When an LLM’s knowledge covers the backtest period, post-cutoff returns deteriorate sharply. For example, after the knowledge window closes, Sharpe ratios across evaluated LLM agents drop by 50–71.85%, even though underlying market conditions are stable (Li et al., 9 Oct 2025).

- Counterfactual Evaluation: Agents that produce invariant predictions under substantial perturbations (e.g., reversed price trends, altered fundamentals) provide evidence of outcome-memorization. For instance, FinMem’s PC=0.8213 and CI=0.8743 across ten large-cap stocks exemplify this effect (Li et al., 9 Oct 2025).

- Memorization Audits: Tools such as FinLake-Bench, a 2,000-question probe suite, reveal LLMs scoring 85% accuracy on date-specific market questions. Trend-prediction recall surpasses 90%, confirming the agents recall—rather than infer—historical outcomes.

- Fine-Tuning Regimes: Fine-tuning can raise in-sample accuracy (e.g., Qwen2.5-7B rising from 51.6% to 72.2%) while markedly degrading out-of-sample generalization (down 21.5%), indicating that the model is fitting leaked in-sample outcomes (Li et al., 9 Oct 2025).
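Counterfactual inputs of the kind described above can be generated mechanically. The sketch below assumes two hypothetical perturbations (negating log-returns to reverse the trend, and multiplicative noise) and two toy agents: one that looks its answer up by date, standing in for a memorizing model, and one that reads the price trend. Only the second changes its decision when the trend is reversed.

```python
import numpy as np


def reverse_trend(prices):
    """Counterfactual path with every log-return negated (an uptrend becomes a downtrend)."""
    p = np.asarray(prices, dtype=float)
    log_ret = np.diff(np.log(p))
    return p[0] * np.exp(np.concatenate([[0.0], np.cumsum(-log_ret)]))


def add_noise(prices, scale, rng):
    """Counterfactual path with multiplicative log-normal noise on each price."""
    p = np.asarray(prices, dtype=float)
    return p * np.exp(rng.normal(0.0, scale, size=p.shape))


def memorizing_agent(day, prices, memory):
    return memory[day]  # ignores prices entirely: the answer is recalled by date


def trend_agent(day, prices, memory):
    # `memory` is unused; it is accepted only to share the agent interface.
    return "buy" if prices[-1] > prices[0] else "sell"


def pc_under(agent, windows, perturb, memory):
    """Share of windows where the agent's decision survives the perturbation."""
    same = [agent(d, p, memory) == agent(d, perturb(p), memory) for d, p in windows]
    return sum(same) / len(same)


windows = [("2024-01-02", [100.0, 101.0, 103.0]), ("2024-01-03", [100.0, 98.0, 97.0])]
memory = {"2024-01-02": "buy", "2024-01-03": "sell"}  # hypothetical memorized outcomes

print("memorizing agent PC:", pc_under(memorizing_agent, windows, reverse_trend, memory))
print("trend agent PC:     ", pc_under(trend_agent, windows, reverse_trend, memory))

rng = np.random.default_rng(1)
print("noisy path:", add_noise(windows[0][1], 0.01, rng))
```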

4. Methodological Frameworks to Prevent Logical Leakage

Methodologies for leakage avoidance aim to ensure that strategy calibration and policy evolution do not utilize information that would not be present in live deployment:

Model-Based OTR Calculation Without Backtesting

Carr et al. (2014) present a procedure for determining the optimal trading rule without backtesting, using a discrete Ornstein–Uhlenbeck (OU) process as the generative model:

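- Step 1: Estimate the OU parameters $\varphi$ (the autoregressive persistence coefficient) and $\sigma$ (the volatility of the noise term) from observed data.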

- Step 2: Discretize the space of profit-taking and stop-loss thresholds into a grid of candidate pairs.

- Step 3: For each grid point, simulate trade outcomes on fresh Monte Carlo trajectories of the OU process, independent of realized returns, and compute the Sharpe ratio of the simulated trade profits.

- Step 4: Select the pair with the highest simulated Sharpe ratio, $R^* = \arg\max_{R \in \Omega} SR_R$.

- Step 5: Optionally, compute conditional variants in which either the profit-taking or the stop-loss threshold is fixed in advance.

This protocol ensures that the optimization relies only on the parameters of the data-generating process, not on in-sample realized outcomes, thus eliminating logical leakage (Carr et al., 2014).
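Steps 1 to 4 can be sketched in a few dozen lines of NumPy. The sketch assumes the discrete OU form $P_t = (1-\varphi)\,\mu + \varphi P_{t-1} + \sigma\varepsilon_t$ with Gaussian $\varepsilon_t$, where $\mu$ is the level the process reverts to (an assumed equilibrium for the historical series, and a hypothetical profit forecast for simulated trades). It estimates $\varphi$ and $\sigma$ by least squares, then simulates trades that start at zero profit and exit at the profit-taking threshold, the stop-loss threshold, or a maximum holding period. Grid values, path counts, and the holding limit are illustrative choices, not values from Carr et al., and the conditional variants of Step 5 are omitted.

```python
import numpy as np


def estimate_ou(prices, level):
    """Step 1: least-squares estimates of phi and sigma for a discrete OU series around `level`."""
    x = np.asarray(prices, dtype=float) - level
    x_prev, x_next = x[:-1], x[1:]
    phi = float(x_prev @ x_next / (x_prev @ x_prev))
    sigma = float((x_next - phi * x_prev).std(ddof=1))
    return phi, sigma


def simulate_exit_profits(phi, sigma, forecast, pt, sl, n_paths, max_hold, rng):
    """Step 3: profits at exit for fresh OU paths starting at zero profit."""
    pnl = np.zeros(n_paths)
    exit_pnl = np.zeros(n_paths)
    active = np.ones(n_paths, dtype=bool)
    for _ in range(max_hold):
        pnl = (1.0 - phi) * forecast + phi * pnl + sigma * rng.standard_normal(n_paths)
        hit = active & ((pnl >= pt) | (pnl <= -sl))  # profit-take or stop-loss triggered
        exit_pnl[hit] = pnl[hit]
        active &= ~hit
        if not active.any():
            break
    exit_pnl[active] = pnl[active]  # remaining trades close at the holding limit
    return exit_pnl


def optimal_trading_rule(phi, sigma, forecast, pt_grid, sl_grid,
                         n_paths=2000, max_hold=100, seed=0):
    """Steps 3-4: simulated Sharpe ratio for every grid pair (Step 2), then the argmax."""
    rng = np.random.default_rng(seed)
    sr = np.empty((len(pt_grid), len(sl_grid)))
    for a, pt in enumerate(pt_grid):
        for b, sl in enumerate(sl_grid):
            pnl = simulate_exit_profits(phi, sigma, forecast, pt, sl, n_paths, max_hold, rng)
            sr[a, b] = pnl.mean() / pnl.std(ddof=1)
    a, b = np.unravel_index(np.argmax(sr), sr.shape)
    return pt_grid[a], sl_grid[b], sr


# Synthetic "observed" history from a known OU process (illustrative parameters).
rng = np.random.default_rng(42)
true_phi, true_sigma, level = 0.9, 1.0, 0.0
history = np.empty(5000)
history[0] = level
for t in range(1, history.size):
    history[t] = ((1 - true_phi) * level + true_phi * history[t - 1]
                  + true_sigma * rng.standard_normal())

phi_hat, sigma_hat = estimate_ou(history, level)
grid = np.linspace(0.5, 5.0, 5)  # hypothetical profit-taking / stop-loss thresholds
best_pt, best_sl, sr_grid = optimal_trading_rule(phi_hat, sigma_hat, forecast=2.0,
                                                 pt_grid=grid, sl_grid=grid)
print(f"phi={phi_hat:.3f} sigma={sigma_hat:.3f} -> profit-take={best_pt}, stop-loss={best_sl}")
```

The historical series enters only through $\hat\varphi$ and $\hat\sigma$; its realized trade profits are never used to rank the candidate rules.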

Counterfactual Evolutionary Frameworks

FactFin wraps LLMs in a pipeline explicitly structured to penalize memorization:

- Strategy Code Generator (SCG): Forces models to output executable code mapping market state to strategy, requiring analytic reasoning.

- Retrieval-Augmented Generation (RAG): Structures unstructured data, ensuring dependencies on current (not historical) inputs.

- Monte Carlo Tree Search (MCTS): Used to stochastically explore strategy variants on historical data.

- Counterfactual Simulator (CS): Applies adversarial perturbations (noise, trend reversals) to the data and measures the leakage metrics (PC, CI, IDS) to steer the search. Optimization explicitly penalizes strategies that fail to adjust their outputs under counterfactual input scenarios. In an ablation, omitting CS raises PC from 0.3115 to 0.4858 and reduces total return on AAPL from 36.70% to 28.12%, underscoring the pivotal role of counterfactuals in leakage mitigation (Li et al., 9 Oct 2025).
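The penalty idea can be isolated from the rest of the pipeline. The sketch below is a hypothetical simplification, not FactFin's implementation: it replaces MCTS with an exhaustive ranking of a few candidate strategies and assumes a linear objective of total return minus a weight `lam` times the average of PC and CI reported by a counterfactual simulator. The weight and all numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    total_return: float  # backtest total return (fraction)
    pc: float            # prediction consistency under counterfactuals
    ci: float            # confidence invariance under counterfactuals


def penalized_score(c: Candidate, lam: float) -> float:
    """Return minus a leakage penalty; input-invariant (memorizing) strategies score lower."""
    return c.total_return - lam * 0.5 * (c.pc + c.ci)


def select(candidates, lam=0.2):
    return max(candidates, key=lambda c: penalized_score(c, lam))


candidates = [
    Candidate("A", total_return=0.40, pc=0.9, ci=0.9),  # high return, ignores its inputs
    Candidate("B", total_return=0.30, pc=0.3, ci=0.3),  # lower return, input-sensitive
]
print("unpenalized pick:", select(candidates, lam=0.0).name)
print("penalized pick:  ", select(candidates, lam=0.2).name)
```

With no penalty the higher backtest return wins; once invariance is penalized, the input-sensitive strategy is preferred.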

5. Empirical Evidence and Benchmarking

The necessity of leakage-robust benchmarks is illustrated by FinLake-Bench, which uses knowledge cutoff–aligned question sets to distinguish memorized performance from genuine inference. Weighted accuracy on this benchmark reflects only those capabilities that could plausibly be used live, exposing leakage when near-perfect scores arise for periods spanned by the model’s pretraining (Li et al., 9 Oct 2025).
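The core idea of cutoff alignment can be sketched without reproducing FinLake-Bench itself, whose question format and weighting scheme are not described here. The code below assumes a hypothetical list of graded probe answers, each tagged with the market date the question refers to, and compares accuracy inside and outside the model's pretraining window. Near-perfect accuracy inside the window combined with a sharp drop outside it is the memorization signature.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ProbeResult:
    asked_about: date  # market date the question refers to
    correct: bool      # whether the model's answer was graded correct


def _accuracy(flags):
    return sum(flags) / len(flags) if flags else float("nan")


def accuracy_by_cutoff(results, cutoff: date):
    """Accuracy on questions inside vs. outside the pretraining window."""
    inside = [r.correct for r in results if r.asked_about <= cutoff]
    outside = [r.correct for r in results if r.asked_about > cutoff]
    return _accuracy(inside), _accuracy(outside)


# Made-up grading results around a hypothetical cutoff of 2023-12-31.
results = [ProbeResult(date(2023, 6, d), True) for d in (1, 2, 5, 6)] + [
    ProbeResult(date(2024, 3, 1), True),
    ProbeResult(date(2024, 3, 4), False),
    ProbeResult(date(2024, 3, 5), True),
    ProbeResult(date(2024, 3, 6), False),
]
inside_acc, outside_acc = accuracy_by_cutoff(results, date(2023, 12, 31))
print(f"inside window: {inside_acc:.2f}, outside window: {outside_acc:.2f}")
```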

Out-of-sample backtests reveal that naive LLM agents’ Sharpe ratios decay by over 50% once evaluated outside their pretraining window. FactFin, by contrast, achieves superior and stable post-cutoff performance (e.g., a total return (TR) of 36.70% and a Sharpe ratio (SR) of 1.22 on AAPL), while also halving PC and CI and doubling IDS relative to the strongest baseline (Li et al., 9 Oct 2025).
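A temporal-segmentation check splits one backtest at the model's knowledge cutoff and compares the two parts. The sketch assumes daily strategy returns tagged with dates and a hypothetical cutoff date; it reports the pre-cutoff Sharpe ratio, the post-cutoff Sharpe ratio, and the percentage drop, the kind of decay figure reported above.

```python
from datetime import date

import numpy as np


def sharpe(returns: np.ndarray) -> float:
    """Per-period Sharpe ratio (not annualized)."""
    sd = returns.std(ddof=1)
    return float(returns.mean() / sd) if sd > 0 else 0.0


def cutoff_sharpe_drop(dates, returns, cutoff: date):
    """Sharpe ratio before/after a knowledge cutoff and the relative drop in percent."""
    d = np.array(dates, dtype="datetime64[D]")
    r = np.asarray(returns, dtype=float)
    inside = d <= np.datetime64(cutoff)  # period the model may have memorized
    sr_pre, sr_post = sharpe(r[inside]), sharpe(r[~inside])
    drop_pct = 100.0 * (sr_pre - sr_post) / abs(sr_pre) if sr_pre != 0 else float("nan")
    return sr_pre, sr_post, drop_pct


# Hypothetical daily returns around a hypothetical cutoff of 2023-12-31.
dates = [date(2023, 12, d) for d in (27, 28, 29)] + [date(2024, 1, d) for d in (2, 3, 4)]
returns = [0.01, 0.02, 0.03, 0.00, 0.01, 0.02]
print(cutoff_sharpe_drop(dates, returns, cutoff=date(2023, 12, 31)))
```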

6. Broader Implications and Practical Guidance

The systematic leakage uncovered in both classical and LLM-based frameworks demonstrates that naive backtesting can devolve into “data archaeology,” rewarding strategies finely tuned to in-sample idiosyncrasies or even explicit knowledge of future outcomes. Robust backtesting now requires:

- Treating LLMs as strategy generators rather than direct predictors,

- Structuring input data so that every feature used in a decision was observable on or before the evaluation date,

- Penalty-driven optimization that discourages invariance to input variation, and

- Counterfactual and adversarial evaluation to stress-test the causal validity of learned policies (Carr et al., 2014; Li et al., 9 Oct 2025).
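The point-in-time requirement can be enforced at the data-access layer. The sketch below assumes each record carries the date it became publicly available (a hypothetical `published` field, distinct from the period it describes) and returns the latest record published on or before the evaluation date, so a backtest step cannot read a value from its own future.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Record:
    published: date  # when the value became publicly available (not the period it covers)
    value: float


def as_of(records, eval_date: date):
    """Latest record published on or before eval_date; records must be sorted by `published`."""
    dates = [r.published for r in records]
    k = bisect_right(dates, eval_date)  # number of records with published <= eval_date
    return records[k - 1] if k else None


# Hypothetical quarterly figures with their release dates.
earnings = [Record(date(2024, 1, 25), 1.00), Record(date(2024, 4, 25), 1.20)]
print(as_of(earnings, date(2024, 4, 24)))  # April release not yet visible
print(as_of(earnings, date(2024, 4, 25)))  # visible on its release date
print(as_of(earnings, date(2024, 1, 1)))   # nothing published yet
```

Counting same-day releases as available matches the "on or before" rule; with intraday data, whether a same-day value is usable depends on when in the day the decision is made.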

A plausible implication is that leakage-aware design—combining model-driven simulation, counterfactual optimization, and explicit benchmarking—constitutes a necessary standard for deploying reliable financial agents that generalize beyond historical data.