LLM-Empowered Knowledge Graphs

- LLM-Empowered Knowledge Graph Construction is a paradigm shift that integrates large language models into traditional KG pipelines, enabling automated extraction and dynamic schema evolution.

- It leverages both schema-based methods to refine pre-defined ontologies and schema-free techniques to autonomously discover entities and relations, resulting in improved precision and scalability.

- Key challenges include mitigating error propagation and achieving consistent normalization, which are critical for balancing open discovery with logical rigor in evolving domains.

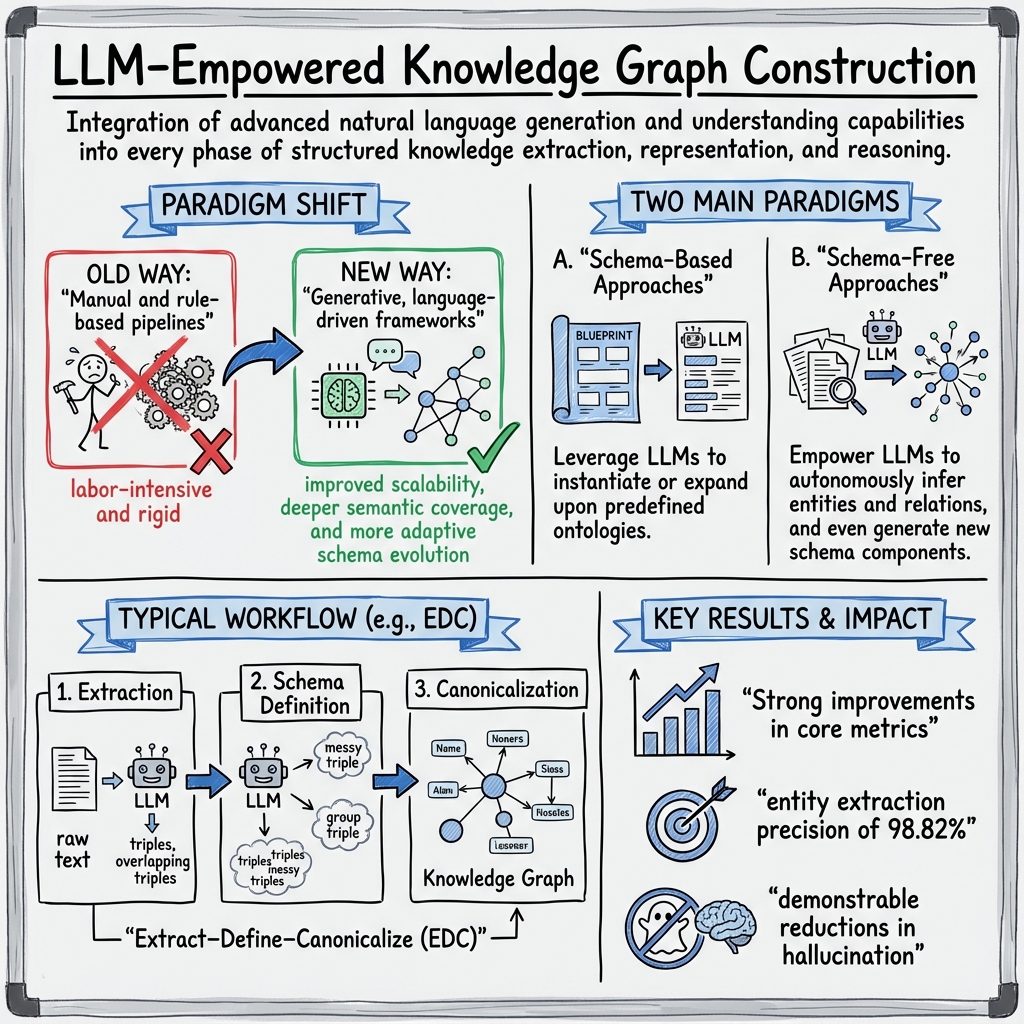

LLM-empowered knowledge graph (KG) construction refers to the integration of advanced natural language generation and understanding capabilities into every phase of structured knowledge extraction, representation, and reasoning. This paradigm shift moves KG construction from manual and rule-based pipelines to generative, language-driven frameworks that promise improved scalability, deeper semantic coverage, and more adaptive schema evolution. The following sections present a synthesized account of the principles, technical methodologies, representative frameworks, empirical results, and emerging trends that define this field.

1. Transformation of Classical Knowledge Graph Construction

Traditional KG construction is organized into a three-layered pipeline:

- Ontology Engineering: Human experts design domain ontologies specifying classes, relations, and constraints using tools and methodologies such as Protégé and METHONTOLOGY. While ensuring logical soundness, this process is labor-intensive and rigid, presenting scalability challenges in dynamic or open-ended domains (Bian, 23 Oct 2025).

- Knowledge Extraction: Early approaches used rule-based and statistical pattern matching. Neural techniques (e.g., BiLSTM-CRF, Transformers) later increased automation but suffered from limited generalization and data sparsity.

- Knowledge Fusion: This final stage aligns and integrates heterogeneous sources, employing ontology-based alignment and embedding-based fusion, but remains vulnerable to error propagation.

The limitations inherent in this process—manual effort, brittle schema, and fragmented pipelines—set the stage for LLM-driven approaches, which leverage language generation and understanding for automation, semantic consistency, and adaptability (Bian, 23 Oct 2025).

2. LLM-Driven Paradigms: Schema-Based and Schema-Free Approaches

LLM-empowered KG construction is characterized by two complementary paradigms (Bian, 23 Oct 2025):

A. Schema-Based Approaches

- Leverage LLMs to instantiate or expand upon predefined ontologies. Extraction is closely guided by explicit schemas, which may originate from expert-designed frameworks or from formalized Competency Questions (CQs) provided as input (Kommineni et al., 2024, Feng et al., 2024).

- Representative techniques—such as “CQbyCQ” or ontology-grounded pipelines—use multi-stage prompting so that extracted triples and ontological definitions remain logically consistent and normalized. Relations and entity types are aligned to external knowledge bases (e.g., Wikidata) via vector similarity and LLM validation, producing machine-interpretable, interoperable KGs (Feng et al., 2024).
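The schema-guided filtering described above can be sketched as follows. This is a minimal illustration, not the method of any cited paper: the toy ontology, the entity typing table, and the `validate` and `filter_triples` helpers are all hypothetical, and the candidate triples are hard-coded where a real pipeline would take them from an LLM. Alignment to an external knowledge base such as Wikidata is not covered here.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RelationSpec:
    subject_type: str  # type the subject must have (the relation's domain)
    object_type: str   # type the object must have (the relation's range)

# Hypothetical toy ontology; a real one might come from OWL or competency questions.
ONTOLOGY = {
    "worksFor": RelationSpec("Person", "Organization"),
    "locatedIn": RelationSpec("Organization", "City"),
}

# Hypothetical entity typing, standing in for an LLM or NER typing step.
ENTITY_TYPES = {"Ada": "Person", "Acme": "Organization", "Berlin": "City"}

def validate(triple):
    """Return True if the relation is in the schema and both argument types match."""
    subj, rel, obj = triple
    spec = ONTOLOGY.get(rel)
    if spec is None:
        return False
    return (ENTITY_TYPES.get(subj) == spec.subject_type
            and ENTITY_TYPES.get(obj) == spec.object_type)

def filter_triples(candidates):
    """Keep only the candidate triples that conform to the ontology."""
    return [t for t in candidates if validate(t)]

# Candidate triples standing in for raw LLM output.
candidates = [
    ("Ada", "worksFor", "Acme"),
    ("Acme", "locatedIn", "Berlin"),
    ("Berlin", "worksFor", "Ada"),  # type violation
    ("Ada", "friendOf", "Acme"),    # relation not in the schema
]
accepted = filter_triples(candidates)
print(accepted)
```

Rejecting out-of-schema triples at this point is what keeps the resulting graph consistent with the predefined ontology, at the cost of discarding relations the schema did not anticipate.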

B. Schema-Free Approaches

- Emphasize flexibility and open-domain discovery by empowering LLMs to autonomously infer entities and relations, and even generate new schema components from input text (Sun et al., 30 May 2025, Zhang et al., 2024).

- Open Information Extraction (OIE) and staged pipelines such as Extract–Define–Canonicalize (EDC) remove schema limitations by decoupling raw extraction from schema application, enabling automatic post-hoc canonicalization and redundancy removal (Zhang et al., 2024). Self-canonicalization and schema inference are applied to abstract and cluster similar relations and entities, with LLMs generating definitions and verifying alignments through semantic similarity search.

- This paradigm is often essential for rapidly evolving domains, facilitating adaptability and open-ended knowledge discovery.
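The canonicalization step of a staged, schema-free pipeline can be sketched as below. This is an illustration under stated assumptions rather than the EDC implementation: token-level Jaccard overlap stands in for embedding-based semantic similarity, the LLM verification step is omitted, and the relation phrases, definitions, threshold, and function names are hypothetical.

```python
def tokens(text):
    """Lowercased whitespace tokens of a definition."""
    return set(text.lower().split())

def jaccard(a, b):
    """Token overlap between two definitions (stand-in for embedding similarity)."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0

def canonicalize(extracted, threshold=0.5):
    """Map each extracted relation phrase to a canonical relation.

    extracted: list of (relation_phrase, definition) pairs, in extraction order.
    A phrase is merged into the most similar existing canonical relation when
    the similarity of their definitions reaches the threshold; otherwise the
    phrase becomes a new canonical relation.
    """
    schema = {}   # canonical relation -> definition
    mapping = {}  # extracted phrase -> canonical relation
    for phrase, definition in extracted:
        best, best_score = None, 0.0
        for canon, canon_def in schema.items():
            score = jaccard(definition, canon_def)
            if score > best_score:
                best, best_score = canon, score
        if best is not None and best_score >= threshold:
            mapping[phrase] = best
        else:
            schema[phrase] = definition
            mapping[phrase] = phrase
    return mapping, schema

# Hypothetical relation phrases with definitions standing in for LLM output.
extracted = [
    ("born in", "the place where a person was born"),
    ("birthplace", "the place where a person was born or originated"),
    ("works at", "the organization that employs a person"),
]
mapping, schema = canonicalize(extracted)
print(mapping)
```

Here the schema grows from the data instead of being fixed in advance, which is the decoupling of extraction from schema application described above.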

3. Technical Frameworks and Workflow Architectures

Numerous technical frameworks have emerged, each embodying distinct combinations of LLM prompting, modular design, and semantic reasoning:

| Framework/Method | Core Components / Workflow | Key Innovations |
|---|---|---|
| REBEL, ChatGPT pipelines | Joint entity/relation extraction, ontology creation by prompting | End-to-end triplet extraction, automatic ontology generation (Trajanoska et al., 2023) |
| Iterative Zero-Shot Prompting (Carta et al., 2023) | Multi-stage LLM prompting, candidate extraction, clustering | Zero-shot, external-knowledge-agnostic scalability |
| EDC (Extract-Define-Canonicalize) (Zhang et al., 2024) | Extraction, schema definition, canonicalization, refinement | Decouples schema handling, enables self-canonicalization, vector search |
| Cross-Data/E-OED (Bui et al., 2024) | Entity discovery via embedding clustering, relation mapping, RAG for QA | Handles cross-source, low-resource domain challenges |
| Ontology-Grounded LLM Construction (Feng et al., 2024) | CQ generation, relation matching to Wikidata, OWL/Turtle output | High interpretability, interoperability, minimal human input |
| GKG-LLM (Zhang et al., 14 Mar 2025) | Curriculum learning, unified seq2seq instruction, LoRA+ fine-tuning | Unified handling of KG, EKG, CKG; robust generalization |
| LKD-KGC (Sun et al., 30 May 2025) | Knowledge dependency parsing, autoregressive schema, unsupervised extraction | Cross-document entity schema inference, clustering-driven deduplication |
| AttacKG+ (Zhang et al., 2024) | Modular LLM pipeline: rewriting, event parsing, tactic labeling, summarization | Multi-layered schema for event (cyberattack) graphs |
| OL-KGC (Guo et al., 28 Jul 2025) | Neural-perceptual structural embeddings + automated ontology extraction | Ontology-to-text prompts, logical rule integration |
| AGENTiGraph (Zhao et al., 5 Aug 2025) | Multi-agent NLU, NER/RE, task planning, graph update via LLM | Multi-turn, intent-driven enterprise KG management |

This modularization underpins what may be called multi-stage LLM KGC: workflows designed to exploit LLM semantic understanding in task-specialized subcomponents.
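The modular, multi-stage structure shared by these frameworks can be sketched as a chain of stage functions over a shared state. This is a hypothetical skeleton, not the architecture of any framework in the table: the stage names are illustrative, and simple string processing stands in for the LLM calls each stage would make in practice.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Stage = Callable[[State], State]

def extract(state: State) -> State:
    # Stand-in for an LLM extraction prompt: parse "subject | relation | object" lines.
    triples = []
    for line in str(state["text"]).splitlines():
        parts = [p.strip() for p in line.split("|")]
        if len(parts) == 3 and all(parts):
            triples.append(tuple(parts))
    return {**state, "triples": triples}

def normalize(state: State) -> State:
    # Stand-in for canonicalization: lowercase relation names, join words with "_".
    triples = [(s, "_".join(r.lower().split()), o) for s, r, o in state["triples"]]
    return {**state, "triples": triples}

def deduplicate(state: State) -> State:
    # dict.fromkeys keeps the first occurrence of each triple, in order.
    return {**state, "triples": list(dict.fromkeys(state["triples"]))}

def run_pipeline(text: str, stages: List[Stage]) -> State:
    """Run each stage in turn, passing the accumulated state along."""
    state: State = {"text": text}
    for stage in stages:
        state = stage(state)
    return state

result = run_pipeline(
    "Ada | Works For | Acme\nAda | works for | Acme\nAcme | Located In | Berlin",
    [extract, normalize, deduplicate],
)
print(result["triples"])
```

Because every stage has the same signature, stages can be swapped, reordered, or evaluated in isolation, which is the practical benefit of task-specialized subcomponents.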

4. Empirical Results and Performance Analysis

On standard semantic extraction and KG completion tasks, the cited papers report consistent improvements in core metrics:

- Entity/Relation Extraction: LLM-based approaches have been reported to reach entity extraction precision of 98.82%, recall of 93.18%, and F1 of 95.92% on expert-annotated datasets; relation extraction precision frequently exceeds 75%, with qualitative analyses highlighting enhanced graph structure connectivity (Carta et al., 2023, Trajanoska et al., 2023).

- Completion and Canonicalization: OL-KGC integration of neural and ontological features leads to state-of-the-art accuracy—e.g., up to 2.25% improvement over strong baselines on WN18RR and demonstrable reductions in hallucination (Guo et al., 28 Jul 2025).

- Scalability and Domain Adaptability: Unsupervised frameworks such as LKD-KGC outperform baselines by 10–20% in precision and recall across technical domains without pre-existing schemas, while large-scale academic KG construction in the NLP domain demonstrates robust few-shot extraction at scale (620K entities, >2M relations) (Lan et al., 20 Feb 2025, Sun et al., 30 May 2025).

- Knowledge Retrieval and QA: Integration of LLM-generated KGs with retrieval augmentation (e.g., GraphRAG in OpenTCM (He et al., 28 Apr 2025)) and community summary mechanisms (NLP-AKG) leads to increased QA F1-scores, improved trustworthiness, and, in domain-specific trials, expert ratings approaching or exceeding state-of-the-art baselines.

Empirical trends suggest schema post-processing, LLM-driven disambiguation, and the use of retrieval/augmentation loops (iterative feedback, vector search, multi-agent coordination) significantly enhance both the utility and correctness of constructed KGs.
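For reference, the extraction metrics quoted above follow the standard definitions of precision, recall, and F1 over sets of predicted and gold items. The sketch below uses hypothetical toy sets, and it also checks that the reported precision (98.82%) and recall (93.18%) are consistent with the reported F1 (95.92%).

```python
def prf1(predicted, gold):
    """Precision, recall, and F1 of a predicted set against a gold set."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    return precision, recall, f1_score(precision, recall)

def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0

# Hypothetical toy example: 3 of 4 predictions are correct, 3 of 5 gold items found.
p, r, f = prf1({"a", "b", "c", "d"}, {"a", "b", "c", "e", "f"})
print(round(p, 4), round(r, 4), round(f, 4))

# Consistency check on the figures quoted in the text.
reported_f1 = f1_score(0.9882, 0.9318)
print(round(reported_f1 * 100, 2))  # 95.92
```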

5. Limitations, Challenges, and Technical Open Problems

Key limitations highlighted in the synthesis are:

- Scalability: Even though LLMs generalize well, scaling to broad domains or long documents can stress context window limits, especially for schema-based extraction; modular processes such as EDC (Zhang et al., 2024) and autoregressive schema evolution (Sun et al., 30 May 2025) serve to mitigate but not fully eliminate these issues.

- Normalization and Consistency: Variability in output due to prompt sensitivity, lack of consistent entity/relation naming, and over-/under-generalization during splitting and abstraction stages require post-hoc canonicalization and explicit clustering (Chen et al., 13 Oct 2025).

- Dependency on Human Expertise: While several frameworks achieve near zero-shot or unsupervised operation, human-in-the-loop evaluation and curation remain essential—particularly in evaluating accuracy, completeness, and semantic faithfulness (Kommineni et al., 2024).

- Error Propagation: Errors introduced during early extraction (especially in open extraction stages) may persist and be magnified in fusion, schema construction, or downstream inference (Bian, 23 Oct 2025).

- Inference Reliability and Evaluation: Semantic correctness can be compromised by LLM hallucinations, spurious triple generation, or inconsistent reasoning—prompting the integration of KGs as constraint-based “fact memories” for later LLM reasoning layers (Feng et al., 2024).

The trade-off between flexibility (schema-free approaches) and logical consistency (schema-driven pipelines) is a recurring technical tension.
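The error propagation concern can be made concrete with a simple calculation. The sketch below assumes, purely for illustration, that each pipeline stage preserves a correct triple independently with a fixed probability; the stage names and accuracy values are hypothetical and are not taken from any cited evaluation.

```python
from math import prod

def end_to_end_accuracy(stage_accuracies):
    """Probability that a triple passes every stage uncorrupted.

    Assumes stage errors are independent, which is a simplification:
    real pipelines can have correlated or compounding errors.
    """
    return prod(stage_accuracies)

# Hypothetical per-stage accuracies, for illustration only.
stages = {"extraction": 0.95, "canonicalization": 0.90, "fusion": 0.92}
overall = end_to_end_accuracy(stages.values())
print(f"{overall:.4f}")  # 0.7866
```

Even with every stage at 90% or better, fewer than four in five triples survive intact under this assumption, which is why early-stage errors weigh so heavily on the final graph.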

6. Emerging Trends and Future Directions

Three major research frontiers are identified:

- KG-Based Reasoning for LLMs: Embedding constructed KGs as dynamic, factual memory substrates for LLM-based agents is anticipated to enhance inference, reduce spurious reasoning, and enable explainable, causal inference. Graph-augmented reasoning architectures (e.g., KG-RAR (Wu et al., 3 Mar 2025)) signal movement towards interactive, interpretable LLM systems.

- Autonomous and Lifelong Knowledge Memory: The vision is of agentic systems in which KGs serve as continuously updated, temporally aware, and multimodal long-term memory, as in the A-MEM and Zep frameworks cited in (Bian, 23 Oct 2025). This supports autonomous, real-time adaptation in evolving domains.

- Multimodal and Cross-Domain KGC: Extending KG construction to integrate non-textual modalities (images, audio, video) is suggested, drawing on work in medical imaging (KG-MRI), vision–language (VaLiK), and other domains that require fusion of symbolic and continuous semantic understanding.

A plausible implication is that KGs will increasingly underpin cognitive middle layers in complex retrieval-augmented generation (RAG) systems, supporting not merely fact lookup but contextual retrieval, planning, query decomposition, and multi-hop reasoning (Bian, 23 Oct 2025, He et al., 28 Apr 2025).
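Multi-hop reasoning over a KG, as mentioned above, can be illustrated with a breadth-first search over triples. This is a minimal sketch with hypothetical data and function names; it is not the retrieval mechanism of any cited system, which would typically combine graph traversal with LLM-driven query decomposition.

```python
from collections import defaultdict, deque

def build_index(triples):
    """Index outgoing edges by subject: subject -> [(relation, object), ...]."""
    index = defaultdict(list)
    for subj, rel, obj in triples:
        index[subj].append((rel, obj))
    return index

def find_path(triples, start, goal, max_hops=3):
    """Return the shortest chain of triples from start to goal, or None.

    Breadth-first search guarantees the first path found uses the fewest hops.
    """
    index = build_index(triples)
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) >= max_hops:
            continue  # do not expand beyond the hop budget
        for rel, nxt in index.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None

# Hypothetical toy graph.
kg = [
    ("Ada", "worksFor", "Acme"),
    ("Acme", "locatedIn", "Berlin"),
    ("Berlin", "capitalOf", "Germany"),
]
path = find_path(kg, "Ada", "Germany")
print(path)
```

The returned chain of triples doubles as an explanation of the answer, which is one reason graph-backed retrieval is attractive for interpretable reasoning.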

7. Impact and Interplay: Symbolic and Neural Integration

LLMs now empower KG construction at several levels:

- Semantic Unification and Schema Induction: Language-driven extraction and module orchestration, with built-in adaptability, contextual memory, and stepwise semantic refinement.

- Open Discovery vs. Logical Rigor: Balancing exploratory, schema-free extraction with post-processing enforcing consistency, normalization, and logical constraint satisfaction.

- Bidirectional Synergy: LLMs build KGs, which then in turn are used to guide, constrain, and ground LLM outputs in subsequent reasoning, QA, and retrieval tasks (Bian, 23 Oct 2025, Feng et al., 2024).
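The grounding direction of this synergy, in which a KG constrains LLM outputs, can be sketched as a claim checker. The example is hypothetical throughout: the claims stand in for triples parsed from an LLM answer, and the notion of a functional relation (at most one object per subject) is an assumption introduced here to detect contradictions.

```python
def check_claims(claims, kg, functional_relations):
    """Label each claimed triple as supported, contradicted, or unverified.

    A claim is contradicted only when its relation is functional and the KG
    records a different object for the same subject and relation.
    """
    facts = set(kg)
    known = {(s, r): o for s, r, o in facts if r in functional_relations}
    verdicts = {}
    for claim in claims:
        subj, rel, obj = claim
        if claim in facts:
            verdicts[claim] = "supported"
        elif (subj, rel) in known and known[(subj, rel)] != obj:
            verdicts[claim] = "contradicted"
        else:
            verdicts[claim] = "unverified"
    return verdicts

# Hypothetical KG and claims.
kg = [("Ada", "bornIn", "London"), ("Ada", "worksFor", "Acme")]
claims = [
    ("Ada", "bornIn", "London"),   # present in the KG
    ("Ada", "bornIn", "Paris"),    # conflicts with a functional relation
    ("Ada", "worksFor", "Initech"),  # non-functional relation, cannot be refuted
]
verdicts = check_claims(claims, kg, functional_relations={"bornIn"})
for claim, verdict in verdicts.items():
    print(claim, verdict)
```

Separating "contradicted" from "unverified" matters because an incomplete KG cannot refute a claim simply by lacking it.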

The resulting systems thus bridge traditional symbolic knowledge engineering—characterized by explainability and structure—with neural inference—marked by adaptability, generative power, and semantic depth. This trajectory is expected to result in more adaptive, transparent, and agentic knowledge systems, with broad applications in research, education, healthcare, enterprise analytics, and multimodal AI.

LLM-empowered knowledge graph construction, as defined by recent research (Bian, 23 Oct 2025, Trajanoska et al., 2023, Zhang et al., 2024, Feng et al., 2024, Sun et al., 30 May 2025, He et al., 28 Apr 2025), constitutes a pivotal methodological evolution toward knowledge systems that unify machine-understandable structure, dynamic semantic reasoning, and human-aligned transparency.