LLM-Adaptive Diarization

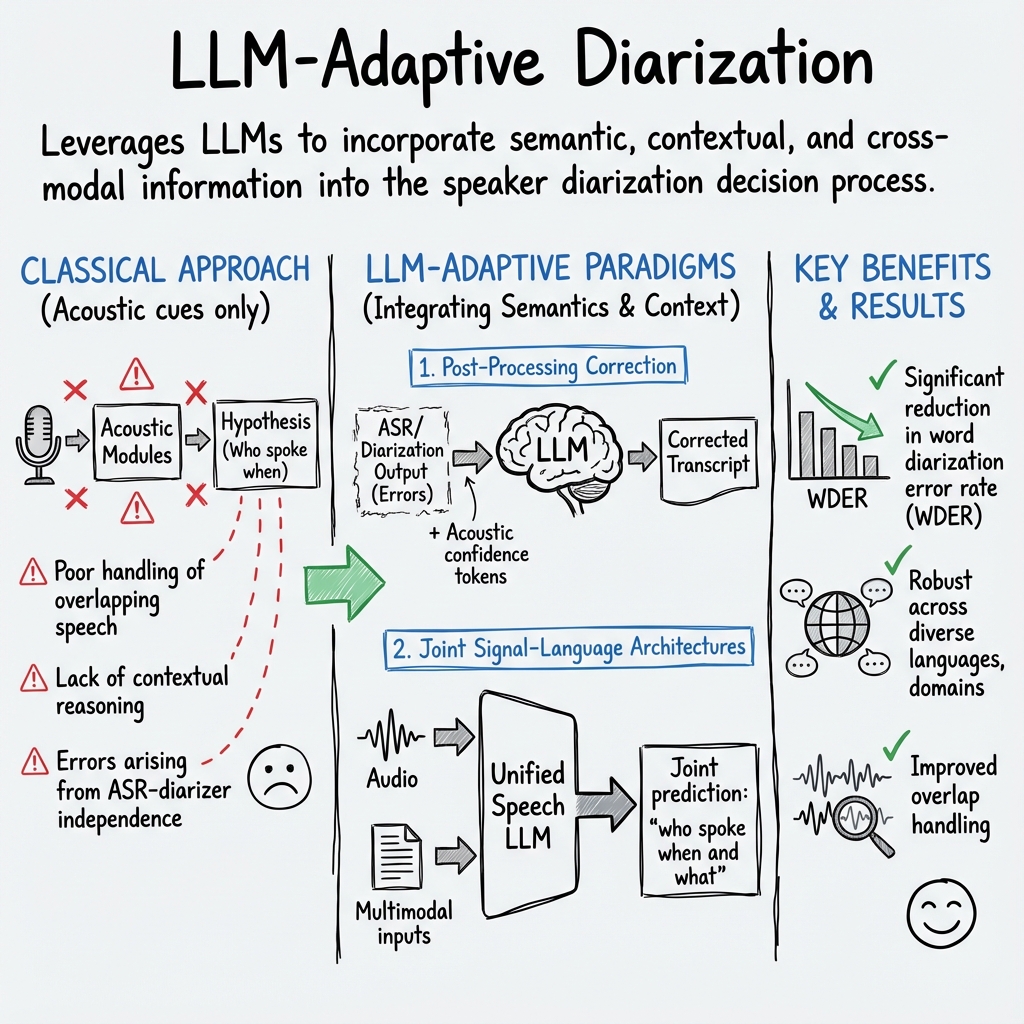

- LLM-Adaptive Diarization is a method that integrates large language models with semantic and acoustic cues to overcome limitations of traditional speaker diarization systems.

- Methodological advances include LLM-informed post-processing, acoustic-conditioned corrections, and joint end-to-end architectures, with reported relative reductions in word diarization error rate (WDER) of up to 55.5% on benchmark datasets.

- These frameworks enable robust handling of overlapping speech, multilingual dialogues, and domain-specific challenges, significantly enhancing diarization performance.

LLM-Adaptive Diarization refers to the class of methodologies and frameworks that leverage LLMs either as core components or as corrective adaptors within speaker diarization pipelines. Unlike classical approaches that operate solely on acoustic cues or rely on modular, cascaded systems, LLM-adaptive diarization explicitly incorporates semantic, contextual, and cross-modal information—often parsed by an LLM—into the diarization decision process. This integration takes forms ranging from LLM-informed post-processing of diarization hypotheses to unified, end-to-end architectures where LLMs jointly condition or control both speaker detection and transcription. The field is motivated by the desire to address limitations in traditional systems, such as poor handling of overlapping speech, lack of contextual reasoning, and errors arising from ASR-diarizer independence, with the aim of delivering robust performance across diverse languages, domains, and application scenarios.

1. Methodological Advances in LLM-Adaptive Diarization

Recent LLM-adaptive diarization frameworks can be organized by the locus and nature of the LLM's involvement:

A. Post-Processing Correction Paradigms:

LLMs, often fine-tuned on diarization-specific prompt/completion pairs, operate as post-processors that correct the speaker labels assigned by upstream diarization and ASR systems. Corrections draw on semantic, discourse, and pragmatic cues in the transcript. DiarizationLM, for example, reports a 55.5% relative reduction in word diarization error rate (WDER) on Fisher and 44.9% on Callhome by serializing the diarization hypothesis into a compact text sequence and having the fine-tuned LLM rewrite it (Wang et al., 2024). The LLM output is then re-aligned to the original transcript with the transcript-preserving speaker transfer (TPST) algorithm, so that only speaker labels change and the recognized words are left intact.
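
The serialize, correct, and re-align loop described above can be illustrated with a minimal sketch. It is not the DiarizationLM implementation: the `<spk:N>` token format and all function names are hypothetical, the LLM completion is hard-coded, and the alignment uses Python's `difflib.SequenceMatcher` as a stand-in for the Levenshtein alignment and speaker-permutation handling of TPST.

```python
from difflib import SequenceMatcher

def to_prompt(words, speakers):
    """Serialize a word-level hypothesis as text with inline speaker tokens."""
    parts, prev = [], None
    for word, spk in zip(words, speakers):
        if spk != prev:                      # emit a token only at speaker changes
            parts.append(f"<spk:{spk}>")
            prev = spk
        parts.append(word)
    return " ".join(parts)

def from_completion(text):
    """Parse LLM output back into parallel word and speaker lists."""
    words, speakers, current = [], [], None
    for tok in text.split():
        if tok.startswith("<spk:") and tok.endswith(">"):
            current = tok[5:-1]
        else:
            words.append(tok)
            speakers.append(current)
    return words, speakers

def transfer_speakers(src_words, src_speakers, tgt_words, tgt_speakers):
    """Copy speakers from source (LLM) to target (original) on matching words.

    Target words are never modified; unmatched target words keep their
    original speaker label.
    """
    out = list(tgt_speakers)
    matcher = SequenceMatcher(a=src_words, b=tgt_words, autojunk=False)
    for block in matcher.get_matching_blocks():
        for k in range(block.size):
            out[block.b + k] = src_speakers[block.a + k]
    return out

# Original hypothesis: the word "i" is wrongly attributed to speaker 1.
hyp_words = "good morning how are you i am fine".split()
hyp_speakers = ["1", "1", "1", "1", "1", "1", "2", "2"]
prompt = to_prompt(hyp_words, hyp_speakers)

# Hypothetical LLM completion: fixes the speaker turn but drops "morning".
completion = "<spk:1> good how are you <spk:2> i am fine"
llm_words, llm_speakers = from_completion(completion)
fixed = transfer_speakers(llm_words, llm_speakers, hyp_words, hyp_speakers)
print(to_prompt(hyp_words, fixed))
```

Because speakers are transferred onto the original word list, the dropped word in the completion does not alter the transcript; only the label of "i" changes.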

B. Acoustic-Conditioned LLM Correction:

A refinement over basic post-processing, these frameworks augment the textual transcript with interpretable acoustic confidence tokens (e.g., “low,” “med,” “high” per word) derived from frame-level speaker posteriors. The SEAL approach, for instance, concatenates these acoustic tokens with the transcript to provide fine-grained confidence traces for the LLM, which then corrects inconsistent speaker tags under constrained decoding rules to prevent hallucination, resulting in 24–43% reductions in speaker error rates on multiple benchmark datasets (Kumar et al., 14 Jan 2025).
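
As a rough illustration of how acoustic confidence tokens might be derived, the sketch below averages the assigned speaker's frame-level posterior over each word and bins the result. The thresholds (0.5 and 0.8), the `<spk:N>` and `<conf:...>` token formats, and the function names are assumptions made for this example; they are not taken from the SEAL paper.

```python
def word_confidence(frame_posteriors, start, end, speaker):
    """Mean posterior of the assigned speaker over the word's frames."""
    frames = frame_posteriors[start:end]
    return sum(frame[speaker] for frame in frames) / len(frames)

def confidence_label(p, low=0.5, high=0.8):
    """Map a posterior to a coarse token (thresholds are illustrative)."""
    if p < low:
        return "low"
    if p < high:
        return "med"
    return "high"

def annotate(words, frame_posteriors):
    """words: list of (text, speaker, start_frame, end_frame) tuples."""
    parts = []
    for text, speaker, start, end in words:
        p = word_confidence(frame_posteriors, start, end, speaker)
        parts.append(f"<spk:{speaker}> {text} <conf:{confidence_label(p)}>")
    return " ".join(parts)

# Four frames, two speakers; each row is [P(spk 0), P(spk 1)].
posteriors = [[0.9, 0.1], [0.8, 0.2], [0.55, 0.45], [0.4, 0.6]]
words = [("hello", 0, 0, 2), ("there", 0, 2, 4)]
annotated = annotate(words, posteriors)
print(annotated)
```

In this toy input the second word is tagged `low`, which is the kind of signal the LLM can use to decide where a speaker label is most likely wrong.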

C. Joint Signal-Language Architectures:

Unified architectures—such as SpeakerLM and the Unified Speech LLM—integrate acoustic encoders, speaker embedding modules, and LLMs into a single sequence modeling pipeline that jointly predicts “who spoke when and what” by generating both speaker tokens and text. These models use multimodal inputs, prompt-based speaker registration, or triplet-style diarization instructions to navigate overlapping and multilingual speaker conditions. Joint optimization and multi-stage progressive training on large-scale SDR data are used to mitigate error propagation and domain transfer issues (Yin et al., 8 Aug 2025, Saengthong et al., 26 Jun 2025).

D. Beam Search and Decoding Integration:

LLM-adaptive methods have also incorporated joint acoustic-lexical beam search. Methods such as the Contextual Beam Search Approach use acoustic model logits and LLM-derived probabilities over speaker-lexical sequence pairs, fusing them in a probabilistic scoring function. Up to 39.8% relative improvement in delta-SA-WER is reported over acoustics-only baselines (Park et al., 2023).
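
The fusion of acoustic and lexical scores can be sketched as a small beam search over speaker assignments for a fixed word sequence. This is a simplified illustration rather than the published algorithm: the fused score is assumed to be `acoustic_logp + beta * lm_logp`, the weight `beta` and beam size are arbitrary, and `toy_lm_logp` is a hand-written stand-in for an LLM that merely prefers speaker changes after a question.

```python
import math

def toy_lm_logp(history, word, speaker):
    """Stand-in for an LLM score over (word, speaker) given the history."""
    if not history:
        return math.log(0.5)
    prev_word, prev_speaker = history[-1]
    p_change = 0.9 if prev_word.endswith("?") else 0.1
    return math.log(p_change if speaker != prev_speaker else 1.0 - p_change)

def beam_search(words, acoustic_logp, lm_logp, beta=1.0, beam_size=3):
    """Return the best (path, score); path is a list of (word, speaker)."""
    beams = [([], 0.0)]
    for i, word in enumerate(words):
        candidates = []
        for history, score in beams:
            for speaker, a_lp in enumerate(acoustic_logp[i]):
                # Fuse the acoustic and lexical evidence in the log domain.
                fused = score + a_lp + beta * lm_logp(history, word, speaker)
                candidates.append((history + [(word, speaker)], fused))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_size]       # prune to the top hypotheses
    return beams[0]

words = ["how", "are", "you?", "fine", "thanks"]
# Per-word speaker probabilities from a hypothetical acoustic diarizer.
probs = [[0.9, 0.1], [0.9, 0.1], [0.8, 0.2], [0.55, 0.45], [0.3, 0.7]]
acoustic_logp = [[math.log(p) for p in row] for row in probs]

best_path, best_score = beam_search(words, acoustic_logp, toy_lm_logp)
fused_speakers = [spk for _, spk in best_path]
acoustic_only = [row.index(max(row)) for row in probs]
print(acoustic_only, fused_speakers)
```

Here the acoustics alone assign "fine" to speaker 0, while the fused score moves it to speaker 1 because the preceding word ends a question.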

2. Core Algorithmic and Training Strategies

| Approach Category | LLM Input Features | Distinctive Training/Inference Approach |
|---|---|---|
| Post-processing | Text-only or text + diarization tags | Prompt-completion fine-tuning; TPST re-alignment |
| Acoustic-conditioned | Text + word-level acoustic confidence labels | Acoustic tokenization + constrained decoding |
| Joint end-to-end | Audio/semantic embeddings + speaker prompts | Multi-stage joint optimization; triplet conditioning |
| Beam search integration | Acoustic log-probs + LLM lexical log-probs | LLM-guided joint scoring in candidate search |

A brief overview of strategies:

- Fine-tuning on domain-specific prompt–completion pairs (e.g., Fisher, Callhome conversation transcripts), sometimes with ensemble models for ASR-agnostic correction (Efstathiadis et al., 2024).

- Permutation-invariant and constrained loss functions to ensure that the transcription remains unaltered while only the diarization decision is updated (Kumar et al., 14 Jan 2025, Wang et al., 2024).

- Structured input representation (e.g., Local Diarization and Recognition Format, triplet enrollment, special speaker tokens) to contextualize speaker turns for the LLM during joint decoding (Saengthong et al., 26 Jun 2025, Lin et al., 6 Jun 2025, Yin et al., 8 Aug 2025).

- Training pipelining and staged freezing/unfreezing (e.g., initial ASR-only training, followed by SDR alignment and full fine-tuning as in SpeakerLM) to enable robust multi-modal optimization (Yin et al., 8 Aug 2025).
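
To make the permutation-invariant idea from the list above concrete, the following sketch computes a frame-level binary cross-entropy under every speaker permutation and keeps the minimum. It is a generic illustration of permutation-invariant training on speaker-activity targets, not the specific loss used in any of the cited systems; the function names and the brute-force search over permutations are assumptions that only suit small speaker counts.

```python
import math
from itertools import permutations

def bce(p, y, eps=1e-7):
    """Binary cross-entropy for one prediction p against a 0/1 target y."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def pit_loss(pred, ref):
    """Minimum mean BCE over all speaker permutations.

    pred, ref: lists of frames, each frame a list with one value per speaker.
    Returns (loss, perm) where perm[s] is the prediction column matched to
    reference speaker s.
    """
    n_frames, n_spk = len(ref), len(ref[0])
    best_loss, best_perm = float("inf"), None
    for perm in permutations(range(n_spk)):
        total = sum(
            bce(pred[t][perm[s]], ref[t][s])
            for t in range(n_frames)
            for s in range(n_spk)
        )
        loss = total / (n_frames * n_spk)
        if loss < best_loss:
            best_loss, best_perm = loss, perm
    return best_loss, best_perm

# The prediction is accurate but uses the opposite speaker order.
ref = [[1, 0], [1, 0], [0, 1]]
pred = [[0.1, 0.9], [0.2, 0.8], [0.9, 0.1]]
loss, perm = pit_loss(pred, ref)
print(round(loss, 4), perm)
```

The loss stays small even though the predicted speaker columns are swapped, which is the property that makes arbitrary speaker label order harmless during training.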

3. Evaluation Metrics and Benchmarking

LLM-adaptive diarization methods have prompted the community to refine diarization metrics beyond the classical diarization error rate (DER). Key metrics in these works include:

- WDER (Word Diarization Error Rate): The proportion of aligned words (correctly recognized or substituted) that are tagged with an incorrect speaker identity (Wang et al., 2024).

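- cpWER (Concatenated Minimum-Permutation WER): Each speaker's utterances are concatenated, WER is computed for every assignment of hypothesis speakers to reference speakers, and the minimum over these permutations is reported (Efstathiadis et al., 2024, Kumar et al., 14 Jan 2025).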

- deltaCP/deltaSA: The gap between cpWER (or speaker-attributed WER) and plain WER, which isolates the diarization-attributable component of transcription error and allows improvement over a baseline diarizer to be measured (Efstathiadis et al., 2024).

- tcpWER/tcpCER (Time-Constrained Minimum-Permutation WER/Character Error Rate): Evaluates both diarization and transcription quality by additionally requiring that matched words be temporally consistent between reference and hypothesis (Saengthong et al., 26 Jun 2025, Lin et al., 6 Jun 2025).

- BER (Balanced Error Rate): Combines duration and segment error at a speaker-weighted level, addressing dominant-duration bias in DER for systems with high speaker or segment count imbalance (Liu et al., 2022).
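
Two of the metrics above can be sketched in a few lines. The cpWER function follows the concatenate-and-permute definition directly; the WDER function is a simplification that assumes the hypothesis words have already been aligned to the reference and the speaker labels already mapped, so it only counts mismatched speaker tags. All function names are illustrative.

```python
from itertools import permutations

def edit_distance(ref, hyp):
    """Word-level Levenshtein distance between two word lists."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,               # deletion
                           cur[j - 1] + 1,            # insertion
                           prev[j - 1] + (r != h)))   # substitution or match
        prev = cur
    return prev[-1]

def cp_wer(ref_by_spk, hyp_by_spk):
    """cpWER: minimum total edit distance over speaker permutations.

    Both arguments map a speaker id to that speaker's concatenated words.
    """
    refs, hyps = list(ref_by_spk.values()), list(hyp_by_spk.values())
    n = max(len(refs), len(hyps))
    refs += [[]] * (n - len(refs))    # pad so both sides have n speakers
    hyps += [[]] * (n - len(hyps))
    n_ref_words = sum(len(r) for r in refs)
    best = min(
        sum(edit_distance(r, h) for r, h in zip(refs, perm))
        for perm in permutations(hyps)
    )
    return best / n_ref_words

def wder(ref_speakers, hyp_speakers):
    """Fraction of aligned words whose speaker tag differs (simplified)."""
    wrong = sum(r != h for r, h in zip(ref_speakers, hyp_speakers))
    return wrong / len(ref_speakers)

ref = {"A": "hello how are you".split(), "B": "i am fine".split()}
hyp = {"1": "hello how are".split(), "2": "you i am fine".split()}
cp = cp_wer(ref, hyp)    # the word "you" is attributed to the wrong speaker
wd = wder(list("AAAABBB"), list("AAABBBB"))
print(round(cp, 4), round(wd, 4))
```

The example shows why the two metrics differ in scale: a single misattributed word costs one deletion and one insertion under cpWER (2/7) but counts once under WDER (1/7).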

Performance improvements are typically reported on datasets such as Fisher, Callhome, RT03-CTS, AliMeeting, LibriMix, and the MLC-SLM challenge corpora, where LLM-adaptive methods outperform the modular and acoustic-only baselines they are compared against.

4. Practical Adaptation Strategies and Robustness

Adaptation and generalizability are critical themes in LLM-Adaptive Diarization:

- ASR-Agnostic Ensemble Correction: Performance degrades when the LLM corrector is applied to transcripts from an ASR system other than the one seen during fine-tuning. To address this, separate correctors are fine-tuned on the outputs of different ASR systems and their weights are merged into a single ensemble model that corrects transcripts regardless of the ASR source, an important step toward practical deployment (Efstathiadis et al., 2024).

- Domain and Registration Condition Adaptivity: Systems such as SpeakerLM implement “No-Regist,” “Match-Regist,” and “Over-Regist” modes, in which varying degrees of speaker information are available, allowing for robust operation in anonymous, personalized, or over-specified settings (Yin et al., 8 Aug 2025).

- Low Overhead Extensions: Sidecar-style architectures with a diarization branch (DB) add diarization capabilities to frozen, pretrained ASR models with fewer than 1k additional parameters and minimal extra compute, which suggests that lightweight plug-in adaptors are feasible even in resource-constrained environments (Meng et al., 2023).

- Error Correction under Imperfect Data: Approaches such as auxiliary VAD-based muting and post-hoc correction using global clustering reconcile local inconsistencies, especially when training or annotation noise is present, as in domain-specific challenges like MLC-SLM (Polok et al., 16 Jun 2025, Saengthong et al., 26 Jun 2025).
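
The weight-merging idea behind the ASR-agnostic ensemble can be illustrated with simple parameter averaging. This is only a sketch under stated assumptions: the cited work does not necessarily use uniform averaging, parameters are represented here as plain lists of floats rather than tensors, and the parameter names and the `merge_weights` helper are hypothetical.

```python
def merge_weights(state_dicts, coeffs=None):
    """Weighted average of several models' parameters.

    state_dicts: list of dicts mapping parameter name -> list of floats.
    coeffs: optional mixing weights; defaults to a uniform average.
    """
    if coeffs is None:
        coeffs = [1.0 / len(state_dicts)] * len(state_dicts)
    if len(coeffs) != len(state_dicts):
        raise ValueError("need one coefficient per model")
    names = state_dicts[0].keys()
    if any(sd.keys() != names for sd in state_dicts):
        raise ValueError("all models must share the same parameter names")
    merged = {}
    for name in names:
        columns = zip(*(sd[name] for sd in state_dicts))
        merged[name] = [
            sum(c * v for c, v in zip(coeffs, values)) for values in columns
        ]
    return merged

# Two hypothetical correctors, each fine-tuned on a different ASR's output.
corrector_asr_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
corrector_asr_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
ensemble = merge_weights([corrector_asr_a, corrector_asr_b])
print(ensemble)
```

Merging in weight space yields a single model, so inference cost stays the same as for one corrector, in contrast to running every corrector and voting on the outputs.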

5. Multilinguality, Overlap Handling, and Overcoming Cascaded System Limitations

LLM-adaptive frameworks have demonstrated strong capability to generalize across:

- Languages and Domains: Unified speech LLM and DiCoW+DiariZen pipelines, with prompt-augmented and token-registered conditioning, handle multilingual two-speaker dialogues and domain transfer without extensive retraining (Saengthong et al., 26 Jun 2025, Polok et al., 16 Jun 2025).

- Overlapping Speech: Hybrid and joint-model systems adaptively select between diarization modules depending on overlap proportion, and end-to-end neural modules exploit explicit overlapping speaker detection and assignment, leveraging global LLM context and speaker embedding fusion (Huang et al., 28 May 2025, Yin et al., 8 Aug 2025, Lin et al., 6 Jun 2025).

- Joint Modeling Benefits: Joint end-to-end optimization not only reduces the traditional “error propagation” from modular separation—with failed diarization degrading subsequent ASR—but also allows integrated models to be trained with task-specific losses balancing transcription and diarization performance (e.g., minimizing Δcp = cpCER–CER) (Yin et al., 8 Aug 2025).

6. Future Directions and Scalability

Several future directions are identified:

- Scaling Model and Data: Larger, more diverse training corpora and larger LLM backbones are being tested to further improve generalizability in complex, noisy, multilingual, and high-overlap conditions (Yin et al., 8 Aug 2025, Saengthong et al., 26 Jun 2025).

- Real-Time and Streaming Integration: Investigating chunked and streaming inference, with cross-chunk context preservation and speaker continuity, to enable real-time transcription with aligned diarization (Lin et al., 6 Jun 2025, Saengthong et al., 26 Jun 2025).

- Extension to Visual and Cross-Modal Cues: BER and related frameworks suggest evaluating multi-modal systems (audio-visual diarization), potentially integrating LLMs that can process both text and visual features (Liu et al., 2022).

- Full Pipeline Unification: Research is trending toward eliminating the need for external clustering or post-alignment modules by integrating global context and alignment directly into the LLM-based workflow (Saengthong et al., 26 Jun 2025, Yin et al., 8 Aug 2025).

7. Implications and Summary

LLM-Adaptive Diarization leverages the representational and contextual strength of LLMs to resolve ambiguities, correct diarization errors in post-processing, and unify multi-modal sequence understanding for “who spoke when and what” prediction. By directly joining semantic, acoustic, and registration cues in highly parameter-efficient or fully end-to-end frameworks, these approaches yield substantial improvement over modular baselines across diverse evaluation conditions, languages, and speaker settings. Ongoing developments in ensemble corrections, multi-stage fine-tuning, and joint model design continue to drive performance and robustness in real-world deployments, supporting broader aims such as accurate meeting transcription, speaker-specific content analysis, and scalable, generalizable dialogue understanding systems.