LLaVA v1.5: Advancing Multimodal Models

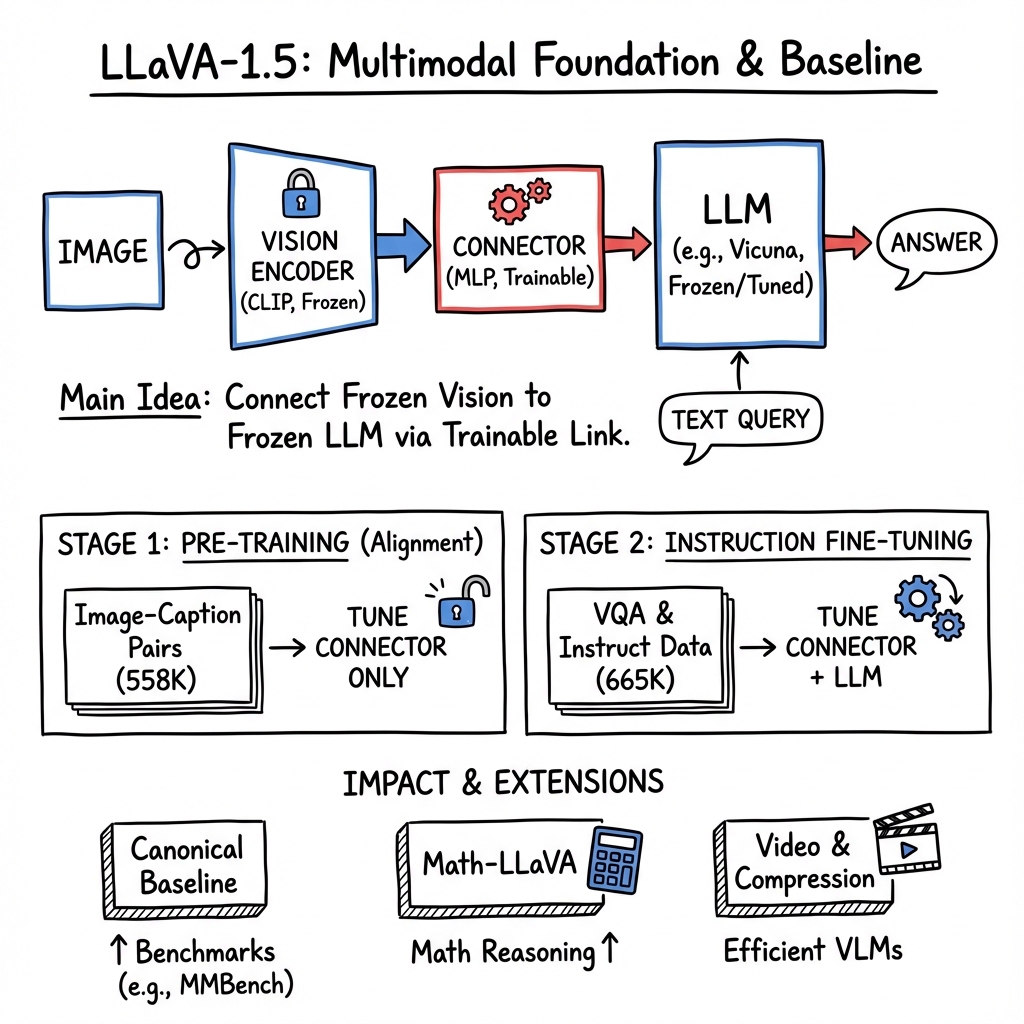

- LLaVA v1.5 advances open-source multimodal modeling by coupling a frozen CLIP vision encoder with an autoregressive LLM. It trains the combination with a two-stage protocol: vision-language alignment followed by visual instruction tuning.

- LLaVA v1.5 is a multimodal vision-language model that uses a lightweight MLP connector to map task-agnostic visual features into the LLM's embedding space. It is trained on roughly 1.2M examples: 558K image-caption pairs plus 665K VQA-style and instruction-following conversations.

- LLaVA v1.5 serves as a canonical baseline for research, inspiring extensions like TG-LLaVA and Math-LLaVA while setting new standards for scalable, efficient multimodal model design.

LLaVA v1.5 is a general-purpose large vision-language model (VLM) that advances the state of the art in open-source visual instruction following and serves as the foundation for a broad family of VLM variants and extensions. It combines a frozen CLIP vision transformer encoder, a lightweight connector (MLP projector), and an autoregressive LLM (e.g., Vicuna-7B/13B). The connector and LLM are trained with a two-stage protocol, vision-language alignment followed by visual instruction tuning, while the vision encoder stays frozen throughout. The LLaVA-1.5 pipeline, together with its evaluation suite and derivatives, has become a canonical baseline for multimodal LLM research and has enabled rapid development of efficient, scalable, and high-performing VLMs and video LLMs.

1. Baseline Architecture

LLaVA v1.5 utilizes the following principal components:

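- Vision Encoder ($E_v$): A frozen CLIP ViT-L/14 at 336 px resolution that splits an RGB image $X_v$ into a $24 \times 24$ grid of $14 \times 14$ patches and encodes each patch as a $d_v$-dimensional vector ($d_v = 1024$), giving $Z_v = E_v(X_v) \in \mathbb{R}^{N \times d_v}$ with $N = 576$.

- Connector ($C$): A two-layer MLP with GELU activation maps CLIP's visual features into the LLM's embedding space: $H_v = C(Z_v) = \mathrm{GELU}(Z_v W_1 + b_1)\,W_2 + b_2 \in \mathbb{R}^{N \times d}$, where $d$ is the LLM's hidden size.

- LLM ($L$): An autoregressive LLM (e.g., Vicuna-7B or 13B) receives the visual tokens $H_v$ concatenated with the embeddings of the tokenized text instruction $X_q$ (e.g., a user query) and predicts the target answer $X_a = (x_1, \dots, x_T)$ token by token: $p(X_a \mid X_v, X_q) = \prod_{i=1}^{T} p_\theta(x_i \mid X_v, X_q, X_{a,<i})$.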

In this pipeline, the vision encoder operates fully independently of the linguistic context, resulting in visual features that are "task-agnostic" until fusion within the LLM (Yan et al., 15 Sep 2024).
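The shapes flowing through this pipeline can be sketched in plain Python. This is a minimal illustration under stated assumptions: the toy dimensions, the random initialization, and the helper names (`num_patch_tokens`, `init_layer`, `mlp_connector`) are hypothetical. Only the token count (576 for a 336 px image with 14 px patches) and the Linear, GELU, Linear layout of the connector mirror the description above; a real implementation would use a tensor library and pretrained weights.

```python
import math
import random


def num_patch_tokens(image_size: int = 336, patch_size: int = 14) -> int:
    """Number of patch embeddings for a square image (the CLS token is not counted)."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    side = image_size // patch_size  # 336 // 14 = 24 patches per side
    return side * side  # 24 * 24 = 576 visual tokens


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(x, weight, bias):
    """Affine map y = x W + b for one row vector x (weight has shape in_dim x out_dim)."""
    return [sum(xi * weight[i][j] for i, xi in enumerate(x)) + bias[j] for j in range(len(bias))]


def init_layer(in_dim, out_dim, rng):
    """Hypothetical random initialization, used only to make the sketch runnable."""
    weight = [[rng.gauss(0.0, 0.02) for _ in range(out_dim)] for _ in range(in_dim)]
    return weight, [0.0] * out_dim


def mlp_connector(z_v, w1, b1, w2, b2):
    """Two-layer MLP connector C: Linear -> GELU -> Linear, applied to each visual token."""
    return [linear([gelu(h) for h in linear(z, w1, b1)], w2, b2) for z in z_v]


rng = random.Random(0)
d_v, d_llm = 8, 16  # toy sizes; in LLaVA-1.5, d_v = 1024 and d = 4096 (7B) or 5120 (13B)
n_tokens = 4        # toy sequence; a 336 px image actually yields num_patch_tokens() = 576
z_v = [[rng.gauss(0.0, 1.0) for _ in range(d_v)] for _ in range(n_tokens)]  # stands in for E_v(X_v)
w1, b1 = init_layer(d_v, d_llm, rng)
w2, b2 = init_layer(d_llm, d_llm, rng)
h_v = mlp_connector(z_v, w1, b1, w2, b2)  # H_v: one d_llm-dim vector per visual token

text_embeds = [[0.0] * d_llm for _ in range(3)]  # placeholder embeddings for 3 text tokens
llm_input = h_v + text_embeds  # concatenate along the sequence axis before the LLM
print(num_patch_tokens(), len(h_v), len(h_v[0]), len(llm_input))  # 576 4 16 7
```

Because the connector acts on each visual token independently, changing the number of visual tokens only changes the sequence length the LLM sees, not the connector's parameters.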

2. Training Regimen and Data

LLaVA-1.5 standardizes a scalable two-phase supervised training protocol:

- Pre-training (vision–language alignment):

  - Data: 558K open-source image–caption pairs.

  - Trainable modules: connector only; the vision encoder and LLM stay frozen.

  - Optimizer: AdamW, batch size 256, learning rate 1e-3, cosine decay, warmup ratio 0.03.

  - Infrastructure: DeepSpeed ZeRO Stage 2 for distributed memory efficiency.

  - Loss: Standard autoregressive cross-entropy.

- Instruction fine-tuning:

  - Data: 665K VQA-style and instruction-following conversations (LLaVA-665k), comprising subtasks such as TextVQA, COCO Captioning, GQA, OCR QA, Visual Grounding, and TQA.

  - Trainable modules: connector and LLM; the vision encoder stays frozen.

  - Hyperparameters: batch size 128, learning rate 2e-5, one epoch.

  - Infrastructure: DeepSpeed ZeRO Stage 3.

This recipe supports robust generalization over a suite of instruction-following tasks and allows plug-and-play adaptation via lightweight connector or head replacement (Yan et al., 15 Sep 2024, Liu et al., 10 Jun 2025).
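The two stages can be summarized as configuration objects, together with the warmup-plus-cosine learning-rate schedule listed for pre-training. This is a sketch, not the official training script: the `StageConfig` class and the `total_steps` and `lr_at_step` helpers are hypothetical, the sample counts are the rounded figures from the text, the stage-1 epoch count is assumed, and applying the same schedule to stage 2 is also an assumption. The schedule follows the common convention of linear warmup over `ceil(total_steps * warmup_ratio)` steps followed by half-cosine decay to zero.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class StageConfig:
    """Hypothetical container for one training stage's settings."""
    name: str
    num_samples: int   # rounded dataset size from the text
    batch_size: int
    peak_lr: float
    epochs: int
    trainable: tuple   # modules updated in this stage; the vision encoder is never listed
    zero_stage: int    # DeepSpeed ZeRO stage


# Stage 1 trains only the connector; stage 2 also unfreezes the LLM.
# The stage-1 epoch count (1) is an assumption; the text states it only for stage 2.
PRETRAIN = StageConfig("pretrain", 558_000, 256, 1e-3, 1, ("connector",), 2)
FINETUNE = StageConfig("finetune", 665_000, 128, 2e-5, 1, ("connector", "llm"), 3)


def total_steps(cfg: StageConfig) -> int:
    """Optimizer steps: batches per epoch (last partial batch kept) times epochs."""
    return math.ceil(cfg.num_samples / cfg.batch_size) * cfg.epochs


def lr_at_step(step: int, num_steps: int, peak_lr: float, warmup_ratio: float = 0.03) -> float:
    """Linear warmup to peak_lr, then half-cosine decay to zero at num_steps.

    Using this schedule for stage 2 as well is an assumption of this sketch.
    """
    warmup = math.ceil(num_steps * warmup_ratio)
    if step < warmup:
        return peak_lr * step / max(1, warmup)
    progress = (step - warmup) / max(1, num_steps - warmup)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


for cfg in (PRETRAIN, FINETUNE):
    n = total_steps(cfg)
    print(cfg.name, n, cfg.trainable, lr_at_step(n // 2, n, cfg.peak_lr))
```

Keeping the frozen vision encoder out of every `trainable` tuple is what makes the visual features task-agnostic: only the connector (and, in stage 2, the LLM) adapts to the data.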

3. Model Properties and Extensions

Parameterization and Modality Fusion

LLaVA-1.5 follows a "frozen vision encoder, trained connector, autoregressive decoder" paradigm, in which the LLM decoder is also fine-tuned during instruction tuning. The vision-language interface is efficient and modular: fusion happens early, only at the LLM's input embedding layer, where visual tokens join the text tokens in a single sequence. The base LLaVA-1.5 model introduces no cross-attention layers and no task-specific adaptation in the vision encoder (Yan et al., 15 Sep 2024).
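Fusion at the input embedding layer amounts to splicing the connector outputs into the text embedding sequence, with the training loss restricted to answer tokens. The sketch below illustrates this under assumptions: the placeholder id `IMAGE_PLACEHOLDER`, the helper names, the toy token ids, and the toy embedding function are hypothetical. Masking non-answer positions with the label `-100` follows the common PyTorch convention for ignored targets.

```python
import math

IGNORE_INDEX = -100       # label value excluded from the loss (PyTorch CrossEntropyLoss default)
IMAGE_PLACEHOLDER = -200  # hypothetical token id marking where the visual tokens are inserted


def build_inputs_and_labels(token_ids, answer_mask, embed, visual_embeds):
    """Splice H_v into the text sequence and supervise only the answer tokens.

    token_ids: text token ids containing exactly one IMAGE_PLACEHOLDER.
    answer_mask: True where a token belongs to the answer X_a.
    embed: maps a text token id to its LLM input embedding.
    visual_embeds: connector outputs H_v, one vector per visual token.
    """
    inputs, labels = [], []
    for tok, is_answer in zip(token_ids, answer_mask):
        if tok == IMAGE_PLACEHOLDER:
            inputs.extend(visual_embeds)  # fusion happens here, at the input embedding layer
            labels.extend([IGNORE_INDEX] * len(visual_embeds))  # no loss on visual positions
        else:
            inputs.append(embed(tok))
            labels.append(tok if is_answer else IGNORE_INDEX)  # loss only on answer tokens
    return inputs, labels


def masked_next_token_loss(logits, labels):
    """Mean cross-entropy where position t predicts labels[t + 1]; ignored targets are skipped."""
    total, count = 0.0, 0
    for t in range(len(labels) - 1):
        target = labels[t + 1]
        if target == IGNORE_INDEX:
            continue
        row = logits[t]
        m = max(row)
        log_norm = m + math.log(sum(math.exp(v - m) for v in row))  # stable log-sum-exp
        total += log_norm - row[target]  # -log softmax(row)[target]
        count += 1
    return total / count if count else 0.0


# Toy example: instruction token 1, the image, instruction token 2, answer tokens 3 and 4.
token_ids = [1, IMAGE_PLACEHOLDER, 2, 3, 4]
answer_mask = [False, False, False, True, True]
visual = [[0.5, 0.5]] * 3  # three 2-dim visual tokens
inputs, labels = build_inputs_and_labels(token_ids, answer_mask, lambda t: [float(t), 0.0], visual)
uniform_logits = [[0.0] * 5 for _ in inputs]  # vocabulary of 5 tokens, all equally likely
print(len(inputs), labels)
print(masked_next_token_loss(uniform_logits, labels))  # equals log(5) for uniform predictions
```

Only the two answer positions contribute to the loss, which is the factorization $p(X_a \mid X_v, X_q)$ from the architecture description: the image and instruction are conditioning context, not prediction targets.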

Representative Extensions

Several prominent models use LLaVA v1.5 as a foundation:

- TG-LLaVA: Strengthens the vision–text link by adding learnable text-guided latent embeddings at both the global and local visual feature levels, which focus feature optimization on the instruction and sharpen detail perception; it reports consistent +1.0–2.2% benchmark improvements without extra data (Yan et al., 15 Sep 2024).

- LLaVA-Zip (DFMR): Introduces adaptive visual token compression using per-image feature variability, automatically downsampling visual tokens to fit LLM context budgets and reducing computational cost while yielding higher accuracy under hard token limits (Wang et al., 11 Dec 2024).

- LLaVA-c: Augments LLaVA-1.5 with spectral-aware consolidation (SAC) and unsupervised inquiry regularization (UIR), enabling task-by-task continual learning without catastrophic forgetting and achieving parity or better results compared to multitask joint training (Liu et al., 10 Jun 2025).

- Math-LLaVA: Fine-tunes LLaVA-1.5 on MathV360K, a large dataset combining real and synthesized samples for multimodal mathematical reasoning, raising MathVista accuracy from 27.7% to 46.6% (close to GPT-4V) and improving results on a broad set of math-focused benchmarks (Shi et al., 25 Jun 2024).

- SlowFast-LLaVA-1.5: Extends LLaVA-1.5 to video with a token-efficient two-stream (SlowFast) design that handles 128-frame inputs within about 9K tokens, delivering state-of-the-art long-form video understanding at small model scales (Xu et al., 24 Mar 2025).
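The token arithmetic behind these efficiency-oriented extensions can be made concrete. At 576 tokens per frame, 128 frames would need 128 × 576 = 73,728 visual tokens, roughly eight times the ~9K budget cited for SlowFast-LLaVA-1.5. The sketch below shows one generic way to fit such a budget, uniform average pooling of the 24 × 24 patch grid. It is an illustrative assumption and is neither DFMR's variability-based rule nor SlowFast's two-stream design; the helper names are hypothetical.

```python
def smallest_pool_factor(side: int, budget_per_image: int) -> int:
    """Smallest divisor s of `side` whose pooled grid ((side // s) ** 2 tokens) fits the budget.

    Hypothetical selection rule for illustration; not DFMR's variability-based criterion.
    """
    for s in range(1, side + 1):
        if side % s == 0 and (side // s) ** 2 <= budget_per_image:
            return s
    raise ValueError("budget is smaller than one token per image")


def avg_pool_grid(grid, factor: int):
    """Average-pool a side x side grid of feature vectors with non-overlapping factor x factor windows."""
    side, dim = len(grid), len(grid[0][0])
    if side % factor != 0:
        raise ValueError("factor must divide the grid side")
    out = []
    for i in range(0, side, factor):
        row = []
        for j in range(0, side, factor):
            acc = [0.0] * dim
            for di in range(factor):
                for dj in range(factor):
                    for k, v in enumerate(grid[i + di][j + dj]):
                        acc[k] += v
            row.append([a / (factor * factor) for a in acc])
        out.append(row)
    return out


side = 24                        # 336 px / 14 px patches per side in LLaVA-1.5
num_frames, budget = 128, 9_000  # the ~9K figure from the text, rounded
print(num_frames * side * side)  # uncompressed visual tokens for 128 frames
s = smallest_pool_factor(side, budget // num_frames)  # 70 tokens per frame allowed
grid = [[[float(i), float(j)] for j in range(side)] for i in range(side)]  # toy 2-dim features
pooled = avg_pool_grid(grid, s)
print(s, len(pooled) ** 2, num_frames * len(pooled) ** 2)
```

With a 9,000-token budget over 128 frames (70 tokens per frame), the smallest pooling factor that fits is 3, giving an 8 × 8 grid of 64 tokens per frame and 8,192 tokens in total. A fixed factor like this treats every image alike, which is exactly what adaptive schemes such as DFMR aim to improve on.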

4. Quantitative Performance

LLaVA-1.5 serves as the baseline in a wide range of multimodal benchmark comparisons, typically as the reference point that newer methods improve on:

| Benchmark | Base LLaVA-1.5 | TG-LLaVA | Math-LLaVA |
|---|---|---|---|
| MMBench | 65.2 | +2.2 pts | – |
| MathVista (mini) | 27.7 | – | 46.6 (vs. 49.9 GPT-4V) |
| MMMU | 36.4 | +2.4 pts | 38.3 |
| LLaVA-Bench | – | +3.2 pts | – |

TG-LLaVA delivers 1.0–2.2% absolute gains over LLaVA-1.5 across ten datasets under strict parity of training data and compute (Yan et al., 15 Sep 2024). Math-LLaVA approaches GPT-4V on the MathVista minitest (46.6 vs. 49.9) and outperforms LLaVA-1.5 there by nearly 19 points (46.6 vs. 27.7) (Shi et al., 25 Jun 2024). With adaptive token compression (DFMR), LLaVA-1.5 outperforms both fixed-token and random-compression variants in every resource-constrained setting tested (Wang et al., 11 Dec 2024).

5. Architectural Limitations and Motivations for Improvement

While LLaVA-1.5 provides strong instruction-following multimodal baselines, its decoupled vision encoder design is inherently task-blind and cannot leverage prompt-specific or instruction-specific cues for more focused feature extraction or region attention. Furthermore, the lack of adaptive visual token compression can restrict efficiency and scaling in multi-image or long-form input scenarios. These design limitations motivate text-guided visual adaptation (TG-LLaVA), continual consolidation (LLaVA-c), and dynamic token reduction (LLaVA-Zip) (Yan et al., 15 Sep 2024, Wang et al., 11 Dec 2024, Liu et al., 10 Jun 2025).

6. Adoption and Broader Impact

LLaVA-1.5 is widely used as a multimodal LLM research baseline due to its reproducibility, open-source training recipes, and flexible encoder–projector–LLM design. Its influence extends to video LLMs (SlowFast-LLaVA-1.5), large-scale democratized training frameworks (LLaVA-OneVision-1.5), and specialist reasoning augmentation (Math-LLaVA). These extensions validate the scalability and compositional nature of the LLaVA-1.5 foundation while illustrating clear research directions around task-aware vision fusion, efficient context management, and robustness in continual/transfer learning (Yan et al., 15 Sep 2024, An et al., 28 Sep 2025, Xu et al., 24 Mar 2025).

7. Future Directions

The LLaVA-1.5 foundation has set the stage for a new generation of scalable, fine-grained, instruction-tuned multimodal models. Anticipated extensions include RLHF-tuned variants (e.g., LLaVA-OV-1.5-RL), plug-and-play adapters for arbitrary vision encoders or LLMs, continual and curriculum-based learning strategies, and further adaptations for video, document, and high-resolution multi-image contexts (An et al., 28 Sep 2025, Xu et al., 24 Mar 2025). Evaluation across increasingly challenging benchmarks and real-world deployments is poised to remain a central theme.

For further details regarding architectural variants and domain-specific extensions, see (Yan et al., 15 Sep 2024, Wang et al., 11 Dec 2024, Liu et al., 10 Jun 2025, Shi et al., 25 Jun 2024, Xu et al., 24 Mar 2025), and (An et al., 28 Sep 2025).