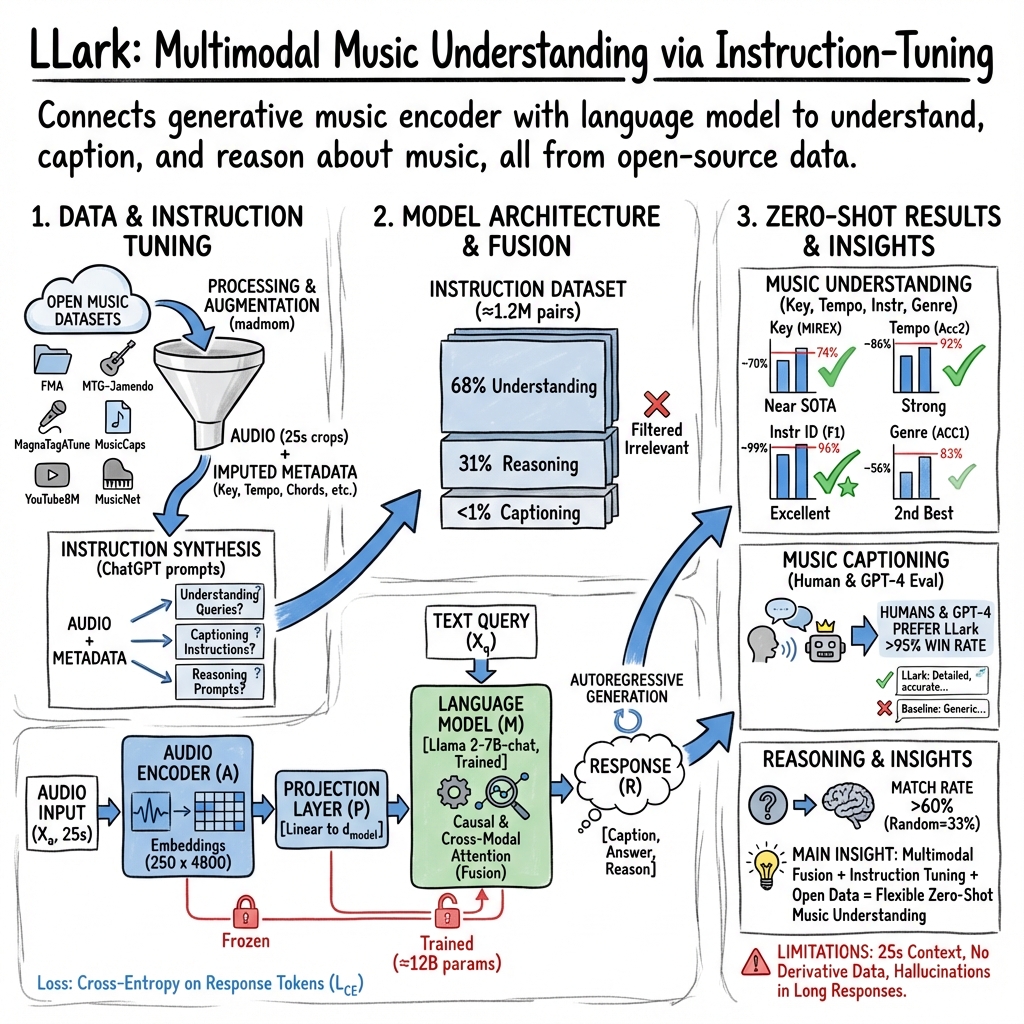

LLark: Multimodal Music Analysis Model

- LLark is a multimodal, instruction-tuned language model for music that fuses a Jukebox audio encoder with a Llama-based language model and achieves near state-of-the-art zero-shot performance in key, tempo, and instrument identification.

- It is trained on about 164,000 music tracks whose metadata is augmented with automatically extracted musical features. It is then instruction-tuned on query-response pairs generated by ChatGPT variants to ensure high instruction fidelity.

- Evaluations show LLark excels in music captioning and reasoning with high win rates, while exhibiting limitations in extended reasoning and non-Western musical contexts.

LLark refers to a multimodal instruction-tuned LLM explicitly developed for music understanding, captioning, and reasoning. It implements a fusion of a large music-generative audio encoder with an instruction-following LLM, trained entirely on open-source, Creative-Commons-licensed music data. The architecture, training paradigm, and evaluation protocols of LLark are oriented towards zero-shot performance and high instruction fidelity across diverse music analysis tasks (Gardner et al., 2023).

1. Dataset Construction and Instruction Tuning

LLark draws on six open-source music datasets spanning a wide range of genres, eras, and annotation styles, giving a corpus of approximately 164,000 distinct tracks. The sources are FMA, MTG-Jamendo, MagnaTagATune, MusicCaps, YouTube8M-MusicTextClips, and MusicNet. Each track is represented by a random 25 s crop for audio modeling. For MusicNet, multi-crop captioning is used to take advantage of its dense MIDI annotations. The available metadata is sparse and inconsistent across datasets, so every crop is also processed with madmom. This estimates global key, beat grid, tempo (BPM), and bar-aligned chords, which are added to the crop's metadata.
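The cropping step can be sketched as follows. This is a minimal version that assumes a uniformly random start offset, since the sampling distribution is not stated. The helper names `random_crop_bounds` and `metadata_record`, the JSON field names, and the placeholder estimates are hypothetical. In the described pipeline, the key, tempo, beat, and chord values would come from madmom.

```python
import json
import random
from typing import Optional, Tuple


def random_crop_bounds(num_samples: int, sample_rate: int,
                       crop_seconds: float = 25.0,
                       rng: Optional[random.Random] = None) -> Tuple[int, int]:
    """Return (start, end) sample indices of a uniformly random crop.

    Tracks no longer than the crop are returned whole.
    """
    rng = rng or random.Random()
    crop_len = int(round(crop_seconds * sample_rate))
    if num_samples <= crop_len:
        return 0, num_samples
    start = rng.randint(0, num_samples - crop_len)  # randint's upper bound is inclusive
    return start, start + crop_len


def metadata_record(track_id: str, start: int, end: int, sample_rate: int,
                    estimates: dict) -> str:
    """Merge crop boundaries with per-crop feature estimates into one JSON string.

    Hypothetical schema: `estimates` stands in for the output of a feature
    extractor such as madmom (key, tempo, beats, chords).
    """
    record = {"track_id": track_id,
              "crop_start_s": start / sample_rate,
              "crop_end_s": end / sample_rate,
              **estimates}
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    sr = 44_100
    start, end = random_crop_bounds(180 * sr, sr, rng=random.Random(0))
    print(end - start)  # 1102500 samples, i.e. exactly 25 s at 44.1 kHz
    print(metadata_record("demo-track", start, end, sr,
                          {"key": "A minor", "tempo_bpm": 120.0}))
```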

Instruction tuning converts each (audio + metadata) example into triplets $(\mathbf{a}, \mathbf{x}_q, \mathbf{x}_r)$, where:

- $\mathbf{a}$ is the raw audio waveform.

- $\mathbf{x}_q$ is a query token sequence (an open-form instruction or question).

- $\mathbf{x}_r$ is the response token sequence (a caption or answer).

The queries and responses are synthesized at scale by prompting ChatGPT variants (gpt-3.5-turbo, gpt-3.5-16k, and GPT-4) with each example's metadata as JSON. The prompts cover three task families: music understanding, open-ended captioning, and high-level reasoning. After filtering out irrelevant pairs and responses that did not follow their instruction, the final instruction-tuning dataset totals ≈1.2 M samples (68% music understanding, 31% reasoning, <1% captioning).
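The generation step can be sketched as two parts: turning each crop's metadata into a JSON prompt for one task family, then filtering the returned pairs. The template wording, the `TASK_TEMPLATES` names, and the refusal-phrase filter are illustrative assumptions, not the prompts or filtering rules actually used. The ChatGPT API call itself is omitted.

```python
import json

# Hypothetical instruction templates, one per task family.
TASK_TEMPLATES = {
    "understanding": "Using only the metadata below, write question-answer pairs "
                     "about key, tempo, chords and instrumentation.",
    "captioning": "Using only the metadata below, write a detailed caption of the music.",
    "reasoning": "Using only the metadata below, write questions that require "
                 "reasoning about the music, together with their answers.",
}


def build_generation_prompt(metadata: dict, task_family: str) -> str:
    """Concatenate a task instruction with the crop's metadata serialized as JSON."""
    if task_family not in TASK_TEMPLATES:
        raise ValueError(f"unknown task family: {task_family!r}")
    return TASK_TEMPLATES[task_family] + "\n\n" + json.dumps(metadata, indent=2, sort_keys=True)


def keep_pair(query: str, response: str,
              banned: tuple = ("as an ai", "i cannot")) -> bool:
    """Toy filter: drop empty pairs and responses that refuse or break character."""
    if not query.strip() or not response.strip():
        return False
    lowered = response.lower()
    return not any(phrase in lowered for phrase in banned)


if __name__ == "__main__":
    meta = {"key": "A minor", "tempo_bpm": 120.0, "genre": "rock"}
    print(build_generation_prompt(meta, "reasoning"))
    print(keep_pair("What key is this in?", "It is in A minor."))
```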

2. Model Architecture and Fusion Strategy

LLark integrates three key architectural components:

- Audio encoder ($E$): the Jukebox-5B encoder, with features taken from layer 36. Each 25 s crop is mapped to a sequence of 250 mean-pooled 4800-D embeddings ($E(\mathbf{a}) \in \mathbb{R}^{250 \times 4800}$, i.e. 10 Hz).

- Projection module ($P$): a single linear layer mapping the 4800-D audio features to $d = 4096$, the Llama 2-7B embedding dimensionality.

- Language model ($M$): the Llama 2-7B-chat decoder, already RLHF-tuned and fine-tuned for instruction following.
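The shape flow from encoder to LLM can be sketched in pure Python at toy scale. Frame-level features are mean-pooled into 0.1 s windows, giving 250 vectors for 25 s. Each pooled vector then passes through a linear map. The source frame rate `src_hz` is a free parameter, not Jukebox's actual rate. Tiny dimensions stand in for 4800 and 4096 so the example runs instantly; a real implementation would use a tensor library.

```python
from typing import List, Sequence

Vector = List[float]


def mean_pool(frames: Sequence[Vector], src_hz: float,
              tgt_hz: float = 10.0) -> List[Vector]:
    """Average consecutive frames into non-overlapping windows of 1/tgt_hz s."""
    n_out = int(len(frames) * tgt_hz / src_hz)  # whole output windows only
    dim = len(frames[0])
    pooled = []
    for i in range(n_out):
        lo = int(i * src_hz / tgt_hz)
        hi = min(len(frames), max(lo + 1, int((i + 1) * src_hz / tgt_hz)))
        window = frames[lo:hi]
        pooled.append([sum(f[d] for f in window) / len(window) for d in range(dim)])
    return pooled


def linear(x: Vector, weight: Sequence[Vector], bias: Vector) -> Vector:
    """y = W x + b, with W stored as one row of input weights per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]


if __name__ == "__main__":
    src_hz, seconds, in_dim = 40.0, 25, 3        # toy stand-ins (real features are 4800-D)
    frames = [[float(t)] * in_dim for t in range(int(src_hz * seconds))]
    pooled = mean_pool(frames, src_hz)           # 250 vectors, i.e. 10 Hz
    weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # toy 3 -> 2 projection
    bias = [0.0, 0.5]
    audio_tokens = [linear(v, weight, bias) for v in pooled]
    print(len(pooled), len(audio_tokens[0]))     # 250 2
```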

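With audio encoder $E$, projection $P$, and language model $M$, the response distribution factorizes autoregressively over the $T$ response tokens:

$$p(\mathbf{x}_r \mid \mathbf{a}, \mathbf{x}_q) = \prod_{t=1}^{T} p_M\big(x_{r,t} \mid P(E(\mathbf{a})),\, \mathbf{x}_q,\, \mathbf{x}_{r,<t}\big)$$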

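After projection, the audio embeddings are prepended to (or interleaved with) the text token embeddings. The joint sequence is processed by the LLM's standard causal self-attention, so audio-text interaction happens inside self-attention rather than through a separate cross-attention module. In the prepended case, with $\mathrm{Emb}$ denoting $M$'s token-embedding table, the input sequence is:

$$\mathbf{z} = \big[\,P(E(\mathbf{a}));\ \mathrm{Emb}(\mathbf{x}_q);\ \mathrm{Emb}(\mathbf{x}_r)\,\big]$$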
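The prepended layout can be sketched at the level of positions. Audio, query, and response slots are concatenated. A lower-triangular mask lets every text position attend to all earlier audio positions. Only response positions carry training labels. The `IGNORE` label value follows a common convention (PyTorch's default `ignore_index` of -100) and is an assumption here.

```python
from typing import List, Tuple

IGNORE = -100  # label excluded from the loss (assumed convention)


def assemble(n_audio: int, query_ids: List[int],
             response_ids: List[int]) -> Tuple[List[str], List[int]]:
    """Lay out [audio | query | response]; return per-position modality tags and labels."""
    n_text = len(query_ids) + len(response_ids)
    modality = ["audio"] * n_audio + ["text"] * n_text
    # Only response tokens are supervised; audio and query positions are ignored.
    labels = [IGNORE] * (n_audio + len(query_ids)) + list(response_ids)
    return modality, labels


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    modality, labels = assemble(n_audio=3, query_ids=[11, 12], response_ids=[21, 22])
    mask = causal_mask(len(modality))
    print(labels)       # [-100, -100, -100, -100, -100, 21, 22]
    print(mask[5][:3])  # [True, True, True]: first response token sees all audio
    print(mask[0][1])   # False: audio position 0 cannot see later positions
```

This mask is why no dedicated cross-attention is needed: text positions already see every audio position that precedes them.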

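Parameterization is minimal. The audio encoder $E$ is frozen during fine-tuning, and only the projection $P$ and the language model $M$ are updated. That gives roughly 7 B trainable parameters, almost all in Llama 2-7B. The ≈12 B figure corresponds to the nominal sizes of both backbones combined (Jukebox-5B plus Llama 2-7B). No adapters or weight tying are added; the projection layer is the only new module.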
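The trainable-parameter arithmetic can be checked directly. This sketch makes two assumptions not stated in the description above: the projection has a bias term, and Llama 2-7B has its approximate published size of ≈6.74 B parameters.

```python
def linear_param_count(d_in: int, d_out: int, bias: bool = True) -> int:
    """Weights plus optional bias of a dense layer."""
    return d_in * d_out + (d_out if bias else 0)


LLAMA2_7B_PARAMS = 6.74e9                     # approximate, assumed
projection = linear_param_count(4800, 4096)  # 19,664,896 with bias
trainable = LLAMA2_7B_PARAMS + projection

if __name__ == "__main__":
    print(f"projection: {projection:,}")
    print(f"trainable: {trainable / 1e9:.2f} B")
    print(f"projection share: {projection / trainable:.2%}")
```

The projection accounts for well under 1% of the trainable weights, so the fine-tuning cost is essentially that of the LLM.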

3. Training and Optimization Procedure

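The training objective is categorical cross-entropy over the response tokens, i.e. the negative log-likelihood of the factorization above:

$$\mathcal{L} = -\sum_{t=1}^{T} \log p_M\big(x_{r,t} \mid P(E(\mathbf{a})),\, \mathbf{x}_q,\, \mathbf{x}_{r,<t}\big)$$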
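This objective can be sketched in pure Python under two assumptions. Logits have already been shifted so that `logits[i]` predicts `labels[i]`. Non-response positions carry the ignore label (-100, an assumed convention). The function returns the summed negative log-likelihood exactly as in the equation. Dividing by the number of response tokens gives the per-token mean that training frameworks usually report.

```python
import math
from typing import List, Sequence

IGNORE = -100  # assumed label for audio/query positions


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vocabulary distribution."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


def response_nll(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Sum of -log p(label) over positions whose label is not IGNORE."""
    total = 0.0
    for row, y in zip(logits, labels):
        if y == IGNORE:
            continue  # audio and query positions contribute nothing
        total -= log_softmax(row)[y]
    return total


if __name__ == "__main__":
    vocab = 4
    uniform = [[0.0] * vocab for _ in range(4)]
    labels = [IGNORE, IGNORE, 2, 1]  # two query positions, two response tokens
    print(response_nll(uniform, labels))  # 2 * log(4) ≈ 2.7726
```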

No auxiliary loss functions are used. Optimization details are:

- AdamW optimizer (β₁=0.9, β₂=0.999, ε=1e-6), no weight decay.

- Peak learning rate 5e-5 with a cosine decay schedule and a 3,000-step warm-up.

- Mini-batch size 32, run for 100,000 steps (∼54 h on 4×A100 80 GB GPUs).

- Audio encoder is frozen throughout; projection and language modules trained with bfloat16 precision.
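The learning-rate schedule can be sketched as a linear warm-up to the peak over the first 3,000 steps, followed by cosine decay over the remaining steps. Decaying to zero rather than to a floor is an assumption; the `min_lr` parameter makes that choice explicit.

```python
import math


def lr_at(step: int, peak: float = 5e-5, warmup: int = 3_000,
          total: int = 100_000, min_lr: float = 0.0) -> float:
    """Linear warm-up from 0 to `peak`, then cosine decay from `peak` to `min_lr`."""
    if step < warmup:
        return peak * step / warmup
    progress = min(1.0, (step - warmup) / max(1, total - warmup))
    return min_lr + 0.5 * (peak - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for s in (0, 1_500, 3_000, 51_500, 100_000):
        print(s, f"{lr_at(s):.2e}")
```

In PyTorch, the listed optimizer settings correspond to `torch.optim.AdamW(params, lr=5e-5, betas=(0.9, 0.999), eps=1e-6, weight_decay=0.0)`. This function could then drive `torch.optim.lr_scheduler.LambdaLR` through the multiplier `lambda s: lr_at(s) / 5e-5`.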

4. Evaluation Tasks, Metrics, and Results

LLark is evaluated zero-shot on three principal families:

4.1. Music Understanding

The tasks and metrics are:

- Global key estimation: MIREX score on GiantSteps Key.
- Tempo estimation: Acc2 on GiantSteps Tempo, i.e. within ±4%, also counting estimates off by a factor of 2 or 3.
- Genre classification: accuracy on GTZAN and MedleyDB.
- Instrument identification: segment-level F₁ on MedleyDB and MusicNet.

LLark achieves near state-of-the-art results for key and tempo estimation. It posts the strongest listed result for instrument identification on MusicNet. It is weaker on genre classification: on GTZAN it trails both IB-LLM and the supervised state of the art (SOTA column).

| Task (metric) | Baseline | IB-LLM | LTU-AS | LLark | SOTA |
|---|---|---|---|---|---|
| Key, GiantSteps (MIREX) | 0.32 | 0.048 | 0.00 | 0.70 | 0.743 |
| Tempo, GiantSteps (Acc2) | 0.77 | 0.05 | 0.00 | 0.86 | 0.925 |
| Genre, GTZAN (Acc1) | 0.10 | 0.71 | 0.30 | 0.56 | 0.835 |
| Instrument ID, MusicNet (F₁) | 0.26 | 0.86 | 0.86 | 0.99 | 0.963 |
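The two music-theory metrics in the table can be sketched as follows. The key score uses the weighting commonly used for MIREX key detection, which `mir_eval`'s key evaluation also applies by default:

- 1.0 for an exact match
- 0.5 for a perfect fifth above
- 0.3 for the relative key
- 0.2 for the parallel key

Acc2 accepts an estimate within 4% of the reference tempo or of its multiples by 2, 3, 1/2, or 1/3. The key-string format (`"A minor"`) is an assumption of this sketch.

```python
from typing import Tuple

# Pitch classes for tonic names, including common enharmonic spellings.
PITCHES = {"C": 0, "C#": 1, "Db": 1, "D": 2, "D#": 3, "Eb": 3, "E": 4, "F": 5,
           "F#": 6, "Gb": 6, "G": 7, "G#": 8, "Ab": 8, "A": 9, "A#": 10,
           "Bb": 10, "B": 11}


def parse_key(key: str) -> Tuple[int, str]:
    """'A minor' -> (9, 'minor')."""
    tonic, mode = key.split()
    return PITCHES[tonic], mode.lower()


def mirex_key_score(reference: str, estimate: str) -> float:
    """Weighted key score: exact, fifth above, relative, parallel, else 0."""
    ref_t, ref_m = parse_key(reference)
    est_t, est_m = parse_key(estimate)
    diff = (est_t - ref_t) % 12
    if ref_m == est_m:
        if diff == 0:
            return 1.0
        return 0.5 if diff == 7 else 0.0  # perfect fifth above, same mode
    if ref_m == "major" and est_m == "minor" and diff == 9:
        return 0.3  # relative minor
    if ref_m == "minor" and est_m == "major" and diff == 3:
        return 0.3  # relative major
    return 0.2 if diff == 0 else 0.0  # parallel key, else wrong


def acc2(reference_bpm: float, estimated_bpm: float, tol: float = 0.04) -> bool:
    """True if the estimate is within tol of the reference times 1, 2, 3, 1/2 or 1/3."""
    return any(abs(estimated_bpm - f * reference_bpm) <= tol * f * reference_bpm
               for f in (1.0, 2.0, 3.0, 1 / 2, 1 / 3))
```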

Ablations indicate substantial degradation (30–50 points) if the Jukebox encoder is replaced with CLAP or if Llama 2 is replaced with MPT-1B (especially for tempo estimation).

4.2. Music Captioning

Zero-shot captioning on MusicCaps, MusicNet, and FMA is evaluated by human raters in head-to-head comparisons on a 7-point Likert scale. The comparisons are against the strongest baselines (IB-LLM, LTU-AS, WAC, LP-MusicCaps). LLark wins >99.6% of pairwise votes on MusicCaps, >99.7% on MusicNet, and >95.7% on FMA. GPT-4, used as a judge of musical detail, agrees, preferring LLark in more than 90% of comparisons.
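A pairwise win rate can be computed from such votes as sketched below. Two choices here are assumptions for illustration. The 7-point scale is mapped so that 1-3 prefer the left-hand item, 4 means no preference, and 5-7 prefer the right-hand item. Ties are dropped. Because presentation order is randomized, each vote also records which side LLark appeared on.

```python
from typing import Iterable, Tuple


def win_rate(votes: Iterable[Tuple[int, str]]) -> float:
    """Fraction of non-tied votes won by the target model.

    Each vote is (score, side). The score is on a 1-7 scale where values
    below 4 favour the left item and values above 4 favour the right item.
    The side is "left" or "right", the position where the target model was shown.
    """
    wins = losses = 0
    for score, side in votes:
        if not 1 <= score <= 7 or side not in ("left", "right"):
            raise ValueError(f"bad vote: {(score, side)!r}")
        if score == 4:
            continue  # no preference: excluded from the rate
        preferred = "left" if score < 4 else "right"
        if preferred == side:
            wins += 1
        else:
            losses += 1
    return wins / (wins + losses) if wins + losses else float("nan")
```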

4.3. Reasoning Tasks

Reasoning is evaluated through audio-text matching: given the audio and a question, raters select the correct answer from a set of model outputs. LLark attains a 60-70% match rate (chance is 33%), while baselines score ≤25%. GPT-4 judges again prefer LLark over every comparator in more than 90% of cases.

4.4. Scaling and Data Efficiency

Returns diminish beyond roughly 50% of the training data, suggesting that instruction fidelity saturates early. The encoder and language-model ablations produce large drops, confirming that both components are necessary.

5. Human Evaluations, Qualitative Examples, and Failure Modes

Human studies are run on Appen using a pairwise comparison interface that randomizes presentation order, and raters compare captions or responses. Most raters are non-experts, and ∼3% of MusicCaps samples are excluded as non-musical. LLark adapts response length to the granularity of the instruction (e.g. “describe in detail” vs. “describe in one word”), indicating robust instruction following.

Qualitative outputs include accurate tempo, key, and instrument identification for short queries, detailed multi-paragraph captions, and sophisticated reasoning (e.g. about the effect of removing an instrument). Failure modes include:

- losing track of core musical details during extended reasoning
- hallucinated musical captions for out-of-distribution sounds
- defaulting to popular genres and tempos
- generic verbosity attributable to the chatbot style instilled by RLHF

6. Limitations and Prospective Work

Primary limitations are dictated by the architecture and data paradigm:

- 25 s context window limitation (Jukebox encoder); extended pieces require chunking.
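Long pieces could be covered with consecutive 25 s windows, as sketched below. The `chunk_bounds` helper and its optional overlap (`hop_s` smaller than the window) are hypothetical. How the per-chunk answers would be merged is left open here.

```python
from typing import List, Optional, Tuple


def chunk_bounds(duration_s: float, window_s: float = 25.0,
                 hop_s: Optional[float] = None) -> List[Tuple[float, float]]:
    """Split [0, duration_s) into windows of window_s seconds, advancing by hop_s.

    With hop_s equal to the window (the default) the chunks do not overlap.
    The last chunk is truncated at the end of the piece.
    """
    hop = window_s if hop_s is None else hop_s
    if hop <= 0:
        raise ValueError("hop_s must be positive")
    bounds = []
    start = 0.0
    while start < duration_s:
        bounds.append((start, min(start + window_s, duration_s)))
        if start + window_s >= duration_s:
            break  # this window already reaches the end
        start += hop
    return bounds
```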

- Some of the training audio carries Creative Commons “NoDerivatives” licenses, so the model weights and generated annotations cannot be released.

- Human assessments lack expert-level depth, and the model shows Western-centric biases in both music and language.

- Known hallucinations persist in long-form responses.

Open research areas include scaling audio encoders (larger or hybrid models), upgrading the LM backbone (e.g. Llama 3), enhancing metadata richness (harmony, lyrics, structure), extending instruction context, improving benchmarks for open-ended tasks, and advancing bias mitigation through dataset diversification.

7. Context and Significance

LLark demonstrates that a multimodal, instruction-tuned paradigm can deliver strong zero-shot results in music analysis, captioning, and reasoning. It combines a generative audio backbone, a powerful LLM, and robust metadata augmentation. Built entirely on open data with a simple, instruction-oriented fusion, LLark matches or exceeds existing approaches on most structured tasks and on free-form captioning and reasoning. Its ablation and scaling studies indicate that architecture, data preparation, and instruction fidelity are jointly critical for generalizable, flexible musical understanding. The release of code and evaluation protocols supports reproducibility and further advances in multimodal music AI research (Gardner et al., 2023).