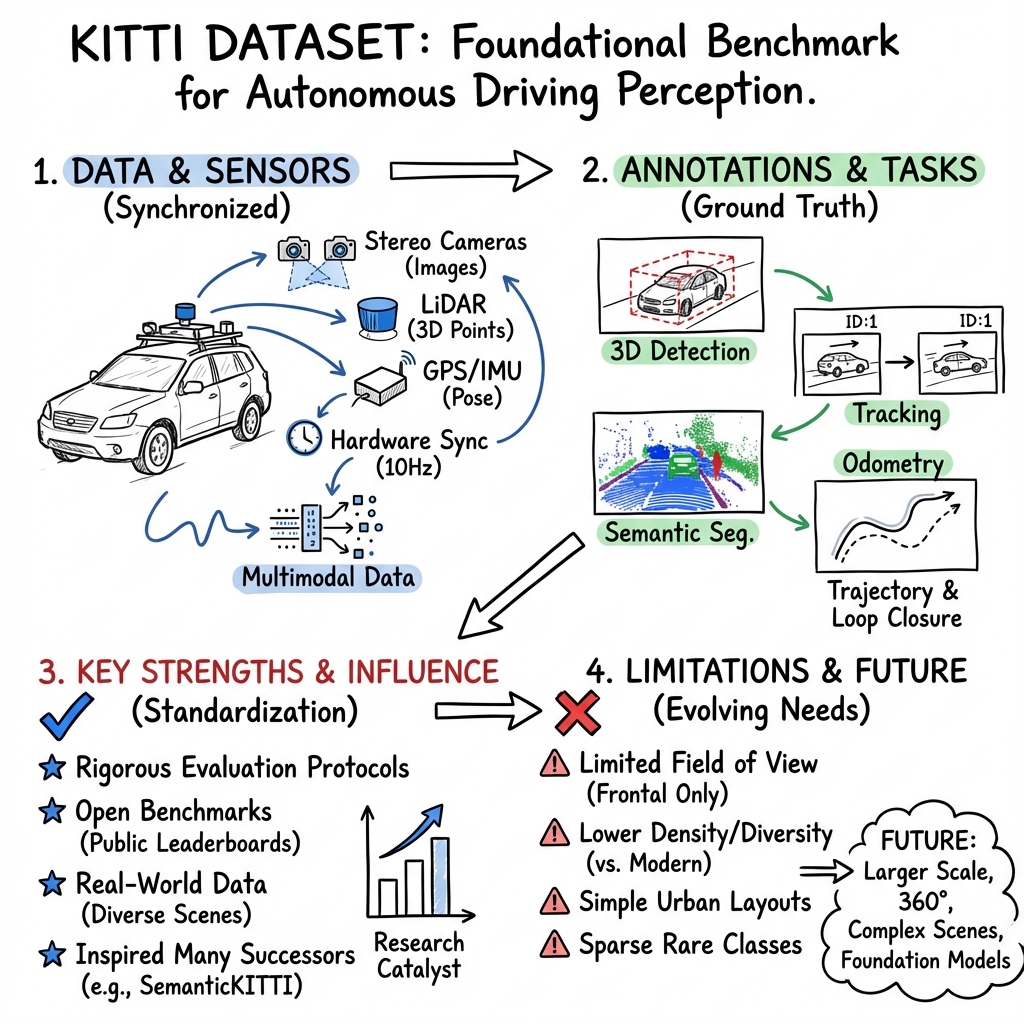

KITTI Dataset Benchmark

- The KITTI dataset is a pivotal benchmark offering synchronized multimodal sensor data (stereo images, LiDAR, GPS/IMU) for autonomous driving research.

- It provides detailed annotations and evaluation protocols for tasks like stereo vision, object detection, SLAM, and semantic segmentation with specific metrics.

- The dataset’s design has influenced numerous extensions and successors, shaping robust perception algorithms in computer vision, robotics, and machine learning.

The KITTI dataset is an influential benchmark in autonomous driving research. It provides synchronized multimodal sensor data (high-resolution stereo camera images, 3D LiDAR point clouds, GPS/IMU poses, and associated ground-truth annotations) for key perception and scene understanding tasks. Initiated in Karlsruhe, Germany, KITTI has become foundational for the computer vision, robotics, and machine learning communities, enabling rigorous evaluation and comparison of methods for stereo vision, optical flow, visual odometry, loop closure, 2D/3D object detection, tracking, and semantic scene understanding.

1. Sensor Suite, Data Composition, and Protocols

The original KITTI acquisition platform carried two stereo pairs of Point Grey Flea 2 cameras (one grayscale pair and one color pair, 1392×512 px), a Velodyne HDL-64E LiDAR scanner (64 beams, ~1.3M points/sec, 360° horizontal FOV), an OXTS RT 3003 GNSS/IMU, and additional supporting sensors. The LiDAR rotates at 10 Hz and triggers the cameras, so images and scans are captured at a common 10 Hz rate. All sensors are hardware-synchronized and extrinsically calibrated via survey ground control and checkerboard-based camera-LiDAR calibration.

The dataset comprises scenes across diverse urban, suburban, and rural road categories. Notable benchmarks within KITTI include:

- Stereo 2012/2015: 194/200 training stereo image pairs (plus 195/200 test pairs) with semi-dense ground-truth disparities derived from LiDAR.

- Optical Flow 2012/2015: Built on the same image sequences as the stereo benchmarks, with semi-dense ground-truth flow.

- Object Detection/Tracking: 7481 training and 7518 test images with 3D bounding boxes for vehicles, pedestrians, and cyclists, plus tracking annotations for multi-object association.

- Visual Odometry/SLAM: 22 stereo sequences, of which 11 (00 to 10) are released with ground-truth trajectories from the RTK-GPS/IMU unit and 11 (11 to 21) are held out for evaluation.

- Semantic Segmentation (SemanticKITTI): Dense, point-level ground-truth semantic labels for all LiDAR scans in the odometry benchmark, covering 43,000+ scans and 28 semantic classes (Behley et al., 2019).

KITTI’s data is released in custom binary and plain text formats, with rich calibration (.txt) files specifying camera intrinsics/extrinsics, LiDAR calibration, and time-stamped trajectory data.
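The formats above can be read with a few lines of NumPy. The sketch below is illustrative, not the official devkit: it assumes the commonly documented Velodyne layout (consecutive float32 records of x, y, z, reflectance) and the key names `P2`, `R0_rect`, and `Tr_velo_to_cam` used in the object-benchmark calibration files. The function names and the synthetic scan and calibration values are hypothetical stand-ins for real files.

```python
import numpy as np


def parse_velodyne(buf: bytes) -> np.ndarray:
    """Decode one scan: consecutive float32 records of (x, y, z, reflectance)."""
    return np.frombuffer(buf, dtype=np.float32).reshape(-1, 4)


def parse_calib(text: str) -> dict:
    """Parse 'KEY: v1 v2 ...' lines into flat float arrays; skip non-numeric lines."""
    calib = {}
    for line in text.splitlines():
        key, sep, values = line.partition(":")
        if not sep:
            continue
        try:
            calib[key.strip()] = np.array([float(v) for v in values.split()])
        except ValueError:
            continue  # e.g. a timestamp line in some calibration files
    return calib


def project_velo_to_image(points_xyz: np.ndarray, calib: dict):
    """Project LiDAR points into the left color image (camera 2).

    Assumes the key names P2, R0_rect and Tr_velo_to_cam.
    Returns (pixel coordinates of points in front, boolean mask of those points).
    """
    P2 = calib["P2"].reshape(3, 4)
    R0 = np.eye(4)
    R0[:3, :3] = calib["R0_rect"].reshape(3, 3)
    Tr = np.eye(4)
    Tr[:3, :4] = calib["Tr_velo_to_cam"].reshape(3, 4)

    pts_h = np.hstack([points_xyz, np.ones((len(points_xyz), 1))])  # N x 4
    cam = R0 @ Tr @ pts_h.T        # 4 x N, rectified camera frame
    in_front = cam[2] > 0          # drop points behind the image plane
    uvw = P2 @ cam[:, in_front]    # 3 x N_front, homogeneous pixels
    return (uvw[:2] / uvw[2]).T, in_front


# Synthetic stand-ins for a real .bin scan and a calibration .txt file.
scan_bytes = np.array([[10, 0, 0, 0.5], [-5, 0, 0, 0.1]], dtype=np.float32).tobytes()
calib_text = """P2: 700 0 600 0 0 700 180 0 0 0 1 0
R0_rect: 1 0 0 0 1 0 0 0 1
Tr_velo_to_cam: 0 -1 0 0 0 0 -1 0 1 0 0 0"""

scan = parse_velodyne(scan_bytes)
pixels, mask = project_velo_to_image(scan[:, :3], parse_calib(calib_text))
print(pixels, mask)
```

With a real scan, the bytes would come from reading the `.bin` file in binary mode. The projection chain (extrinsics, then rectification, then camera projection) is the part most worth checking against the calibration files actually in use, since key names differ between benchmarks.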

2. Annotation Schemes and Evaluation Metrics

KITTI’s annotation pipelines established rigorous and reproducible ground-truth standards. Axis-aligned 2D bounding boxes and 3D cuboid annotations are provided for objects visible within the image or point cloud FOV, paired with tracking IDs for object persistence. For detection, benchmarks use three difficulty levels (Easy, Moderate, Hard), determined by 2D bounding box height, occlusion level, and truncation fraction.
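As a rough illustration of how the three difficulty levels are assigned, the sketch below uses the thresholds published with the object benchmark: a minimum box height of 40 px (Easy) or 25 px (Moderate, Hard), a maximum occlusion level of 0, 1, or 2, and a maximum truncation of 15%, 30%, or 50%. The function name and its return convention (the easiest level an object qualifies for, or `None`) are assumptions made for this example.

```python
from typing import Optional

# (name, min 2D box height in px, max occlusion level, max truncation fraction)
# Occlusion levels in the label files: 0 = fully visible, 1 = partly occluded,
# 2 = largely occluded, 3 = unknown.
DIFFICULTY_LEVELS = [
    ("Easy", 40.0, 0, 0.15),
    ("Moderate", 25.0, 1, 0.30),
    ("Hard", 25.0, 2, 0.50),
]


def kitti_difficulty(box_height_px: float, occlusion: int,
                     truncation: float) -> Optional[str]:
    """Return the easiest difficulty level the object satisfies, else None."""
    for name, min_height, max_occlusion, max_truncation in DIFFICULTY_LEVELS:
        if (box_height_px >= min_height
                and occlusion <= max_occlusion
                and truncation <= max_truncation):
            return name
    return None  # too small, too occluded, or too truncated to be evaluated


print(kitti_difficulty(50.0, 0, 0.0))   # Easy
print(kitti_difficulty(30.0, 1, 0.2))   # Moderate
print(kitti_difficulty(50.0, 2, 0.4))   # Hard
print(kitti_difficulty(20.0, 0, 0.0))   # None
```

The levels are cumulative in evaluation: an object that qualifies as Easy also counts toward the Moderate and Hard sets, which is why the function reports only the easiest matching level.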

Evaluation metrics are task-specific:

- Object Detection: Average Precision (AP), interpolated over a fixed set of recall positions (11 originally, 40 since 2019), with 2D and BEV/3D box variants. A detection counts as a true positive at an IoU of at least 0.7 for cars and 0.5 for pedestrians and cyclists. For orientation, Average Orientation Similarity (AOS) is also used.

- Tracking: CLEAR MOT metrics (MOTA, MOTP, ID switches), with association established via 3D/2D box overlap and consistent IDs.

- Visual Odometry/SLAM: Trajectory error is measured as average translational drift (in percent) and rotational drift (in degrees per meter) over subsequences of 100 to 800 m.

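- Semantic Segmentation (point cloud): Mean Intersection over Union (mIoU), averaged across the $C$ classes, as

$$\mathrm{mIoU} = \frac{1}{C} \sum_{c=1}^{C} \frac{TP_c}{TP_c + FP_c + FN_c}$$

where $TP_c$, $FP_c$, and $FN_c$ are the true positive, false positive, and false negative counts for class $c$ (Behley et al., 2019).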
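Two of the metrics listed above are easy to sketch in NumPy: interpolated AP from a precision-recall curve, and mIoU from a confusion matrix. This is a simplified illustration rather than the official evaluation code. It assumes the precision-recall points have already been computed by matching detections to ground truth, that confusion-matrix rows are ground-truth classes and columns are predictions, and that classes absent from both are skipped. The function names are hypothetical.

```python
import numpy as np


def interpolated_ap(recall, precision, num_points: int = 40) -> float:
    """Interpolated AP: mean over recall positions of the best precision
    achieved at that recall or higher.

    num_points=40 samples recall at 1/40, 2/40, ..., 1;
    num_points=11 samples recall at 0, 0.1, ..., 1.
    """
    recall = np.asarray(recall, dtype=float)
    precision = np.asarray(precision, dtype=float)
    if num_points == 11:
        positions = np.linspace(0.0, 1.0, 11)
    else:
        positions = np.linspace(1.0 / num_points, 1.0, num_points)

    total = 0.0
    for r in positions:
        reached = recall >= r
        # Precision is taken as 0 for recall levels the detector never reaches.
        total += precision[reached].max() if reached.any() else 0.0
    return total / len(positions)


def mean_iou(confusion) -> float:
    """mIoU from a C x C confusion matrix (rows: ground truth, cols: prediction)."""
    confusion = np.asarray(confusion, dtype=float)
    tp = np.diag(confusion)
    fp = confusion.sum(axis=0) - tp   # predicted as class c, labeled otherwise
    fn = confusion.sum(axis=1) - tp   # labeled class c, predicted otherwise
    denom = tp + fp + fn
    valid = denom > 0                 # assumption: ignore classes that never occur
    return float((tp[valid] / denom[valid]).mean())


ap = interpolated_ap(recall=[0.51, 1.0], precision=[1.0, 0.5])
miou = mean_iou([[3, 1],
                 [0, 4]])
print(ap, miou)
```

In the small example, half of the 40 recall positions are reached at precision 1.0 and the other half at 0.5, and the two classes have IoUs of 3/4 and 4/5.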

3. Extensions, Synthetic Counterparts, and Successor Datasets

The KITTI framework has directly inspired an array of significant dataset extensions and synthetic mirrors:

- SemanticKITTI: Full point-wise semantic labels for the LiDAR odometry benchmark, enabling scene understanding, sequence-level semantic mapping, and panoptic segmentation (Behley et al., 2019, Behley et al., 2020).

- Virtual KITTI 2: Photorealistic clones of KITTI tracking sequences, providing ground-truth for depth, flow, classes, and instance IDs under diverse weather and lighting conditions. Enables controlled experiments for robustness and domain adaptation (Cabon et al., 2020).

- KITTI-360: A successor with 360° stereo and fisheye imaging, pushbroom laser data, and dense, temporally-consistent 2D/3D semantic annotations, supporting SLAM, view synthesis, and dense scene understanding across over 150k frames and 1B 3D points (Liao et al., 2021).

- KITTI-CARLA, Synth-It-Like-KITTI: Synthetic datasets rendered in CARLA with KITTI-calibrated sensor setups, used for pretraining and transfer learning, and to study domain adaptation (Marcus et al., 20 Feb 2025, Deschaud, 2021).

Several datasets for specialized tasks (e.g., instance motion segmentation, anomaly detection, and panoptic segmentation) are built directly on KITTI or inherit its sensor protocols and annotation schemes (Mohamed et al., 2020, Mu et al., 12 Jul 2025, Behley et al., 2020).

4. Strengths, Limitations, and Comparison to Recent Datasets

KITTI’s success is rooted in its careful sensor calibration, broad protocol coverage, and open, benchmark-based evaluation. However, several limitations are consistently noted across comparative literature:

- Sensor Coverage: Only frontal field of view is annotated (frontal 90° for camera/LiDAR), precluding full-surround perception (Patil et al., 2019).

- Scene Complexity: Traffic density and scene diversity are lower than in modern benchmarks (e.g., H3D, nuScenes, IPS300+), with simpler urban layouts and less occlusion (Patil et al., 2019, Caesar et al., 2019, Wang et al., 2021).

- Label Diversity and Density: Annotated 3D object classes (car, pedestrian, cyclist) are restricted, and instance density per frame is modest (average 5–6 objects), limiting training for rare classes and high-density situations (Pham et al., 2019, Wang et al., 2021).

- Benchmark Scope: No standardized protocol for 360° full-surround tracking, panoptic segmentation, or explicit anomaly/out-of-distribution evaluation (Patil et al., 2019, Mu et al., 12 Jul 2025).

- Calibration Sensitivity: Odometry results are sensitive to camera calibration. Re-optimizing the intrinsic and extrinsic parameters beyond the standard checkerboard routine has been reported to reduce drift, to the point that stereo odometry outperforms laser-based odometry (Cvišić et al., 2021).

In direct comparison:

- H3D increases label density 15-fold over KITTI, annotates full 360°, and targets crowded, interactive scenarios (Patil et al., 2019).

- nuScenes expands class coverage, adds radar, provides 360° multimodal annotations, and increases frame and object counts (about 100x as many images and 7x as many annotated boxes as KITTI) (Caesar et al., 2019).

- A*3D and IPS300+ augment scene diversity and label density, particularly under night and adverse weather, surpassing KITTI in object density by orders of magnitude (Pham et al., 2019, Wang et al., 2021).

- SemanticKITTI’s dense point-wise labels enable semantic and panoptic segmentation research, bridging perception and mapping (Behley et al., 2019, Behley et al., 2020).

5. Algorithms, Usage Patterns, and Impact

KITTI has shaped learning-based and geometric algorithms for stereo, detection, segmentation, and SLAM:

- Stereo Vision/Depth: The KITTI stereo benchmark is considered a reference for evaluating deep stereo architectures (e.g., RAFTStereo, iRaftStereo_RVC), which report region-specific “bad 3.0” and average disparity errors. Fine-tuning on KITTI is a standard procedure for real-world generalization assessment (Jiang et al., 2022).

- 3D Object Detection: Most LiDAR object detectors (e.g., VoxelNet, PointPillars, Voxel-R-CNN) are initially developed or extensively tuned on KITTI, using its three-level AP metrics. Studies repeatedly note that models that perform well on KITTI can underperform on datasets with higher object density or harder scenes, highlighting the need for cross-benchmark validation (Pham et al., 2019, Marcus et al., 20 Feb 2025).

- Semantic Segmentation: KITTI (including SemanticKITTI) is leveraged to develop single-scan, multi-scan, and scene completion models. Despite advances in architecture (e.g., SqueezeSeg, DarkNet53, PointNet++), mIoU scores on LiDAR data remain lower than on image-based tasks, reflecting the difficulty of learning from sparse point clouds (Behley et al., 2019).

- SLAM and Odometry: Visual and visual-inertial odometry methods, including SOFT2, ORB-SLAM2, and VISO2, use the KITTI odometry splits for performance benchmarking, with recent work improving camera calibration so that stereo odometry matches or outperforms LiDAR-based odometry (Cvišić et al., 2021).

- Bio-sensing/Vision Applications: KITTI scenes serve as realistic, variable driving stimuli for research into driver attention and hazard event detection, enabling development of multimodal safety monitoring and early-warning algorithms (Siddharth et al., 2019).
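The “bad 3.0” and average disparity errors mentioned in the stereo item above can be sketched as follows. This is a minimal illustration under stated assumptions: disparities are already decoded to float pixels, a ground-truth value of 0 marks pixels without LiDAR-derived disparity, and the function name and return keys are hypothetical. The stricter KITTI 2015 outlier definition, which additionally requires the error to exceed 5% of the true disparity, is not implemented here.

```python
import numpy as np


def stereo_errors(disp_est, disp_gt, threshold_px: float = 3.0) -> dict:
    """Fraction of valid pixels with error above threshold_px, plus mean error."""
    disp_est = np.asarray(disp_est, dtype=float)
    disp_gt = np.asarray(disp_gt, dtype=float)

    valid = disp_gt > 0  # assumption: 0 encodes "no ground truth at this pixel"
    if not valid.any():
        raise ValueError("ground-truth disparity map has no valid pixels")

    abs_err = np.abs(disp_est - disp_gt)[valid]
    return {
        "bad": float((abs_err > threshold_px).mean()),  # e.g. "bad 3.0"
        "avg_err": float(abs_err.mean()),               # mean error in px
    }


gt = np.array([[10.0, 0.0],
               [20.0, 30.0]])
est = np.array([[11.0, 99.0],
                [25.0, 30.0]])
result = stereo_errors(est, gt)
print(result)
```

Only the three pixels with ground truth contribute in the example, so the large error at the invalid pixel does not affect either number. Region-specific variants (for instance, non-occluded pixels only) would apply an additional mask before averaging.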

6. Influence on Dataset and Task Evolution

KITTI’s template (data collected from a moving platform, open protocols, labels spanning multiple tasks) has made it a prototypical model for subsequent closed- and open-world datasets. Successors frequently employ or adapt its sensor suite, annotation format, or benchmarking style, and many later perception benchmarks were designed in response to limitations identified in analyses of KITTI.

Although approaches trained purely on KITTI generalize to only a subset of real-world diversity, its combination of geometric accuracy, precise calibration, and annotation rigor has made it invaluable for progress in stereo, detection, and scene understanding pipelines.

7. Prospects and Ongoing Developments

As research moves toward large-scale, foundation-model–driven and cross-modal learning, the need for greater scene diversity, higher annotation density, 360° surround-view, rare-object representation, and multi-scene calibration outpaces what KITTI provides. Recent datasets such as ROVR-Open-Dataset address these limitations by vastly increasing scale, scene variety, and challenging conditions, exposing generalization failures of models trained on KITTI alone (Guo et al., 19 Aug 2025). New synthetic and augmented reality variants (e.g., KITTI-AR, Virtual KITTI 2, KITTI-CARLA) play an expanding role in transfer learning and robustness assessment (Cabon et al., 2020, Deschaud, 2021, Mu et al., 12 Jul 2025).

Despite increased competition and the emergence of more specialized or larger-scale benchmarks, KITTI retains its centrality as a well-validated reference; algorithms evaluated on KITTI remain a comparative baseline for autonomous driving research. The continuing development of extensions (e.g., panoptic LiDAR segmentation, anomaly detection, active learning on SemanticKITTI) demonstrates that the dataset and its legacy protocols remain highly relevant for both classic and emerging tasks (Behley et al., 2020, Duong et al., 2023, Mu et al., 12 Jul 2025).

In summary, the KITTI dataset represents a crucial pillar in the development and benchmarking of autonomous driving perception systems, providing multimodal sensor data, accurate ground-truth annotations, and rigorous evaluation protocols. Its design principles have profoundly shaped contemporary dataset practice, though the field now routinely calls for greater density, diversity, and modality to meet the expanded requirements of real-world deployment, complex scene understanding, and robust, generalizable perception.