Generative Social Simulation

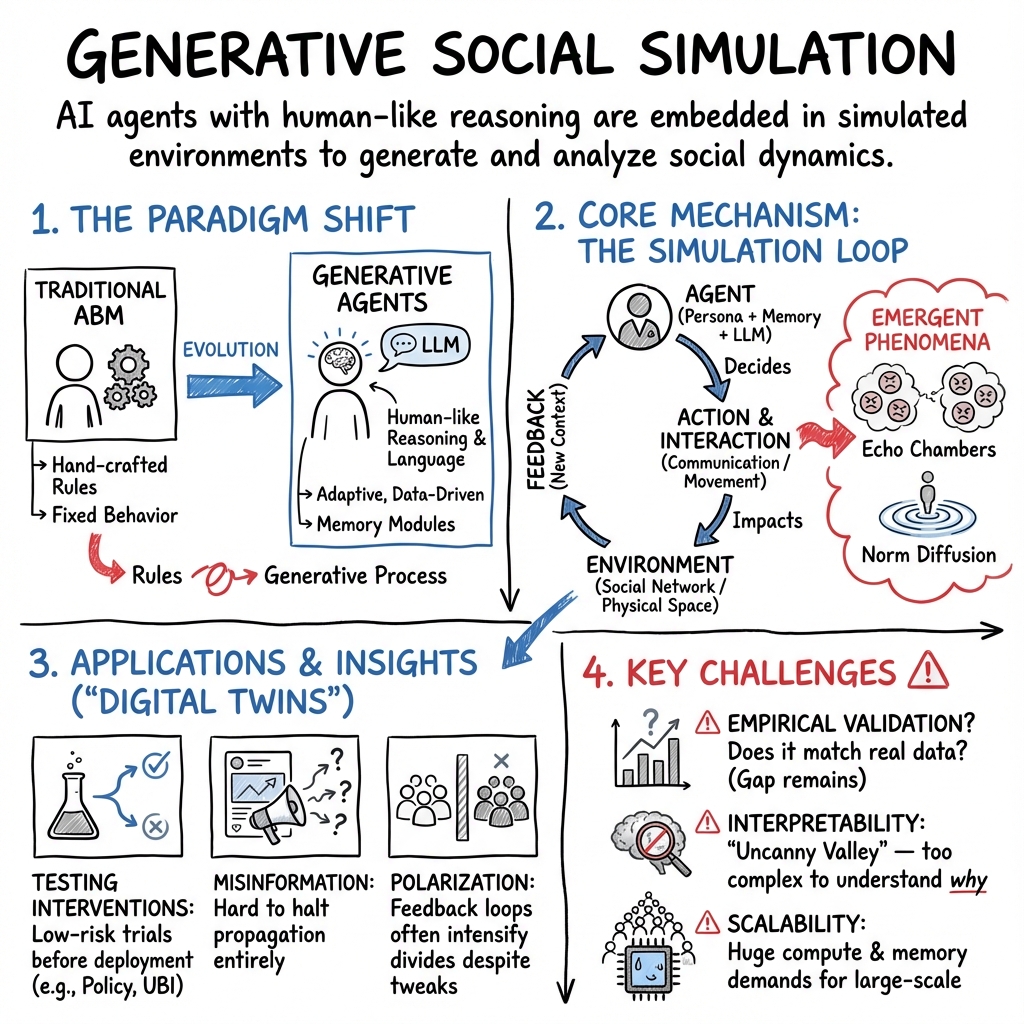

- Generative social simulation is a computational paradigm that embeds LLM-enhanced agents in simulated environments to study complex social dynamics.

- It integrates methods from agent-based modeling, generative AI, and network science to test interventions and analyze emergent social behaviors.

- Robust empirical calibration and scalable architectures ensure the simulations provide actionable insights for domains like crisis management and online communities.

Generative social simulation refers to the computational modeling paradigm in which artificial agents—often endowed with advanced reasoning and language abilities via models such as LLMs—are embedded within simulated environments to generate, analyze, and predict social system dynamics. This approach synthesizes techniques from classic agent-based modeling (ABM), multi-agent systems, generative artificial intelligence, and network science, enabling researchers to study emergent social phenomena, test interventions, and inform policy—all within a controlled, scalable, and often reproducible digital context.

1. Foundations and Key Principles

Generative social simulation extends traditional ABM in several dimensions:

- Agent Enrichment: Agents are directly empowered with human-like reasoning, cognition, and language generation capabilities via LLMs, moving beyond hand-crafted rule sets to more adaptive, data-driven behavior (Ghaffarzadegan et al., 2023, Xiao et al., 2023, Vezhnevets et al., 2023).

- Interaction Modalities: Simulations can support a wide range of environments—physical (robot navigation), social (norm diffusion, crisis management), and digital (social networks, online learning)—grounding agent actions in realistic spatial, temporal, and contextual cues (Baghel et al., 2020, Vezhnevets et al., 2023).

- Emergence and Feedback: Micro-level agent actions, decisions, and interactions yield macro-level phenomena (e.g., diffusion of norms or information, echo chambers, polarization), and feedback mechanisms shape agent updates and environment evolution (Ghaffarzadegan et al., 2023, Piao et al., 12 Feb 2025, Panayiotou et al., 2 Apr 2025).

- Hybridization with Generative AI: LLM integration supports narrative generation, reinterpretation of instructions, improvisational behaviors, and even “meta-reasoning” about agent goals, context, or past actions (Vezhnevets et al., 2023, Ferraro et al., 2024).

- Empirical Realism and Validation: Growing emphasis is placed on benchmarking simulated behavior against empirical datasets and measuring "empirical realism" through loss functions, text similarity, or task-oriented metrics (Münker et al., 27 Jun 2025, Zeng et al., 8 Jul 2025).

This paradigm reconceptualizes social simulation from explicit, handcrafted rules to a generative process in which emergent phenomena result from the interplay between agent cognition, context-aware interaction, and recursive feedback.
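The micro-to-macro feedback described above can be illustrated with a deliberately small sketch. It assumes a toy norm-adoption rule and a fully mixed population; the `decide` function is a hypothetical stand-in for an LLM call, and none of the names or parameters come from the cited studies.

```python
import random


def decide(agent_adopts: bool, adopter_share: float, rng: random.Random) -> bool:
    """Hypothetical stand-in for an LLM decision.

    An agent that already follows the norm keeps it; otherwise it adopts
    with probability equal to the current share of adopters.
    """
    if agent_adopts:
        return True
    return rng.random() < adopter_share


def simulate(n_agents: int = 50, n_steps: int = 20,
             seed_adopters: int = 5, seed: int = 0) -> list:
    """Return the adopter share after each step (index 0 is the initial share)."""
    rng = random.Random(seed)
    adopts = [i < seed_adopters for i in range(n_agents)]
    history = [sum(adopts) / n_agents]
    for _ in range(n_steps):
        # Macro-level state (the adopter share) is fed back to every agent.
        share = sum(adopts) / n_agents
        # Micro-level decisions produce the next macro-level state.
        adopts = [decide(a, share, rng) for a in adopts]
        history.append(sum(adopts) / n_agents)
    return history


if __name__ == "__main__":
    print(simulate())
```

Changing `seed` while holding everything else fixed gives a rough sense of how different random histories lead to different adoption curves in this toy setting.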

2. Architectures and Methodological Innovations

Generative social simulation systems typically exhibit multi-layered architectures, with key components including:

- Generative Agents: Each agent is parameterized by persona attributes, memory modules, and decision-making processes. LLMs are queried with textual prompts encoding the agent's internal state and external context, producing structured outputs (e.g., Choice–Reason–Content triplets) (Ghaffarzadegan et al., 2023, Orlando et al., 9 Feb 2025, Ferraro et al., 2024).

- Simulation Environment: Realistic environments may be physical (CoppeliaSim-based social spaces (Baghel et al., 2020)), social (structured townships (Xiao et al., 2023)), or digital (simulated social networks (Chesney et al., 2024, Rende et al., 2024)). Environments enforce exogenous events, spatial logistics, and social structure (such as directed graphs or time-expanded crowd scenario graphs (Panayiotou et al., 2 Apr 2025)).

- Interaction and Communication: Communication between agents—and with the simulated environment—is structured through natural language API calls, retrieval-augmented generation (RAG) mechanisms, and associative memory systems (Shimadzu et al., 18 Mar 2025, Ji et al., 2024, Vezhnevets et al., 2023).

- Supervision and Control: Components such as the “Game Master” (inspired by tabletop gaming (Vezhnevets et al., 2023)), environmental controllers, and fact-checking modules (as in MOSAIC (Liu et al., 10 Apr 2025)) manage global state, validate feasibility of agent actions, and supervise collective outcomes.

- Scalability and Parallelization: Distributed architectures (exploiting frameworks like Ray and multi-GPU parallelism) support simulations at the scale of tens of thousands to hundreds of thousands of agents with minimal deviation in outcome metrics (Tang et al., 2024, Piao et al., 12 Feb 2025, Ji et al., 2024).
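As a minimal sketch of the generative-agent component listed above, the following code builds a prompt from a persona, recent memories, and the current context, then parses a Choice–Reason–Content reply. The `Agent` class, the JSON reply format, and `fake_llm` are illustrative assumptions; no specific framework's API is implied, and a real system would replace `fake_llm` with an actual model call.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    name: str
    persona: str
    memory: List[str] = field(default_factory=list)

    def build_prompt(self, context: str) -> str:
        """Encode internal state (persona, memory) and external context as text."""
        recent = "\n".join(self.memory[-3:]) or "(none)"
        return (
            f"You are {self.name}, {self.persona}.\n"
            f"Recent memories:\n{recent}\n"
            f"Situation: {context}\n"
            'Reply as JSON with keys "choice", "reason", "content".'
        )

    def act(self, context: str, llm: Callable[[str], str]) -> Dict[str, str]:
        """Query the model, validate the triplet, and store the outcome in memory."""
        reply = json.loads(llm(self.build_prompt(context)))
        missing = {"choice", "reason", "content"} - set(reply)
        if missing:
            raise ValueError(f"LLM reply missing keys: {sorted(missing)}")
        self.memory.append(f"{context} -> {reply['choice']}")
        return reply


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; always returns the same triplet."""
    return json.dumps(
        {"choice": "share", "reason": "It seems relevant.", "content": "Worth reading!"}
    )


if __name__ == "__main__":
    agent = Agent(name="Ana", persona="a cautious nurse")
    print(agent.act("A rumor about a water shortage is circulating.", fake_llm))
```

Validating the reply before it touches memory is one simple way to approximate the feasibility checks that a supervising component would perform.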

Algorithmic Highlights:

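- Agent action: generated by querying an LLM with a prompt that encodes the agent's internal state and external context (see Generative Agents above).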

- File-based and prompt-based configuration (YAML or JSON) for rapid setup (Rende et al., 2024, Xiao et al., 2023).

- Graph-based representations for both agent states (nodes) and episodic interactions (edges); used in both symbolic navigation (Baghel et al., 2020) and large-scale social graphs (Ji et al., 2024).

- Loss minimization formulations to ensure empirical fidelity, as in (Münker et al., 27 Jun 2025).
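To make the idea of a fidelity loss concrete, the sketch below scores simulated posts against observed ones with a simple token-overlap distance. This is an illustrative assumption, not the formulation used in the cited work: `jaccard_distance` and `realism_loss` are hypothetical names, and real benchmarks would more likely use BLEU or embedding-based similarity.

```python
from typing import Sequence


def jaccard_distance(a: str, b: str) -> float:
    """1 minus the Jaccard overlap of lower-cased whitespace tokens."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 0.0  # two empty texts are treated as identical
    return 1.0 - len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def realism_loss(simulated: Sequence[str], observed: Sequence[str]) -> float:
    """Mean pairwise distance between simulated and observed texts.

    Lower values mean the simulated texts are closer to the observed ones.
    """
    if not simulated or len(simulated) != len(observed):
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(jaccard_distance(s, o) for s, o in zip(simulated, observed))
    return total / len(simulated)


if __name__ == "__main__":
    sim = ["the vaccine is safe", "prices are rising fast"]
    obs = ["the vaccine is safe", "rent is rising fast"]
    print(realism_loss(sim, obs))
```

A calibration loop would then adjust prompts or model parameters to reduce this value on held-out data.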

3. Applications and Case Studies

Generative social simulation has been applied across a variety of domains, with demonstrable results:

| Domain | Example System/Study | Emergent Phenomena/Focus |
|---|---|---|
| Social norm diffusion | GABM (Ghaffarzadegan et al., 2023) | Emergence of norms, prompt sensitivity |
| Social navigation robotics | Toolkit (Baghel et al., 2020) | Data enrichment, GNN-based control |
| Public administration crisis | GABSS (Xiao et al., 2023) | Memory-driven response, rumor spread |
| Online social networks | MOSAIC (Liu et al., 10 Apr 2025), GraphAgent (Ji et al., 2024) | Misinformation, content moderation; graph property preservation |
| Large-scale society simulation | AgentSociety (Piao et al., 12 Feb 2025) | Polarization, UBI, disaster response |
| Educational simulation | GCL (Wang et al., 2024), Simulife++ (Yan et al., 2024) | Social/cognitive presence, non-cognitive skills |
| Crowd simulations in virtual worlds | Gen-C (Panayiotou et al., 2 Apr 2025) | High-level crowd behavior generation |
| Empirical benchmarking | (Münker et al., 27 Jun 2025, Zeng et al., 8 Jul 2025) | Measurement of realism, model limits |

Key findings include:

- GABMs capture path-dependency in norm adoption, with even subtle prompt adjustments changing macroscopic outcomes (Ghaffarzadegan et al., 2023).

- Preference-based recommendations drive homophily and echo chamber formation, while randomization induces greater diversity (Ferraro et al., 2024, Orlando et al., 9 Feb 2025).

- Interventions such as chronological feeds or boosting bridging posts improved some fairness metrics while unintentionally intensifying polarization, an outcome attributed to fundamental feedback loops (Larooij et al., 5 Aug 2025).

- Generative agent memory systems (e.g., BERT-based vectorized memory (Xiao et al., 2023)) and error correction modules (Tang et al., 2024) enable cognitive realism and simulation robustness at scale.

4. Challenges, Limitations, and Empirical Realism

Despite advances, several core challenges limit current generative social simulations:

- Empirical Realism and Validation: Successful simulation requires empirical calibration: fine-tuned models are evaluated against real user data using similarity-based losses and metrics (BLEU, semantic similarity, etc.) and domain-specific measures (Münker et al., 27 Jun 2025). Significant cross-linguistic gaps remain; models tuned for English often perform poorly on German and in low-resource language contexts, reflecting limitations of training data and evaluation frameworks.

- Interpretability and Abstraction: Highly expressive LLMs can obscure underlying causal mechanisms (the “uncanny valley” problem). Rich micro-level dialogue may mask—or fail to induce—system-level emergent phenomena required for theory building or prediction (Zeng et al., 8 Jul 2025).

- Role Consistency and Evolution: Maintaining consistent agent identities and personalities across time while allowing for plausible evolution is a persistent technical and conceptual problem (Zeng et al., 8 Jul 2025).

- Temporal and Structural Mismatch: Alignment between the natural time of conversation and the discrete time of simulation models can be problematic, undermining the fidelity of models intended to capture long-range diffusion or macro outcomes.

- Scalability and Resource Requirements: Large-scale simulations (e.g., 100k+ agents) benefit from highly parallelized backends but impose substantial compute and engineering burdens, including data transfer bottlenecks and memory constraints (Tang et al., 2024, Ji et al., 2024).
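The parallelized backends mentioned in the last item can be sketched with the Python standard library. This is only a stand-in: the cited systems use distributed frameworks such as Ray, whereas this example assumes a thread pool and a hypothetical `step_agent` function in place of a real LLM request.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Iterable, Tuple


def step_agent(agent_id: int, tick: int) -> Tuple[int, str]:
    """Hypothetical per-agent update; a real system would call a model here."""
    return agent_id, f"agent {agent_id} acted at tick {tick}"


def step_all(agent_ids: Iterable[int], tick: int, max_workers: int = 8) -> Dict[int, str]:
    """Advance every agent by one tick, running the updates concurrently."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order; results are collected before the pool closes.
        results = pool.map(lambda i: step_agent(i, tick), agent_ids)
        return dict(results)


if __name__ == "__main__":
    actions = step_all(range(1000), tick=0)
    print(len(actions), actions[42])
```

Threads suit this pattern when each update mostly waits on network I/O; compute-bound updates would call for processes or a distributed scheduler instead.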

5. Interventions, Policy, and Societal Impact

Generative social simulation provides a platform for testing both micro-level behavioral nudges and macro-level systemic reforms, with studies revealing the subtle—and often disappointing—impact of proposed interventions:

- Social Media Reform: Modifications such as chronological feeds, suppression of social statistics, or boosting empathetic content produce only modest alleviations of echo chambers and partisan amplification (Larooij et al., 5 Aug 2025). Indeed, key dysfunctions appear robust to these algorithmic tweaks, implicating deeper structural feedbacks between reactive engagement and network growth.

- Content Moderation: Hybrid fact-checking (community plus algorithmic) improves factual engagement but does not entirely halt the propagation of non-factual content (Liu et al., 10 Apr 2025).

- Policy Simulation: Full-scale societal simulacra facilitate controlled “digital twin” experiments, such as synthetic UBI trials or disaster response, with simulated outcomes closely mirroring empirical data (Piao et al., 12 Feb 2025). This suggests use as a low-risk/low-cost proxy for field trials, though caution is needed in extrapolation due to abstraction and modeling assumptions.

A plausible implication is that generative social simulation—by grounding intervention research in dynamic, feedback-sensitive models—enables critical evaluation of reform ideas prior to deployment, highlighting cases where surface reforms are unlikely to yield substantive improvements.
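A feed-level intervention of the kind discussed in this section amounts to swapping the ranking rule that decides what agents see. The sketch below contrasts an engagement-ranked feed with a chronological one; the `Post` fields and the `rank_feed` function are illustrative assumptions rather than the design of any cited platform or study.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Post:
    post_id: int
    timestamp: int  # larger means more recent
    likes: int


def rank_feed(posts: List[Post], mode: str) -> List[Post]:
    """Order posts for display under one of two hypothetical feed policies."""
    if mode == "engagement":
        # Most-liked first; ties are broken by recency.
        return sorted(posts, key=lambda p: (-p.likes, -p.timestamp))
    if mode == "chronological":
        # Newest first, ignoring engagement entirely.
        return sorted(posts, key=lambda p: -p.timestamp)
    raise ValueError(f"unknown feed mode: {mode!r}")


if __name__ == "__main__":
    posts = [Post(1, 10, 5), Post(2, 20, 1), Post(3, 30, 3)]
    for mode in ("engagement", "chronological"):
        print(mode, [p.post_id for p in rank_feed(posts, mode)])
```

In a full simulation, the ranked list would be passed into each agent's prompt, so the ranking rule shapes which posts agents can react to at all.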

6. Future Directions and Theoretical Developments

The field is advancing toward:

- Better Empirical Alignment: Emphasis on rigorous, language- and dataset-specific benchmarking; use of formal loss functions as confidence metrics for simulation fidelity (Münker et al., 27 Jun 2025).

- Hybrid Approaches: Combining LLM-driven generative agents with traditional, abstract models to recover both interpretability (for system-level phenomena) and linguistic/narrative richness (for micro-level interactions) (Zeng et al., 8 Jul 2025).

- Autonomous Knowledge Integration: Retrieval-augmented generation architectures enable agents to pull in up-to-date, external knowledge, increasing topical and situational realism (Shimadzu et al., 18 Mar 2025).

- Refined Memory and Role Systems: Sophisticated memory management (temporal, importance-weighted) and constraint-based personality evolution to maintain agent plausibility over extended simulation spans (Xiao et al., 2023, Ferraro et al., 2024).

- Open Science and Tooling: Broad release of simulation frameworks (e.g., MOSAIC, Crowd, GenSim, GraphAgent-Generator) lowers the barrier for adoption and interdisciplinary collaboration (Liu et al., 10 Apr 2025, Rende et al., 2024, Tang et al., 2024, Ji et al., 2024).
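The temporal, importance-weighted memory management mentioned above can be sketched as a scoring rule over stored memories. The exponential half-life decay, the multiplicative combination, and all names here are assumptions chosen for illustration; the cited systems may weight and combine these signals differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Memory:
    text: str
    created_at: int    # simulation tick at which the memory was stored
    importance: float  # assumed to lie in [0, 1]


def score(memory: Memory, now: int, half_life: float = 10.0) -> float:
    """Importance discounted by recency: the weight halves every `half_life` ticks."""
    age = max(0, now - memory.created_at)
    return memory.importance * 0.5 ** (age / half_life)


def retrieve(memories: List[Memory], now: int, k: int = 2,
             half_life: float = 10.0) -> List[Memory]:
    """Return the k highest-scoring memories, best first."""
    ranked = sorted(memories, key=lambda m: score(m, now, half_life), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    store = [
        Memory("old but vital", created_at=0, importance=1.0),
        Memory("recent trivia", created_at=20, importance=0.2),
        Memory("recent and important", created_at=18, importance=0.9),
    ]
    for m in retrieve(store, now=20):
        print(m.text)
```

The retrieved memories would typically be inserted into the agent's next prompt, which is how the memory policy ends up influencing behavior over long simulation spans.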

A plausible implication is that generative social simulation will become central to computational social science, enabling hypothesis testing, “virtual fieldwork,” and benchmarking of sociotechnical interventions in silico.

7. Controversies and Critical Perspectives

Some researchers question whether generative agents' proximity to human behavior is always beneficial. Notable dilemmas include:

- Interpretability versus Realism: The “uncanny valley” arises when agents are too expressive or context-sensitive, reducing model transparency and hampering theory extraction (Zeng et al., 8 Jul 2025).

- Empirical Validity: Without careful benchmarking and empirical grounding, simulations risk being misleading or unrepresentative, particularly when transferred outside their training context (Münker et al., 27 Jun 2025).

- Scope and Applicability: LLM-based simulations are ideally suited when linguistic nuance and dynamic role play are focal, less so for studies prioritizing system-level emergence or long-term evolutionary social phenomena (Zeng et al., 8 Jul 2025).

These debates highlight the necessity of methodological rigor, critical appraisal, and theoretical clarity when deploying generative social simulation in research and policy contexts.

In sum, generative social simulation represents an integrative, rapidly evolving approach to modeling complex social systems. By embedding models of cognition, memory, and language generation within large-scale, dynamic environments, researchers can probe the emergence of norms, test policy interventions, simulate crises, and shed light on the deep feedbacks underlying societal phenomena. Ongoing work aims to negotiate the tension between empirical richness and theoretical tractability, with an increasing focus on open tools, rigorous benchmarking, and targeted deployment in domains where generative realism and interpretive clarity can both be achieved.